Intro

To talk about cross-validation, we first need to talk about overfitting. Overfitting occurs when a model learns its training examples too closely, including their noise and redundancies, instead of the general pattern behind them, so it cannot generalize. A direct consequence is that the model works well on the training examples but fails on real data. To detect this situation, we can split our dataset into two subsets, train and test; in both subsets, each class must be represented by a representative number of rows.
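The requirement that every class is well represented in both subsets can be enforced with the stratify parameter of train_test_split. A minimal sketch, assuming the iris dataset and the 60/40 split used in the next example; since iris has 50 rows per class, a stratified split should give 30 rows per class for training and 20 for testing:

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import train_test_split

iris = datasets.load_iris()

# stratify=iris.target keeps the class proportions of the full dataset
# (50 rows per class) in both the train and the test subsets.
X_train, X_test, y_train, y_test = train_test_split(
    iris.data, iris.target, test_size=0.4, random_state=0, stratify=iris.target
)

# Count how many rows of each class ended up in each subset.
print("train class counts:", np.bincount(y_train))  # train class counts: [30 30 30]
print("test class counts:", np.bincount(y_test))    # test class counts: [20 20 20]
```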

In the following example, we split our dataset into train and test samples and score the trained model.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn import datasets
from sklearn import svm

iris = datasets.load_iris()
print(iris.data.shape, iris.target.shape)  # (150, 4) (150,)

# Hold out 40% of the rows as the test set
X_train, X_test, y_train, y_test = train_test_split(iris.data, iris.target, test_size=0.4, random_state=0)

clf = svm.SVC(kernel='linear', C=1).fit(X_train, y_train)

# Score the trained model on the held-out test set
print("Test score = {0}".format(clf.score(X_test, y_test)))

# Test score = 0.9666666666666667
```

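Doing this only once is a mistake: the score depends on which rows happened to land in the test set. We should split the full dataset into train and test portions several times, so that every row is used for testing once; that way, we can be confident that our model configuration works properly.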
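A quick way to see the problem is to repeat the single hold-out split with a few different seeds. This sketch reuses the SVC configuration from the example above; the seeds 0 to 4 are an arbitrary choice, and the exact scores may vary with your scikit-learn version:

```python
from sklearn import datasets, svm
from sklearn.model_selection import train_test_split

iris = datasets.load_iris()

# Repeat the same 60/40 hold-out split with different random seeds.
# Each seed produces a different partition, and possibly a different score.
split_scores = []
for seed in range(5):
    X_train, X_test, y_train, y_test = train_test_split(
        iris.data, iris.target, test_size=0.4, random_state=seed
    )
    clf = svm.SVC(kernel="linear", C=1).fit(X_train, y_train)
    split_scores.append(clf.score(X_test, y_test))
    print("random_state={0}: score = {1:.4f}".format(seed, split_scores[-1]))

print("min = {0:.4f}, max = {1:.4f}".format(min(split_scores), max(split_scores)))
```

Any gap between the minimum and maximum is the uncertainty that a single split hides and that cross-validation averages out.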

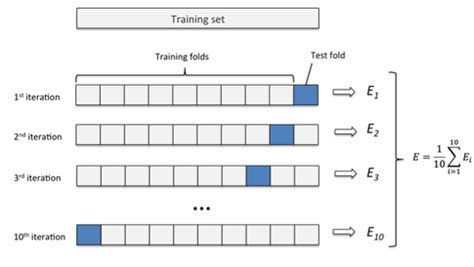

Cross Validation

Splitting our dataset into several portions, and using each portion in turn as the test set while training on the rest, is called cross-validation. Cross-validation has many variations; I will use the simplest one, K-fold.
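To make the K-fold idea concrete, here is a small sketch on a toy array of 10 rows (a made-up input, chosen only so the output is easy to read). KFold without shuffling cuts the rows into K consecutive blocks and uses each block exactly once as the test set:

```python
import numpy as np
from sklearn.model_selection import KFold

# A toy "dataset" of 10 rows with a single feature.
X = np.arange(10).reshape(-1, 1)

kf = KFold(n_splits=5)
all_test_indices = []
for fold, (train_idx, test_idx) in enumerate(kf.split(X)):
    print("fold {0}: train={1} test={2}".format(fold + 1, train_idx, test_idx))
    all_test_indices.extend(test_idx.tolist())

# Every row is used as test data exactly once across the K folds.
print(sorted(all_test_indices) == list(range(10)))  # True
```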

You can take a look at the other variations here.
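One of these variations, stratified K-fold, addresses the requirement from the intro that every class be represented. The sketch below compares the class counts of each test fold for KFold and StratifiedKFold on iris, whose rows are ordered by class (50 of each), which is exactly the case where plain K-fold produces unbalanced test folds:

```python
import numpy as np
from sklearn import datasets
from sklearn.model_selection import KFold, StratifiedKFold

iris = datasets.load_iris()
X, y = iris.data, iris.target

# Plain K-fold ignores the labels; stratified K-fold keeps the class
# proportions of y in every test fold.
counts = {}
for name, splitter in [("KFold", KFold(n_splits=10)),
                       ("StratifiedKFold", StratifiedKFold(n_splits=10))]:
    # np.bincount(..., minlength=3) counts test rows of classes 0, 1 and 2.
    counts[name] = [np.bincount(y[test_idx], minlength=3).tolist()
                    for _, test_idx in splitter.split(X, y)]
    print(name)
    for fold, c in enumerate(counts[name], start=1):
        print("  fold {0}: test class counts = {1}".format(fold, c))
```

With KFold, the first test fold contains only class 0, while every StratifiedKFold test fold should contain 5 rows of each class.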

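```python
from sklearn.model_selection import KFold

clf = svm.SVC(kernel='linear', C=1)

kf = KFold(n_splits=10)

# kf.split yields the row indices of the train and test portions of each fold
for train, test in kf.split(iris.data):
    # Train on this fold's training rows and score on its test rows
    clf.fit(iris.data[train], iris.target[train])
    print(clf.score(iris.data[test], iris.target[test]))
```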

scikit-learn has a function, cross_val_score, that abstracts cross-validation and returns the score of our model on each fold:

```python
from sklearn.model_selection import cross_val_score

clf = svm.SVC(kernel='linear', C=1)

# Run 10-fold cross-validation; returns one score per fold
scores = cross_val_score(clf, iris.data, iris.target, cv=10)

for idx, score in enumerate(scores):
    print("Cross validation {0} score = {1}".format(idx + 1, score))

# Mean score and twice the standard deviation across the folds
print("Mean = {0}, 2 * std = {1}".format(scores.mean(), scores.std() * 2))

# Cross validation 1 score = 1.0
# Cross validation 2 score = 0.9333333333333333
# Cross validation 3 score = 1.0
# Cross validation 4 score = 1.0
# Cross validation 5 score = 0.8666666666666667
# Cross validation 6 score = 1.0
# Cross validation 7 score = 0.9333333333333333
# Cross validation 8 score = 1.0
# Cross validation 9 score = 1.0
# Cross validation 10 score = 1.0
```
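cross_val_score does more than loop over KFold: when cv is an integer and the estimator is a classifier, scikit-learn builds the folds with StratifiedKFold (without shuffling), so its folds can differ from the manual KFold loop above. A small check, assuming the same SVC configuration:

```python
import numpy as np
from sklearn import datasets, svm
from sklearn.model_selection import StratifiedKFold, cross_val_score

iris = datasets.load_iris()
clf = svm.SVC(kernel="linear", C=1)

# Integer cv: scikit-learn picks the splitter for us.
scores_int = cross_val_score(clf, iris.data, iris.target, cv=10)

# Explicit stratified splitter with the same number of folds.
scores_explicit = cross_val_score(
    clf, iris.data, iris.target, cv=StratifiedKFold(n_splits=10)
)

print(np.allclose(scores_int, scores_explicit))  # True
```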

Playing with penalty

You can find an explanation of the penalty (the C parameter of SVC) in the previous post. The following plot shows how different penalties affect our model.

```python
import matplotlib.pyplot as plt

# Penalty values from 0.5 up to (but not including) 10.0, in steps of 0.1
penalties = np.arange(0.5, 10.0, 0.1)

means = []
stds = []

for C in penalties:
    clf = svm.SVC(kernel='linear', C=C)
    scores = cross_val_score(clf, iris.data, iris.target, cv=10)
    means.append(scores.mean())
    stds.append(scores.std() * 2)

# plot the data: mean score (top) and 2 * std (bottom) for each penalty
fig = plt.figure(1)

plt.subplot(211)
plt.plot(penalties, means, 'r')
plt.ylabel('mean score')

plt.subplot(212)
plt.plot(penalties, stds, 'r')
plt.xlabel('C (penalty)')
plt.ylabel('2 * std')

plt.show()
```
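Instead of reading the best penalty off the plot, scikit-learn's GridSearchCV can run the same cross-validation for each candidate C and keep the best one. A sketch, assuming a coarser grid of C values than the plot (chosen arbitrarily to keep it fast); the manual loop at the end only checks that GridSearchCV's best score matches the highest mean from cross_val_score:

```python
import numpy as np
from sklearn import datasets, svm
from sklearn.model_selection import GridSearchCV, cross_val_score

iris = datasets.load_iris()

# Arbitrary, coarse grid of penalties for illustration.
param_grid = {"C": [0.5, 1.0, 2.0, 5.0, 10.0]}

# GridSearchCV runs 10-fold cross-validation for every C in the grid
# and keeps the value with the best mean score.
search = GridSearchCV(svm.SVC(kernel="linear"), param_grid, cv=10)
search.fit(iris.data, iris.target)

print("best C = {0}".format(search.best_params_["C"]))
print("best mean score = {0:.4f}".format(search.best_score_))

# The same mean scores, computed with the manual loop used for the plot.
manual_means = [cross_val_score(svm.SVC(kernel="linear", C=C),
                                iris.data, iris.target, cv=10).mean()
                for C in param_grid["C"]]
print("manual best mean score = {0:.4f}".format(max(manual_means)))
```

Both approaches use the same stratified 10-fold splits, which is why their best mean scores should agree.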
