Originally published at Riter.co.

In this post, we describe how to integrate GitLab webhooks with the Riter API and deploy the service on Google Cloud Platform using Cloud Functions, a serverless compute solution for creating event-driven applications. Cloud Functions let you reduce infrastructure costs and provide a relatively convenient way to build and deploy services. The complete code is available on GitHub along with unit and performance tests.

Service purpose

As you may know, Riter is a project management tool used (and developed) by our team. Each pull request created in GitLab is associated with a certain task (a story, as we call it) in Riter. Until now, we had to insert a link to the task in the pull request description and then add a corresponding link to the pull request in the task comments. As a result, it was easy to see which code changes a specific task included and which task a certain pull request related to. On the other hand, this approach meant doing the same work twice, which we wanted to avoid.

To this end, we’ve written a simple service that uses GitLab webhooks to track merge request events and the Riter API to report these events in the appropriate tasks. We assume that each GitLab repository corresponds to a particular Riter project, and that either the pull request description or the source branch name contains a story slug. If so, each time somebody opens, updates, or merges a merge request, the service works out which task it belongs to and adds a link to the pull request (with its id, title, and the action performed) to the task annotations. As a result, we get an automatically generated history of changes in our project management tool.

Requirements for the initial data

- Generate Riter access token

All Riter users have full access to its GraphQL API, which covers all existing functionality. Go to the “My Profile” settings and open the “Access tokens” tab. Here you can generate a personal access token for all your projects at once. In our example, however, we generate separate access tokens for different projects and store them in the corresponding environment variables (see below).

We could also create a separate user identity (a bot) and generate annotations on its behalf. For simplicity, however, let’s suppose that we use a regular Riter user account to report all events.

- Set up Gitlab webhooks

In GitLab, go to the project’s “Settings -> Integration” page to add a webhook. We chose the “Merge request events” trigger so that only merge request events (created, updated, merged) are processed. Any other repository events could be processed in the same way.

Here you also need to specify the function URL, which is unique for each function and is printed in the console after deployment. It can be found in the Google Cloud Admin panel as well.

In our case, we call the same function for several Gitlab repositories. However, it would be better to deploy separate instances with different environment variables for each repository.
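For orientation, here is a trimmed sketch of the JSON body that GitLab posts for a merge request event, limited to the fields this service reads. The field names follow GitLab’s merge request webhook payload; every value (user, project, URLs, story slug) is invented for illustration.

```javascript
// Trimmed example of a GitLab "Merge request events" payload.
// Only the fields read by the service are shown; all values are made up.
const samplePayload = {
  object_kind: 'merge_request',
  user: { username: 'nickname1' },
  project: { name: 'tracker' },
  object_attributes: {
    iid: 7,
    title: 'Fix login form',
    description: 'Story: https://demo.riter.co/tracker/stories/fix-login-form',
    source_branch: 'fix-login-form',
    action: 'open',
    url: 'https://gitlab.example.com/group/tracker/merge_requests/7'
  }
};
```

The real payload contains many more fields; the service only needs the user, the project name, and the merge request attributes.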

- Environment variables

We use several environment variables. We’re going to track events only from specific users, so USERS contains a space-separated list of GitLab usernames.

USERS: nickname1 nickname2

We also create one environment variable per project (named “ProjectName_GraphQL_API_URL”) that holds the full API endpoint together with the project access token. For example, for the Demo company and its “The night’s watch” project, the .env.yml file could include the variable:

THE_NIGHTS_WATCH_GRAPHQL_API_URL: https://demo.riter.co/the-night_s-watch/graphql?api_token=18dc7...

In our example, we use similar variables defined as TRACKER_GRAPHQL_API_URL, CLIENT_GRAPHQL_API_URL, and WEBHOOK_TEST_GRAPHQL_API_URL.
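The naming convention above can be sketched as a small helper. `envVarNameFor` is a hypothetical function, not part of the published service, and its normalization rules (drop apostrophes, turn any other run of non-alphanumeric characters into an underscore, upper-case the result) are an assumption inferred from the two examples in the text.

```javascript
// Hypothetical helper: derive the environment variable name for a project.
// The normalization rules are an assumption based on the examples above.
function envVarNameFor(projectName) {
  const normalized = projectName
    .replace(/['\u2019]/g, '')        // drop straight and curly apostrophes
    .replace(/[^A-Za-z0-9]+/g, '_')   // other separators become underscores
    .replace(/^_+|_+$/g, '')          // trim leading/trailing underscores
    .toUpperCase();
  return `${normalized}_GRAPHQL_API_URL`;
}

const nightsWatchVar = envVarNameFor('The night\u2019s watch');
const webhookTestVar = envVarNameFor('webhook-test');
```

With these rules, “The night’s watch” maps to THE_NIGHTS_WATCH_GRAPHQL_API_URL and “webhook-test” to WEBHOOK_TEST_GRAPHQL_API_URL.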

Source code

The entire code takes 84 lines and is written in pure JavaScript. An example of the same code written in Go is also available.

We read the list of users (whose pull requests we’d like to track) by splitting USERS on spaces:

const users = process.env.USERS.split(' ');
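The line above throws if USERS is not set and yields empty entries when the value contains double spaces. A slightly more forgiving variant might look like the sketch below; `parseUsers` is a hypothetical name, not a function from the original code.

```javascript
// Hypothetical helper: parse a whitespace-separated list of usernames.
// Tolerates an unset variable and repeated or surrounding whitespace.
function parseUsers(raw) {
  return (raw || '').split(/\s+/).filter(Boolean);
}

const trackedUsers = parseUsers(process.env.USERS);
const exampleUsers = parseUsers(' nickname1  nickname2 ');
```

An unset USERS then results in an empty list, so every call is rejected by the user check rather than crashing the function at load time.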

In GraphQL, mutations are the operations that modify data (queries only read it). So, we describe a mutation that creates a new annotation (input) for a particular story (specified by its slug) in Riter:

const query = `
  mutation($input: AnnotationTypeCreateInput!) {
    createAnnotation(input: $input) {
      resource {
        slug
      }
    }
  }
`;
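To make it clearer what a GraphQL client sends over the wire, here is a minimal sketch of the request body. GraphQL over HTTP is conventionally a POST with a JSON body holding `query` and `variables`; `buildGraphQLBody` is a hypothetical helper, and the annotation text and story slug are placeholders.

```javascript
// Hypothetical helper: build the JSON body of a GraphQL-over-HTTP POST request.
function buildGraphQLBody(query, variables) {
  return JSON.stringify({ query, variables });
}

const exampleMutation = `
  mutation($input: AnnotationTypeCreateInput!) {
    createAnnotation(input: $input) {
      resource { slug }
    }
  }
`;

// Placeholder values; the real ones come from the webhook payload.
const exampleBody = buildGraphQLBody(exampleMutation, {
  input: { body: 'Example annotation', storySlug: 'example-story' }
});
```

The `$input` variable in the mutation is filled from the `variables.input` object, which is why the query text itself never changes between calls.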

Then we list endpoints for different project names:

const projects = {
  'tracker': {
    endpoint: process.env.TRACKER_GRAPHQL_API_URL
  },
  'client': {
    endpoint: process.env.CLIENT_GRAPHQL_API_URL
  },
  'webhook-test': {
    endpoint: process.env.WEBHOOK_TEST_GRAPHQL_API_URL
  }
};
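If one of these variables is missing, the corresponding endpoint is simply `undefined` and the API call fails later. A small check at startup can surface that earlier. The sketch below is an illustration only: `missingEndpoints` is a hypothetical helper and the environment object is made up.

```javascript
// Hypothetical helper: list the projects whose endpoint is not configured.
function missingEndpoints(projectMap) {
  return Object.keys(projectMap).filter((name) => !projectMap[name].endpoint);
}

// Made-up environment in which only one of two variables is set.
const exampleEnv = {
  TRACKER_GRAPHQL_API_URL: 'https://example.invalid/graphql?api_token=placeholder'
};
const exampleProjects = {
  'tracker': { endpoint: exampleEnv.TRACKER_GRAPHQL_API_URL },
  'client': { endpoint: exampleEnv.CLIENT_GRAPHQL_API_URL }
};

const unconfigured = missingEndpoints(exampleProjects); // ['client']
```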

Now we handle the incoming webhook and compose the API request. We respond to the webhook immediately to speed up request processing:

response.status(200).send();

Get the endpoint specified for the current project:

let project;
if (req.body.project) {
  project = projects[req.body.project.name];
}

Only calls from specific users and for certain projects are allowed:

if (!project || !req.body.user || !users.includes(req.body.user.username)) {
  return 1;
}
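The same check can be written as a pure function, which makes it easy to unit test without a real request. This is a sketch under assumptions: `isAllowed` is a hypothetical name, and the own-property guard is an addition that the original code does not have.

```javascript
// Hypothetical helper: decide whether a webhook call should be processed.
// projectMap maps project names to settings; allowedUsers is a list of usernames.
function isAllowed(body, projectMap, allowedUsers) {
  const projectName = body.project ? body.project.name : undefined;
  // Own-property check so that names like "constructor" do not match
  // properties inherited from Object.prototype (an addition to the original).
  const knownProject =
    typeof projectName === 'string' &&
    Object.prototype.hasOwnProperty.call(projectMap, projectName);
  const username = body.user ? body.user.username : undefined;
  return knownProject && allowedUsers.includes(username);
}

const demoProjects = { tracker: { endpoint: 'https://example.invalid/graphql' } };
const demoUsers = ['nickname1'];

const accepted = isAllowed(
  { project: { name: 'tracker' }, user: { username: 'nickname1' } },
  demoProjects,
  demoUsers
);
const rejected = isAllowed(
  { project: { name: 'tracker' }, user: { username: 'stranger' } },
  demoProjects,
  demoUsers
);
```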

Then we take the merge request attributes from the payload. They hold the content we need: the URL of the pull request, its id, title, and action (for example, “open” or “update”):

const attributes = req.body.object_attributes;

Then we try to determine the story slug from the pull request description or the related source branch:

let storySlug;
if (attributes.description) {
  [, storySlug] = attributes.description.match(/stories\/([\w-]+)/) || [];
}
if (!storySlug && attributes.source_branch) {
  [storySlug] = attributes.source_branch.match(/^[\w-]+/) || [];
}
if (!storySlug) {
  return 3;
}
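To see how the two regular expressions behave, the lookup can be wrapped in a function and tried on a few inputs. `findStorySlug` is a hypothetical name; the regular expressions are the ones shown above, and the descriptions and branch names are invented.

```javascript
// Hypothetical wrapper around the slug lookup shown above.
// Returns the story slug, or undefined when none can be found.
function findStorySlug(attributes) {
  let storySlug;
  if (attributes.description) {
    // First capture group: whatever follows "stories/" in the description.
    [, storySlug] = attributes.description.match(/stories\/([\w-]+)/) || [];
  }
  if (!storySlug && attributes.source_branch) {
    // Fallback: the leading run of word characters and dashes in the branch name.
    [storySlug] = attributes.source_branch.match(/^[\w-]+/) || [];
  }
  return storySlug;
}

const fromDescription = findStorySlug({
  description: 'Implements https://demo.riter.co/tracker/stories/add-search',
  source_branch: 'feature'
});
const fromBranch = findStorySlug({
  description: 'No story link here',
  source_branch: 'add-search/wip'
});
const notFound = findStorySlug({ description: '', source_branch: '/odd' });
```

Note that the branch fallback matches almost any branch name (a branch called `master` yields the slug `master`), so a merge request without a story link may still trigger an API call for a story that does not exist.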

Finally, if all the data is correct, we perform the API call:

const { url, iid, title, action } = attributes;
const { endpoint } = project;
const variables = {
  input: {
    body: `[Merge request !${iid} - "${title}" (${action})](${url})`,
    storySlug: storySlug
  }
};

request(endpoint, query, variables).catch(() => {});

return 0;
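The annotation text is a Markdown link built from the merge request attributes. The sketch below isolates that step so the resulting string can be inspected; `buildAnnotationVariables` is a hypothetical helper and the attribute values are invented.

```javascript
// Hypothetical helper: build the GraphQL variables for the createAnnotation mutation.
function buildAnnotationVariables(attributes, storySlug) {
  const { url, iid, title, action } = attributes;
  return {
    input: {
      // Markdown link: the label describes the event, the target is the merge request URL.
      body: `[Merge request !${iid} - "${title}" (${action})](${url})`,
      storySlug
    }
  };
}

// Made-up attributes for illustration.
const exampleVariables = buildAnnotationVariables(
  {
    url: 'https://gitlab.example.com/group/tracker/merge_requests/7',
    iid: 7,
    title: 'Fix login form',
    action: 'open'
  },
  'fix-login-form'
);
```

The original call discards errors with an empty catch handler; logging them with `console.error` instead would be one way to make failed API calls visible in the function logs.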

Deploy and run

All commands required to deploy the service on Google Cloud Platform are provided in the README file. For example, here’s how we can deploy the service:

gcloud functions deploy http --env-vars-file .env.yml --trigger-http --runtime nodejs8

We used the Cloud Functions Node.js Emulator to deploy, run, and debug the app on a local machine before deploying it to production. We followed the following tutorial to set up the Local Functions Emulator.

Performance

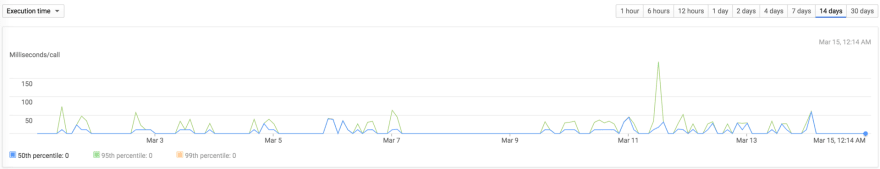

Event processing speed appeared to be quite high. Memory usage, on the contrary, was rather low, as we had expected. That’s well since you are charged based on resources consumption (and on the number of requests to your function). While deploying Cloud Functions, you only have to specify the amount of memory your function needs and CPU resource is allocated proportionally. Here’re some screenshots from Google Cloud statistics:

In this simple way, Riter can be quickly integrated with any third-party service, and its functionality can be extended as far as you need to meet the specific requirements of your team and workflow.
