Backing up Elasticsearch indices with Curator & MinIO!

Nowadays, log management is becoming more and more important, especially in distributed and microservices architectures. A variety of solutions exist on the market, both commercial and open source! Yet the Elastic Stack is one of the most widely used, easy to set up and powerful log aggregation & management platforms.

Once everything is green, you'll sooner or later realize that you need to back up your Elasticsearch cluster (for many possible reasons: migrating indices, recovering from failures or simply freeing up your cluster by getting rid of old indices). In this post, we'll see how combining Curator and MinIO helps you set up a snapshot/restore strategy for your Elasticsearch clusters!

MinIO configuration

MinIO is a popular open source object storage server compatible with the AWS S3 API. It offers an on-premises alternative to AWS S3 and is designed to provide scalable persistent storage using your Docker infrastructure.

The MinIO front page describes the steps needed to install and configure MinIO on your favorite platform. To interact with the MinIO server, I recommend using the MinIO client (mc).

Now, assuming the MinIO server is up and running at http://some-host, we start by registering it with the MinIO client and creating a bucket, called es-backup for example, to store Elasticsearch snapshots:

mc config host add myminio http://some-host <access_key> <secret_key>
mc mb myminio/es-backup

Elasticsearch configuration

If you haven't installed the repository-s3 plugin on your cluster yet, start by adding it. This plugin adds support for using S3 as a repository for snapshots/restores. The plugin must be installed on every node in the cluster:

bin/elasticsearch-plugin install repository-s3

Unsurprisingly, the plugin sends requests to AWS S3 by default! To override this, we need to update elasticsearch.yml and tell the cluster where to find the MinIO instance, by adding the config below:

s3.client.default.endpoint: "some-host"
s3.client.default.protocol: http

Additionally, in order to connect to MinIO, Elasticsearch needs two more pieces of information: the access key and the secret key. Until version 5.x, it was possible to send these credentials in the body of the repository HTTP request! But since credentials are sensitive, the recommended way, starting from version 6.x, is to store them in the Elasticsearch keystore:

bin/elasticsearch-keystore add s3.client.default.access_key
bin/elasticsearch-keystore add s3.client.default.secret_key

Note that you can still send secrets in the HTTP request, even though it's not recommended, by adding -Des.allow_insecure_settings=true to your jvm.options file.

Once done, restart your nodes so the configuration takes effect!

The very final step is to create the repository and link it to the MinIO bucket created earlier:

curl -X POST "<es_host>/_snapshot/my_repo" \
  -H 'Content-Type: application/json' \
  -d '{
    "type": "s3",
    "settings": {
      "bucket": "es-backup"
    }
  }'
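If you prefer scripting this step, here is a minimal Python sketch (standard library only) that builds the same repository request as the curl command above. The host http://localhost:9200 and the helper name build_repo_request are assumptions for illustration. The request is only built, not sent, and the body deliberately carries just the bucket, since the MinIO endpoint and credentials come from elasticsearch.yml and the keystore.

```python
import json
import urllib.request


def build_repo_request(es_host: str, repo: str, bucket: str) -> urllib.request.Request:
    """Build (but do not send) a request registering an S3 snapshot repository.

    Hypothetical helper: the MinIO endpoint and credentials are NOT in the body,
    they are read by Elasticsearch from elasticsearch.yml and its keystore.
    """
    body = {"type": "s3", "settings": {"bucket": bucket}}
    return urllib.request.Request(
        url=f"{es_host.rstrip('/')}/_snapshot/{repo}",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Assumed host address; replace it with your own cluster.
    req = build_repo_request("http://localhost:9200", "my_repo", "es-backup")
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"))
    # To actually send it to a live cluster:
    # with urllib.request.urlopen(req) as resp:
    #     print(resp.status, resp.read().decode("utf-8"))
```

Keeping the body free of secrets mirrors the keystore recommendation: the same script can be committed or shared without leaking the MinIO keys.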

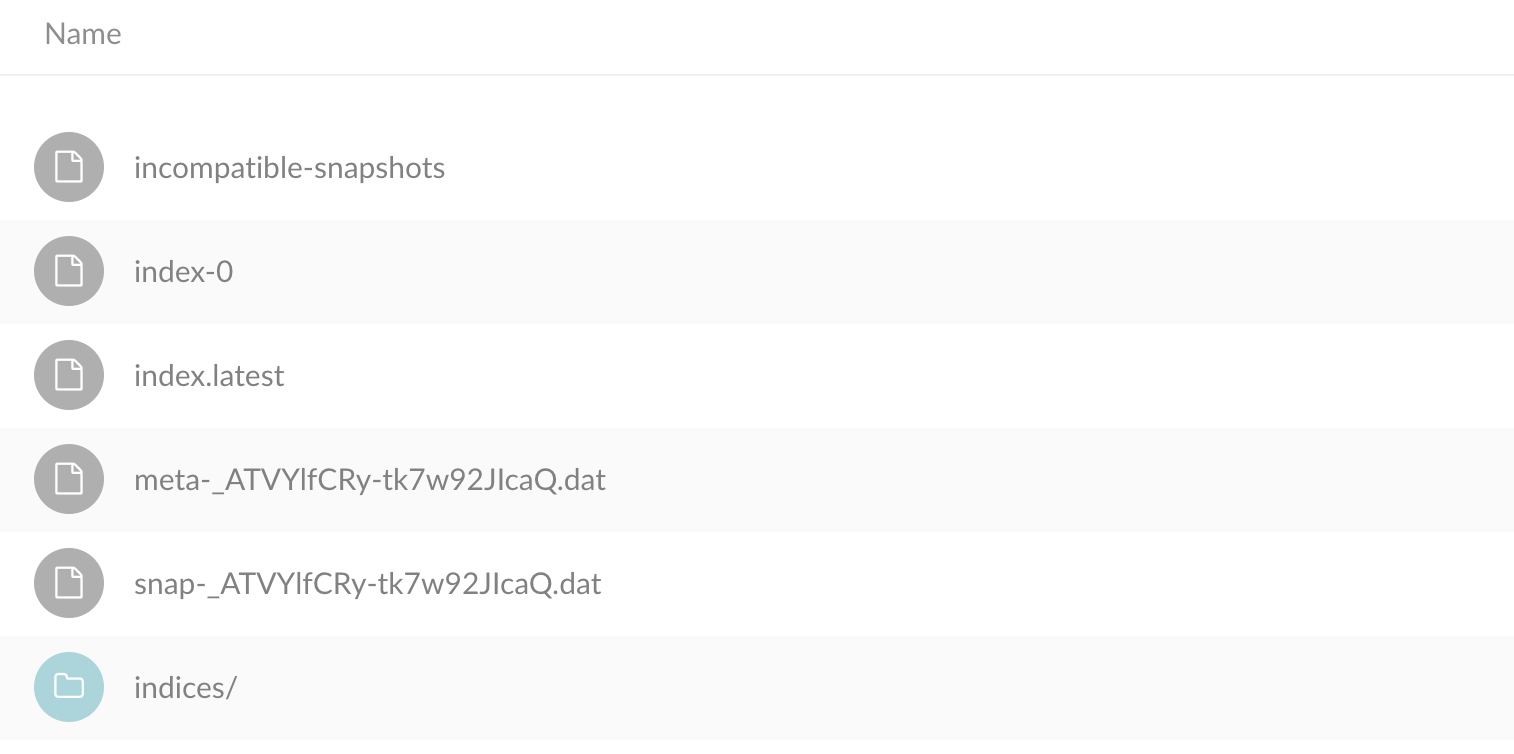

If everything goes well, you should be able to retrieve your repository configuration, my_repo, by running:

curl -X GET "<es_host>/_snapshot/my_repo"
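The GET call returns a JSON object keyed by repository name. As a hedged sketch, the Python snippet below checks that such a response describes an s3 repository pointing at the expected bucket. The sample body is written by hand to match that shape (it is not output captured from a real cluster), and repo_targets_bucket is a hypothetical helper.

```python
import json


def repo_targets_bucket(response_body: str, repo: str, bucket: str) -> bool:
    """Return True if a GET _snapshot/<repo> body describes an s3 repo on `bucket`."""
    repos = json.loads(response_body)
    config = repos.get(repo)
    if config is None:
        # The repository is not in the response at all.
        return False
    return config.get("type") == "s3" and config.get("settings", {}).get("bucket") == bucket


if __name__ == "__main__":
    # Hand-written sample matching the shape of the GET response, for illustration.
    sample = '{"my_repo": {"type": "s3", "settings": {"bucket": "es-backup"}}}'
    print(repo_targets_bucket(sample, "my_repo", "es-backup"))  # True
```

Reading the configuration back only proves it was stored. Elasticsearch also provides POST <es_host>/_snapshot/my_repo/_verify, which asks the nodes to check that they can actually access the repository.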

Curator configuration

Curator is a powerful tool, written in Python and well supported on almost all operating systems! It offers numerous options and filters to help identify indices and snapshots that meet certain criteria, simplifying management tasks on complex clusters!

Curator can be used as a command-line interface (CLI) or as a Python API. I personally prefer the CLI, which means defining a curator.yml file containing Curator's configuration (client connection, logging settings...), as follows:

client:
  hosts:
    - es_host
  aws_key: <access_key>
  aws_secret_key: <secret_key>
  aws_region: default
  ssl_no_validate: False
  timeout: 30

logging:
  loglevel: INFO

and an action.yml file containing the actions to be executed on indices. The action file below simply snapshots the indices in our cluster whose names start with server_logs:

actions:
  1:
    action: snapshot
    options:
      repository: my_repo
      # Leaving name blank will result in the default 'curator-%Y%m%d%H%M%S'
      name: backup-%Y%m%d%H%M%S
      ignore_unavailable: False
      include_global_state: True
      partial: False
      wait_for_completion: True
      skip_repo_fs_check: False
      timeout_override:
      continue_if_exception: False
      disable_action: False
    filters:
    - filtertype: pattern
      kind: prefix
      value: server_logs
      exclude:
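To make the two less obvious parts of this action file concrete, the Python sketch below mimics them: the strftime-style tokens in name, which Curator fills in at run time (from the current UTC time, as far as I know), and the prefix pattern filter, which keeps only indices whose names start with value. This illustrates the behavior and is not Curator's own code; snapshot_name, prefix_filter and the index names are hypothetical.

```python
import re
from datetime import datetime


def snapshot_name(pattern: str, when: datetime) -> str:
    """Expand strftime-style tokens such as %Y%m%d%H%M%S in a snapshot name."""
    return when.strftime(pattern)


def prefix_filter(indices, prefix, exclude=False):
    """Mimic a `filtertype: pattern, kind: prefix` filter (illustration only).

    Keeps indices whose names start with `prefix`; with exclude=True, drops them instead.
    """
    regex = re.compile("^" + re.escape(prefix))
    if exclude:
        return [name for name in indices if not regex.match(name)]
    return [name for name in indices if regex.match(name)]


if __name__ == "__main__":
    # Hypothetical index names, for illustration only.
    indices = ["server_logs-2019.03.04", "server_logs-2019.03.05", "app_metrics-2019.03.05"]
    print(snapshot_name("backup-%Y%m%d%H%M%S", datetime(2019, 3, 5, 14, 7, 9)))
    print(prefix_filter(indices, "server_logs"))
```

Because the timestamp goes down to the second, each run produces a distinct snapshot name, so repeated runs do not collide in my_repo.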