Lessons learned from debugging application performance in Cloud

Lift and shift migration using tools like

Cloudendure is the fastest way to migrate 'on premises' systems to Google Cloud. When the migration is complete, the environment can be refactored to use cloud-native capabilities. During application testing after a recent

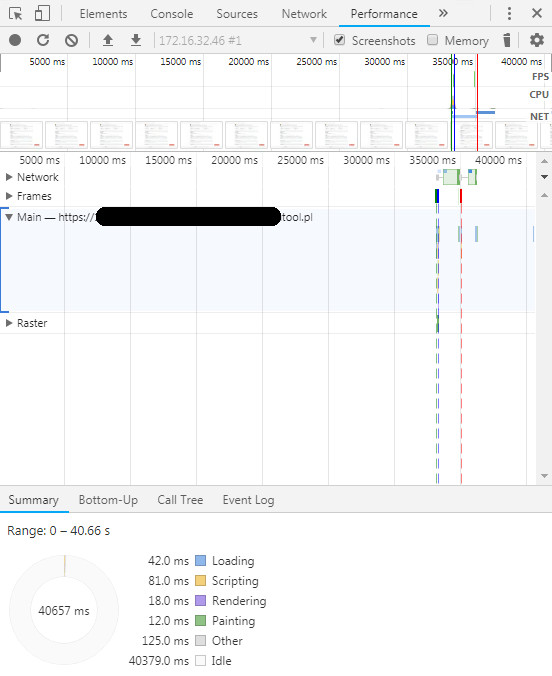

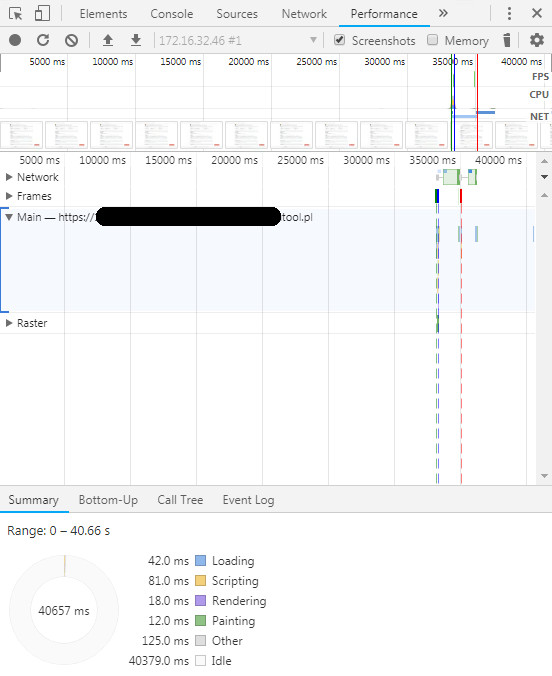

automated migration using Cloudendure to GCP, a legacy web application started to show a heavy performance hit. It took 40 seconds to load the perl web page compared to 5 seconds loading time in 'on premises' system. System side load was very minimal. While debugging in client browser side, I found that most of the time is spend as idle!!

This means loading time was most probably spent on the server side. pstree command showed me that a chmod processes was spawned by perl script. [code language="bash"] # pstree|grep chmod |-httpd-+-httpd---perl---chmod [/code] chmod is trying to "chmod -R 755" on a large number of log files. The process is in uninturruptable_sleep state. [code language="bash"] # ps aux|grep chmod apache 17694 0.0 0.0 4088 588 ? D 11:09 0:00 chmod -R 755 log/*.log root 17722 0.0 0.0 103316 884 pts/0 S+ 11:09 0:00 grep chmod</code> [/code] To understand what is process doing,

sysrq can be used. I used sysrq+t to dump thread state of all processes. On checking the chmod process, it looked like the process is waiting on NFS get attribute calls. [code language="bash"] Nov 27 11:14:45 test kernel: chmod D 0000000000000000 0 17909 17908 0x00000080 Nov 27 11:14:45 test kernel: ffff880036c7fb48 0000000000000082 0000000000000000 ffffffffa01b98ce Nov 27 11:14:45 test kernel: 0000000000000000 0000000000000286 00044e0b542040d0 ffff88003787e2b0 Nov 27 11:14:45 test kernel: ffffffff81ed13c0 00000001483610e6 ffff880037c27068 ffff880036c7ffd8 Nov 27 11:14:45 test kernel: Call Trace: Nov 27 11:14:45 test kernel: [<ffffffffa01b98ce>] ? xs_send_kvec+0x8e/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5b0>] ? rpc_wait_bit_killable+0x0/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5f2>] rpc_wait_bit_killable+0x42/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff8154bc0f>] __wait_on_bit+0x5f/0x90 Nov 27 11:14:45 test kernel: [<ffffffffa01b7ffa>] ? xprt_release_xprt+0x7a/0x80 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5b0>] ? rpc_wait_bit_killable+0x0/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff8154bcb8>] out_of_line_wait_on_bit+0x78/0x90 Nov 27 11:14:45 test kernel: [<ffffffff810a69b0>] ? wake_bit_function+0x0/0x50 Nov 27 11:14:45 test kernel: [<ffffffffa01b440c>] ? call_transmit+0x1ec/0x2c0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01beb35>] __rpc_execute+0xf5/0x350 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff81045fbc>] ? kvm_clock_read+0x1c/0x20 Nov 27 11:14:45 test kernel: [<ffffffff810a67b7>] ? bit_waitqueue+0x17/0xd0 Nov 27 11:14:45 test kernel: [<ffffffffa01bedf1>] rpc_execute+0x61/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01b5475>] rpc_run_task+0x75/0x90 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01b5592>] ? rpc_call_sync+0x42/0x70 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa0273b9c>] ? nfs3_rpc_wrapper.clone.0+0x6c/0xc0 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa0274f27>] ? nfs3_proc_getattr+0x47/0x90 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa0262293>] ? __nfs_revalidate_inode+0xe3/0x220 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa026312e>] ? nfs_getattr+0xde/0x210 [nfs] Nov 27 11:14:45 test kernel: [<ffffffff8119f9b1>] ? vfs_getattr+0x51/0x80 Nov 27 11:14:45 test kernel: [<ffffffff81299e15>] ? _atomic_dec_and_lock+0x55/0x80 Nov 27 11:14:45 test kernel: [<ffffffff8119fa44>] ? vfs_fstatat+0x64/0xa0 Nov 27 11:14:45 test kernel: [<ffffffff8119fbab>] ? vfs_stat+0x1b/0x20 Nov 27 11:14:45 test kernel: [<ffffffff8119fbd4>] ? sys_newstat+0x24/0x50 Nov 27 11:14:45 test kernel: [<ffffffff810ee687>] ? audit_syscall_entry+0x1d7/0x200 Nov 27 11:14:45 test kernel: [<ffffffff8100c6e5>] ? math_state_restore+0x45/0x60 Nov 27 11:14:45 test kernel: [<ffffffff8154e68e>] ? do_device_not_available+0xe/0x10 Nov 27 11:14:45 test kernel: [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b Nov 27 11:14:45 test kernel: Sched Debug Version: v0.09, 2.6.32-696.13.2.el6.x86_64 # [/code] The log files are shared from an 'on premises' NAS server. During the automated migration, the server was moved to us-central1 as it was the cheapest region. The NAS server is in Europe and has a high round-trip time when pinged from a GCP instance: [code language="bash"] # ping nas.test.com PING nas.test.com (192.168.1.50) 56(84) bytes of data. 64 bytes from nas.test.com (192.168.1.50): icmp_seq=1 ttl=254 time=121 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=2 ttl=254 time=120 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=3 ttl=254 time=120 ms [/code] Compared to 'on premises' server: [code language="bash"] # ping nas01 PING nas.test.com (192.168.1.50) 56(84) bytes of data. 64 bytes from nas.test.com (192.168.1.50): icmp_seq=1 ttl=252 time=15.8 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=2 ttl=252 time=15.5 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=3 ttl=252 time=15.9 ms [/code] Redeploying the instance to europe-west3 reduced the page load time from 40 seconds to 5 seconds. The moral of the story is obvious: keep your data as close as possible to the VM instance. Note: * Always plan a data migration strategy before moving VMs. * If possible, migrate data to the cloud.

Filestore can be used in this scenario without major application/system changes. * Rewrite legacy applications as much as possible. A simple solution here could be to decouple chmod from webpage. For example, use a separate process or cron. This would further reduce the page loading time.

This means loading time was most probably spent on the server side. pstree command showed me that a chmod processes was spawned by perl script. [code language="bash"] # pstree|grep chmod |-httpd-+-httpd---perl---chmod [/code] chmod is trying to "chmod -R 755" on a large number of log files. The process is in uninturruptable_sleep state. [code language="bash"] # ps aux|grep chmod apache 17694 0.0 0.0 4088 588 ? D 11:09 0:00 chmod -R 755 log/*.log root 17722 0.0 0.0 103316 884 pts/0 S+ 11:09 0:00 grep chmod</code> [/code] To understand what is process doing,

sysrq can be used. I used sysrq+t to dump thread state of all processes. On checking the chmod process, it looked like the process is waiting on NFS get attribute calls. [code language="bash"] Nov 27 11:14:45 test kernel: chmod D 0000000000000000 0 17909 17908 0x00000080 Nov 27 11:14:45 test kernel: ffff880036c7fb48 0000000000000082 0000000000000000 ffffffffa01b98ce Nov 27 11:14:45 test kernel: 0000000000000000 0000000000000286 00044e0b542040d0 ffff88003787e2b0 Nov 27 11:14:45 test kernel: ffffffff81ed13c0 00000001483610e6 ffff880037c27068 ffff880036c7ffd8 Nov 27 11:14:45 test kernel: Call Trace: Nov 27 11:14:45 test kernel: [<ffffffffa01b98ce>] ? xs_send_kvec+0x8e/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5b0>] ? rpc_wait_bit_killable+0x0/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5f2>] rpc_wait_bit_killable+0x42/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff8154bc0f>] __wait_on_bit+0x5f/0x90 Nov 27 11:14:45 test kernel: [<ffffffffa01b7ffa>] ? xprt_release_xprt+0x7a/0x80 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5b0>] ? rpc_wait_bit_killable+0x0/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff8154bcb8>] out_of_line_wait_on_bit+0x78/0x90 Nov 27 11:14:45 test kernel: [<ffffffff810a69b0>] ? wake_bit_function+0x0/0x50 Nov 27 11:14:45 test kernel: [<ffffffffa01b440c>] ? call_transmit+0x1ec/0x2c0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01beb35>] __rpc_execute+0xf5/0x350 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff81045fbc>] ? kvm_clock_read+0x1c/0x20 Nov 27 11:14:45 test kernel: [<ffffffff810a67b7>] ? bit_waitqueue+0x17/0xd0 Nov 27 11:14:45 test kernel: [<ffffffffa01bedf1>] rpc_execute+0x61/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01b5475>] rpc_run_task+0x75/0x90 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01b5592>] ? rpc_call_sync+0x42/0x70 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa0273b9c>] ? nfs3_rpc_wrapper.clone.0+0x6c/0xc0 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa0274f27>] ? nfs3_proc_getattr+0x47/0x90 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa0262293>] ? __nfs_revalidate_inode+0xe3/0x220 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa026312e>] ? nfs_getattr+0xde/0x210 [nfs] Nov 27 11:14:45 test kernel: [<ffffffff8119f9b1>] ? vfs_getattr+0x51/0x80 Nov 27 11:14:45 test kernel: [<ffffffff81299e15>] ? _atomic_dec_and_lock+0x55/0x80 Nov 27 11:14:45 test kernel: [<ffffffff8119fa44>] ? vfs_fstatat+0x64/0xa0 Nov 27 11:14:45 test kernel: [<ffffffff8119fbab>] ? vfs_stat+0x1b/0x20 Nov 27 11:14:45 test kernel: [<ffffffff8119fbd4>] ? sys_newstat+0x24/0x50 Nov 27 11:14:45 test kernel: [<ffffffff810ee687>] ? audit_syscall_entry+0x1d7/0x200 Nov 27 11:14:45 test kernel: [<ffffffff8100c6e5>] ? math_state_restore+0x45/0x60 Nov 27 11:14:45 test kernel: [<ffffffff8154e68e>] ? do_device_not_available+0xe/0x10 Nov 27 11:14:45 test kernel: [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b Nov 27 11:14:45 test kernel: Sched Debug Version: v0.09, 2.6.32-696.13.2.el6.x86_64 # [/code] The log files are shared from an 'on premises' NAS server. During the automated migration, the server was moved to us-central1 as it was the cheapest region. The NAS server is in Europe and has a high round-trip time when pinged from a GCP instance: [code language="bash"] # ping nas.test.com PING nas.test.com (192.168.1.50) 56(84) bytes of data. 64 bytes from nas.test.com (192.168.1.50): icmp_seq=1 ttl=254 time=121 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=2 ttl=254 time=120 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=3 ttl=254 time=120 ms [/code] Compared to 'on premises' server: [code language="bash"] # ping nas01 PING nas.test.com (192.168.1.50) 56(84) bytes of data. 64 bytes from nas.test.com (192.168.1.50): icmp_seq=1 ttl=252 time=15.8 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=2 ttl=252 time=15.5 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=3 ttl=252 time=15.9 ms [/code] Redeploying the instance to europe-west3 reduced the page load time from 40 seconds to 5 seconds. The moral of the story is obvious: keep your data as close as possible to the VM instance. Note: * Always plan a data migration strategy before moving VMs. * If possible, migrate data to the cloud.

Filestore can be used in this scenario without major application/system changes. * Rewrite legacy applications as much as possible. A simple solution here could be to decouple chmod from webpage. For example, use a separate process or cron. This would further reduce the page loading time.

This means loading time was most probably spent on the server side. pstree command showed me that a chmod processes was spawned by perl script. [code language="bash"] # pstree|grep chmod |-httpd-+-httpd---perl---chmod [/code] chmod is trying to "chmod -R 755" on a large number of log files. The process is in uninturruptable_sleep state. [code language="bash"] # ps aux|grep chmod apache 17694 0.0 0.0 4088 588 ? D 11:09 0:00 chmod -R 755 log/*.log root 17722 0.0 0.0 103316 884 pts/0 S+ 11:09 0:00 grep chmod</code> [/code] To understand what is process doing,

sysrq can be used. I used sysrq+t to dump thread state of all processes. On checking the chmod process, it looked like the process is waiting on NFS get attribute calls. [code language="bash"] Nov 27 11:14:45 test kernel: chmod D 0000000000000000 0 17909 17908 0x00000080 Nov 27 11:14:45 test kernel: ffff880036c7fb48 0000000000000082 0000000000000000 ffffffffa01b98ce Nov 27 11:14:45 test kernel: 0000000000000000 0000000000000286 00044e0b542040d0 ffff88003787e2b0 Nov 27 11:14:45 test kernel: ffffffff81ed13c0 00000001483610e6 ffff880037c27068 ffff880036c7ffd8 Nov 27 11:14:45 test kernel: Call Trace: Nov 27 11:14:45 test kernel: [<ffffffffa01b98ce>] ? xs_send_kvec+0x8e/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5b0>] ? rpc_wait_bit_killable+0x0/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5f2>] rpc_wait_bit_killable+0x42/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff8154bc0f>] __wait_on_bit+0x5f/0x90 Nov 27 11:14:45 test kernel: [<ffffffffa01b7ffa>] ? xprt_release_xprt+0x7a/0x80 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5b0>] ? rpc_wait_bit_killable+0x0/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff8154bcb8>] out_of_line_wait_on_bit+0x78/0x90 Nov 27 11:14:45 test kernel: [<ffffffff810a69b0>] ? wake_bit_function+0x0/0x50 Nov 27 11:14:45 test kernel: [<ffffffffa01b440c>] ? call_transmit+0x1ec/0x2c0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01beb35>] __rpc_execute+0xf5/0x350 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff81045fbc>] ? kvm_clock_read+0x1c/0x20 Nov 27 11:14:45 test kernel: [<ffffffff810a67b7>] ? bit_waitqueue+0x17/0xd0 Nov 27 11:14:45 test kernel: [<ffffffffa01bedf1>] rpc_execute+0x61/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01b5475>] rpc_run_task+0x75/0x90 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01b5592>] ? rpc_call_sync+0x42/0x70 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa0273b9c>] ? nfs3_rpc_wrapper.clone.0+0x6c/0xc0 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa0274f27>] ? nfs3_proc_getattr+0x47/0x90 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa0262293>] ? __nfs_revalidate_inode+0xe3/0x220 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa026312e>] ? nfs_getattr+0xde/0x210 [nfs] Nov 27 11:14:45 test kernel: [<ffffffff8119f9b1>] ? vfs_getattr+0x51/0x80 Nov 27 11:14:45 test kernel: [<ffffffff81299e15>] ? _atomic_dec_and_lock+0x55/0x80 Nov 27 11:14:45 test kernel: [<ffffffff8119fa44>] ? vfs_fstatat+0x64/0xa0 Nov 27 11:14:45 test kernel: [<ffffffff8119fbab>] ? vfs_stat+0x1b/0x20 Nov 27 11:14:45 test kernel: [<ffffffff8119fbd4>] ? sys_newstat+0x24/0x50 Nov 27 11:14:45 test kernel: [<ffffffff810ee687>] ? audit_syscall_entry+0x1d7/0x200 Nov 27 11:14:45 test kernel: [<ffffffff8100c6e5>] ? math_state_restore+0x45/0x60 Nov 27 11:14:45 test kernel: [<ffffffff8154e68e>] ? do_device_not_available+0xe/0x10 Nov 27 11:14:45 test kernel: [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b Nov 27 11:14:45 test kernel: Sched Debug Version: v0.09, 2.6.32-696.13.2.el6.x86_64 # [/code] The log files are shared from an 'on premises' NAS server. During the automated migration, the server was moved to us-central1 as it was the cheapest region. The NAS server is in Europe and has a high round-trip time when pinged from a GCP instance: [code language="bash"] # ping nas.test.com PING nas.test.com (192.168.1.50) 56(84) bytes of data. 64 bytes from nas.test.com (192.168.1.50): icmp_seq=1 ttl=254 time=121 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=2 ttl=254 time=120 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=3 ttl=254 time=120 ms [/code] Compared to 'on premises' server: [code language="bash"] # ping nas01 PING nas.test.com (192.168.1.50) 56(84) bytes of data. 64 bytes from nas.test.com (192.168.1.50): icmp_seq=1 ttl=252 time=15.8 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=2 ttl=252 time=15.5 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=3 ttl=252 time=15.9 ms [/code] Redeploying the instance to europe-west3 reduced the page load time from 40 seconds to 5 seconds. The moral of the story is obvious: keep your data as close as possible to the VM instance. Note: * Always plan a data migration strategy before moving VMs. * If possible, migrate data to the cloud.

Filestore can be used in this scenario without major application/system changes. * Rewrite legacy applications as much as possible. A simple solution here could be to decouple chmod from webpage. For example, use a separate process or cron. This would further reduce the page loading time.

This means loading time was most probably spent on the server side. pstree command showed me that a chmod processes was spawned by perl script. [code language="bash"] # pstree|grep chmod |-httpd-+-httpd---perl---chmod [/code] chmod is trying to "chmod -R 755" on a large number of log files. The process is in uninturruptable_sleep state. [code language="bash"] # ps aux|grep chmod apache 17694 0.0 0.0 4088 588 ? D 11:09 0:00 chmod -R 755 log/*.log root 17722 0.0 0.0 103316 884 pts/0 S+ 11:09 0:00 grep chmod</code> [/code] To understand what is process doing,

sysrq can be used. I used sysrq+t to dump thread state of all processes. On checking the chmod process, it looked like the process is waiting on NFS get attribute calls. [code language="bash"] Nov 27 11:14:45 test kernel: chmod D 0000000000000000 0 17909 17908 0x00000080 Nov 27 11:14:45 test kernel: ffff880036c7fb48 0000000000000082 0000000000000000 ffffffffa01b98ce Nov 27 11:14:45 test kernel: 0000000000000000 0000000000000286 00044e0b542040d0 ffff88003787e2b0 Nov 27 11:14:45 test kernel: ffffffff81ed13c0 00000001483610e6 ffff880037c27068 ffff880036c7ffd8 Nov 27 11:14:45 test kernel: Call Trace: Nov 27 11:14:45 test kernel: [<ffffffffa01b98ce>] ? xs_send_kvec+0x8e/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5b0>] ? rpc_wait_bit_killable+0x0/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5f2>] rpc_wait_bit_killable+0x42/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff8154bc0f>] __wait_on_bit+0x5f/0x90 Nov 27 11:14:45 test kernel: [<ffffffffa01b7ffa>] ? xprt_release_xprt+0x7a/0x80 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01be5b0>] ? rpc_wait_bit_killable+0x0/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff8154bcb8>] out_of_line_wait_on_bit+0x78/0x90 Nov 27 11:14:45 test kernel: [<ffffffff810a69b0>] ? wake_bit_function+0x0/0x50 Nov 27 11:14:45 test kernel: [<ffffffffa01b440c>] ? call_transmit+0x1ec/0x2c0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01beb35>] __rpc_execute+0xf5/0x350 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffff81045fbc>] ? kvm_clock_read+0x1c/0x20 Nov 27 11:14:45 test kernel: [<ffffffff810a67b7>] ? bit_waitqueue+0x17/0xd0 Nov 27 11:14:45 test kernel: [<ffffffffa01bedf1>] rpc_execute+0x61/0xa0 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01b5475>] rpc_run_task+0x75/0x90 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa01b5592>] ? rpc_call_sync+0x42/0x70 [sunrpc] Nov 27 11:14:45 test kernel: [<ffffffffa0273b9c>] ? nfs3_rpc_wrapper.clone.0+0x6c/0xc0 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa0274f27>] ? nfs3_proc_getattr+0x47/0x90 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa0262293>] ? __nfs_revalidate_inode+0xe3/0x220 [nfs] Nov 27 11:14:45 test kernel: [<ffffffffa026312e>] ? nfs_getattr+0xde/0x210 [nfs] Nov 27 11:14:45 test kernel: [<ffffffff8119f9b1>] ? vfs_getattr+0x51/0x80 Nov 27 11:14:45 test kernel: [<ffffffff81299e15>] ? _atomic_dec_and_lock+0x55/0x80 Nov 27 11:14:45 test kernel: [<ffffffff8119fa44>] ? vfs_fstatat+0x64/0xa0 Nov 27 11:14:45 test kernel: [<ffffffff8119fbab>] ? vfs_stat+0x1b/0x20 Nov 27 11:14:45 test kernel: [<ffffffff8119fbd4>] ? sys_newstat+0x24/0x50 Nov 27 11:14:45 test kernel: [<ffffffff810ee687>] ? audit_syscall_entry+0x1d7/0x200 Nov 27 11:14:45 test kernel: [<ffffffff8100c6e5>] ? math_state_restore+0x45/0x60 Nov 27 11:14:45 test kernel: [<ffffffff8154e68e>] ? do_device_not_available+0xe/0x10 Nov 27 11:14:45 test kernel: [<ffffffff8100b0d2>] ? system_call_fastpath+0x16/0x1b Nov 27 11:14:45 test kernel: Sched Debug Version: v0.09, 2.6.32-696.13.2.el6.x86_64 # [/code] The log files are shared from an 'on premises' NAS server. During the automated migration, the server was moved to us-central1 as it was the cheapest region. The NAS server is in Europe and has a high round-trip time when pinged from a GCP instance: [code language="bash"] # ping nas.test.com PING nas.test.com (192.168.1.50) 56(84) bytes of data. 64 bytes from nas.test.com (192.168.1.50): icmp_seq=1 ttl=254 time=121 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=2 ttl=254 time=120 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=3 ttl=254 time=120 ms [/code] Compared to 'on premises' server: [code language="bash"] # ping nas01 PING nas.test.com (192.168.1.50) 56(84) bytes of data. 64 bytes from nas.test.com (192.168.1.50): icmp_seq=1 ttl=252 time=15.8 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=2 ttl=252 time=15.5 ms 64 bytes from nas.test.com (192.168.1.50): icmp_seq=3 ttl=252 time=15.9 ms [/code] Redeploying the instance to europe-west3 reduced the page load time from 40 seconds to 5 seconds. The moral of the story is obvious: keep your data as close as possible to the VM instance. Note: * Always plan a data migration strategy before moving VMs. * If possible, migrate data to the cloud.

Filestore can be used in this scenario without major application/system changes. * Rewrite legacy applications as much as possible. A simple solution here could be to decouple chmod from webpage. For example, use a separate process or cron. This would further reduce the page loading time.