Our goal on the Couchbase Kubernetes testing team is to rigorously test the Autonomous Operator (AO) and certify the underlying Couchbase Server clusters that the AO manages. We test the AO for proper creation, management, and failure recovery of the managed CB cluster. Furthermore, we test that the CB cluster, its services, and its features all work correctly. Previously, we did all of our testing on custom Kubernetes and OpenShift clusters on-prem, but now we are certifying the AO on multiple Kubernetes services in the cloud. The first such certification is for Azure Kubernetes Service (AKS). Running our tests on AKS posed new challenges in creating and configuring the test environment compared to custom on-prem environments. This post highlights the solutions to these challenges.

Note: Couchbase Autonomous Operator is still in developer preview on Azure AKS, and we are targeting GA in our upcoming 1.2 release, planned for Q1 2019.

Requirements for our Kubernetes Test Framework

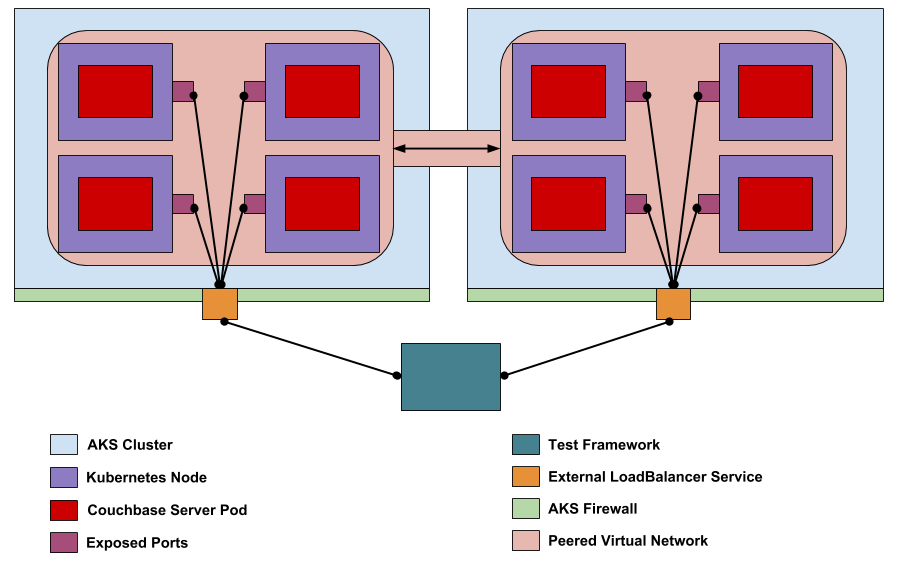

Our test framework executes several different types of tests, ranging from basic cluster creation to multi-node failure recovery with persistent volumes. We also test XDCR across three topologies, defined by where the two CB clusters reside: a single K8s cluster, two different K8s clusters, and one K8s cluster paired with one non-K8s cluster. To run the full test suite, four requirements must be met: (1) we must set up two separate AKS clusters that allow for XDCR between them; (2) our test framework must be able to interact with each AKS cluster over the public internet; (3) we must be able to reach the CB clusters within the AKS clusters from the public internet; and (4) at least one dynamic storage class must be present in AKS for our persistent volume tests. The following sections describe the steps taken to meet these requirements.

Creating XDCR-Ready AKS Clusters

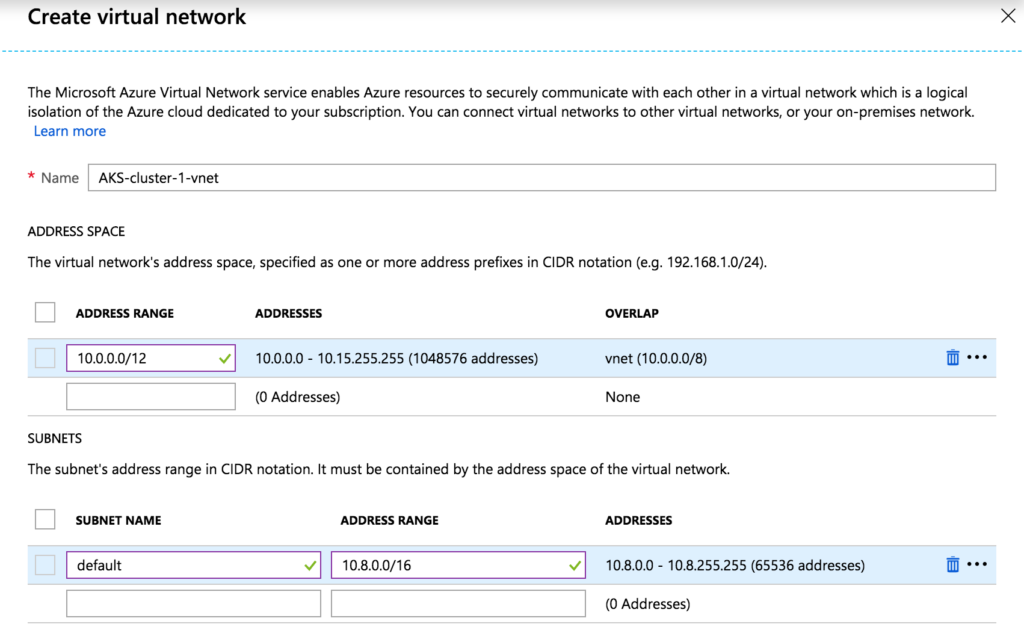

The main hurdle for XDCR to work between two CB clusters (in K8s or otherwise) is that a Layer 3 route must exist between them. CB nodes use internal IP addresses provided by the K8s API, based on the network configuration of the AKS cluster. By default, two AKS clusters use the same internal address ranges, so outgoing XDCR traffic never reaches its destination in the other AKS cluster. The solution is to set up the two AKS clusters such that their internal networks can peer. Peering allows the CB clusters in each AKS cluster to communicate correctly using their internal IP addresses. To set up network peering in AKS, we first need to choose non-overlapping network prefixes for the two AKS clusters. Then, based on these prefixes, we need to determine for each cluster a cluster subnet for the Kubernetes nodes, a service subnet for Kubernetes services that doesn't overlap with the cluster subnet, a DNS address inside the service subnet, and a Docker bridge address. The following table shows the proper network configuration for each AKS cluster.

| Cluster | AKS-cluster-1 | AKS-cluster-2 |
| --- | --- | --- |
| Prefix | 10.0.0.0/12 | 10.16.0.0/12 |
| Cluster Subnet | 10.8.0.0/16 | 10.24.0.0/16 |
| Service Subnet | 10.0.0.0/16 | 10.16.0.0/16 |
| DNS Address | 10.0.0.10 | 10.16.0.10 |
| Docker Bridge Address | 172.17.0.1/16 | 172.17.0.1/16 |
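The constraints behind this table can be checked mechanically before any cluster is created. The following is a minimal sketch in Go using only the standard library's `net/netip` package; the type and function names (`clusterNet`, `plan`, `validate`, `peerable`) are hypothetical and not part of our test framework. It verifies that each cluster subnet sits inside its prefix, that the cluster and service subnets do not overlap, that the DNS address falls inside the service subnet, and that the two prefixes are disjoint so the virtual networks can be peered.

```go
package main

import (
	"fmt"
	"net/netip"
)

// clusterNet holds the address plan for one AKS cluster (values from the table).
type clusterNet struct {
	name          string
	prefix        netip.Prefix // virtual network address space
	clusterSubnet netip.Prefix // subnet for the Kubernetes nodes
	serviceSubnet netip.Prefix // Kubernetes service address range
	dns           netip.Addr   // DNS service address
}

// plan parses the textual values into a clusterNet; it panics on malformed input.
func plan(name, prefix, clusterSubnet, serviceSubnet, dns string) clusterNet {
	return clusterNet{
		name:          name,
		prefix:        netip.MustParsePrefix(prefix),
		clusterSubnet: netip.MustParsePrefix(clusterSubnet),
		serviceSubnet: netip.MustParsePrefix(serviceSubnet),
		dns:           netip.MustParseAddr(dns),
	}
}

// within reports whether inner lies entirely inside outer.
func within(outer, inner netip.Prefix) bool {
	return inner.Bits() >= outer.Bits() && outer.Contains(inner.Addr())
}

// validate checks a single cluster's plan for internal consistency.
func validate(c clusterNet) error {
	if !within(c.prefix, c.clusterSubnet) {
		return fmt.Errorf("%s: cluster subnet %s is outside prefix %s", c.name, c.clusterSubnet, c.prefix)
	}
	if c.clusterSubnet.Overlaps(c.serviceSubnet) {
		return fmt.Errorf("%s: cluster subnet %s overlaps service subnet %s", c.name, c.clusterSubnet, c.serviceSubnet)
	}
	if !c.serviceSubnet.Contains(c.dns) {
		return fmt.Errorf("%s: DNS address %s is outside service subnet %s", c.name, c.dns, c.serviceSubnet)
	}
	return nil
}

// peerable reports whether the two prefixes are disjoint, which peering requires.
func peerable(a, b clusterNet) bool {
	return !a.prefix.Overlaps(b.prefix)
}

func main() {
	c1 := plan("AKS-cluster-1", "10.0.0.0/12", "10.8.0.0/16", "10.0.0.0/16", "10.0.0.10")
	c2 := plan("AKS-cluster-2", "10.16.0.0/12", "10.24.0.0/16", "10.16.0.0/16", "10.16.0.10")
	for _, c := range []clusterNet{c1, c2} {
		if err := validate(c); err != nil {
			fmt.Println("invalid plan:", err)
			return
		}
	}
	fmt.Println("prefixes can be peered:", peerable(c1, c2))
}
```

Two clusters created with identical default ranges would fail the `peerable` check, which is the situation the custom prefixes are meant to avoid.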

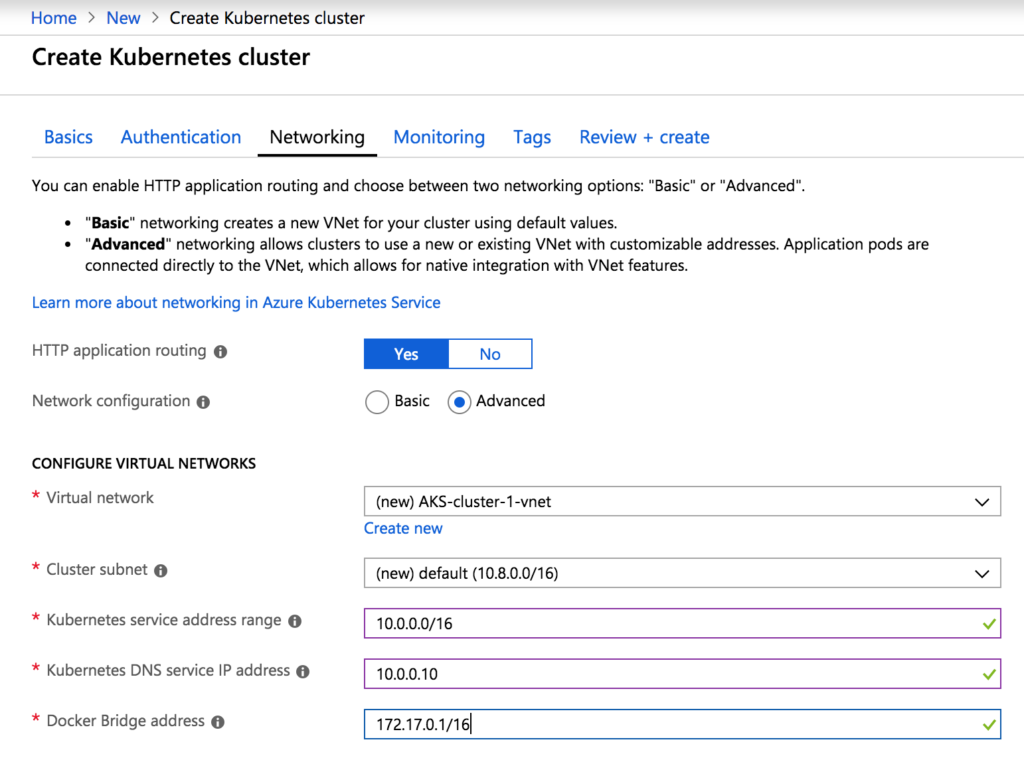

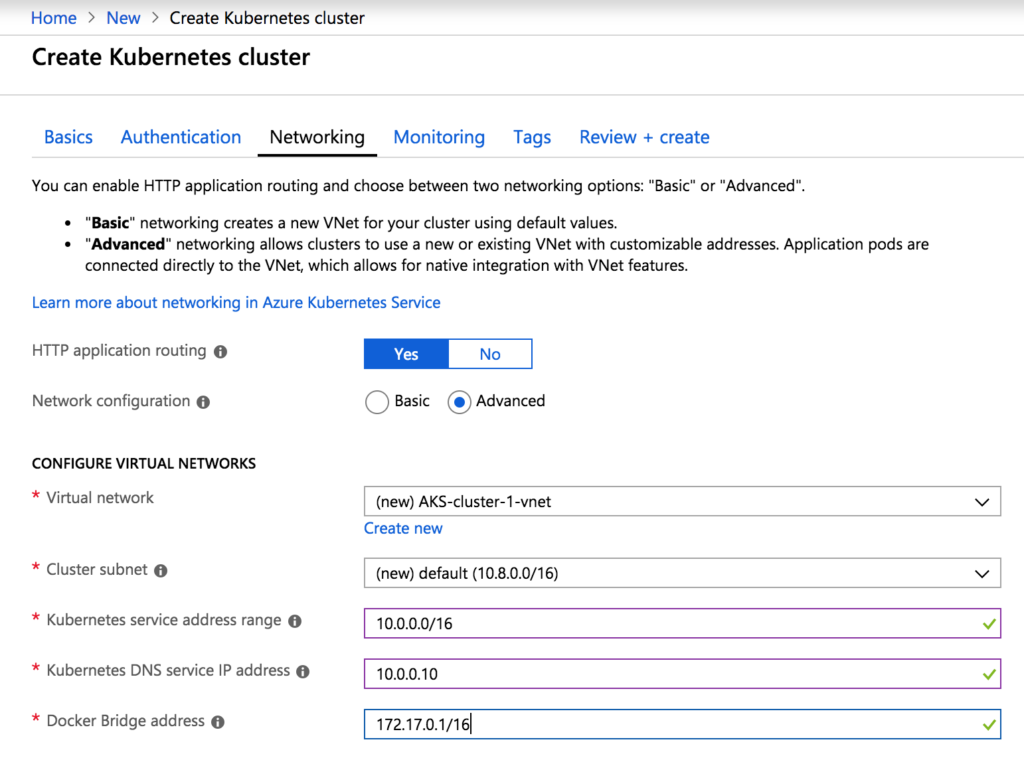

Now that the proper network configuration is determined, we can create these two AKS clusters from the Azure Portal. Creating each cluster mostly follows the instructions provided by Azure at https://docs.microsoft.com/en-us/azure/aks/kubernetes-walkthrough-portal [1]; the only difference is in the network setup (step 3). The steps below are for AKS-cluster-1 and use the corresponding network values determined earlier. The steps for AKS-cluster-2 are the same, using the AKS-cluster-2 network values. Once the networking step is reached, enable HTTP application routing and choose advanced network configuration.

Create a new virtual network using the prefix and cluster subnet determined earlier.

Fill in the remaining fields in the networking tab with the service subnet, DNS address, and Docker bridge address values.

Proceed with setting up the AKS cluster according to the documentation. While AKS-cluster-1 is deploying, set up AKS-cluster-2 using the same steps.


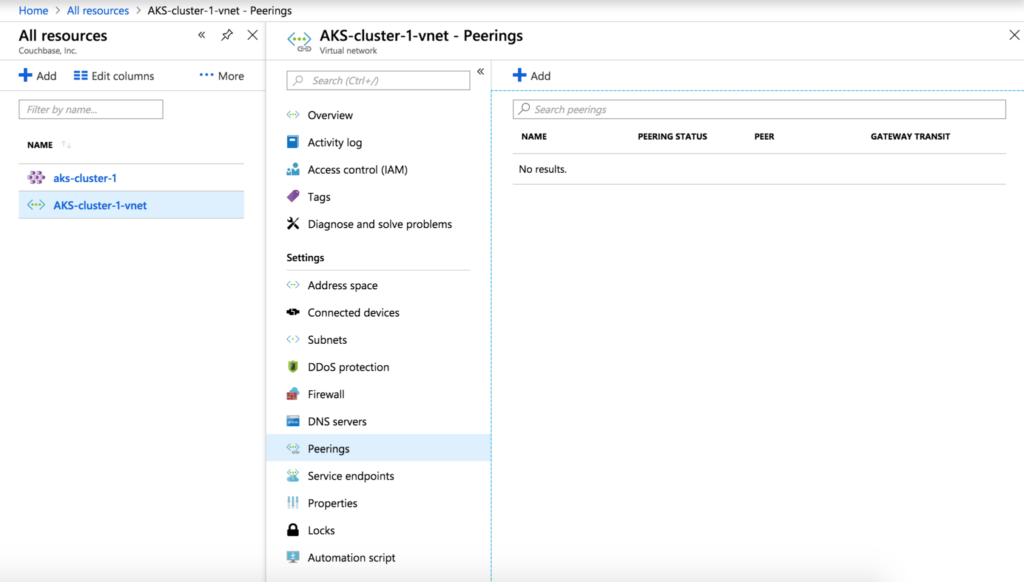

Now that we have two AKS clusters with proper network configuration, we can set up network peering. In the UI, navigate to the virtual network AKS-cluster-1-vnet created for AKS-cluster-1, select the Peerings tab and click Add to create a new peering.

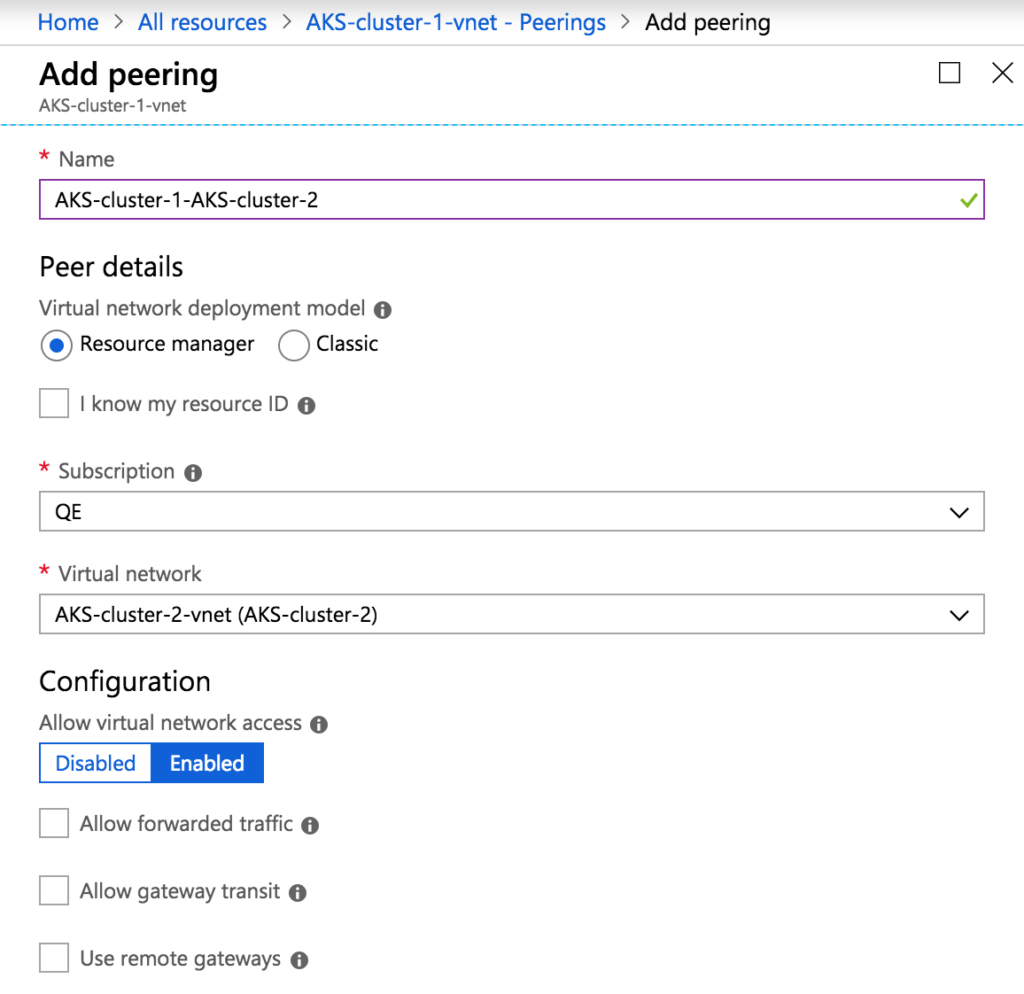

Give the peering a name like AKS-cluster-1-AKS-cluster-2 and select AKS-cluster-2-vnet as the virtual network.


Peering requires both networks to peer, so we must also set up peering from AKS-cluster-2-vnet to AKS-cluster-1-vnet in a similar manner. Once complete, the two AKS clusters can host CB clusters with XDCR.

Accessing AKS Kubernetes API Over Public Internet

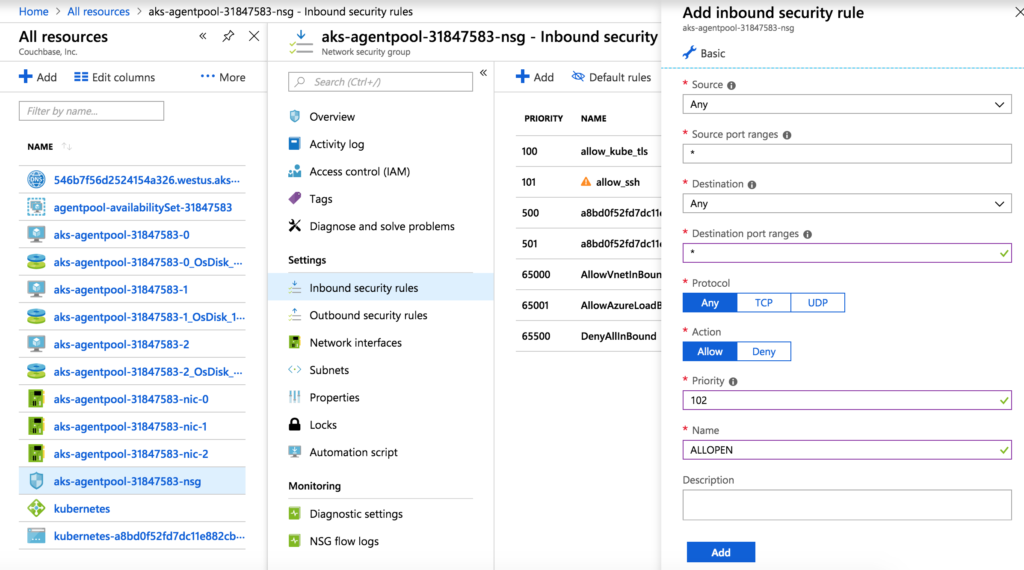

Since our test framework runs from outside AKS, we will need to set up external access to the Kubernetes API for each AKS cluster. This process is relatively straightforward. We need to modify the Network Security Group Inbound and Outbound rules for each AKS cluster. Navigate to the Network Security Group for AKS-cluster-1, select Inbound security rules and then Add to create a new rule. To make the setup simple, create this rule allowing any source IP/port and destination IP/port. Give this rule the priority number 102.

Now, do the same for outbound security rules. Then modify the inbound and outbound security rules for AKS-cluster-2 similarly.

The next step is to pull down the kubeconfig file (credentials) for each AKS cluster. These files are used by the test framework to access and interact with the Kubernetes API. Make sure you have the Azure CLI installed locally, as outlined here: https://docs.microsoft.com/en-us/cli/azure/install-azure-cli?view=azure-cli-latest [2]. You will also need to have kubectl installed locally, as outlined here: https://kubernetes.io/docs/tasks/tools/install-kubectl/ [3]. Once both are installed and working, run the following commands to pull down the kubeconfig file for AKS-cluster-1 and AKS-cluster-2:

```bash
az aks get-credentials --resource-group AKS-cluster-1 --name aks-cluster-1
az aks get-credentials --resource-group AKS-cluster-2 --name aks-cluster-2
```

The cluster credentials will be stored in the ~/.kube/config file. For our testing framework, we separate each cluster's credentials into its own file: ~/.kube/config_aks_cluster_1 and ~/.kube/config_aks_cluster_2.
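One way to get per-cluster files without splitting ~/.kube/config by hand is the `--file` option of `az aks get-credentials`, which writes the credentials to a kubeconfig path of your choice. The sketch below is a dry run in Go that only prints the commands; the helper names (`credentialArgs`, `kubeconfigPath`) are hypothetical, and this is not necessarily how our framework produces its files.

```go
package main

import (
	"fmt"
	"os"
	"path/filepath"
	"strings"
)

// credentialArgs builds the az CLI arguments that write one cluster's
// credentials to its own kubeconfig file instead of ~/.kube/config.
func credentialArgs(resourceGroup, cluster, kubeconfig string) []string {
	return []string{
		"aks", "get-credentials",
		"--resource-group", resourceGroup,
		"--name", cluster,
		"--file", kubeconfig,
	}
}

// kubeconfigPath derives the per-cluster file name used in this post,
// for example aks-cluster-1 becomes <home>/.kube/config_aks_cluster_1.
func kubeconfigPath(home, cluster string) string {
	return filepath.Join(home, ".kube", "config_"+strings.ReplaceAll(cluster, "-", "_"))
}

func main() {
	home, err := os.UserHomeDir()
	if err != nil {
		fmt.Println("cannot determine home directory:", err)
		return
	}
	// Resource group and cluster names as used in the commands above.
	clusters := map[string]string{
		"aks-cluster-1": "AKS-cluster-1",
		"aks-cluster-2": "AKS-cluster-2",
	}
	for cluster, resourceGroup := range clusters {
		args := credentialArgs(resourceGroup, cluster, kubeconfigPath(home, cluster))
		// Dry run: print the command rather than executing it.
		fmt.Println("az", strings.Join(args, " "))
	}
}
```

A test run can then select a cluster by pointing the `KUBECONFIG` environment variable, or kubectl's `--kubeconfig` flag, at the matching file.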

Accessing CB Pods in AKS

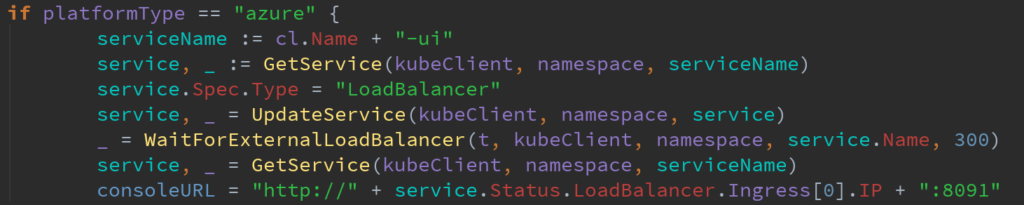

At this point, our test framework can interact with the Kubernetes API of both AKS clusters. This allows the test framework to install the AO and create AO-managed CB clusters for our tests. For most of our tests, however, the test framework also needs to interact with CB pods via REST calls. Previously, the test framework would have the AO expose the CB REST API as a Kubernetes nodeport service. This service opens a port on the Kubernetes node that forwards traffic to the CB pod's REST API port inside the node. These services were accessible via the Kubernetes node's private IP address. This was not a problem on-prem, since our Kubernetes cluster and test framework lived in the same private network. With AKS, however, our test framework is not colocated with the AKS Kubernetes nodes and cannot access a nodeport service that uses the private IPs of these nodes. The solution is simple: once the AO creates the CB cluster and nodeport service, have the test framework change the nodeport service's Spec.Type field to LoadBalancer. The test framework makes this change with a short piece of Go code.
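The framework's own snippet is not reproduced here. As a rough illustration of the idea, the sketch below builds the equivalent Kubernetes API call with only the Go standard library: a JSON merge patch that sets `spec.type` to `LoadBalancer`, sent as a PATCH to the service's endpoint. The API server URL, namespace, and service name are placeholders, the function names are hypothetical, and authentication (the token or client certificate from the kubeconfig) is left out. A real framework would more likely use a Kubernetes client library, which is an assumption on our part here.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"net/http"
	"net/url"
)

// loadBalancerPatch returns a JSON merge patch that changes only spec.type.
func loadBalancerPatch() ([]byte, error) {
	patch := map[string]any{
		"spec": map[string]any{"type": "LoadBalancer"},
	}
	return json.Marshal(patch)
}

// newServicePatchRequest builds (but does not send) the PATCH request that
// switches an existing service to type LoadBalancer.
func newServicePatchRequest(apiServer, namespace, service string) (*http.Request, error) {
	body, err := loadBalancerPatch()
	if err != nil {
		return nil, err
	}
	endpoint, err := url.JoinPath(apiServer, "api", "v1", "namespaces", namespace, "services", service)
	if err != nil {
		return nil, err
	}
	req, err := http.NewRequest(http.MethodPatch, endpoint, bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	// The Kubernetes API server selects the patch strategy from this header.
	req.Header.Set("Content-Type", "application/merge-patch+json")
	return req, nil
}

func main() {
	// Placeholder values; the real ones come from the kubeconfig and the AO-created service.
	req, err := newServicePatchRequest("https://aks-cluster-1.example.invalid", "default", "cb-example-ui")
	if err != nil {
		fmt.Println("building request failed:", err)
		return
	}
	body, _ := loadBalancerPatch()
	fmt.Println(req.Method, req.URL.String())
	fmt.Println(string(body))
}
```

Because the patch touches a single field, the ports and selectors that the AO set on the service are left unchanged.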

When the nodeport service Type is changed to LoadBalancer, AKS assigns a public IP to the service. Using this new public IP, the test framework can successfully make calls to the CB REST API, even from outside AKS. In the next major release, Autonomous Operator 1.2, the AO will have an option to expose either a nodeport service or a load balancer service, and any change made to an AO-deployed service will cause the AO to reconcile the service back to its original state. Therefore, the solution we use in our test framework is only temporary. In the future, we will move to creating standalone load balancer services and using the AO-created load balancer service.

Enabling Dynamic Persistent Volumes

The AO allows a CB cluster to bind to dynamically provisioned persistent volumes. This makes the CB cluster very resilient to data loss in the event that one or more CB pods go down. Our testing framework has many complex failure scenarios that involve CB pods storing data in persistent volumes. In some failure scenarios, the AO will restart the failed pod on a different Kubernetes node using the failed pod's persistent volume. Therefore, we must use persistent volumes that can be moved from one Kubernetes node to another. On AKS, two types of storage classes can be used for persistent volumes: AzureDisk and AzureFile. The default storage class that AKS provides is AzureDisk, but this storage class cannot create moveable persistent volumes. AzureFile is implemented in a way that allows moveable persistent volumes, so it is the storage class we test with on AKS. Azure provides instructions for setting up the AzureFile storage class here:

https://docs.microsoft.com/en-us/azure/aks/azure-files-dynamic-pv [4].

The setup involves first creating a storage account with:

```bash
# Look up the node resource group that AKS created for the cluster
az aks show --resource-group AKS-cluster-1 --name aks-cluster-1 --query nodeResourceGroup -o tsv
# Output:
MC_AKS-cluster-1_aks-cluster-1_westus

az storage account create --resource-group MC_AKS-cluster-1_aks-cluster-1_westus --name mystorageaccount --sku Standard_LRS
```

Then, submit a storage class, cluster role, and cluster role binding spec to Kubernetes:

```yaml
## azure-file-sc.yaml
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: azurefile
provisioner: kubernetes.io/azure-file
mountOptions:
  - dir_mode=0777
  - file_mode=0777
  - uid=1000
  - gid=1000
parameters:
  skuName: Standard_LRS
  storageAccount: mystorageaccount
```

```bash
kubectl apply -f azure-file-sc.yaml
```

```yaml
## azure-pvc-roles.yaml
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:azure-cloud-provider
rules:
- apiGroups: ['']
  resources: ['secrets']
  verbs: ['get','create']
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:azure-cloud-provider
roleRef:
  kind: ClusterRole
  apiGroup: rbac.authorization.k8s.io
  name: system:azure-cloud-provider
subjects:
- kind: ServiceAccount
  name: persistent-volume-binder
  namespace: kube-system
```

```bash
kubectl apply -f azure-pvc-roles.yaml
```

Now we have our AzureFile storage class set up and ready to use in AKS-cluster-1. We must repeat the same process for AKS-cluster-2.

Testing The Operator

At this point, we have everything we need to run the full suite of tests for the AO: two XDCR-ready Kubernetes clusters, accessible from the public internet (Kubernetes API and CB REST API), with dynamic persistent volumes enabled. During the initial test runs, we noticed some odd failures. These were caused mostly by timeouts in our test framework, and we were able to pinpoint the cause: AKS is extremely slow compared to on-prem Kubernetes clusters. Spinning up a CB pod can take up to five times longer. To resolve this issue, we had to make our timeouts variable, depending on the type of Kubernetes cluster being used. After that, the tests all ran fine, and no significant issues with the AO or CB cluster were found.
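A variable timeout of this kind can be as simple as a per-platform multiplier applied to a baseline. The sketch below is a minimal illustration in Go; the platform names, the three-minute baseline, and the function name `podReadyTimeout` are hypothetical, and the factor of 5 for AKS simply mirrors the "up to five times longer" observation above rather than a tuned value from our framework.

```go
package main

import (
	"fmt"
	"time"
)

// platform identifies the kind of Kubernetes cluster a test run targets.
type platform string

const (
	onPrem platform = "on-prem"
	aks    platform = "aks"
)

// basePodReadyTimeout is a hypothetical on-prem baseline for a CB pod to become ready.
const basePodReadyTimeout = 3 * time.Minute

// timeoutFactor scales the baseline per platform; 5 reflects the slowdown seen on AKS.
var timeoutFactor = map[platform]int{
	onPrem: 1,
	aks:    5,
}

// podReadyTimeout returns the pod-ready timeout for the given platform,
// falling back to the baseline for platforms without an entry.
func podReadyTimeout(p platform) time.Duration {
	factor, ok := timeoutFactor[p]
	if !ok {
		factor = 1
	}
	return time.Duration(factor) * basePodReadyTimeout
}

func main() {
	for _, p := range []platform{onPrem, aks} {
		fmt.Printf("%s: wait up to %s for a CB pod\n", p, podReadyTimeout(p))
	}
}
```

Keeping the factors in one table means a new platform only needs one new entry once its typical pod start-up time is known.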

Conclusion

Recently, the Couchbase testing team has been focusing on certifying the AO for use on the major cloud-provided Kubernetes services such as AKS, EKS, and GKE. The first cloud we focused on was AKS, and it brought several platform-specific challenges such as cloud-specific network configuration, accessibility, and storage class creation. However, we resolved these issues and can now run our automated test framework using AKS clusters. We will continue working on certifying other clouds in the coming months, but in the meantime, have some fun on AKS.

References:

[1] https://docs.microsoft.com/en-us/azure/aks/kubernetes-walkthrough-portal

[2] https://docs.microsoft.com/en-us/cli/azure/install-azure-cli?view=azure-cli-latest

[3] https://kubernetes.io/docs/tasks/tools/install-kubectl/

[4] https://docs.microsoft.com/en-us/azure/aks/azure-files-dynamic-pv