This post has results for performance regressions in all versions of RocksDB 6.x, 7.x and 8.x using a small server. The workload is IO-bound and RocksDB uses O_DIRECT. Unlike the previous two posts, I skip RocksDB 4.x and 5.x here because I am not sure they had good support for O_DIRECT. In a previous post I shared results for RocksDB 7.x and 8.x on a larger server. A post for a cached workload is here and for IO-bound with buffered IO is here.

The workload here is IO-bound and has low concurrency. When performance changes, one likely cause is a change in CPU overhead, but it isn't the only one.

Update - this blog post has been withdrawn. Some of the results are bogus because the block cache was too large and the iodir (IO-bound, O_DIRECT) setup was hurt by swap in some cases.

I will remove this post when the new results are ready.

Warning! Warning! Some of the results below are bogus.

- QPS for fillseq in 8.x is ~1/3 of what it was in early 6.x. There was a small drop from 6.13 to 6.21 and a big drop from 6.21 to 6.22. This problem doesn't reproduce when buffered IO is used instead of O_DIRECT. I will explain this in another post.

- Read QPS for read-only benchmarks decreased by up to 5% from RocksDB 6.x to 8.x

- Read QPS for read-write benchmarks is stable from RocksDB 6.x to 8.x

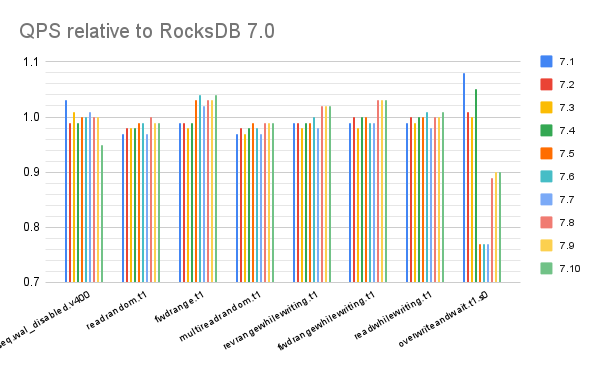

- QPS for overwriteandwait decreased by ~20% from 6.0 to 8.x. Most of the decrease is from RocksDB 7.4 to 7.5. QPS is roughly stable from RocksDB 7.5 through 8.x, excluding 8.6, and has been stable since RocksDB 7.8.

- A spreadsheet with all of the charts is here

I compiled all versions of 6.x, 7.x and 8.x using gcc. The build command line is:

make DISABLE_WARNING_AS_ERROR=1 DEBUG_LEVEL=0 static_lib db_bench

- 6.0.2, 6.1.2, 6.2.4, 6.3.6, 6.4.6, 6.5.3, 6.6.4, 6.7.3, 6.8.1, 6.9.4, 6.10.4, 6.11.7, 6.12.8, 6.13.4, 6.14.6, 6.15.5, 6.16.5, 6.17.3, 6.18.1, 6.19.4, 6.20.4, 6.21.3, 6.22.3, 6.23.3, 6.24.2, 6.25.3, 6.26.1, 6.27.3, 6.28.2, 6.29.5

- 7.0.4, 7.1.2, 7.2.2, 7.3.2, 7.4.5, 7.5.4, 7.6.0, 7.7.8, 7.8.3, 7.9.3, 7.10.2

- 8.0.0, 8.1.1, 8.2.1, 8.3.3, 8.4.4, 8.5.4, 8.6.7, 8.7.2, 8.8.0
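Building this many versions is easier with a small driver. The sketch below only generates the per-version command sequence; it is not the script I used. It assumes a RocksDB git checkout where release tags are named `v<version>`, and the `db_bench.<version>` output name and the `build_steps` helper are hypothetical names chosen for illustration. Only the final `make` arguments come from the build command line above.

```python
from typing import List

# The make invocation from this post, split into argv form.
MAKE_CMD = [
    "make",
    "DISABLE_WARNING_AS_ERROR=1",
    "DEBUG_LEVEL=0",
    "static_lib",
    "db_bench",
]


def build_steps(version: str) -> List[List[str]]:
    """Return the commands to build db_bench for one release.

    Assumptions (not from the post): tags are named v<version> and the
    binary is copied to db_bench.<version> so that builds don't clobber
    each other.
    """
    return [
        ["git", "checkout", f"v{version}"],
        ["make", "clean"],
        MAKE_CMD,
        ["cp", "db_bench", f"db_bench.{version}"],
    ]


if __name__ == "__main__":
    # Dry run: print the commands instead of executing them.
    for version in ["6.0.2", "7.0.4", "8.0.0"]:
        for step in build_steps(version):
            print(" ".join(step))
```

To run the steps for real, each one could be passed to `subprocess.run(step, check=True, cwd=...)` from inside the checkout.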

The benchmark used the Beelink server explained here that has 8 cores, 16G RAM and 1TB of NVMe SSD with XFS and Ubuntu 22.04 with the 5.15.0-79-generic kernel. There is just one storage device and no RAID. The value of max_sectors_kb is 512. For RocksDB 8.7 and 8.8 I reduced the value of compaction_readahead_size from 2MB (the default) to 480KB. Everything used the LRU block cache.
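To make the units concrete: 480KB is 491520 bytes, which is below the 512KB limit implied by max_sectors_kb, while the 2MB default is 2097152 bytes. The sketch below does the conversion for db_bench's `--compaction_readahead_size` flag and reads max_sectors_kb from sysfs. The device name `nvme0n1` is an assumption about this server, and the helper names are hypothetical.

```python
from pathlib import Path
from typing import Optional

KB = 1024


def kb_to_bytes(kb: int) -> int:
    """Convert KB to bytes; the db_bench option takes a byte count."""
    return kb * KB


def readahead_flag(kb: int) -> str:
    """Format the db_bench option for a readahead size given in KB."""
    return f"--compaction_readahead_size={kb_to_bytes(kb)}"


def read_max_sectors_kb(device: str = "nvme0n1") -> Optional[int]:
    """Read max_sectors_kb for a block device from sysfs.

    The device name is an assumption. Returns None when the file
    is missing or unreadable (for example, on a non-Linux host).
    """
    path = Path("/sys/block") / device / "queue" / "max_sectors_kb"
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    print(readahead_flag(480))
    limit = read_max_sectors_kb()
    if limit is not None:
        print(f"480KB readahead fits within max_sectors_kb={limit}: {480 <= limit}")
```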

I used my fork of the RocksDB benchmark scripts that are wrappers to run db_bench. These run db_bench tests in a special sequence -- load in key order, read-only, do some overwrites, read-write and then write-only. The benchmark was run using 1 client thread. How I do benchmarks for RocksDB is explained here and here.

- cached - database fits in the RocksDB block cache

- iobuf - IO-bound, working set doesn't fit in memory, uses buffered IO

- iodir - IO-bound, working set doesn't fit in memory, uses O_DIRECT
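The difference between iobuf and iodir comes down to whether RocksDB opens files with O_DIRECT. As a rough sketch, the mapping below shows how that could be expressed with the db_bench options `--use_direct_reads` and `--use_direct_io_for_flush_and_compaction`. It is an illustration rather than a copy of what the benchmark scripts pass, and the `direct_io_flags` helper is a hypothetical name. Cache sizing, which also differs between the setups, is left out because the values aren't listed here.

```python
from typing import List

CONFIGS = ("cached", "iobuf", "iodir")


def direct_io_flags(config: str) -> List[str]:
    """Return db_bench direct IO options for one of the three setups.

    Only iodir enables O_DIRECT; cached and iobuf use buffered IO.
    """
    if config not in CONFIGS:
        raise ValueError(f"unknown config: {config!r}")
    value = "true" if config == "iodir" else "false"
    return [
        f"--use_direct_reads={value}",
        f"--use_direct_io_for_flush_and_compaction={value}",
    ]


if __name__ == "__main__":
    for config in CONFIGS:
        print(config, " ".join(direct_io_flags(config)))
```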

- QPS for fillseq in 8.x is ~1/3 of what it was in early 6.x. There was a small drop from 6.13 to 6.21 and a big drop from 6.21 to 6.22. This problem doesn't reproduce when buffered IO is used instead of O_DIRECT.

- Read QPS for read-only benchmarks decreased by up to 5% from RocksDB 6.x to 8.x

- Read QPS for read-write benchmarks is stable from RocksDB 6.x to 8.x

- QPS for overwriteandwait decreased by ~20% from 6.0 to 8.x. Most of the decrease is from RocksDB 7.4 to 7.5. QPS is roughly stable from RocksDB 7.5 through 8.x, excluding 8.6, and has been stable since RocksDB 7.8.

- Compaction wall clock time (c_wsecs) increased by ~3X in 6.22

- Compaction CPU time (c_csecs) increased by ~1.4X in 6.22

- Stall% increased from 48.2 to 77.7

- User and system total CPU time (u_cpu, s_cpu) increased

- Process RSS increased from 0.6GB to 12.0GB
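Expressed as ratios, the two before/after pairs in the list above work out to about 1.6X for Stall% and 20X for process RSS. A minimal sketch of that arithmetic, using only the numbers quoted above (the `ratio` helper and the metric labels are names I made up for this example):

```python
def ratio(before: float, after: float) -> float:
    """Return after/before, the growth factor between two measurements."""
    if before <= 0:
        raise ValueError("before must be positive")
    return after / before


# (before, after) pairs copied from the list above.
metrics = {
    "stall_pct": (48.2, 77.7),
    "rss_gb": (0.6, 12.0),
}

if __name__ == "__main__":
    for name, (before, after) in metrics.items():
        print(f"{name}: {before} -> {after} ({ratio(before, after):.1f}X)")
```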

Results: 8.x
