Recently I came across a very interesting conference talk by a member of Amazon’s Driver Assistance Technology team. This team is responsible for the Android app that powers the driver’s displays inside Amazon’s Rivian vans. In this talk the speaker described the motivation for a major refactoring project that they’ve been conducting for the past months and shared their long-term vision for the app’s architecture.

The reason this talk immediately drew my attention is because it touches upon three of my favorite subjects: architecture, dependency injection and refactoring. Furthermore, the level and the density of the content was such, that the speaker managed to cover a lot of ground in this presentation. I think that this talk provides a very rare window into real-world, complex refactoring project.

In this post, I’ll share my analysis of Amazon’s refactoring initiative based on the contents of this talk. It is equivalent to a report that I’d write after reviewing the project, if Amazon would hire me to assist with it. In other words, this is an unsolicited consulting based on the limited information provided in that talk.

Old Architecture

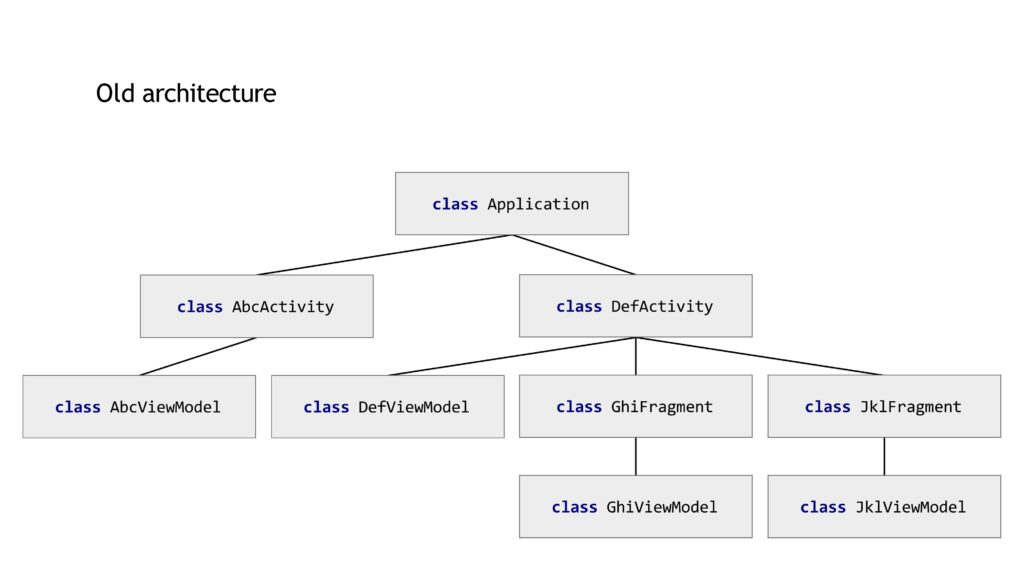

This is the project’s legacy “architecture”:

Right off the bat, I’d like to note that this is not the architecture of the application, but a depiction of a specific presentation layer architectural pattern (hereinafter: PLAP). This distinction has practical implications. Developers who treat PLAPs as the app’s architecture tend to give this aspect too much attention, while neglecting other parts of the project, even if they’re more important. That said, the choice of a PLAP is indeed an important architectural consideration.

The above PLAP is the standard MVVM approach recommended by Google. I try to avoid ViewModels in my projects and prefer a specific flavor of MVC, but MVVM is what most small and medium Android projects use today. Therefore, no surprise here.

In the context of PLAPs, if you’re using Fragments in your project to represent screens, then it’s better to standardize this approach. What I mean is that it would be better for AbcActivity in the above diagram to contain a Fragment, even if that Activity corresponds to just one screen. The reason is that, as the project grows in size and complexity, you want to be able to say “in this project, every screen is represented by a top-level Fragment”, without listing a bunch of exceptions.

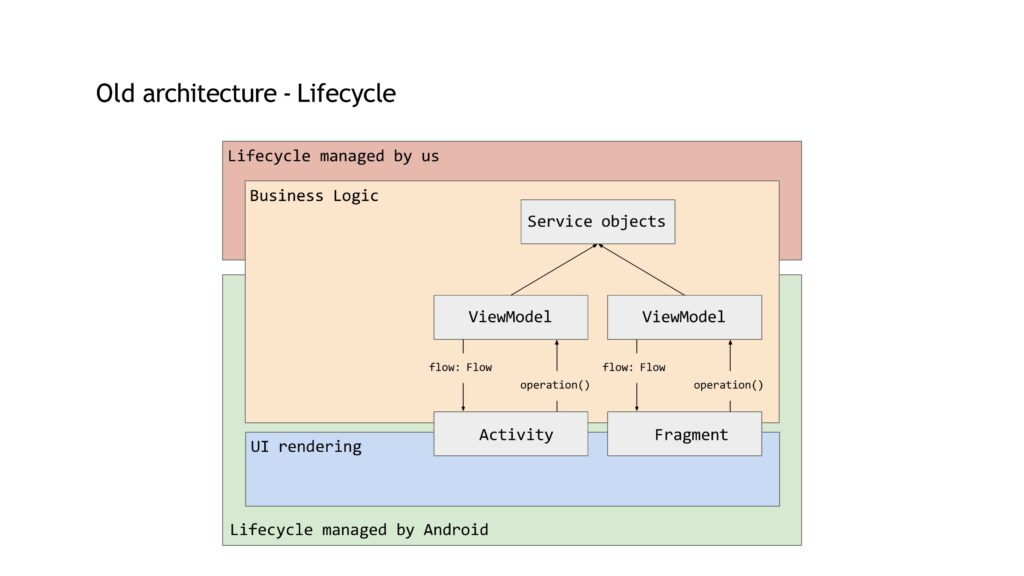

Another representation of the old architecture is this diagram, which shows the lifecycles that affect the different entities:

Good and clear naming is very important in software, so I recommend avoiding “service” terminology in Android projects, except for when referring to Android’s Service class. Otherwise, you create ambiguity for no good reason. As far as I understand, what they call “service objects” correspond to either use cases, or repositories, or global managers. These are basically objects that contain business logic and/or state.

If my assumption is correct, then this diagram, just like the previous one, simply shows the officially recommended approach for Android development today. Therefore, whatever drawbacks and limitations Amazon devs found and experienced, they are directly attributable to that approach.

Problems with the Old Architecture

Amazon devs associated the following list of “bottlenecks” with the old “architecture”:

- Driven by Android and its window management and not by business logic.

- Different lifecycles with different teardown hooks.

- Custom lifecycles like user scope adjacent.

- Coroutine scopes provided by Android components.

Furthermore, since they didn’t implement a Unidirectional Data Flow pattern (hereinafter: UDF), they experienced these additional challenges:

- Challenges synchronizing multiple screens.

- No holistic overview of the state of the application.

- Way too easy to mix business logic with UI code.

- Many services pushed into application scope.

The speaker elaborated on each of these points and gave examples of issues that they experienced. You can watch the talk to learn the full context.

Goals of the Refactoring Project

Amazon devs set to achieve these goals with the refactoring:

- Strongly decouple business logic from Android lifecycle.

- Features are device agnostic.

- Avoid thread and memory leaks.

They also adopted these guiding principles for the future of the codebase:

- Modular design.

- Composition over inheritance.

- Dependency inversion by default.

- Unidirectional Data Flow.

The above goals and principles look like a nice collection of desirable attributes. However, the devil is in the details. Therefore, in the next sections of this article, I’ll perform a critical review of Amazon’s app architecture, based on the information provided in this talk.

Migration to Anvil Dependency Injection Framework

Anvil is a wrapper around Dagger. This means that it exposes a different set of conventions to developers, but, under the hood, Dagger still runs the show. From a functional standpoint, Anvil’s biggest feature is that instead of listing modules inside components, which is the standard Dagger’s approach, it allows you to list components that a module should be installed in. This is Dependency Inversion Principle in action – modules point to components in the source code, instead of components pointing to modules. This aspect is very similar to Hilt’s implementation (which is also a wrapper around Dagger), but, unlike Hilt, Anvil doesn’t offer a predefined structure of components. Therefore, in theory, Anvil is less beginner-friendly, but more flexible.

Another very useful feature that Anvil provides is the ability to replace specific injected services in tests. This makes it simple to e.g. substitute the networking objects with fake implementations during integration testing.

From performance standpoint, Anvil can utilize Kotlin compiler plugin architecture instead of Dagger’s annotation processing and code generation, thus yielding faster builds.

I don’t have a strong opinion on whether Anvil is better than Dagger or Hilt, but I think that Dependency Injection frameworks are best suited for small and medium projects. They are especially useful at the initial stages of development, when their “magic” allows developers to experiment quickly, learn the app’s business domain and converge on the ultimate long-term architecture. For mature or bigger projects with slower pace of change, I find Pure Dependency Injection a better choice. Pure DI is verbose and requires more upfront manual work, but is simpler to learn and debug, and doesn’t impose any build or runtime penalties.

I wonder whether this team evaluated Pure Dependency Injection option before they decided to replace one DI framework with another.

Strongly Decouple Business Logic from Android Lifecycles

When I listened to the talk for the first time, this point surprised and confused me. See, it’s not that hard to decouple business logic from Android lifecycles. For example, you can use global objects (aka application-scoped objects) to host the business logic so that they aren’t affected by any internal lifecycle. Therefore, I wondered why this relatively straightforward objective is the first goal of what sounds like a major project?

The answer is that the team’s real goal wasn’t to decouple from Android lifecycles, but to introduce their own custom lifecycles. That’s much bigger goal, indeed.

To that end, they introduced a concept of a “scope”. That’s how the speaker defined what a “scope” is:

Scopes define the boundary software components operate in. A scope is a space with a well-defined lifecycle that can be created and torn down. Scopes host other objects and can bind them to their lifecycle. Sub-scopes or child scopes have the same or a shorter lifecycle as their parent scope.

Ralf Wondratschek

Once again, surprising naming. Dagger already uses “scopes” terminology, but this new “scope” is not Dagger’s scope. Coroutines have “scopes” too, which are different from this new scope as well. So, why reuse the same term for a new concept? Even if you believe that your use case is the “correct” one, I still recommend avoiding ambivalent and confusing naming.

Even bigger surprise here is that if you replace the term “scope” with “Dagger component” in the above definition, it’ll make perfect sense. It’s not a coincidence, because these new “scopes” fulfill the same fundamental role as Dagger components. Therefore, the team didn’t just reuse the same term for a new concept, but applied this name to a concept which had already had a different name in the codebase.

The new scopes are implementations of this interface:

interface Scope {

val name: String

val parent: Scope?

fun buildChild(name: String, builder: (Builder.() -> Unit)? = null): Scope

fun children(): Set<Scope>

fun register(scoped: Scoped)

fun isDestroyed(): Boolean

fun destroy()

fun <T : Any> getService(key: String): T?

}

This looks very much like an extended API of Dagger component.

And that’s how the new scopes are initialized:

rootScope = Scope.buildRootScope {

addDaggerComponent(createDaggerComponent())

}

application.rootScope

.buildChild(name = this::class.java.simpleName) {

addDaggerComponent(

application.rootScope

.daggerComponent<ActivityComponent.ParentComponent>()

.createActivityComponent()

)

}

From this code snippet it becomes immediately clear that the new scopes are wrappers around Dagger components.

Why did the team need these wrappers? Why couldn’t they just use Dagger components directly and avoid all the additional complexity and confusion?

My reading is that they wanted to track the individual “scoped services” contained within those “scopes” in order to delegate some calls to them whenever the containing scope changes its lifecycle state. Dagger components don’t have APIs which could support this requirement.

As I said earlier, the team wanted to introduce their own custom lifecycles. These lifecycles are implemented using “runtime scopes” which correspond to specific business runtime states of the application (e.g. “logged out scope”). You can implement custom lifecycles using Dagger components too, but Hilt made it somewhat more challenging.

On the topic of “runtime scopes”, my long-term conviction is that it’s an anti-pattern. Dependency injection should be about resolution of object graphs and it should be decoupled from application’s business logic. Therefore, jumping through hoops to tie “scopes” to business flows inside the app is a bad idea. Sure, given enough time, you can couple your DI infrastructure to the business flows, but the fact that you can do something doesn’t mean that you should. I saw this anti-pattern in multiple codebases and it always leads to complex, tricky and brittle code. In most cases, this anti-pattern can be replaced with several relatively simple objects. No need to hack with dependency injection, which is already complex enough on its own.

For instance, this is an example of a new “scoped service”:

@SingleIn(AppScope::class)

@ContributesMultibindingScoped(AppScope::class)

class QeCommandReceiver @Inject constructor(

private val application: Application,

) : BroadcastReceiver(), Scoped {

override fun onEnterScope(scope: Scope) {

application.registerReceiver(this, IntentFilter("..."))

}

override fun onExitScope() {

application.unregisterReceiver(this)

}

override fun onReceive(context: Context, intent: Intent) {

when (intent.getStringExtra("cmd")) {

...

}

}

}

Note how this class implements Scoped interface and is annotated with ContributesMultibindingScoped annotation. This is a new custom annotation which enables some custom magic that automatically registers this object to receive Scoped callbacks.

Now consider this alternative implementation, which doesn’t require any custom “scope”, new annotations, multibindings, etc.:

@Singleton

class QeCommandReceiverImpl @Inject constructor(

private val application: Application,

private val initController: InitializationController,

) : BroadcastReceiver(), QeCommandReceiver, Initializable {

init {

initController.registerListener(this)

}

override fun onInitialized() {

application.registerReceiver(this, IntentFilter("..."))

}

override fun onDeinitialized() {

application.unregisterReceiver(this)

}

override fun onReceive(context: Context, intent: Intent) {

when (intent.getStringExtra("cmd")) {

...

}

}

}

In this scenario, InitializationController would basically contain the same logic that the new custom “scope” uses to handle its own lifecycle and notifications.

As you might’ve noticed, I changed this class a bit such that it implements QeCommandReceiver interface. I did that to emphasize that this implementation also resolves the problem of “leaking” the initialization aspect into interface’s API. The interface doesn’t know about either InitializationController or Initializable, so these are implementation details, just like the team wanted. In fact, this implementation is better in this context because it allows you to hide the “initializable” aspect even if you don’t use interfaces. Just add an inner private class that implements Initializable and register it, instead of the parent object. As far as I can tell, you can’t do that if you use the manually created ContributesMultibindingScoped annotation.

Lastly, the presence of getService method in the Scope interface means that the team consciously sacrificed Dagger’s biggest feature: compile-time safety. Granted, they seem to use this service-locator-ish approach sparingly and wrap it into extension functions, such that the external clients don’t use this method directly, but, still, this is a major code smell.

All in all, I’m pretty sure that most (maybe all) use cases that the team wanted to accommodate by implementing their custom “runtime scopes” could be addressed in simpler manner, without additional “magic”.

Features and Device Agnostic

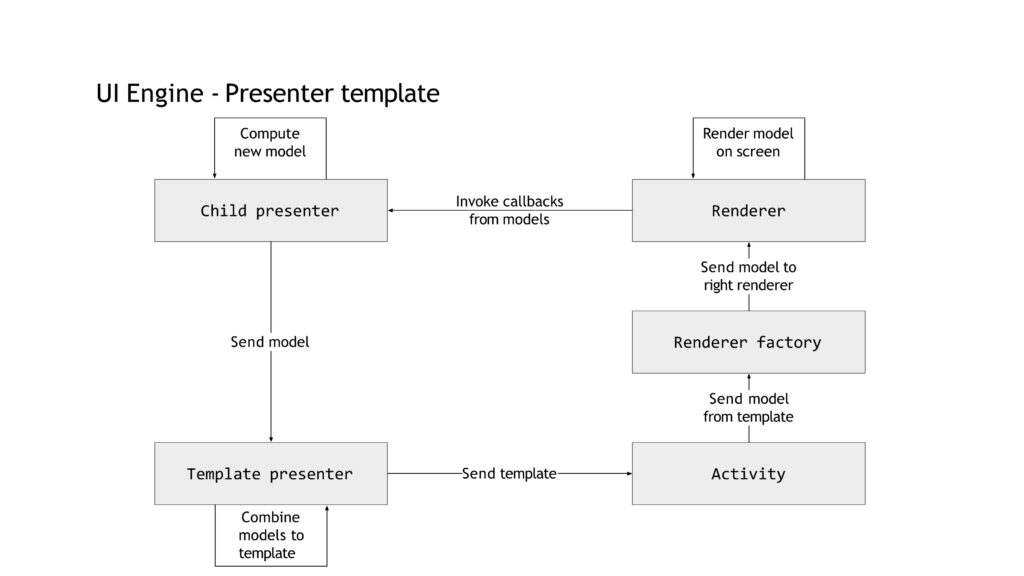

To make their presentation layer decoupled from Android components and device specifics to support potential future requirements, the team implemented a new “UI engine”. As far as I can tell, that’s just another name for presentation layer architectural pattern.

So, the new PLAP implements the following pipeline:

There is way so much to unpack here. If you’d like to get the full context provided in the talk, I invite you to watch it. In this section, I’ll just highlight the key points.

As far as I understand, the team encapsulated all the “application logic” in the hierarchy of “presenter” objects. “Application” logic, in this context, is the logic that decides what state should be rendered, and how to handle user interactions and device state changes.

The “UI logic” is encapsulated in “renderer” objects. “UI” logic is the logic that translates the state from the “presenter” into the actual user interface (basically, draws pixels) and captures user’s interactions with the screen. Importantly, even though UI logic captures user interactions, it shouldn’t handle them – that’s the responsibility of the “application logic”. Effectively, with this PLAP, Amazon developers acknowledged that Activities aren’t UI elements, and introduced “renderers” to fulfill this role. This is a major deviation from the MVx PLAPs common in the Android industry which designate Activities (and Fragments) as MVx “views”.

Even though extracting UI logic from Activities is a deviation from the standard approach in the Android ecosystem, this technique isn’t new. I employ a similar philosophy in “my” MVC PLAP, which I’ve been utilizing since 2015. In fact, it’s the realization that Activities and Fragments shouldn’t be UI elements that prompted me to write the very first article on this blog. Several years ago, I reviewed the PLAP utilized by Netflix Android application and found out that it also follows this paradigm. So, yeah, this is a big deal and kudos to Amazon devs for this fundamental realization.

That said, I can’t understand why the team decided to introduce so many abstractions into this PLAP. In theory, a “presenter” can have a reference to a specific “rendered” (a “view” in MVx terminology) and communicate with it directly. If more than one “rendered” needs to be driven by one “presenter”, this should be rather straightforward to accommodate. Unfortunately, the speaker didn’t explain why they didn’t let “presenters” and “renderers” communicate directly, what’s the purpose of having a hierarchy of “presenters” and what practical benefits “templates” provide. As it currently stands, I suspect that an act of over-engineering and over-abstracting could’ve taken place.

Avoid Thread and Memory Leaks

The speaker claimed that one of the goals of the refactoring project was to “cleanup some thread and memory leaks that we see, for example, with Coroutine scopes”.

As of today, I’m not aware of any intrinsic thread or memory leaks in Coroutines framework. Sure, concurrency is difficult and there is always a room for a bug, but I don’t think that you get a memory leak if you just create new Coroutine scopes. Even if I assume that there are intrinsic leaks inside Coroutines framework, I can’t see how the described changes actually resolve this problem. If such leaks exist, the correct approach would probably be to report them as bugs and see them fixed.

Therefore, either there is something about Coroutines that I’m not aware of (and the speaker should’ve clarified), or this “goal” is just a bullet point, not a real technical objective.

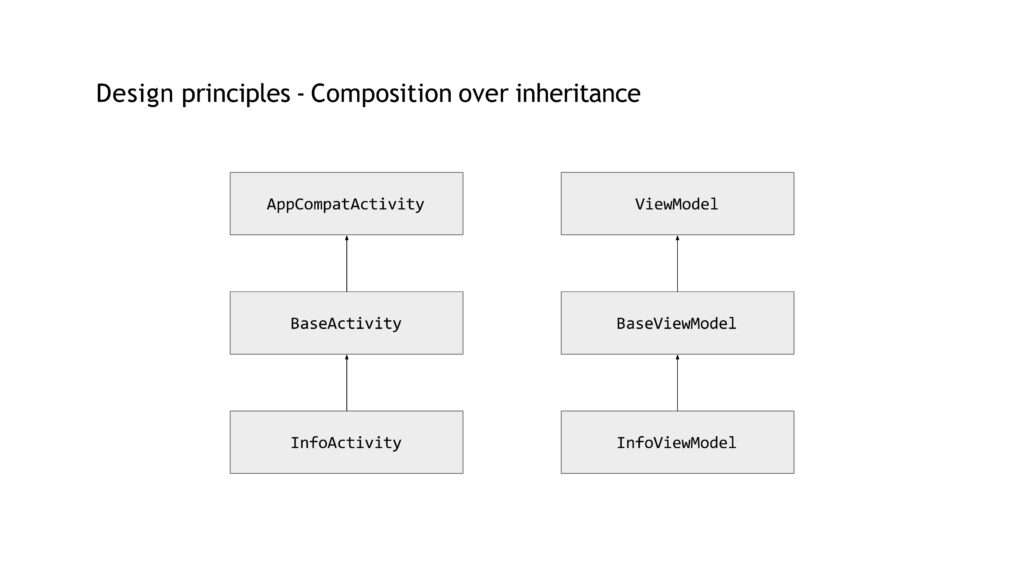

Composition Over Inheritance

This article becomes longer than I anticipated, so I’ll allow myself to address just one additional point from the talk: composition over inheritance.

The speaker claimed that “we wanted to use composition over inheritance because inheritance doesn’t scale well” and proceeded to show this diagram to explain what they saw as problematic inheritance:

To understand the point that I’m going to make, you need to know where the “composition over inheritance” paradigm comes from and what it says. The source of this “rule” is a book called Effective Java. It’s an amazing text, one of my all-time favorites and a member of the list of programming books that I recommend.

Item 18 in Effective Java (third edition) is called “Favor composition over inheritance”. The very first sentences state:

Inheritance is a powerful way to achieve code reuse, but it is not always the best tool for the job. Used inappropriately, it leads to fragile software.

Bloch, Effective Java

As you can see, the author doesn’t say that inheritance doesn’t scale well. Instead, he acknowledges that inheritance is a powerful tool which can be misused.

Later in that section of Effective Java you can read this:

Luckily, there is a way to avoid all the problems described above. Instead of extending an existing class, give your new class a private field that references an instance of the existing class. This design is called composition because the existing class becomes a component of the new one.

Bloch, Effective Java

And then:

Inheritance is appropriate only in circumstances where the subclass really is a subtype of the superclass.

Bloch, Effective Java

A discussion of the difference between subclass and subtype is outside the scope of this post (I covered this topic in my course about SOLID principles, in Liskov Substitution Principle module), but please take my word that the above image shows proper subtypes and constitutes a valid use case for inheritance.

Ironically, there was an example of inappropriate inheritance right in this talk. I’m talking about QeCommandReceiver class that you saw earlier in this article:

@SingleIn(AppScope::class)

@ContributesMultibindingScoped(AppScope::class)

class QeCommandReceiver @Inject constructor(

private val application: Application,

) : BroadcastReceiver(), Scoped {

override fun onEnterScope(scope: Scope) {

application.registerReceiver(this, IntentFilter("..."))

}

override fun onExitScope() {

application.unregisterReceiver(this)

}

override fun onReceive(context: Context, intent: Intent) {

when (intent.getStringExtra("cmd")) {

...

}

}

}

Note how this class extends BroadcastReceiver. Does it have to do that? Nope. Since the receiver is registered and unregistered internally, BroadcastReceiver could’ve been a private property inside QeCommandReceiver. This would allow removing onReceive method from the public API of this class because the application’s code doesn’t call this method at all.

Now, when it comes to complex topics like Dependency Injection, presentation layer architectural patterns, concurrency, etc., there will always be professional disagreements. What I see like an unfortunate anti-pattern, someone else can see as a best practice. In many cases, both interpretations are valid, depending on the context. However, I think that the discussion of inheritance in this talk doesn’t leave much space for interpretation: the information regarding inheritance provided to the audience was wrong. That’s very unfortunate.

Dependency Injection with Dagger and Hilt Course

Learn Dependency Injection in Android and master Dagger and Hilt dependency injection frameworks.

Go to CourseConclusion

In conclusion, following my analysis of the available information, I’d like to provide unsolicited advice to Amazon’s Driver Assistance Technology team.

It looks like the goals of this refactoring project weren’t processed and scrutinized sufficiently. Some of the project’s motivations seem somewhat irrelevant. It is unclear which alternatives were considered and what are the benefits of the chosen techniques and approaches. The current direction of this project will yield very complex codebase, which will be coupled to several complex frameworks. The barrier to entry for new developers will be very high. The fate of the project might end up being tied to just few developers who understand how everything fit together, thus reducing team’s “bus factor”.

My recommendation would be to pause the refactoring activities, review the motivation for the project, scrutinize team’s assumptions and predictions, evaluate alternative solutions and perform a meticulous trade-offs analysis. Then course correct, according to the outcome.

There is one important point that teams that refactor legacy code often miss: whatever you thought about the previous state of the codebase, at least you could refactor it. Hopefully, you could even refactor incrementally. Once you introduce many complex frameworks, abstract the hell out of everything and adopt unique and opinionated in-house patterns, you might lose that ability. The current tech leadership might have full confidence in their efforts, but, in several years, someone might be giving a talk about how they had to rewrite the entire app because the legacy code was too over-engineered and intercoupled.

Simplicity is often underappreciated.