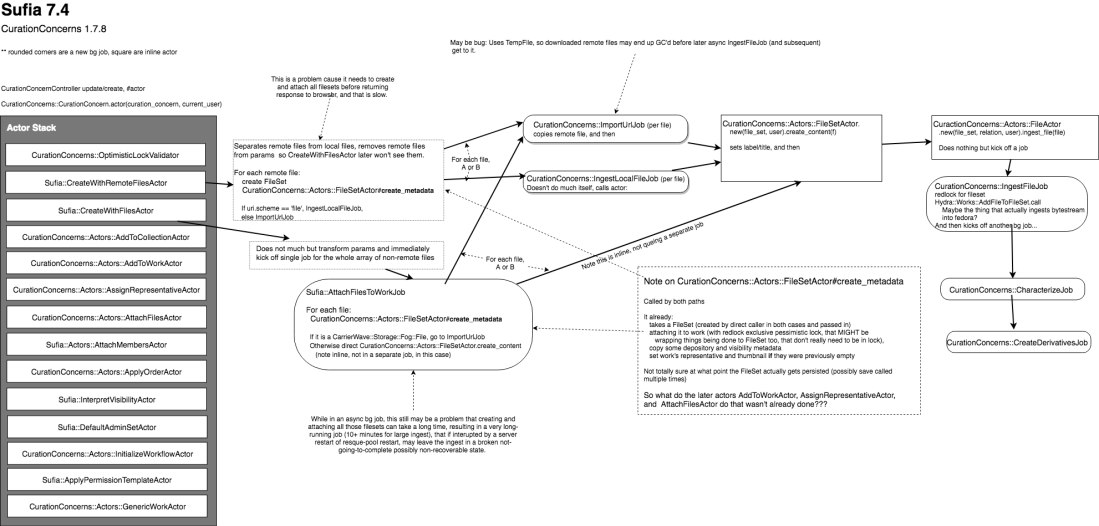

[With diagram of some Sufia ingest classes]

So, our staff that ingests files into our Sufia 7.4-based repository regularly needs to ingest dozens of 100MB+ TIFFs. For our purposes here, we’re considering uploading a bunch of “children” (in our case usually page images) of a single “work”, through the work edit page.

Trying to upload so much data through the browser ends up being very not great — even with the fancy JS immediately-upload-with-progress-bar code in Sufia. Takes an awful long time (hours; in part cause browsers’ 3-connections-per-host limit is a bottleneck compared to how much network bandwidth you could get), need to leave your browser open the whole time, and it actually locks up your browser from interacting with our site in any other tabs (see again 3-connections-per-host limit).

The solution would seem to be getting the files on some network-accessible storage, and having the app grab them right from there. browse_everything was already included in sufia, so we decided to try that. (Certainly another solution would be having workflow put the files on some network-accessible storage to begin with, but there were Reasons).

After a bunch of back-and-forth’s, for local reasons we decided to use AWS S3. And a little windows doohickey that gives windows users a “folder” they can drop things into, that will be automatically uploaded to S3. They’ve got to wait until the upload is complete before the things are available in the repo UI. (But it goes way faster than upload through browser, doesn’t lock up your browser, you don’t even need to leave your browser open, or your computer on at all, as the windows script is actually running on a local network server). When they do ask the sufia app to ingest, the sufia app (running on EC2) can get the files from S3 surprisingly quickly — in-region AWS network is pretty darn fast.

Browse_everything doesn’t actually work in stock Sufia 7.4

The first barrier: it turns out browse_everything doesn’t actually work in Sufia 7.4. The feature was broken.

(Normally when I do these things, I try to see what’s been fixed/changed in hyrax: To see if we can backport hyrax fixes; to get a sense of what ‘extra’ work we’re doing by still being in sufia; and to report to you all. But in this case, I ended up just getting overwhelmed and couldn’t keep track. I believe browse_everything “works” in Hyrax, but may still have problems/bugs, not sure, read on.)

ScholarSphere had already made browse-everything work with their sufia 7.x, by patching various parts of sufia, as I found out from asking in Slack and getting helpful help from PSU folks, so that could serve as a model. The trick was _finding_ the patches in the scholarsphere source code, but it was super helpful to not have to re-invent the wheel when I did. Sometimes after finding a problem in my app, I’d have a better sense of which files to look at in ScholarSphere for relevant patches.

Browse-everything S3 Plugin

Aside from b-e integration on the sufia side, the S3 plugin for browse-everything also had some problems. The name of the file(s) you choose in the b-e selector didn’t show up in the sufia edit screen after you selected it, because the S3 b-e adapter wasn’t sending it. I know some people have told me they’re using b-e with S3 in hyrax (the successor to sufia) — I’m not sure how this is working. But what I did is just copy-and-paste the S3 adapter to write a custom local one, and tell b-e to use that.

The custom local one includes a fix for the file name thing (PR’d to browse-everything), and also makes the generated S3 public links have a configurable expires_in (PR’d to browse-everything) — which I think you really want for S3 use with b-e, to keep them from timing out before the bg jobs get to them.

Both of those PR’s have been merged to b-e, but not included in a release yet. It’s been a while since a b-e release (As I write this latest b-e is 0.15.1 in Dec 2017; also can we talk about why 0.15.1 isn’t just re-released as 1.0 since it’s being used in prod all over the place?). Another fix in b-e which isn’t in a release yet is a fix for directories with periods in their names, which I didn’t notice until after we had gone live with our implementation, and then back-ported in as a separate PR.

Instead of back-porting this stuff in as patches, one could consider using b-e off github ‘master’. I really really don’t like having dependencies to particular un-released git trees in production. But the other blocker for that course of action is that browse-everything master currently has what I consider a major UX regression. So back-port patches it is, as I get increasingly despondent about how hard it’s gonna be to ever upgrade-migrate our sufia 7.4 app to (some version of) hyrax.

The ole temp file problem

Another problem is that the sufia ImportUrlJob creates some files as ruby Tempfiles, which means the file on disk can/will be deleted by Tempfile code whenever its reference gets garbage collected. But those files were expected to stay around for other code, potentially background jobs, to process. And bg jobs run in entirely different ruby processes, so they aren’t holding a reference to the Tempfile that would keep it from being deleted.

In some cases the other things expecting the file are able to re-download it from fedora if it’s not there (via the WorkingDirectory class), which is a performance issue maybe, but works. But in other cases, they just 500.

I’m not sure why that wasn’t a problem all along for us, maybe the S3 ingest changed timing to make it so? It’s also possible it still wasn’t a problem, and I just mistakenly thought it was causing the problems I was having. But I noticed it while code-reading to figure out the mysterious problems we were having, so I went ahead and fixed it in our custom ImportUrlJob.
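To make the Tempfile issue concrete, here’s a minimal plain-Ruby sketch. It is not the actual ImportUrlJob code or my fix; the method names `fragile_download` and `durable_download` are made up for illustration, and `close!` is used as a deterministic stand-in for what the Tempfile finalizer does whenever garbage collection gets around to it.

```ruby
require "tempfile"
require "tmpdir"
require "securerandom"

# Fragile: the download lives in a Tempfile. Once the Tempfile object is
# finalized the file is unlinked, so a path handed to another process can
# end up pointing at nothing. `close!` stands in for garbage collection
# here so the effect is deterministic.
def fragile_download(content)
  tmp = Tempfile.new("import-url")
  tmp.binmode
  tmp.write(content)
  tmp.flush
  path = tmp.path
  tmp.close! # closes and unlinks, as the finalizer eventually would
  path
end

# More durable: write an ordinary file in a directory we control.
# Nothing deletes it implicitly; whoever finishes with it must clean up.
def durable_download(content, dir:)
  path = File.join(dir, "import-url-#{SecureRandom.hex(8)}")
  File.binwrite(path, content)
  path
end

Dir.mktmpdir("ingest-demo") do |dir|
  gone = fragile_download("tiff bytes")
  kept = durable_download("tiff bytes", dir: dir)
  puts "fragile path exists? #{File.exist?(gone)}"
  puts "durable path exists? #{File.exist?(kept)}"
end
```

The trade-off with the second approach is that cleanup becomes your job: the last piece of code to use the file has to delete it, or you need some periodic sweep of that directory.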

Interestingly, while the exact problem I had was already fixed in Hyrax, a subsequent code-change in Hyrax re-introduced a similar Tempfile problem in another way, which was then fixed again by mbklein. That fix is only in Hyrax 2.1.0.

But then the whole Sufia/Hyrax ingest architecture…

At some point I had browse-everything basically working, but… if you tried to ingest say 100 files via S3, you would have to wait a long time for your browser to get a response back. In some cases timing out.

Why? Because while a bunch of things related to ingest are done in background jobs, the code in sufia tried to create all the FileSet objects and attach them to the Work in Sufia::CreateWithRemoteFilesActor, which ends up called in the foreground, during the request-response loop. (I believe this is the same in Hyrax, not positive). (This is not how “local”/“uploaded” files are handled).

And this is a very slow thing to do in Sufia. Whether that’s because of Fedora, ActiveFedora, the usage patterns of ActiveFedora in sufia/hyrax… I think it’s a combo of all of them. The code paths being used sometimes do slow things once-per-new-file that really could be done just once for the work. But even fixing that, it still ain’t really speedy.

At this point (or maybe after a day or two of unsuccessfully hacking things, I forget), I took a step back, and spent a day or two getting a handle on the complete graph of classes involved in this ingest process, and diagramming it.

You may download XML you can import into draw.io to edit, if you’d like to repurpose for your own uses, for updating for Hyrax, local mods, whatever.

This has changed somewhat in Hyrax, but I think many parts are still substantially the same.

A few thoughts.

If I’m counting right, we have nine classes/objects involved in: Creating some new “child” objects, attaching an uploaded file to each one (setting a bit of metadata based on original file name), and then attaching the “child” objects to a parent (copying a bit of metadata from parent). (This is before any characterization or derivatives).

This seems like a lot. If we were using ActiveRecord and some AR file attachment library (CarrierWave, or I like the looks of shrine) this might literally be less than 9 lines of code.

Understanding why it ended up this way might require some historical research. My sense is that: A) The operations being done are so slow (again, whether due to Fedora, AF, or Sufia architecture) that things had to be broken up into multiple jobs that might not have to be otherwise. B) A lot of stuff was added by people really not wanting to touch what was already there (cause they didn’t understand it, or cause it was hard to get a grasp on what backwards incompat issues might arise from touching it), so new classes were added on top to accommodate new use cases even if a “greenfield” implementation might result in a simpler object graph (and less code duplication, more DRY).

But okay, it’s what we got in Sufia. Another observation though is that the way ‘local’ files (ie “uploaded” files, via HTTP, to a dir the web app can access) and ‘remote’ files (browse-everything) are handled is not particularly parallel/consistent, the work is divided up between classes in pretty different ways for the two paths. I suspect this may be due to “B” above.

And if you get into the implementations of various classes involved, there seem to be some things being done _multiple times_ across different classes, the same things. Which doesn’t help when the things are very slow (if they involve saving a Work). Again I suspect (B) above.

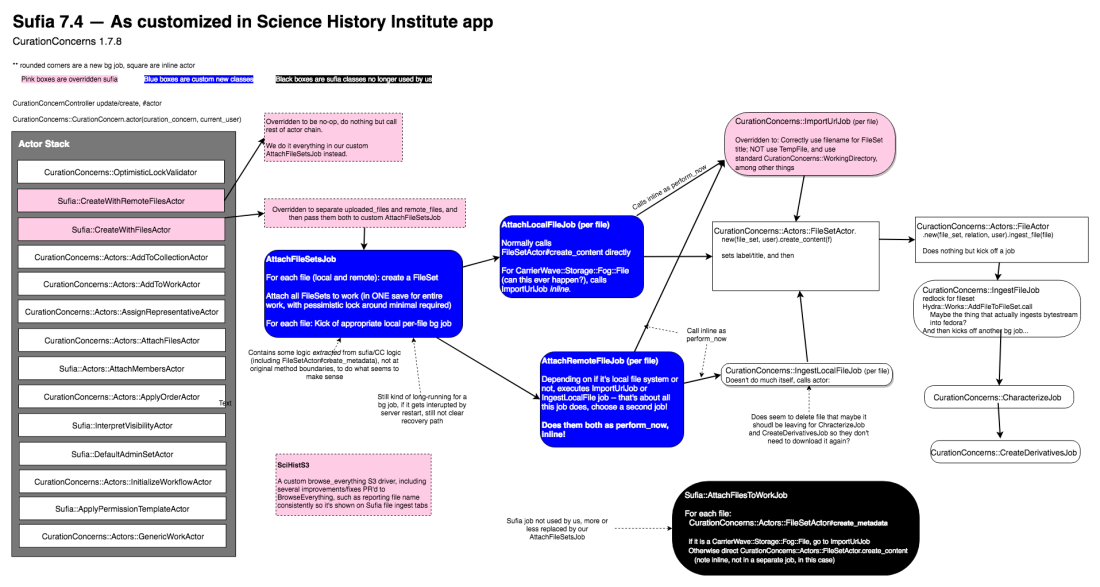

So, okay, at this point I hubristically thought, okay, let’s just rewrite some parts of this to make more sense, at least to my view of what makes sense. (What was in Hyrax did not seem to me to be substantially different in the ways relevant here). Partially cause I felt it would be really hard to figure out and fix the remaining bugs or problems in the current code, which I found confusing, and its lack of parallelism between local/remote file handling meant a problem could be fixed in one of those paths and not in the other which did things very differently.

Some of my first attempts involved not having a class that created all the new “filesets” and attached them to the parent work. If we could just have a job for each new file, that created a fileset for that file and attached it to the work, we’d be fitting into the ActiveJob architecture better — where you ideally want a bunch of fairly small and quick and ideally idempotent jobs, not one long-running job doing a lot of things.

The problem I ran into there, is that every time you add a member to a ‘Work’ in the Sufia/Fedora architecture, you actually need to save that Work, and do so by updating a single array of “all the members”. So if a bunch of jobs are running concurrently trying to add members to the same Work at once, they’re going to step on each other’s toes. Sufia does have a “locking” mechanism in place (using redlock), so they shouldn’t actually overwrite each other’s data. But if they each have to wait in line for the lock, the concurrency benefits are significantly reduced, and it still wouldn’t really be playing well with ActiveJob architecture, which doesn’t expect jobs to be just sitting there waiting for a lock, blocking the workers. Additionally, in dev, I was sometimes getting some of these jobs timing out trying to get the lock (which may have been due to using SQLite3 in dev, and not an issue if I was using pg, which I’ve since switched to in dev to match prod).
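Here’s a toy illustration of why the lock is needed, and what it costs. This is plain Ruby, not Sufia or ActiveFedora code: `ToyWorkStore` is a hypothetical stand-in for a Work whose member list is one array rewritten on every save, and a `Mutex` plays roughly the role redlock plays across processes.

```ruby
# Toy stand-in for a Work whose members are stored as one array that is
# rewritten on every save. Hypothetical; not Sufia or ActiveFedora code.
class ToyWorkStore
  def initialize
    @members = []
    @lock = Mutex.new
  end

  # Returns a snapshot, the way a job would load the Work.
  def load_members
    @members.dup
  end

  # Overwrites the whole member list, the way saving the Work does.
  def save_members(members)
    @members = members.dup
  end

  # Read-modify-write under a lock, so no snapshot can go stale.
  def add_member_locked(id)
    @lock.synchronize { save_members(load_members + [id]) }
  end
end

# Without a lock: two jobs load the same snapshot, then each saves.
unlocked = ToyWorkStore.new
snapshot_a = unlocked.load_members
snapshot_b = unlocked.load_members
unlocked.save_members(snapshot_a + ["fileset-a"])
unlocked.save_members(snapshot_b + ["fileset-b"]) # clobbers job A's write
puts unlocked.load_members.inspect

# With a lock: nothing is lost, but the jobs take turns.
locked = ToyWorkStore.new
%w[fileset-a fileset-b fileset-c].map { |id|
  Thread.new { locked.add_member_locked(id) }
}.each(&:join)
puts locked.load_members.sort.inspect
```

In the unlocked half, only “fileset-b” survives. In the locked half all three members make it in, but each job had to hold the lock for its whole load-and-save, which is exactly the part that is slow in the real stack.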

After a few days of confusion and banging my head against the wall here, I returned to something more like stock sufia where there is one mega-job that creates and associates all the filesets. But it does it in some different ways than stock sufia, in a couple places having to use “internal” Sufia API — with the goal of _avoiding_ doing slow/expensive things multiple times (save the work once with all new filesets added as members, instead of once for each member as stock code did), and getting the per-file jobs queued as soon as possible under the constraints.
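The “save the work once” idea is easy to show with a toy model that does nothing but count saves. This is a sketch under made-up names (`ToyWork`, `attach_one_at_a_time`, `attach_in_one_save`), not the internal Sufia API I actually had to call, and `save!` here is just a counter standing in for the expensive Fedora round trip.

```ruby
# Toy model that only counts saves. Hypothetical; not Sufia or
# ActiveFedora code.
class ToyWork
  attr_reader :members, :save_count

  def initialize
    @members = []
    @save_count = 0
  end

  def save!
    @save_count += 1 # in the real stack this is the expensive part
  end
end

# Stock-like pattern: attach and save once per new member.
def attach_one_at_a_time(work, file_set_ids)
  file_set_ids.each do |id|
    work.members << id
    work.save!
  end
  work
end

# Batched pattern: attach everything, then save the work once.
def attach_in_one_save(work, file_set_ids)
  work.members.concat(file_set_ids)
  work.save!
  work
end

ids = (1..100).map { |n| "fileset-#{n}" }
per_member = attach_one_at_a_time(ToyWork.new, ids)
batched = attach_in_one_save(ToyWork.new, ids)
puts "per-member saves: #{per_member.save_count}"
puts "batched saves: #{batched.save_count}"
```

Both paths end with the same 100 members attached; the difference is 100 saves of the parent versus one.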

I also somewhat reduced the number of different bg jobs. There was at least one place in stock code where a bg job existed only to decide which of two other possible bg jobs it really wanted to invoke, and then perform_later on them. I had my version of a couple jobs do a perform_now instead — I wanted to re-use the logic locked in the two ActiveJob workers being dispatched, but there was no reason to have a job that existed only for milliseconds whose purpose was only to queue up another job, it could call that existing logic synchronously instead.
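A rough sketch of that difference, in plain Ruby so it runs without Rails. `ToyJob` is a hypothetical stand-in that mimics only the `perform_later`/`perform_now` class methods of ActiveJob, and the job class names are invented for the example, not the real Sufia job names.

```ruby
# Minimal stand-in for an ActiveJob-style base class, so the example runs
# without Rails. Everything here is hypothetical.
class ToyJob
  @queue = []

  class << self
    attr_reader :queue
  end

  def self.perform_later(*args)
    ToyJob.queue << [self, args] # a worker would pick this up later
    nil
  end

  def self.perform_now(*args)
    new.perform(*args)
  end
end

class IngestLocalFileJob < ToyJob
  def perform(path)
    "ingested local #{path}"
  end
end

class IngestRemoteFileJob < ToyJob
  def perform(url)
    "ingested remote #{url}"
  end
end

# Picks which of the two jobs handles a source.
def job_for(source)
  source.start_with?("http://", "https://") ? IngestRemoteFileJob : IngestLocalFileJob
end

# Stock-like: a job whose only work is to enqueue another job.
class DispatchJob < ToyJob
  def perform(source)
    job_for(source).perform_later(source)
  end
end

# Alternative: we're already in a worker, so run the chosen logic inline.
class DispatchInlineJob < ToyJob
  def perform(source)
    job_for(source).perform_now(source)
  end
end

DispatchJob.perform_now("https://example.org/page1.tiff")
puts "jobs still waiting in the queue: #{ToyJob.queue.length}"
puts DispatchInlineJob.perform_now("https://example.org/page1.tiff")
```

The first dispatcher finishes almost instantly but leaves the real work sitting in the queue for another worker pickup; the second re-uses the same job class’s logic and is done when it returns.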

I also refactored to try to make “uploaded” (local) vs “remote” file ingest much more consistently parallel — IMO it makes it easier to get the code right, with less code, and easier to wrap your head around.

Here’s a diagram of where my architecture ended up:

Did it work?

So I began thinking we had a solution to our staff UX problem that would take “a couple days” to implement, because it was “already a Sufia feature” to use browse-everything from S3.

In fact, it took me 4-5 weeks+ (doing some other parts of my job in those weeks, but with this as the main focus). Here’s the PR to our local app.

It involved several other fixes and improvements that aren’t mentioned in this report.

We found several bugs in our implementation, or in sufia/curation_concerns, both before we actually merged and after we merged (even though we thought we had tested all the use cases extensively, there were some we hadn’t tried until we got to real world data, like the periods-in-directory-names b-e bug).

In general, I ran into something I’ve run into before: not only does sufia have lots of parts, but they are often implicitly tightly-coupled, assuming that other parts are doing things in a certain way, where if the other things change that certain way, it breaks the first things, with none of these assumptions documented (or probably intentional or even conscious from the code writers).

Another thing I think happens, is that sometimes there can be bugs in ActiveFedora, but the particular way the current (eg) Sufia implementation is implemented doesn’t hit them, but you change the code in certain ways that probably ought to be fine, and now they hit bugs that were actually always there, but nobody noticed since the shared implementation didn’t hit them.

Some time after we deployed the new feature, we ran into a bug that I eventually traced to an ActiveFedora bug (one I totally don’t understand myself), which had already been fixed and available in AF 11.5.2 (thanks so much to Tom Johnson for, months ago, backporting the fix to AF 11.x, not just in 12.x). We had been running ActiveFedora 11.1.6. After some dependency hell of getting a consistent dependency tree with AF 11.5.2, it seems to have fixed the problem without breaking anything else or requiring any other code changes (AF appears to have not actually introduced backwards incompats between these minor version releases, which is awesome).

But what’s a mystery to me (well, along with what the heck is up with that bug, which I don’t understand at all in the AF source), is why we didn’t encounter this bug before, why were the functions working just fine with AF 11.1.6 until recently? It’s a mystery, but my wild guess is that the changes to order and timing of how things are done in my ingest refactor made us hit an AF bug that the previous stock Sufia usage had not.

I can’t hide it cause I showed you the PR, I did not write automated tests for the new ingest functionality. Which in retrospect was a mistake. Partially I’m not great at writing tests; partially because when I started it was so experimental and seemed like it could be a small intervention, but also implementation kept changing so having to keep changing tests could have been a slowdown. But also partially cause I found it overwhelming to figure out how to write tests here, it honestly gave me anxiety to think about it. There are so many fairly tightly coupled moving parts, that all had to change, in a coordinated fashion, and many of them were ActiveJob workers.

Really there’s probably no way around that but writing some top-level integration tests, but those are so slow in sufia, and difficult to write sometimes too. (Also we have a bunch of different paths that probably all need testing; one of our bugs only showed up when someone had chosen a ‘format’ option in the ‘batch create’ screen, something I hadn’t been thinking to test manually and wouldn’t have thought to test automated-ly either. Likewise the directory-containing-a-period bug.) And the more separate paths to test, the more tests, and when you’re doing it in integration tests… your suite gets so so slow. But we do plan to add at least some happy path integration tests, we’ve already got a unit of work written out and prioritized for soonish. Cause I don’t want this to keep breaking if we change code again, without being caught by tests.

So… did it work? Well, our staff users can ingest from S3 now, and it seems to have successfully made their workflow much more efficient, productive, and less frustrating, so I guess I’d say yes!

What does this say about still being on Sufia and upgrade paths?

As reported above, I did run into a fair number of bugs in the stack that would have been fixed if we had been on Hyrax already. Whenever this happens, it rationally makes me wonder “Is it an inefficient use of our developer time that we’re still on Sufia dealing with these, should we have invested developer time in upgrading to Hyrax already?”

Until roughly March 2018, that wouldn’t have really been an option, wasn’t even a question. At an earlier point in the two-three-ish year implementation process (mostly before I even worked here), we had been really good at keeping our app up to date with new dependency releases. Which is why we are on Sufia 7.4 at least.

But at some point we realized that getting off that treadmill was the only way we were going to hit our externally-imposed deadlines for going live. And I think we were right there. But okay, since March, it’s more of an open book at the moment — and we know we can’t stay on Sufia 7.4.0 forever. (It doesn’t work on Rails 5.2 for one, and Rails before 5.2 will be EOL’d before too long). So okay the question/option returns.

I did spend 4-5 weeks on implementing this in our sufia app. I loosely and roughly and wild-guessedly “estimate” that upgrading from our Sufia 7.4 app all the way to Hyrax 2.1 would take a lot longer than 4-5 weeks. (2, 3, 4 times as long?)

But of course this isn’t the only time I’ve had to fight with bugs that would have been fixed in Hyrax, it adds up.

But contrarily, quite a few of these bugs or other architecture issues corrected here are not fixed in Hyrax yet either. And a couple are fixed in Hyrax 2.1.0, but weren’t in 2.0.0, which was where Hyrax was when I started this. And probably some new bugs too. Even if we had already been on Hyrax before I started looking at “ingest from S3”, it would not have been the “couple day” implementation I naively assumed. It would have been somewhere in between that and the 4-5 week+ implementation, not really sure where.

Then there’s the fact that even if we migrate/upgrade to Hyrax 2.1 now… there’s another big backwards-incompatible set of changes slated to come down the line for a future Hyrax version already, to be based on “valkyrie” instead.

So… I’m not really sure. And we remain not really sure what’s going to become of this Sufia 7.4 app that can’t just stay on Sufia 7.4 forever. We could do the ‘expected’ thing and upgrade to hyrax 2.1 now, and then upgrade again when/if future-valkyrie-hyrax comes out. (We could also invest time helping to finish future-valkyrie-hyrax). Or we could actually contribute code towards a future (unexpected!) Sufia release (7.5 or 8 or whatever) that works on Rails 5.2 — not totally sure how hard that would be.

Or we could basically rewrite the app (copying much of the business logic of course, easier in business logic we managed to write in ways less coupled to sufia) — either based on valkyrie-without-sufia (as some institutions have already done for new apps, I’m not sure if anyone has ported a sufia or hyrax app there yet; it would essentially be an app rewrite to do so) or…. not. If it would be essentially an app rewrite to go to valkyrie-without-hyrax anyway (and unclear at this point how close to an app rewrite to go to a not-yet-finished future hyrax-with-valkyrie)…

We have been doing some R&D development into what an alternate digital collections/repo architecture could look like, not necessarily based on Valkyrie — my attr_json gem is part of that, although doesn’t demonstrate a commitment to actually use that gem in the future here at MPOW, we’re just exploring different things.

Tom was recently talking about wanting to try for a backwards-compatible hyrax-on-valkyrie.

Perhaps ironically (I never know if I’m using that word right), all the internal changes and overrides to Sufia we’ve done to, well, make the app work for our needs (of which this is one major example and one of the biggest, but there are dozens of others) are going to make the upgrade treadmill have a LOT steeper slope for us.

It may be that most of that would have to be encountered in just getting to hyrax 1.x or 2.x, before the additional jump to future hyrax-valkyrie (I know a hyrax-valkyrie is in progress but not complete, I am not sure of the status beyond this myself). Although in the case of looking at 3-4 major version jumps each with their own peccadilloes, I’d seriously consider trying to do it all in one go (because it might result in less developer hours overall than refactoring code to API’s that are gonna change in the next jump anyway, several times), which I think would end up looking essentially like a rewrite anyway.

“Backwards compatible” is one thing if you haven’t done much local customization. I’m not sure how many sufia/hyrax apps are in a state of little to no local customization. If you have done some local customization (say, anything involving writing ruby code beyond “configuration”, or maybe slightly more constrained, use any samvera stack class/method API that isn’t in the tutorials) — I’m not sure it’s totally clear what “backwards compatible” means exactly, what sorts of uses of class/method-level API should be considered supported and public API, and what sorts aren’t. (For a concrete example… this craziness I did above, where my custom code both expects to be called by engine code and then calls internal-ish engine code in turn?) I expect most (maybe not all) people’s customized apps are not gonna just run with no breaks even after a ‘backwards compatible’ upgrade, but there are certainly degrees of pain.

I appreciate (for real) Tom’s intention/interest in minimizing ’em, and I know if anyone can pull off something that ends up being reasonably backwards compatible, he can. But I’m not counting my chickens before they hatch, as they say.
