Adding Zonal Resiliency to Etsy’s Kafka Cluster: Part 2

The first time I performed a live upgrade of Etsy's Kafka brokers, it felt exciting. There was a bit of an illicit thrill in taking down a whole production system and watching how all the indicators would react. The second time I did the upgrade, I was mostly just bored. There’s only so much excitement to be had staring at graphs and looking at patterns you've already become familiar with.

Platform upgrades made for a tedious workday, not to mention a full one. I work on what Etsy calls an “Enablement” team -- we spend a lot of time thinking about how to make working with streaming data a delightful experience for other engineers at Etsy. So it was somewhat ironic that we would spend an entire day staring at graphs during this change, a developer experience we would not want for the end users of our team’s products.

We hosted our Kafka brokers in the cloud on Google's managed Kubernetes. The brokers were deployed in the cluster as a StatefulSet, and we applied changes to them using the RollingUpdate strategy. The whole upgrade sequence for a broker went like this:

- Kubernetes sends a SIGTERM signal to broker-pod-n

- The Kafka process shuts down cleanly, and Kubernetes deletes the broker-pod

- Kubernetes starts a new broker-pod with any changes applied

- The Kafka process starts on the new broker-pod, which begins recovering by (a) rebuilding its indexes, and (b) catching up on any replication lag

- Once recovered, a configured readiness probe marks the new broker as Ready, signaling Kubernetes to take down broker-pod-(n-1).

Kafka is an important part of Etsy's data ecosystem, and broker upgrades have to happen with no downtime. Topics in our Kafka cluster are configured to have three replicas each to provide us with redundancy. At least two of them have to be available for Kafka to consider the topic “online.” We could see to it that these replicas were distributed evenly across the cluster in terms of data volume, but there were no other guarantees around where replicas for a topic would live. If we took down two brokers at once, we'd have no way to be sure we weren't violating Kafka's two-replica-minimum rule.
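To make the two-replica rule concrete, here is a minimal sketch of the check we could not make with confidence at the time. The partition names, broker ids, and the `partitions_at_risk` helper are all hypothetical and exist only for illustration; they are not taken from our tooling.

```python
from typing import Dict, Iterable, List


def partitions_at_risk(
    assignments: Dict[str, List[int]],
    brokers_down: Iterable[int],
    min_available: int = 2,
) -> List[str]:
    """Return partitions that would drop below min_available live replicas."""
    down = set(brokers_down)
    at_risk = []
    for partition, replicas in assignments.items():
        live = [broker for broker in replicas if broker not in down]
        if len(live) < min_available:
            at_risk.append(partition)
    return sorted(at_risk)


# Hypothetical placement: partition name -> ids of brokers holding a replica.
assignments = {
    "orders-0": [0, 1, 2],
    "orders-1": [1, 2, 3],
    "clicks-0": [3, 4, 5],
}

print(partitions_at_risk(assignments, [1]))     # one broker down: nothing at risk
print(partitions_at_risk(assignments, [1, 2]))  # two brokers down: two partitions at risk
```

With replicas placed without regard to location, any pair of brokers might share a partition, so the only restart set that is always safe is a single broker.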

To ensure availability for the cluster, we had to roll out changes one broker at a time, waiting for recovery between each restart. Each broker took about nine minutes to recover. With 48 Kafka brokers in our cluster, that meant seven hours of mostly waiting.

Entering the Multizone

In the fall of 2021, Andrey Polyakov led an exciting project that changed the design of our Kafka cluster to make it resilient to a full zonal outage. Where Kafka had formerly been deployed completely in Google’s us-central1-a zone, we now distributed the brokers across three zones, ensuring that an outage in any one zone would not make the entire cluster unavailable.

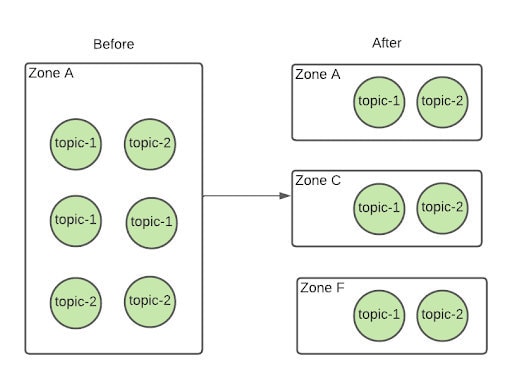

In order to ensure zonal resilience, we had to move to a predictable distribution of replicas across the cluster. If a replica for a topic was on a broker located in zone A, we could be sure the second replica was on a broker in zone C with the third replica in zone F.

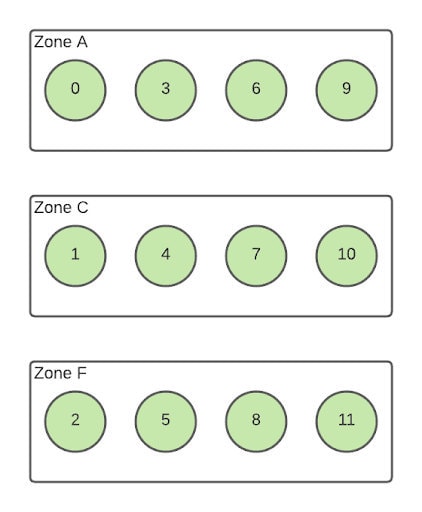

This new multizone Kafka architecture wiped out the one-broker-at-a-time limitation on our upgrade process. We could now take down every single broker in the same zone simultaneously without affecting availability, meaning we could upgrade as many as twelve brokers at once. We just needed to find a way to restart the correct brokers.

Kubernetes natively provides a means to take down multiple pods in a StatefulSet at once, using the partitioned rolling update strategy. That mechanism, however, requires pods to come down in sequential order by pod number, whereas our multizonal architecture placed every third broker in the same zone. We could have reassigned partitions across brokers, but that would have been a lengthy and manual process, so we opted instead to write some custom logic to control the updates.
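The mismatch is easy to see in a small sketch. It assumes a hypothetical nine-broker cluster in which pod ordinal n lives in zone n mod 3; the zone ordering and the helper names are illustrative assumptions. The one Kubernetes behavior it relies on is that a partitioned rolling update touches only pods whose ordinal is greater than or equal to the partition value.

```python
from typing import List

ZONES = ["A", "C", "F"]  # zone labels; the ordinal-to-zone ordering is assumed
BROKER_COUNT = 9         # small hypothetical cluster, for illustration only


def zone_of(ordinal: int) -> str:
    """Assumed layout: every third broker shares a zone."""
    return ZONES[ordinal % len(ZONES)]


def brokers_in_zone(zone: str) -> List[int]:
    """Ordinals of all brokers placed in the given zone."""
    return [n for n in range(BROKER_COUNT) if zone_of(n) == zone]


def partitioned_update_targets(partition: int) -> List[int]:
    """Pods a partitioned rolling update would touch: ordinals >= partition."""
    return [n for n in range(BROKER_COUNT) if n >= partition]


targets = partitioned_update_targets(6)
print(targets, [zone_of(n) for n in targets])  # a contiguous range spans all three zones
print(brokers_in_zone("A"))                    # one zone's brokers are not contiguous
```

Any contiguous range of three or more ordinals includes brokers from every zone, which is exactly the set we must not restart together.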

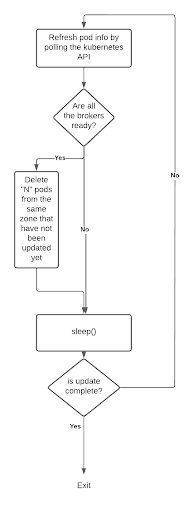

We changed the updateStrategy on the StatefulSet Kubernetes object for Kafka to OnDelete. This means that once an update is applied to a StatefulSet, Kubernetes will not automatically start rolling out changes to the pods, but will expect users to explicitly delete the pods to roll out their changes. The main loop of our program essentially finds some pods in a zone that haven’t been updated and updates them. It waits until the cluster has recovered, and then moves on to the next batch.
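A loop of that shape might look roughly like the sketch below. It is an illustration under stated assumptions rather than our actual program: the Kubernetes interactions are passed in as plain callables (`is_updated`, `delete_pod`, `wait_for_recovery`, all hypothetical names) so the control flow can be read and run on its own. In a real cluster, one plausible way to detect a stale pod is to compare its `controller-revision-hash` label with the StatefulSet's `status.updateRevision`.

```python
from typing import Callable, Dict, List, Set


def rollout_zone_by_zone(
    pod_zones: Dict[str, str],
    is_updated: Callable[[str], bool],
    delete_pod: Callable[[str], None],
    wait_for_recovery: Callable[[], None],
    batch_size: int = 3,
) -> List[List[str]]:
    """Delete stale pods one zone at a time, batch_size pods per batch."""
    batches: List[List[str]] = []
    for zone in sorted(set(pod_zones.values())):
        while True:
            stale = sorted(
                pod for pod, z in pod_zones.items()
                if z == zone and not is_updated(pod)
            )
            if not stale:
                break  # this zone is done; move on to the next one
            batch = stale[:batch_size]
            for pod in batch:
                delete_pod(pod)  # with OnDelete, deletion triggers the update
            wait_for_recovery()  # block until the cluster is healthy again
            if any(not is_updated(pod) for pod in batch):
                raise RuntimeError(f"pods did not come back updated: {batch}")
            batches.append(batch)
    return batches


# Dry run against a fake six-pod cluster; deleting a pod marks it updated.
updated: Set[str] = set()
pod_zones = {f"kafka-{n}": "ACF"[n % 3] for n in range(6)}
batches = rollout_zone_by_zone(
    pod_zones,
    is_updated=lambda pod: pod in updated,
    delete_pod=updated.add,
    wait_for_recovery=lambda: None,
    batch_size=2,
)
print(batches)
```

Because every batch is drawn from a single zone, no batch can take down more than one replica of any partition, whatever the batch size.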

We didn’t want to have to run something like this from our local machines (what if someone has a flaky internet connection?), so we decided to dockerize the process and run it as a Kubernetes batch job. We set up a small make target to deploy the upgrade script, with logic that would prevent engineers from accidentally deploying two versions of it at once.

Performance Improvements

We tested our logic out in production, and with a parallelism of three we were able to finish upgrades in a little over two hours. In theory we could go further and restart all the Kafka brokers in a zone en masse, but we have shied away from that. Part of broker recovery involves catching up with replication lag: i.e., reading data from the brokers that have been continuing to serve traffic. A restart of an entire zone would mean increased load on all the remaining brokers in the cluster as they saw their number of client connections jump -- we would find ourselves essentially simulating a full zonal outage every time we updated.
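The durations follow from simple arithmetic. The sketch below assumes that every batch recovers in the same nine minutes or so regardless of its size, which is a simplification; the `upgrade_minutes` helper is illustrative only.

```python
import math


def upgrade_minutes(brokers: int, parallelism: int, recovery_minutes: float = 9) -> float:
    """Estimated rollout time: number of batches times per-batch recovery time."""
    batches = math.ceil(brokers / parallelism)
    return batches * recovery_minutes


for parallelism in (1, 3):
    minutes = upgrade_minutes(48, parallelism)
    print(f"parallelism={parallelism}: {minutes:.0f} min (~{minutes / 60:.1f} h)")
```

At one broker per batch this gives 432 minutes, or about seven hours; at three per batch it gives 144 minutes, which lines up with the "little over two hours" we observed.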

It’s pretty easy just to look at the reduced duration of our upgrades and call this project a success -- we went from spending seven hours rolling out changes to about two. And in terms of our total investment of time -- time coding the new process vs. time saved on upgrades -- I suspect that by now, eight months in, we’ve about broken even. But my personal favorite way to measure the success of this project is centered around toil -- and every upgrade I’ve performed these last eight months has been quick, peaceful, and over by lunchtime.