How to Perform an SEO Audit for Your Website

Maybe you didn’t think you’d have to worry about something like SEO. That’s what writers and SEO pros are for. But a lot of what you do when you build and maintain your digital product contributes to SEO. As such, SEO audits should become a regular part of your website maintenance routine.

Search engine optimization is an ever-evolving thing. While there’s a lot that designers and developers can do when initially building a website to help it perform well in search results, SEO requires just as much maintenance as a website itself does in order for it to be effective.

If you’re taking the time to audit your website regularly—for performance, security and other general maintenance—that alone will help keep your SEO in good shape. However, there’s more you can do as a web designer or developer to maximize your results.

In this post, we’ll take a look at some of the things that you can do in your website audits as well as tools you can use to improve your website’s ability to rank.

Website SEO Audits for Web Designers & Developers

As a web designer or developer, no one is going to expect you to know how to write SEO content. What you should be able to do, however, is to manage the technical SEO side of a website and also be able to identify other areas (mainly on-page SEO) where the site has fallen short. You can then outsource those optimizations to a writer or SEO specialist to handle.

Let’s have a look at what kinds of things you should be including in your website SEO audits going forward:

1. Run Your Site Through Core Web Vitals and Look for Technical SEO Issues

The best place to start is with technical SEO. Google’s web.dev Measure tool is a quick way to evaluate how your site is doing on this front.

The tool is going to provide you with four scores:

- Performance (i.e., loading speed)

- Accessibility

- Best practices (e.g., HTTPS, image quality, errors, etc.)

- SEO (mostly on-page SEO related to crawlability of the content)

Although green is what you want to aim for, I’d tell you to go easy on yourself when it comes to Performance. I’ve run some of the web’s top websites through this tool, and many of them score in the yellow and red. It’s a tough category to get green in, especially since Google evaluates websites based on their mobile experience.

What’s most important is that you consider the issues that Google has detected and then attempt to fix each of them. If you click the down-arrow next to the noted problems, Google will provide you with a tip to help you do so.
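If you want to record these four scores from one audit to the next instead of eyeballing them, the same Lighthouse categories are also exposed by Google’s PageSpeed Insights API (v5). The sketch below assumes that API’s `runPagespeed` endpoint and its `lighthouseResult.categories` response shape; the helper names are hypothetical, and the color bands follow Lighthouse’s 0-49 / 50-89 / 90-100 ranges.

```python
from urllib.parse import urlencode

# PageSpeed Insights API v5 endpoint (assumed); it runs Lighthouse and returns
# the same four category scores that the Measure tool shows.
PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"
CATEGORIES = ["performance", "accessibility", "best-practices", "seo"]


def build_psi_url(page_url: str, strategy: str = "mobile") -> str:
    """Build a request URL asking for all four Lighthouse categories."""
    params = [("url", page_url), ("strategy", strategy)]
    params += [("category", c) for c in CATEGORIES]
    return f"{PSI_ENDPOINT}?{urlencode(params)}"


def band(score: float) -> str:
    """Map a 0-1 Lighthouse score to its color band.

    Lighthouse: 0-49 red, 50-89 orange (the "yellow" in this article), 90-100 green.
    """
    pct = round(score * 100)
    if pct >= 90:
        return "green"
    if pct >= 50:
        return "yellow"
    return "red"


def summarize(psi_json: dict) -> dict:
    """Return {category: (score out of 100, band)} from a decoded PSI response.

    Categories with a missing or null score are skipped.
    """
    cats = psi_json["lighthouseResult"]["categories"]
    return {
        c: (round(cats[c]["score"] * 100), band(cats[c]["score"]))
        for c in CATEGORIES
        if c in cats and cats[c].get("score") is not None
    }
```

To use it against a live page, fetch `build_psi_url(...)` with `urllib.request.urlopen`, pass the decoded JSON to `summarize()`, and store the result with the audit date so you can compare runs. Google expects an API key (a `key` parameter) for anything beyond occasional requests.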

2. Confirm That All Pages Are Indexed

One of the mistakes I’ve seen people make is connecting their websites to Google (via Analytics and Search Console) and then assuming that’s enough to get their content indexed. However, a messy site architecture can prevent that from happening, and so can an incorrectly submitted sitemap.

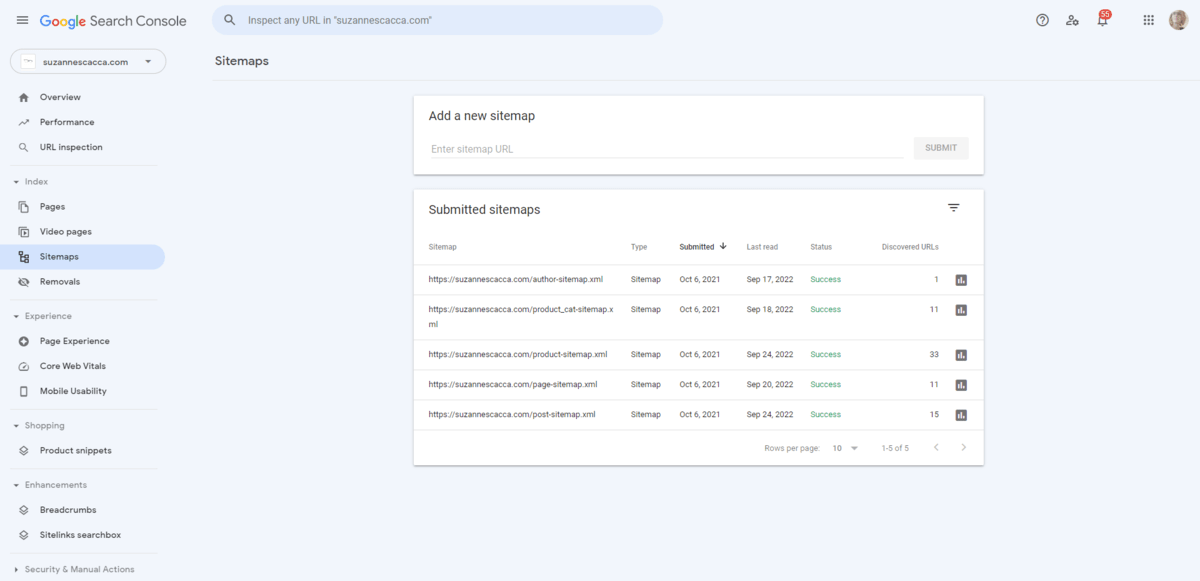

So the next thing to do is turn your attention to Google Search Console. Make your way over to the Sitemaps tab:

Here you can see a list of the various sitemaps I’ve submitted to Google Search Console. There’s one for:

- Author

- Product categories

- Products

- Pages

- Posts
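Before you dig into Search Console’s numbers, it can help to confirm that each sitemap is well-formed and to count the URLs it lists, so you have something to compare against Google’s report. This is a minimal sketch using Python’s standard XML parser and the sitemaps.org namespace; the function names are hypothetical, and it checks only one common submission mistake: URLs on a different host than the sitemap itself, which the sitemaps protocol does not allow.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text: str) -> tuple[str, list[str]]:
    """Return (root type, list of <loc> URLs) for a sitemap index or urlset."""
    root = ET.fromstring(xml_text)
    kind = root.tag.split("}")[-1]  # strip the "{namespace}" prefix
    if kind == "sitemapindex":
        # An index (like the author/product/page/post list) points at child sitemaps.
        locs = root.findall("sm:sitemap/sm:loc", NS)
    elif kind == "urlset":
        # A regular sitemap lists the pages themselves.
        locs = root.findall("sm:url/sm:loc", NS)
    else:
        raise ValueError(f"not a sitemap: root element is <{kind}>")
    return kind, [e.text.strip() for e in locs if e.text]


def foreign_urls(locs: list[str], sitemap_url: str) -> list[str]:
    """List URLs whose host differs from the host serving the sitemap."""
    host = urlparse(sitemap_url).netloc
    return [u for u in locs if urlparse(u).netloc != host]
```

For a sitemap index, run `parse_sitemap()` again on each child sitemap and add up the `urlset` counts.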

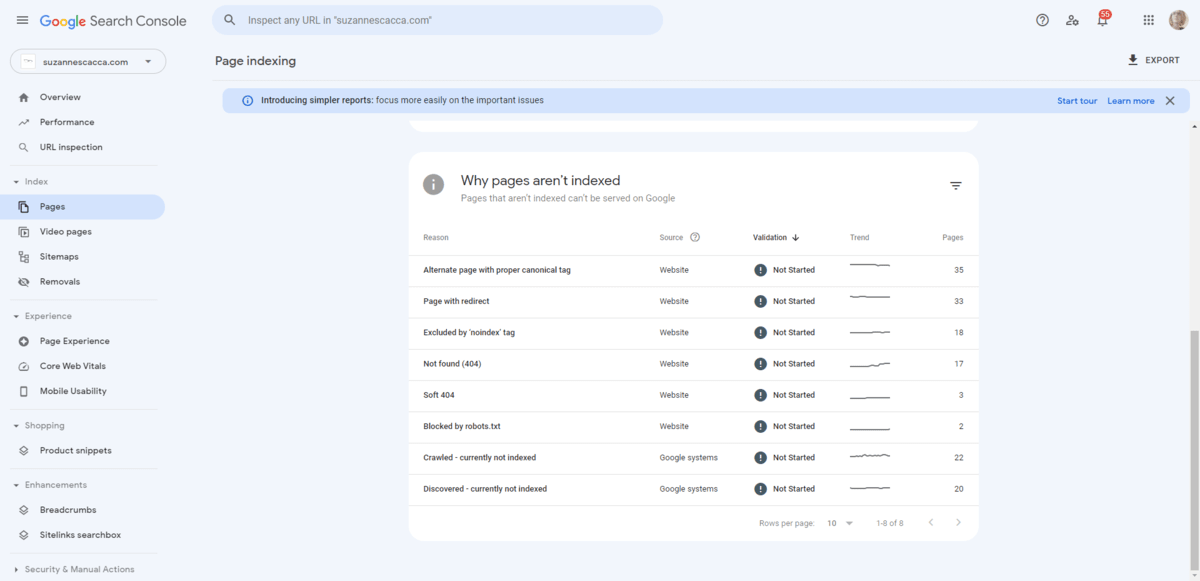

On the far right, Google will tell you the last time it scanned your sitemap, whether it was a success or failure, and how many of the URLs in the sitemap have been indexed. If there are any errors or Google has skipped indexing certain pages, you can find that information by clicking on the corresponding sitemap or going to the Pages tab:

There are various reasons why some of my content isn’t indexed. A lot of it has to do with the age of my site. It’s been up for almost 10 years and it’s undergone numerous makeovers during that time. Some pages I’ve deleted, some I’ve renamed and redirected, and others I’ve chosen to block the search engines from indexing altogether.

When performing your audit, make sure you click through each of the non-indexed reasons. You’ll find a list of pages under each.

If there are pages that don’t belong there, evaluate the reason given and then fix it on your website. The next time Google scans your sitemap, the indexing issue should resolve.
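Some of the non-indexed reasons can be confirmed straight from the page itself. The hypothetical helper below is a sketch that checks three of them in HTML and response headers you have already fetched: a robots meta tag with `noindex` (or `none`), an `X-Robots-Tag` header with the same directive, and a canonical link pointing at a different URL. It does not look at robots.txt, redirects or server errors.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


def _directives(value: str) -> set[str]:
    """Split 'noindex, follow' or 'googlebot: noindex' into lowercase directives."""
    found = set()
    for part in value.lower().split(","):
        part = part.strip()
        if ":" in part:  # drop an optional user-agent prefix such as "googlebot:"
            part = part.split(":", 1)[1].strip()
        found.add(part)
    return found


class _HeadScanner(HTMLParser):
    """Collects robots meta directives and the canonical link from a page."""

    def __init__(self):
        super().__init__()
        self.robots = set()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        a = {k.lower(): (v or "") for k, v in attrs}
        if tag == "meta" and a.get("name", "").lower() in ("robots", "googlebot"):
            self.robots |= _directives(a.get("content", ""))
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.canonical = a.get("href") or None


def indexing_blockers(page_url: str, html: str, headers: dict[str, str]) -> list[str]:
    """Return on-page reasons a URL might be left out of the index."""
    scanner = _HeadScanner()
    scanner.feed(html)
    reasons = []
    if scanner.robots & {"noindex", "none"}:
        reasons.append("meta robots noindex")
    # Header names are case-insensitive, so normalize before looking one up.
    header = {k.lower(): v for k, v in headers.items()}.get("x-robots-tag", "")
    if _directives(header) & {"noindex", "none"}:
        reasons.append("X-Robots-Tag noindex")
    if scanner.canonical:
        canonical = urljoin(page_url, scanner.canonical)  # resolve relative hrefs
        if canonical.rstrip("/") != page_url.rstrip("/"):
            reasons.append(f"canonical points to {canonical}")
    return reasons
```

An empty list doesn’t guarantee a page will be indexed; it only rules out these three signals.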

3. Make Sure That Each Mission-Critical Page Has Been Optimized

This is where we start getting into the on-page SEO aspect of the audit. While it will ultimately be up to the writer or SEO specialist to choose keywords, write metadata, craft alt text for imagery, add structured data and so on, you should be able to look at what’s been done and spot any gaps in the optimization.

My favorite tool for this is MozBar. It’s a free browser extension. When enabled, it provides you with data on any web page that you look at. It’s a great option if you’re evaluating a small to medium-sized website or if you want to do an audit of random (yet mission-critical) pages on a larger website.

We’re going to use the home page on the Kraken website as an example of what to look for when using MozBar:

Ignore the information in the toolbar itself. We’ll address that in the next step. For now, let’s break down what we find under On-Page Elements:

Under Tag/Location, you’ll find a lot of on-page SEO elements; under Content, you’ll see what the search engines’ indexing bots can actually pick up from the page. Some of these you can ignore, like Meta Keywords, Bold/strong and Italic/em. The rest should have data in them.

Like I said before, you don’t need to be an SEO expert in order to perform this audit. All you need to do is review each of the remaining fields and make sure each one contains data, and the right kind of data. Here are some things to look for:

- URL is no more than 75 characters.

- Page Title is readable and between 30 and 60 characters.

- Meta Description is readable and no more than 160 characters.

- H1 sums up the basic premise of the page.

- H2 contains a list of the main headings on the page and they tell a basic story of what the page is about.

- Alt text is present for the most important and descriptive images on the page.

You can let your writer or SEO worry about keywording. Just focus on the structure and overall readability of this data. If you spot any errors on your pages, pass them over to your content team and they will provide you with the fixed data (or they’ll implement it themselves).
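If you’d rather run the checklist above over a batch of pages than click through them one at a time, the structural checks are easy to script. This is a sketch with hypothetical names that applies the thresholds listed above (URL of 75 characters or fewer, title of 30 to 60 characters, meta description of 160 characters or fewer, images with alt text). Expecting exactly one H1 is an added assumption, and the H2s are returned as-is so you can read them as the page’s outline.

```python
from html.parser import HTMLParser


class _OnPage(HTMLParser):
    """Pulls the title, meta description, H1/H2 text and alt-less images from HTML."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self.h1 = []
        self.h2 = []
        self.images_missing_alt = 0
        self._capture = None  # "title", "h1" or "h2" while inside one of them
        self._buf = []

    def handle_starttag(self, tag, attrs):
        a = {k: (v or "") for k, v in attrs}
        if tag == "meta" and a.get("name", "").lower() == "description":
            self.description = a.get("content", "")
        elif tag == "img" and not a.get("alt", "").strip():
            # Decorative images may legitimately use alt=""; review these by hand.
            self.images_missing_alt += 1
        elif tag in ("title", "h1", "h2"):
            self._capture, self._buf = tag, []

    def handle_data(self, data):
        if self._capture:
            self._buf.append(data)

    def handle_endtag(self, tag):
        if tag == self._capture:
            text = " ".join("".join(self._buf).split())  # collapse whitespace
            if tag == "title":
                self.title = text
            else:
                getattr(self, tag).append(text)
            self._capture = None


def audit_page(url: str, html: str) -> tuple[list[str], list[str]]:
    """Return (structural issues, H2 headings) for one page."""
    p = _OnPage()
    p.feed(html)
    issues = []
    if len(url) > 75:
        issues.append(f"URL is {len(url)} chars (max 75)")
    if not 30 <= len(p.title) <= 60:
        issues.append(f"title is {len(p.title)} chars (want 30-60)")
    if p.description is None:
        issues.append("missing meta description")
    elif len(p.description) > 160:
        issues.append(f"meta description is {len(p.description)} chars (max 160)")
    if len(p.h1) != 1:
        issues.append(f"found {len(p.h1)} H1 tags (expected 1)")
    if p.images_missing_alt:
        issues.append(f"{p.images_missing_alt} image(s) without alt text")
    return issues, p.h2
```

Readability is still a judgment call, so treat the output as a list of pages to look at, not a verdict.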

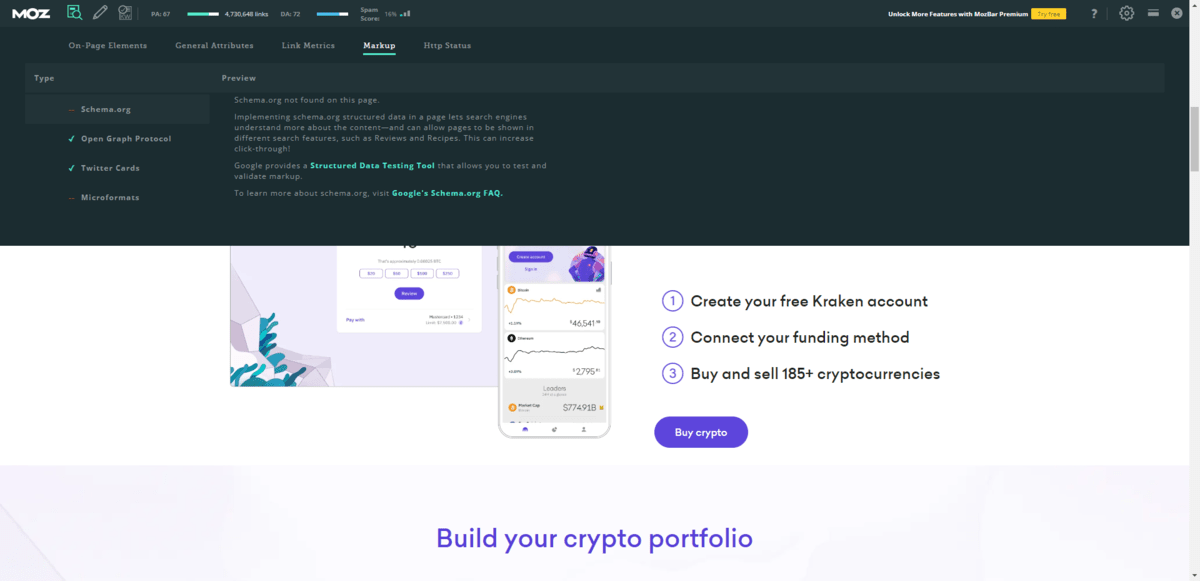

One other thing to check while you’re here is under Markup:

Structured data can be useful for some webpages. For instance, if you want to improve how your website appears in local search results, then adding schema markup to the page that describes the establishment type, physical address and contact information would be useful.

If schema markup has been added, you should see it here. If it has been added but doesn’t show up, then there’s likely an issue with how it’s been coded into the page. And if you believe that the page would benefit from schema markup but you or your team has yet to create it, then this is your reminder to add some. It’s never too late to do this.
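As an illustration of the local-business case described above, here is a minimal sketch that builds a schema.org JSON-LD snippet describing the establishment type, physical address and phone number. The property names (`@type`, `PostalAddress`, `streetAddress`, `telephone` and so on) come from schema.org; the function name and the sample business are hypothetical, and you’d pick the most specific `LocalBusiness` subtype that fits.

```python
import json


def local_business_jsonld(name: str, business_type: str, street: str, city: str,
                          region: str, postal_code: str, country: str,
                          phone: str) -> str:
    """Return a <script type="application/ld+json"> tag for a local business."""
    data = {
        "@context": "https://schema.org",
        "@type": business_type,  # e.g. "Bakery", a LocalBusiness subtype
        "name": name,
        "address": {
            "@type": "PostalAddress",
            "streetAddress": street,
            "addressLocality": city,
            "addressRegion": region,
            "postalCode": postal_code,
            "addressCountry": country,
        },
        "telephone": phone,
    }
    # Escape "</" so a field value can't close the script tag early;
    # "<\/" is still valid JSON and decodes back to "</".
    body = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + body + "\n</script>"
```

Paste the output into the page’s `<head>`, then recheck the Markup section to confirm it’s being picked up.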

4. Review Your Backlinks

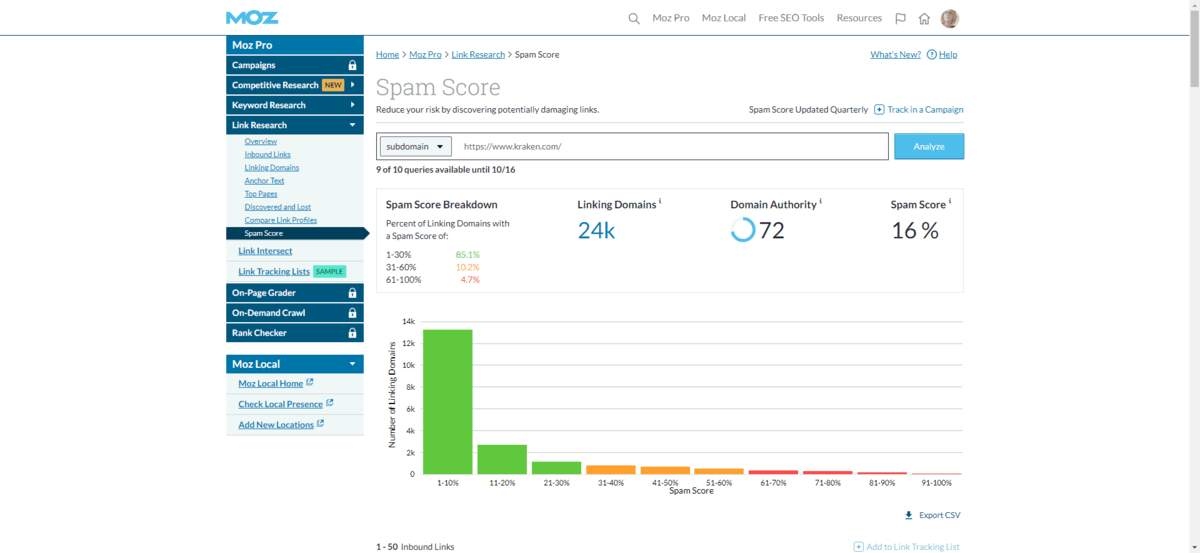

Before we leave the MozBar behind, let’s take a look at the data that appears in the main toolbar:

PA stands for “page authority.”

While you want a good score, “good” is somewhat subjective. What’s more important is to keep track of this number so you can compare it with your previous audit’s score. The real goal is to see that number continually go up.

DA stands for “domain authority.”

This is the overall reputation and trust score associated with the website. Again, the focus shouldn’t be on getting as close to a score of 100 as possible. You need a majorly popular website and brand in order to do that. Instead, the goal should be to improve the user experience so much that your DA consistently increases.

Spam Score doesn’t necessarily refer to the spamminess of your site and content (though it could). It pertains more to the spamminess of the sites linking to the page.

There’s not a lot you can do with this as a web designer. However, your writer, SEO and anyone else managing the marketing for your site should be informed ASAP if that score is too high. 1% or 2% is fine for small and medium websites. When you start to see double digits, though, you should be worried.
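Because the advice for all three toolbar numbers is "compare against your last audit," a tiny record-and-compare step keeps that honest. This sketch is hypothetical: you enter the PA, DA and Spam Score values by hand from MozBar. The thresholds are the rules of thumb above (1% or 2% is fine, double digits is a worry); flagging the 3% to 9% range as "keep an eye on it" is an added assumption.

```python
from dataclasses import dataclass


@dataclass
class MozSnapshot:
    """Toolbar values copied from MozBar during one audit."""
    page_authority: int
    domain_authority: int
    spam_score_pct: int


def compare_audits(previous: MozSnapshot, current: MozSnapshot) -> list[str]:
    """Flag authority drops and worrying spam scores since the last audit."""
    flags = []
    if current.page_authority < previous.page_authority:
        flags.append(f"PA fell from {previous.page_authority} to {current.page_authority}")
    if current.domain_authority < previous.domain_authority:
        flags.append(f"DA fell from {previous.domain_authority} to {current.domain_authority}")
    if current.spam_score_pct >= 10:  # double digits: tell the team ASAP
        flags.append(f"spam score {current.spam_score_pct}%: alert marketing/SEO team now")
    elif current.spam_score_pct > 2:  # assumed middle band, not from the article
        flags.append(f"spam score {current.spam_score_pct}%: keep an eye on it")
    return flags
```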

There are a couple of things that could be causing this. The one your team should focus on is black hat SEO: techniques that create inauthentic (i.e., spammy) backlinks. This commonly happens when a company pays a link farm or other bad actor to generate a ton of backlinks for its website.

Quantity of backlinks is no longer an important ranking signal in search engines because of this shady SEO practice. Quality of backlinks, on the other hand, does matter. A lot.

This is why you should monitor your spam score. Because if a tool like Moz can spot all of these questionable backlinks, then so too can the search engines. Whether it was intentional or not, those bad backlinks need to go.

5. Fix Your Site Architecture

Backlinks might be on Google’s official list of ranking signals, but they’re not the only kinds of links that matter. The way your site is structured can have an impact on SEO as well.

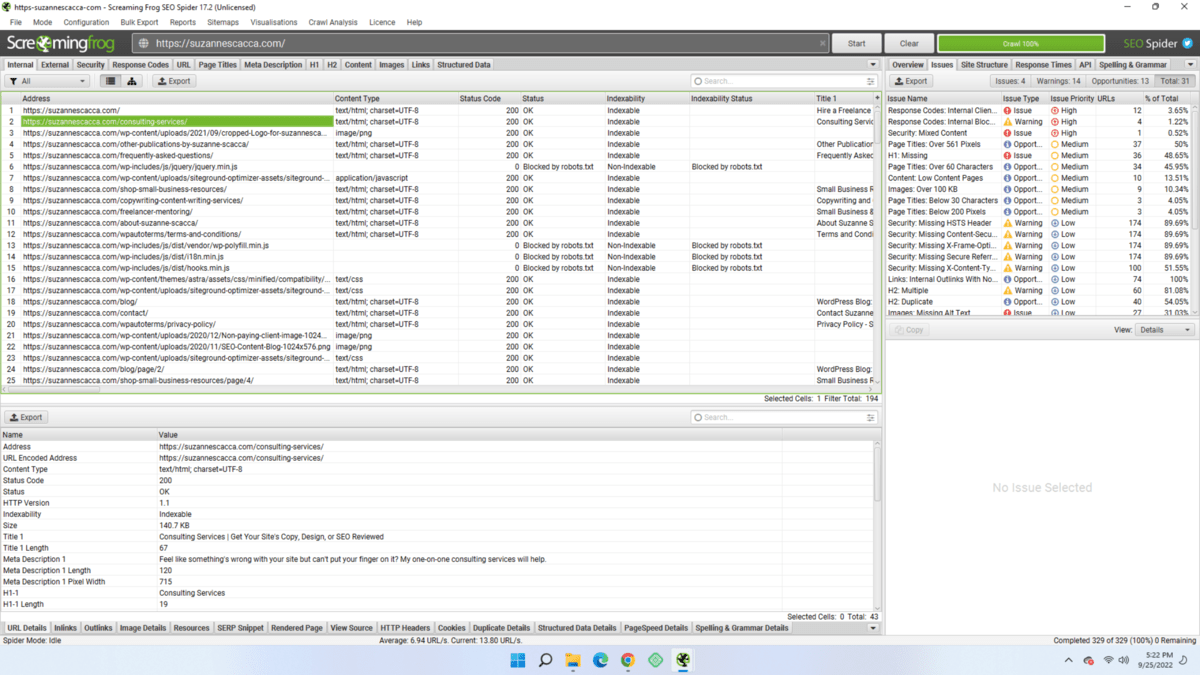

The tool I recommend for this is Screaming Frog’s SEO Spider Website Crawler. This is a desktop app you can use to analyze your site architecture:

Everything we looked at in point #3 can actually be found within this tool. However, I find the table format a bit overwhelming, which is why I don’t outright suggest it as a way of looking up your SEO data. Plus, I like to see the data in the context of the page so I can scroll down and confirm that it checks out.

That said, now that you know what it can do, you have another option if you’d prefer to work in a table like this rather than inside the browser.

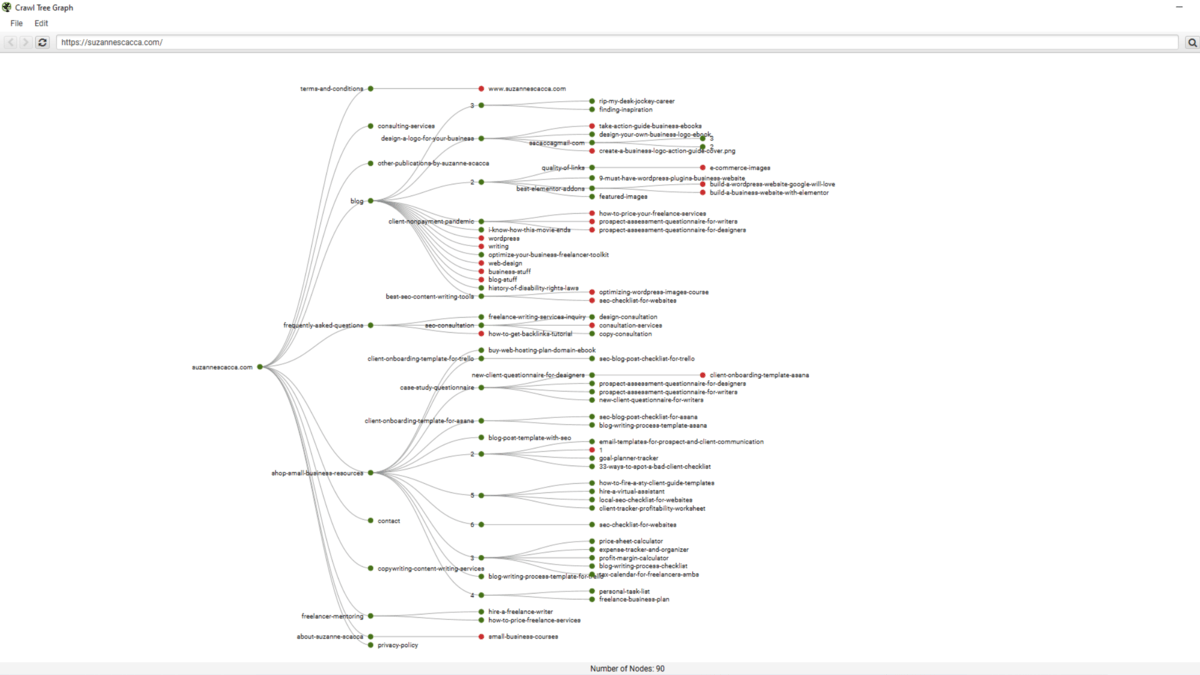

One of the tools that I use this app for is the Crawl Tree Graph:

This gives me the perfect bird’s-eye view of all the content on my website. The links in green are active and indexable. The ones in red are not.

If your website is disorganized, if the labels are too long, or if you have incomplete areas, this visualization is going to show you where those issues are right away. It’s the fastest way I’ve found to evaluate a site’s architecture.
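The idea behind a crawl tree can be sketched in a few lines: start at the home page, follow internal links breadth-first, and record how many clicks it takes to reach each page. This is not how Screaming Frog works internally; it’s a hypothetical illustration that assumes you already have a page-to-links mapping (for example, built from a crawl export). Pages that are deep in the tree, or that you know exist but can’t be reached from the home page at all, are the ones worth a closer look.

```python
from collections import deque


def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the home page; returns {page: clicks from home}."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # first visit is the shortest path in BFS
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


def orphans(links: dict[str, list[str]], home: str) -> list[str]:
    """Known pages that no chain of links from the home page reaches."""
    reachable = click_depths(links, home)
    return sorted(set(links) - set(reachable))
```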

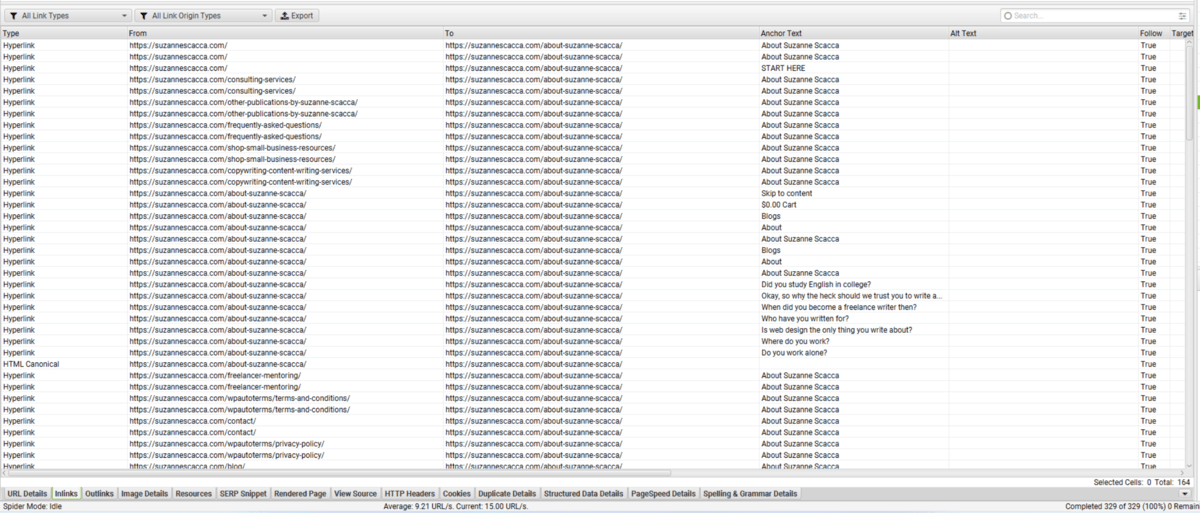

Another tool I use is the internal links “Inlinks” tool (it’s a small button in the bottom-left corner of the app):

Click on it and it will show you all the internal links that appear on each page of your website. These are the true internal links—not the navigation or footer links that are sometimes added to the total internal link counts.

Internal links are critical for SEO for a couple of reasons.

For starters, a well-linked website structure ensures that visitors aren’t constantly running up against dead ends. Second, lots of internal links signal to search engines that your content is related, relevant and useful.

Scan through the list of webpages. Look for any pages without internal links and see if there are opportunities to add some. Don’t worry about terms and conditions or policy pages. Focus on your most important and useful content. You should have at least two or three internal links on each of those pages. It’s also a good idea to even out how many links appear across your pages so that some pages don’t feel much denser with information than others.
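The same "true internal links" count can be approximated for a handful of pages with a short script. This sketch is hypothetical: it assumes navigation and footer links sit inside `<nav>` and `<footer>` elements (real themes vary), ignores external, `mailto:` and same-page anchor links, drops URL fragments, and uses the minimum of two in-content links suggested above.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class _ContentLinks(HTMLParser):
    """Collects internal links that appear outside <nav> and <footer>."""

    SKIP = {"nav", "footer"}

    def __init__(self, base: str):
        super().__init__()
        self.base = base
        self.host = urlparse(base).netloc
        self.skip_depth = 0  # > 0 while inside a nav or footer element
        self.internal = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self.skip_depth += 1
        elif tag == "a" and self.skip_depth == 0:
            href = dict(attrs).get("href")
            if href and not href.startswith("#"):
                url = urldefrag(urljoin(self.base, href)).url  # absolute, no #fragment
                if urlparse(url).netloc == self.host:  # mailto: and other sites drop out
                    self.internal.append(url)

    def handle_endtag(self, tag):
        if tag in self.SKIP and self.skip_depth:
            self.skip_depth -= 1


def thin_linking(pages: dict[str, str], minimum: int = 2) -> dict[str, int]:
    """Return {url: distinct in-content internal links} for pages below the minimum."""
    counts = {}
    for url, html in pages.items():
        parser = _ContentLinks(url)
        parser.feed(html)
        counts[url] = len(set(parser.internal) - {url})  # ignore self-links
    return {u: n for u, n in counts.items() if n < minimum}
```

Hand the resulting list to your content team as the pages most in need of new internal links.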

Again, since it’s not your responsibility to write the content for your site, it’s not your responsibility to create those links. However, you have a tool that tells you if your content is falling short, which will be useful information to share with your team so they can improve upon your internal linking structure.

Wrapping Up

You don’t need to be a master wordsmith or an SEO pro in order to ensure a website keeps ranking well after it launches.

As a web designer or developer, there’s a lot you can do to keep your website in good standing in search results. And if you’re already performing regular maintenance every few months, then you may already be doing some of these things anyway. If not, then now’s the perfect time to start evaluating your technical, on-page and even off-page SEO and to improve your website’s ranking in the process.

Suzanne Scacca

A former project manager and web design agency manager, Suzanne Scacca now writes about the changing landscape of design, development and software.