This post is a follow-up to the first part.

However, before proceeding with the usual format as in the previous post, I will make a small comment.

I was very amazed by the first part of the book, so before starting the second part I viewed the book’s references. One book caught my attention: “What is the Name of this Book?”. So I ordered it and I read it. I found the book’s author’s style to be very similar to GEB’s style: puns, self-references, discussions about meaning, and paradoxes. So if you follow GEB’s references, you can almost see how GEB’s author’s style came to be.

Chapter X: Levels of Description, and Computer Systems

Some things can be understood at different levels. E.g. when watching TV, what is more “real”, the show or the pixels on the screen? It depends on whether you are a human, a dog, or a computer. Bridging the gap between these two is AI’s biggest challenge.

Computer systems also have different levels of description – binary, machine language, high-level languages, etc. Discussion about program code, data (piece of information that gets manipulated by the code), memory (where data gets stored), CPU (what executes the code), CPU instructions (commands that execute the code), compilers (translate from one language to CPU instructions and store it on a file), interpreters (translate from one language to CPU instructions directly), bootstrapping (implement evaluation strategy of lang X in X itself), and operating systems. Talks about the connection between computer science and languages.

Computer understanding what it needs to do. Is a programmer just a fancy word for someone that can talk to computers? Like, for example, an English-speaking person, or a computer-speaking person.

Epiphenomena – visible consequence of the overall system. E.g. system capable of handling 30 users – 30 is not stored anywhere, it is a consequence.

Chapter XI: Brains and Thoughts

In the brain, we have “active symbols” (neurons store and transmit information), compared to a formal system’s typographical symbols.

Fantasy and fact intermingle very closely in our minds

Explains how neurons work (they fire given some threshold, etc.). (I already tackled some of that previously). Refers to them as ants; but if they are the ants, where’s “team”? “Signals”? “Symbols”?

Earthworms have isomorphic brains (neurons in the thousands), but what about humans? We all think differently.

Mentions classes (abstraction), instances (“concrete” of a specific abstraction), and prototypes (interfaces, describes “what an abstraction does”, while the implementation describes “how an abstraction does X”).

The most specific event can serve as a general example of a class of events.

Is every neuron capable of holding a single symbol, or is this symbol scattered across neurons?

Chapter XII: Minds and Thoughts

Talks about different human languages and translating the same story into multiple languages.

We can also do the same with programs and different programming languages.

How can you compare a program written in APL with one written in Algol? You will again chunk these programs in your mind, looking for conceptual, functional units which correspond. Thus, you are not comparing hardware, you are not comparing software-you are comparing “etherware”-the pure concepts which lie back of the software. There is some sort of abstract “conceptual skeleton” which must be lifted out of low levels before you can carry out a meaningful comparison of two programs in different computer languages, of two animals, or of two sentences in different natural languages.

Talks about how soulists would say “consciousness is in the soul” and that’s that, and then gives an opposing view to that attempting to describe consciousness mechanically. Ends the chapter with:

The paradoxes of consciousness arise because a conscious being can be aware of itself, as well as of other things, and yet cannot really be construed as being divisible into parts. It means that a conscious being can deal with Godelian questions in a way in which a machine cannot.

Chapter XIII: BlooP and FlooP and GlooP

Shares an anecdote that starts with a sequence and asks the person to guess the next number. Is nature chaotic, or is it patterned? Can every problem be seen from an angle that its secret is revealed? This discussion reminded me of Dynamic Programming and finding optimal substructures*.

The author introduces the programming language BlooP (Bounded loops – primitive recursion) which allows loops (only with finite recursive steps) but not stuff like while (1) {. FlooP (Free loops – normal recursion) is the language that allows infinite loops. As a result, FlooP is more powerful, but then it is affected by the Halting problem. GlooP (Greater loops) includes programs that can’t be created in FlooP, but, according to the Church-Turing thesis, no such programming languages exist.

Uses Cantor’s diagonal argument** to disprove that “every number-theoretical function must be calculable within a predictable number of steps”, that is, prove that BlooP cannot express all possible theorems.

Chapter XIV: On Formally Undecidable Propositions of TNT and Related Systems

Two key ideas for Gödel’s proof:

- Proof pairs:

and

form a proof pair iff

is the Gödel number of a proof derivation whose last line is the string with Gödel number

. For example,

is valid because

is the derivation of

MI -> MII -> MIIII -> MUIandis

MUI. The property of being a proof-pair is a primitive recursive number-theoretical property, and can therefore be tested for by a BlooP program. - Substitution.

These lead to the key: arithmoquining – substituting a formula’s own Gödel number into itself.

If we reinterpret and

for “generalized natural numbers” instead of only for “natural numbers” we end up with a new class of numbers: supernaturals. While this can free a system of contradictions, it comes at a price – we can’t really have a “clear idea” of what these numbers are

One way to index I is to say it is (9,8,1). Then I+1 is (9,8,2). There is no unique way to index the supernaturals; different methods offer different advantages and disadvantages.

Diophantine equation is a polynomial with fixed integral coefficients and exponents set to 0. Finding solutions is a hard problem in general. If we have a formal system and a Godelian numbering for it, there exists a Diophantine equation that can be mapped.

Chapter XV: Jumping out of the System

Gives some extensions to TNT but shows how Godel’s argument can be tweaked to also capture those.

Godel’s theorem seems to me to prove that Mechanism is false, that is, that minds cannot be explained as machines. Thanks to Godel’s theorem, the mind always has the last word.

Minds, Machines, and Godel

Can humans ever jump out of ourselves? A program or a human can “modify” itself but is still stuck in the language’s instruction set so hasn’t really jumped out.

Chapter XVI: Self-reference and Self-replicate

Talks about sentences that are self-referential and programs that are self-referential and that can self-replicate when run.

Typogenetics: molecular logic. Manipulating four symbols: A C G T. Any string of symbols is called a strand. Strands can be changed according to some rules – those rules are enzymes. Similar to the MU puzzle, however, in this case, strands can also affect enzymes, so in a way, every theorem deduced in MU would alter the rules thus mixing levels. In TNT, language and metalanguage are also intertwined. Thus, quining (self-rep) and Godel numbering (self-ref) are also applicable to Typogenetics.

A natural and fundamental question […] is: “How did they ever get started in the first place?” It is truly a baffling thing. One has to imagine some sort of a bootstrap process occurring, somewhat like that which is used in the development of new computer languages […] For the moment, we will have to content ourselves with a sense of wonder and awe, rather than with an answer. And perhaps experiencing that sense of wonder and awe is more satisfying than having an answer-at least for a while.

Chapter XVII: Church, Turing, Tarski, and Others

In this chapter, the author will look at a few properties under the assumption that the brain can be formalized into a formal system (and as the author states, this has some philosophical implications). But even if it can be formalized, and it’s so complex we still look at it as an informal system.

There is no infallible method for telling true from false statements of number theory.

Tarski-Church-Turing theorem

The author also keeps in mind the assumption that the brain can be formalized, and challenges that assumption.

Some kinds of things which a brain can do can be vaguely approximated on a computer but not most, and certainly not the interesting ones. But anyway, even if they all could, that would still leave the soul to explain, and there is no way that computers have any bearing on that.

Church-Turing thesis (Soulists’ version)

Chapter XVIII: Artificial Intelligence: Retrospects

Most of the stuff in this and the following chapters I’ve read elsewhere. E.g., the Turing test is introduced.

When a car has “wash me” drawn on the back, who is this “me”? Is the car truly speaking? Is it the writer? Is it the phrase itself? The dust? Most problems exist in a “problem space” and being able to step back and consider the space that you’ve put the problem in is a core function of intelligence. If your dog keeps barking at the fence, never considering running away from the bone a bit to get closer to it via a gate, he’ll never get it. He has to reconsider the problem space.

Another GEB overview

Chapter XIX: Artificial Intelligence: Prospects

Think how poorer our mental lives would be if we didn’t have this creative capacity for slipping out of reality into soft “what if”‘s

Chapter XX: Strange Loops, Or Tangled Hierarchies

The author states that “reasoning involves an infinite regress” and relates to Carroll’s Tortoise: no step of reasoning can be done without invoking some rule on a higher level to justify the step.

Talks about mechanistic views and free will. States that the central issue of the book is “Do words and thoughts follow formal rules?” with an answer “Yes-provided that you go down to the lowest level-the hardware-to find the rules.”. Though this can be challenged, e.g., is it possible to break down thoughts into e.g. neurons? Is “neurons” the best model, does there exist a better model for representation, etc. Are formal systems even the best model, or is there a better model?

He states that when humans think they are capable of changing the “mental rules” (and the rules that change the rules, etc.), these are “software rules”. However, the rules at the bottom do not change – humans have access to their thoughts but not to their neurons.

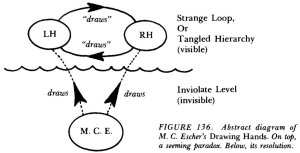

As shown in the image, strange loop paradoxes can be escaped by introducing a metalevel. Similar to Lisp programs that can change their structure: at one level, Lisp programs change themselves, at another level since data is code and code is data, these are simply changes in data. In any case, the Lisp interpreter is shielded from these changes.

“What is evidence?” is not just a philosophical question, for it intrudes into life in all sorts of places. You are faced with an extraordinary number of choices as to how to interpret evidence at every moment.

The total picture of “who I am” is integrated in some enormously complex way inside the entire mental structure, and contains in each one of us a large number of unresolved, possibly unresolvable, inconsistencies. Thus, ironically, […] the fact of being self-reflecting conscious beings-leads to the […] major forces in creating distinct individuals.

All of the metamathematical theories (Godel’s Incompleteness Theorem, Church’s Undecidability Theorem, Turing’s Halting Theorem, and Tarski’s Truth Theorem) seem to suggest that “you can never represent yourself totally”.

To seek self-knowledge is to embark on a journey which … will always be incomplete, cannot be charted on any map, will never halt, cannot be described.

The relation between “How do symbols work?” and “How do our minds work?”.

If a person never contradicts himself, it must be that he says nothing.

Miguel de Unamuno

It could be that our brains […] are stubborn and intractable systems which we cannot neatly decompose in any way. But even if we do fail to understand ourselves, there need not be any Godelian “twist” behind it; it could be simply an accident of fate that our brains are too weak to understand themselves.

Strange Loop: an interaction between levels in which the top level reaches back down towards the bottom level and influences it, while at the same time being itself determined by the bottom level. The author’s belief is that phenomena such as ideas, hopes, images, consciousness, etc. are a kind of Strange Loop.

Beyond Godelian twists, this made me think about love. For example, my kids say that they “want to be like [their] dad”. I take teaching maths to them very seriously, and they know this expectation and they accept it – they want to make me happy. But the truth is, I want to teach them maths so that they can think clearly and in turn so that they are happy, and I will be happy when I know they’re happy. Note the self-reference. 🙂

* E.g., I solved one task with brute-force which gave the idea of the recurrence relation , with

. This led to construct the DP array

dp[n][k][t] with base case dp[0][0][0] = dp[0][1][0] = ... = 1 and the inductive step dp[n][k][t] = sum(dp[n-1][k][t-j] for j in range(1, k + 1)).

** A constructive proof that some sets are uncountable: start with (0, 0, …), (1, 1, …), … Take digits in diagonal, e.g. (0, 1, …) – we can form infinitely new sequences this way.

One thought on “GEB: An EGB overview (Part II)”