YugabyteDB column-level locking

Imagine having a tool that can automatically detect JPA and Hibernate performance issues. Wouldn’t that be just awesome?

Well, Hypersistence Optimizer is that tool! And it works with Spring Boot, Spring Framework, Jakarta EE, Java EE, Quarkus, or Play Framework.

So, enjoy spending your time on the things you love rather than fixing performance issues in your production system on a Saturday night!

Introduction

In this article, we are going to see how YugabyteDB allows you to scale writes by employing column-level locking instead of the traditional row-level locking used by Oracle, MySQL, PostgreSQL, or SQL Server.

If you’re new to YugabyteDB, then you can start with this article first, as it explains what YugabyteDB is and why you should definitely consider using it.

Row-level locking

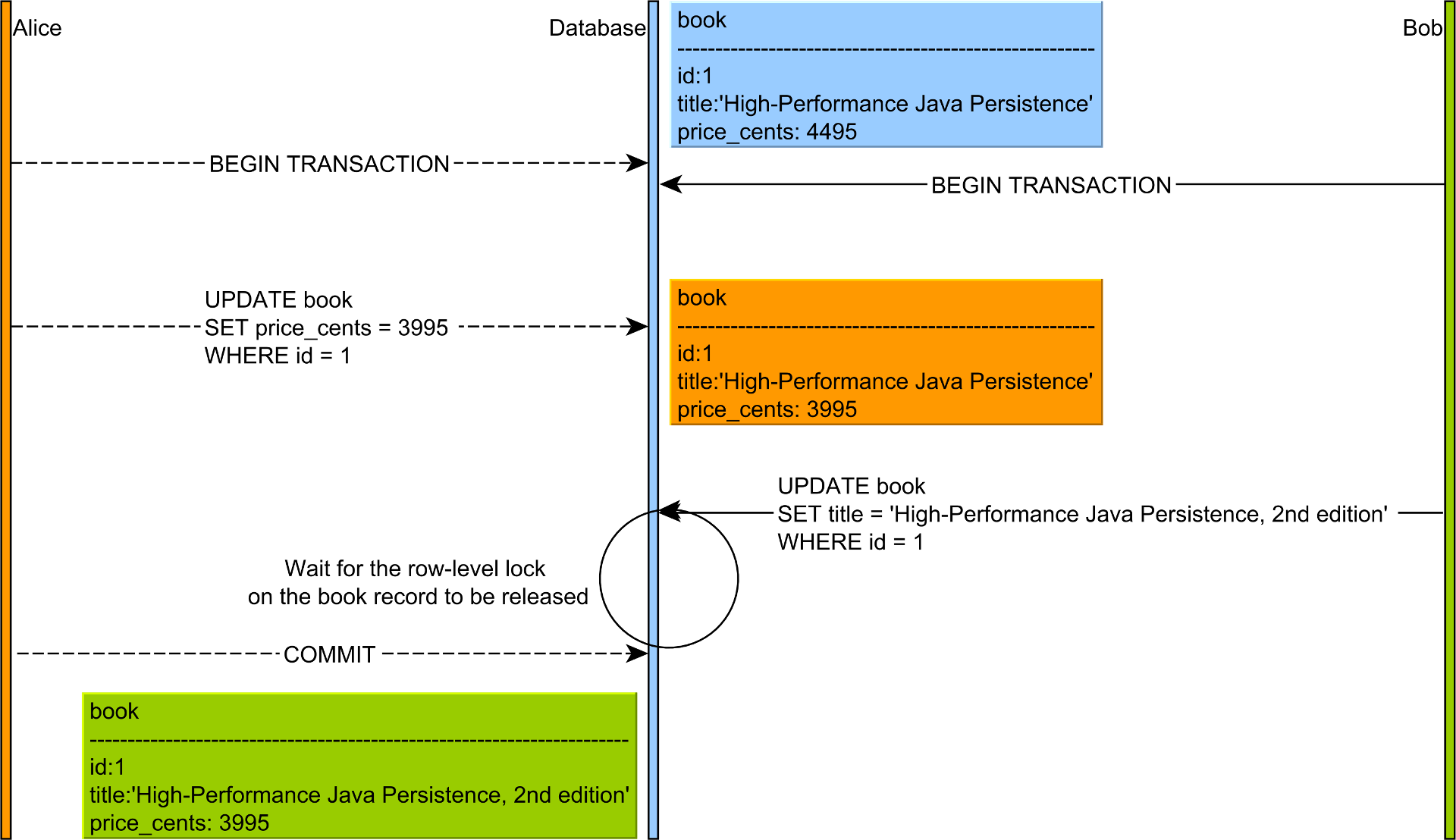

In Oracle, MySQL, PostgreSQL, or SQL Server, you cannot concurrently modify a given database table record because the UPDATE and the DELETE statements take an exclusive lock on the underlying record.

So, once a transaction has modified a record, that record is guarded by an exclusive lock, and other concurrent transactions that want to execute an UPDATE or a DELETE statement on the same record will block until the first transaction commits or rollbacks, as illustrated by the following diagram:

In a relational database system, once a transaction acquires an exclusive lock, that lock cannot be released prior to ending the currently running transaction.

This is not just theory, as we can easily replicate this behavior in practice:

Book post = entityManager.find(Book.class, 1L);

post.setPriceCents(3995);

LOGGER.info("Alice updates the book record");

entityManager.flush();

executeSync(() -> {

doInJPA(_entityManager -> {

executeStatement(_entityManager, "SET lock_timeout TO '1s'");

Book _post = _entityManager.find(Book.class, 1L);

_post.setTitle("High-Performance Java Persistence, 2nd edition");

LOGGER.info("Bob updates the book record");

try {

_entityManager.flush();

} catch (Exception expected) {

assertTrue(

ExceptionUtil

.rootCause(expected)

.getMessage()

.contains("canceling statement due to lock timeout")

);

}

});

});

When running the above test case, we get the following output:

[Alice]: o.h.e.t.i.TransactionImpl - begin

[Alice]: SELECT

b.id as id1_0_0_,

b.price_cents as price_ce2_0_0_,

b.title as title3_0_0_

FROM book b

WHERE b.id=?

Params:[(1)]

[Alice]: Alice updates the book record

[Alice]: UPDATE book

SET price_cents=?

WHERE id=?

Params:[(3995, 1)]

[Bob]: o.h.e.t.i.TransactionImpl - begin

[Bob]: SET lock_timeout TO '1s'

[Bob]: SELECT

b.id as id1_0_0_,

b.price_cents as price_ce2_0_0_,

b.title as title3_0_0_

FROM book b

WHERE b.id=?

Params:[(1)]

[Bob]: Bob updates the book record

[Bob]: UPDATE book

SET title=?

WHERE id=?

Params:[(High-Performance Java Persistence, 2nd edition, 1)]

[Bob]: o.h.e.j.s.SqlExceptionHelper - SQL Error: 0, SQLState: 55P03

[Bob]: o.h.e.j.s.SqlExceptionHelper - ERROR: canceling statement due to lock timeout

[Bob]: o.h.e.t.i.TransactionImpl - rolling back

[Alice]: o.h.e.t.i.TransactionImpl - committing

As you can see, Bob’s UPDATE blocks and a lock timeout is thrown since we instructed that Bob shouldn’t wait for more than one second to acquire a lock.

Atomicity and Dirty Writes

If you wonder why relational databases behave like that, then you should know that they have to do because, otherwise, they could risk compromising Atomicity.

As I explained in this article, Atomicity is the property that allows a transaction to roll back modifications and return to a previous consistent state.

Without taking the exclusive locks upon executing an UPDATE or a DELETE statement, databases like Oracle, SQL Server, PostgreSQL, or MySQL would be at risk of breaking Atomicity due to the Dirty Writes anomaly, which looks like this:

If two transactions are allowed to modify the same record, it’s not clear what consistent state a transaction should return to in case of a rollback.

In the diagram above, if Alice rolls back to the state that was available prior to her UPDATE, then she will override Bob’s modification, and if Bob commits, he is not going to apply his change.

Or, if Alice’s rollback leaves the record as-is, but Bob rolls back, his transaction will roll back to Alice’s previous uncommitted modification.

Any of the following scenarios could lead to data integrity issues, so many database systems try to prevent it using the pessimistic locking approach of acquiring an exclusive lock on the records that get modified by a given transaction.

For a brief introduction to YugabyteDB’s strong consistency model, check out this article.

YugabyteDB column-level optimistic locking

However, this pessimistic approach is not the only solution to preventing Dirty Writes. For instance, YugabyteDB takes an optimistic approach that allows it to avoid taking row-level locks because it can detect the Dirty Write modifications when they occur.

As explained in the documentation, YugabyteDB uses column-level locking:

Multiple SI transactions could be modifying different columns in the same row concurrently. They acquire weak SI locks on the row key, and strong SI locks on the individual columns they are writing to. The weak SI locks on the row do not conflict with each other.

So, when using YugabyteDB, two concurrent transactions can basically modify the same table row as long as the modified columns don’t overlap, as illustrated by the following diagram:

So, with YugabyteDB, we can have the same JPA entity modified concurrently by multiple transactions:

doInJPA(entityManager -> {

Book post = entityManager.find(Book.class, 1L);

post.setPriceCents(3995);

entityManager.flush();

executeSync(() -> {

doInJPA(_entityManager -> {

Book _post = _entityManager.find(Book.class, 1L);

_post.setTitle("High-Performance Java Persistence, 2nd edition");

});

});

});

doInJPA(entityManager -> {

Book post = entityManager.find(Book.class, 1L);

assertEquals(

"High-Performance Java Persistence, 2nd edition",

post.getTitle()

);

assertEquals(

Integer.valueOf(3995),

post.getPriceCents()

);

});

However, if you’re using JPA and Hibernate, this only works if your JPA entities are annotated with @DynamicUpdate Hibernate-specific annotation:

@Entity(name = "Book")

@Table(name = "book")

@DynamicUpdate

public class Book {

@Id

private Long id;

private String title;

@Column(name = "price_cents")

private int priceCents;

public Long getId() {

return id;

}

public Book setId(Long id) {

this.id = id;

return this;

}

public String getTitle() {

return title;

}

public Book setTitle(String title) {

this.title = title;

return this;

}

public int getPriceCents() {

return priceCents;

}

public Book setPriceCents(int priceCents) {

this.priceCents = priceCents;

return this;

}

}

The @DynamicUpdate annotation will instruct Hibernate to generate the UPDATE statement only with the columns that have been modified.

The

@DynamicUpdateannotation should be the default option when using YugabyteDB, as otherwise, the LSM (Log-structured merge-tree) storage will generate versions of the columns that were not actually updated. For more details about this topic, check out this talk from Denis Magda.

Without @DynamicUpdate, the default UPDATE includes all the columns the entity is mapping, and this will cause a column-level optimistic locking conflict, as illustrated by the following test case:

try {

doInJPA(entityManager -> {

Book post = entityManager.find(Book.class, 1L);

post.setPriceCents(3995);

LOGGER.info("Alice updates the book record");

entityManager.flush();

executeSync(() -> {

try {

doInJPA(_entityManager -> {

Book _post = _entityManager.find(Book.class, 1L);

_post.setTitle("High-Performance Java Persistence, 2nd edition");

LOGGER.info("Bob updates the book record");

_entityManager.flush();

});

} catch (Exception e) {

LOGGER.error("Bob's optimistic locking failure: ", e);

}

});

});

} catch (Exception e) {

LOGGER.error("Alice's optimistic locking failure: ", e);

}

When running the test case above, we can observe that Bob’s transaction could be rolled back as follows:

[Alice]: o.h.e.t.i.TransactionImpl - begin

[Alice]: SELECT

b.id as id1_0_0_,

b.price_cents as price_ce2_0_0_,

b.title as title3_0_0_

FROM book b

WHERE b.id=?

Params:[(1)]

[Alice]: Alice updates the book record

[Alice]: UPDATE book

SET price_cents=?,

title=?

WHERE id=?

Params:[(3995, High-Performance Java Persistence, 1)]

[Bob]: o.h.e.t.i.TransactionImpl - begin

[Bob]: SELECT

b.id as id1_0_0_,

b.price_cents as price_ce2_0_0_,

b.title as title3_0_0_

FROM book b

WHERE b.id=?

Params:[(1)]

[Bob]: Bob updates the book record

[Bob]: UPDATE book

SET price_cents=?,

title=?

WHERE id=?

Params:[(4495, High-Performance Java Persistence, 2nd edition, 1)]

[Bob]: o.h.e.j.s.SqlExceptionHelper - SQL Error: 0, SQLState: 40001

[Bob]: o.h.e.j.s.SqlExceptionHelper - ERROR: Operation failed. Try again:

895aebb7-52d5-4de3-a4ff-2379043c7569 Conflicts with higher priority transaction:

488353c9-f395-4aa6-8127-c1562c39c5d7

[Bob]: o.h.e.t.i.TransactionImpl - rolling back

[Bob]: Bob's optimistic locking failure: javax.persistence.OptimisticLockException:

org.hibernate.exception.LockAcquisitionException: could not execute statement

[Alice]: o.h.e.t.i.TransactionImpl - committing

The reason we got a rollback this time is that both Alice and Bob tried to update the same columns of the same record concomitantly, and this is forbidden in a serializable transaction schedule.

However, it’s not just that the second transaction could get rolled back. When running the same test case multiple times, we can observe that sometimes it’s Alice’s transaction getting rolled back:

[Alice]: o.h.e.t.i.TransactionImpl - begin

[Alice]: SELECT

b.id as id1_0_0_,

b.price_cents as price_ce2_0_0_,

b.title as title3_0_0_

FROM book b

WHERE b.id=?

Params:[(1)]

[Alice]: Alice updates the book record

[Alice]: UPDATE book

SET price_cents=?,

title=?

WHERE id=?

Params:[(3995, High-Performance Java Persistence, 1)]

[Bob]: o.h.e.t.i.TransactionImpl - begin

[Bob]: SELECT

b.id as id1_0_0_,

b.price_cents as price_ce2_0_0_,

b.title as title3_0_0_

FROM book b

WHERE b.id=?

Params:[(1)]

[Bob]: Bob updates the book record

[Bob]: UPDATE book

SET price_cents=?,

title=?

WHERE id=?

Params:[(4495, High-Performance Java Persistence, 2nd edition, 1)]

[Bob]: o.h.e.t.i.TransactionImpl - committing

[Alice]: o.h.e.t.i.TransactionImpl - rolling back

[Alice]: Alice's optimistic locking failure: org.postgresql.util.PSQLException: ERROR:

Operation expired: Transaction b4292e4f-1fa2-46c2-9d08-5237884c709d expired

or aborted by a conflict: 40001

As explained in the YugabyteDB documentation, by default, transactions are assigned a random priority number, and when two transactions are conflicting, it is the transaction with the lower priority that will get rolled back.

If you want to control the transaction priority, you can use the

yb_transaction_priority_lower_boundandyb_transaction_priority_upper_boundsettings like this:

set yb_transaction_priority_lower_bound = 0.8;

set yb_transaction_priority_upper_bound= 0.9;

So, by default, YugabyteDB uses an optimistic locking approach to detect the Dirty Write issue and will roll back one or the other transaction. Either way, one transaction gets rolled back, and the Dirty Write anomaly is prevented.

This behavior is observed when using the default Snapshot Isolation mode. On the other hand, if you switch to Read Committed, YugabyteDB will use a column-level pessimistic locking approach. Once a column value is changed in the Read Committed isolation level, YugabyteDB takes an exclusive lock on the associated column.

So, while the Read Committed pessimistic locking is closer to PostgreSQL or other relational database systems, the YugabyteDB approach still scales better as the lock is acquired at the column level, not on the associated table row.

Awesome, right?

If you enjoyed this article, I bet you are going to love my Book and Video Courses as well.

Conclusion

While traditional relational database systems use pessimistic locking to prevent Dirty Writes even when using an MVCC engine, YugabyteDB uses an optimistic locking approach that can provide better throughput for non-conflicting read-write transactions.

This happens to be a very convenient feature since reads are easier to scale than writes. For read-only transactions, you can increase throughput by spinning off more replica nodes. However, read-write transactions are harder to scale, especially when using a traditional Single-Primary Replication scheme.

Therefore, the optimistic locking approach taken by YugabuyteDB can help you scale read-write transactions, especially when you have data write hotspots.

This research was funded by Yugabyte and conducted in accordance with the blog ethics policy.

While the article was written independently and reflects entirely my opinions and conclusions, the amount of work involved in making this article happen was compensated by Yugabyte.