Tree Survey using Machine Learning

- Go ML Machine learning Tensorflow CoreML Geo

My buddy and I bought some land (20 ha / 50 acres) in the province of Quebec.

Creating a new problem

It all started because as a new “forest owner”, I wanted to do a tree survey.

There are some good mobile apps to recognize flowers and trees, but nothing professional-grade that I knew of (short of paying a human expert).

I also needed a solution that would work offline, since mobile network coverage is not good in the field.

So I started looking into ML research for existing solutions covering the very specific trees of northeastern North America, and found one!

Tree Species Identification from Bark Images Using Convolutional Neural Networks.

They created and published a dataset named BarkNet, and they also have a working model based on ResNet34 and PyTorch.

Disclaimer: I’m a long-time software engineer but totally new to Machine Learning.

Here is my journey to create a new model targeting iOS mobile devices, hopefully performing as well as the paper, if not better.

Since they published the model, I was hoping to reuse it by simply converting it to something suitable for CoreML. That was a dead end: the project was old (in software terms) and I was unable to convert the existing model.

My only hope was to train a new model myself which looked like an impossible mission for an ML novice.

Apple provides some great tools, one being CreateML: drag and drop your images and it can perform a classification for you.

Unfortunately, with the number of images I had (around 800k), CreateML crashed before completing the training. (It’s a great tool though, and you should give it a try.)

Creating some tools

So I turned to TensorFlow 2.x and Keras. Since I’m trying to recognize a texture pattern and not a specific shape in a picture, I needed some tools to perform the tiling just once rather than on every run, both to control which tiled images are submitted to training and to reduce the overall processing time.
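
To make the idea of pre-tiling concrete, here is a minimal sketch in plain Go using only the standard `image` package. It is not the code of the actual tool: the function name `tileRects`, the 299-pixel tile size, and the choice to drop partial tiles at the edges are all assumptions made for illustration.

```go
package main

import (
	"fmt"
	"image"
)

// tileRects (hypothetical helper) returns the non-overlapping size x size
// squares that fit entirely inside a width x height image whose origin is
// (0, 0), scanning left to right, top to bottom. Partial tiles on the right
// and bottom edges are dropped.
func tileRects(width, height, size int) []image.Rectangle {
	var tiles []image.Rectangle
	if size <= 0 {
		return tiles
	}
	for y := 0; y+size <= height; y += size {
		for x := 0; x+size <= width; x += size {
			tiles = append(tiles, image.Rect(x, y, x+size, y+size))
		}
	}
	return tiles
}

func main() {
	// Stand-in for a decoded source photo (assumed 1000x700 pixels).
	src := image.NewRGBA(image.Rect(0, 0, 1000, 700))
	b := src.Bounds()
	for i, r := range tileRects(b.Dx(), b.Dy(), 299) {
		tile := src.SubImage(r) // shares pixels with src, no copy
		fmt.Println(i, tile.Bounds())
	}
}
```

Computing the rectangles once and writing each tile to disk means the training pipeline only ever reads ready-made tiles, which is the point of doing the tiling ahead of time.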

My main language is not Python anymore but Go (sorry Python, still loving you). I needed a fast image processing library, so I turned to libvips and bimg, its Go bindings.

It led me to create this small tool, ml-image-tile, which took over the whole process of pre-tiling my images.

Since it’s a “texture”, validation images can be generated simply by cutting tiles at random positions in the source images, an option I’ve also added to this tool.
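
Random tiling can be sketched in a few lines of Go. This is only an illustration under stated assumptions, not the tool's implementation: `randomTiles`, its parameters, and the fixed seed are hypothetical, and the tiles are allowed to overlap.

```go
package main

import (
	"fmt"
	"image"
	"math/rand"
)

// randomTiles (hypothetical helper) picks n size x size squares at uniformly
// random positions inside a width x height image. Tiles may overlap each
// other. It returns nil when no tile of that size fits.
func randomTiles(seed int64, width, height, size, n int) []image.Rectangle {
	if n <= 0 || size <= 0 || size > width || size > height {
		return nil
	}
	rng := rand.New(rand.NewSource(seed)) // seeded so a run can be repeated
	tiles := make([]image.Rectangle, 0, n)
	for i := 0; i < n; i++ {
		x := rng.Intn(width - size + 1)
		y := rng.Intn(height - size + 1)
		tiles = append(tiles, image.Rect(x, y, x+size, y+size))
	}
	return tiles
}

func main() {
	// Four random 299x299 tiles from an assumed 1000x700 source image.
	for _, r := range randomTiles(42, 1000, 700, 299, 4) {
		fmt.Println(r)
	}
}
```

Because bark is a texture, any crop that lies fully inside the photo is a plausible sample, which is why a random position is good enough here.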

I had mixed results (far from the ones announced in the paper) and had to read the original paper several times to finally get it: they mentioned removing 3 classes and some blurry images, but those were still in the published dataset.

The quality of the dataset was not really uniform and I did not want to review 23,000 pictures manually, so I resorted to detecting the blurry images automatically and removing the worst of them (blurry input is okay to a certain degree…).

To do that I turned to OpenCV for Go to perform Laplacian computations on the images and integrated it into my image tiler.
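
For readers who want to see what a Laplacian-based blur check looks like, here is a minimal sketch in pure Go, without OpenCV. It assumes the common "variance of the Laplacian" measure and a 4-neighbour kernel; the function names are hypothetical, and any cut-off value would have to be tuned on the actual dataset.

```go
package main

import (
	"fmt"
	"image"
	"image/color"
)

// laplacianVariance (hypothetical helper) returns the variance of the
// 4-neighbour Laplacian response over the interior pixels of a grayscale
// image. Low values mean few sharp edges, which usually indicates blur.
func laplacianVariance(img *image.Gray) float64 {
	b := img.Bounds()
	if b.Dx() < 3 || b.Dy() < 3 {
		return 0 // too small to have interior pixels
	}
	var sum, sumSq float64
	var n int
	for y := b.Min.Y + 1; y < b.Max.Y-1; y++ {
		for x := b.Min.X + 1; x < b.Max.X-1; x++ {
			c := float64(img.GrayAt(x, y).Y)
			l := float64(img.GrayAt(x-1, y).Y) + float64(img.GrayAt(x+1, y).Y) +
				float64(img.GrayAt(x, y-1).Y) + float64(img.GrayAt(x, y+1).Y) - 4*c
			sum += l
			sumSq += l * l
			n++
		}
	}
	mean := sum / float64(n)
	return sumSq/float64(n) - mean*mean
}

// syntheticGray builds a size x size test image: a one-pixel checkerboard
// when sharp is true, a uniform mid-gray square otherwise.
func syntheticGray(size int, sharp bool) *image.Gray {
	img := image.NewGray(image.Rect(0, 0, size, size))
	for y := 0; y < size; y++ {
		for x := 0; x < size; x++ {
			v := uint8(128)
			if sharp {
				v = uint8(255 * ((x + y) % 2))
			}
			img.SetGray(x, y, color.Gray{Y: v})
		}
	}
	return img
}

func main() {
	fmt.Println("sharp:", laplacianVariance(syntheticGray(16, true)))
	fmt.Println("flat: ", laplacianVariance(syntheticGray(16, false)))
}
```

In a tiler, each tile would be converted to grayscale, scored this way, and skipped when the score falls below a chosen threshold.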

For reference, they used 224x224 image inputs to target the ResNet34 model pretrained on ImageNet, froze the first layer, and fine-tuned the network.

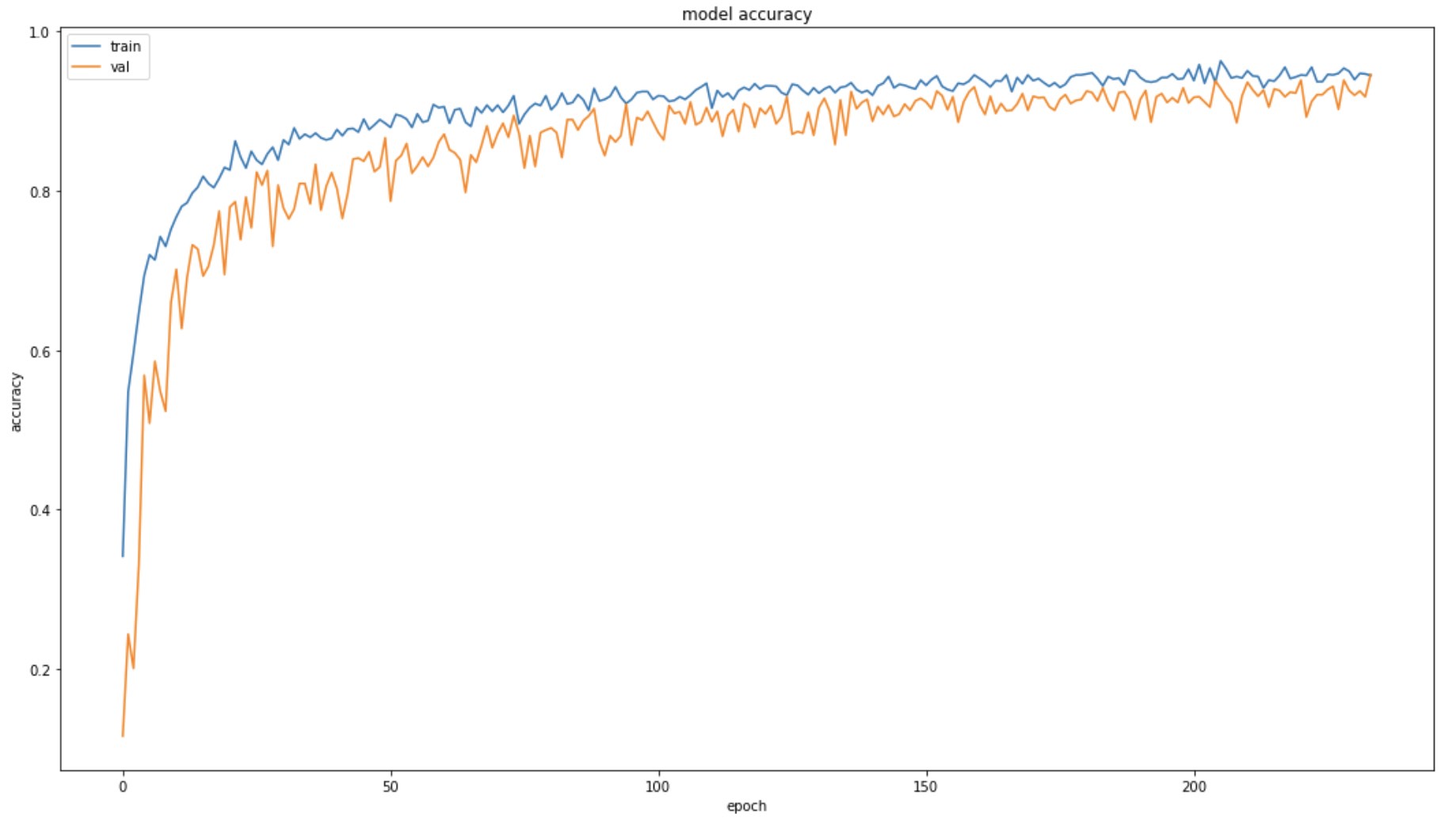

Thanks to the TensorFlow ecosystem, I was able to test different models and tunings. I had great results with InceptionV3 and 299x299 inputs.

I did most of this work on my MacBook M1, which is a very capable machine. Its I/O is incredibly fast, so it performs very well when dealing with big datasets.

I ran the longer computations on a Linux server with 64GB of memory, a GTX 1080 GPU, and SSD storage.

On pure training the PC was 4 times faster than the Mac, but on data manipulations the Mac was sometimes faster.

CoreML

Apple got it very early: having a great and simple ML framework for mobile will bring awesome applications to the platform.

The second you enter the Apple ML world with your model, you’re almost done: previews, tests, code generation, it’s all done for you.

But first you need to convert your model to CoreML. That part is still new and in beta (in fact it was not working correctly on M1 the day before I started using it), but it kind of works: coremltools, a Python library, helps you convert your model into a .mlmodel. Drag it into Xcode and voilà, you are done (almost)!

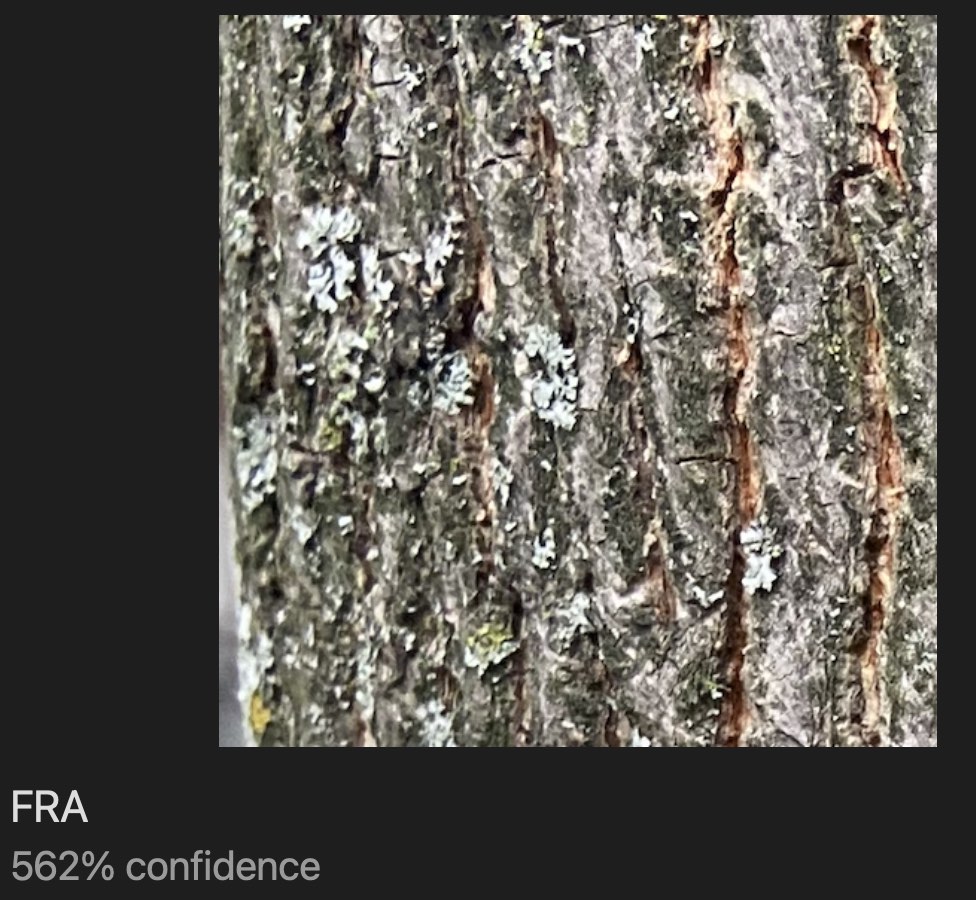

Overconfident CoreML finding Fraxinus americana

Enhancing the Dataset

For the first dataset they needed to hire some experts to recognize the trees and label the data.

What if we could find an existing list of trees with labels?

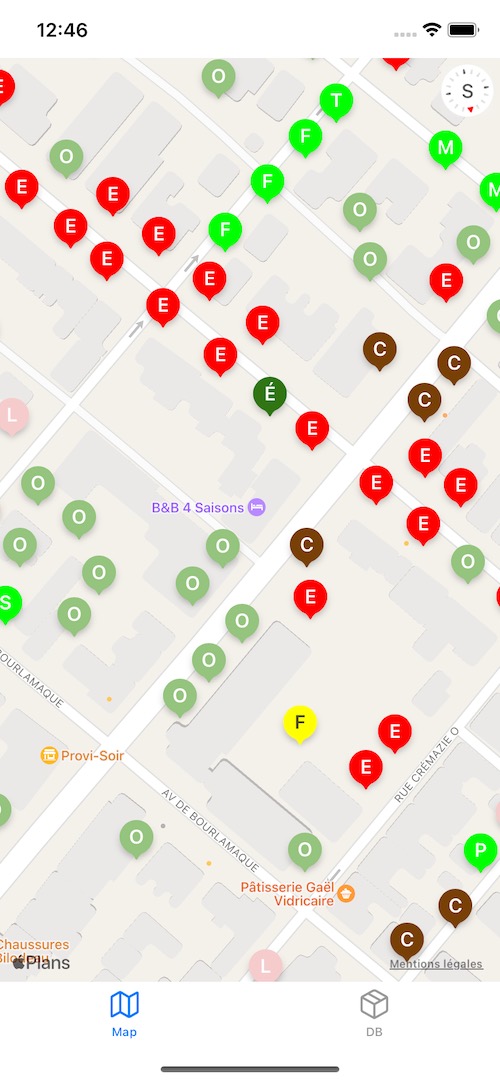

Thanks to the open data movement, such data is available in some cities. I’ve found 132,000 labeled trees in Quebec City, so I can walk around and take pictures of new trees (taking into account that trees probably grow differently in cities …).

Let’s create an app for that so I can find the trees around me. I will need a local geo database for iOS. Luckily for me, I did some similar work 5 years ago using Go, gomobile, and a geodatabase: I rewrote a specialized geo-aware database in Go, based on bbolt and s2.
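
To illustrate what "finding the trees around" means, here is a deliberately naive sketch: a brute-force scan using the haversine distance, in plain Go. It is a stand-in, not the bbolt and s2 database described above, which indexes positions so that it does not have to scan every tree. The `Tree` type, the function names, and the sample coordinates are hypothetical.

```go
package main

import (
	"fmt"
	"math"
)

const earthRadiusM = 6371000.0 // mean Earth radius in metres

// Tree is a hypothetical record for one labeled tree.
type Tree struct {
	Species  string
	Lat, Lng float64 // degrees
}

// haversineM returns the great-circle distance in metres between two points
// given in degrees.
func haversineM(lat1, lng1, lat2, lng2 float64) float64 {
	toRad := func(d float64) float64 { return d * math.Pi / 180 }
	dLat := toRad(lat2 - lat1)
	dLng := toRad(lng2 - lng1)
	a := math.Sin(dLat/2)*math.Sin(dLat/2) +
		math.Cos(toRad(lat1))*math.Cos(toRad(lat2))*math.Sin(dLng/2)*math.Sin(dLng/2)
	// Clamp to 1 to guard against rounding errors before Asin.
	return 2 * earthRadiusM * math.Asin(math.Min(1, math.Sqrt(a)))
}

// treesWithin scans every tree and keeps those within radiusM metres of the
// given position. A spatial index avoids this full scan.
func treesWithin(trees []Tree, lat, lng, radiusM float64) []Tree {
	var out []Tree
	for _, t := range trees {
		if haversineM(lat, lng, t.Lat, t.Lng) <= radiusM {
			out = append(out, t)
		}
	}
	return out
}

func main() {
	// Made-up sample points, roughly in the Quebec City area.
	trees := []Tree{
		{Species: "Fraxinus americana", Lat: 46.8139, Lng: -71.2080},
		{Species: "Acer saccharum", Lat: 46.8141, Lng: -71.2082},
		{Species: "Betula papyrifera", Lat: 46.9000, Lng: -71.2080},
	}
	for _, t := range treesWithin(trees, 46.8139, -71.2080, 100) {
		fmt.Println(t.Species)
	}
}
```

A linear scan like this is fine for a quick experiment, but with more than a hundred thousand trees on a phone, an indexed lookup is the more sensible design.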

Creating Two Applications

So all the pieces are coming together: create an app to browse 130,000 trees without using a remote server.

Then reuse the same database code to offer an app capable of recognizing trees in the field using ML, storing them in a local geo-aware database with GPS coordinates, and measuring trunk size using LiDAR.

Check my other blog post about creating the database using gomobile.

Can my side project turn into a viable product?

Conclusion

I’ve worked with data scientists in the past, and it was all about the data.

Most of the time spent on this project was about cleaning and processing the data.

The ML ecosystem is mature enough for a novice like me to get some results by reusing existing toolkits.

The first time I took some pictures of real trees, sent them to the model, and saw it perform… it was surreal, an experiment I wanted to share.

Thanks to the original paper authors and all the reviewers of this post.