Hello, handsome.

Let’s talk about docs for a little while.

Everyone laments that we don’t have more of them.

Seasoned programmers tease silver hairs from their scalp, attaching each to a story about a time that docs failed to lead them—or worse, misled them.

“Senior” teams with shitty docs hire apprentices, then pat themselves on the back for “training them” by relegating them to janitorial duty on the shitty docs.

Why do we suck at documentation, what does good even look like, and how do we get from here—wherever here is—to there—wherever there is?

Before we go through all that, I gotta address one thing first, and it’s going to piss a lot of people off. Get a cup of tea before you read this if you’ve ever found yourself, I dunno, ready to kneecap a stranger from Twitter over logarithmic complexity or something.

I really hate “more, more, more” documentation.

Technologists often feel guilt about having too little documentation, and that’s probably warranted—especially when the original implementers couldn’t be arsed to explain how anyone else might get the thing running, let alone how they might use it.

Occasionally, though, I see the “fix” swing really far in the opposite direction. Document everything! Four thousand word README! Headings in the wiki for every possible thing someone would want to do with this code! Twice for common things!

I see two issues with that approach.

First, the brutal candor: no one wants to read all that. Writing is a skill. Like most skills that aren’t programming, most programmers both undervalue it, and think they’re better at it than they are.

The skill of writing a large volume of clear, engaging documentation is so far out of most programmers’ depth that I cannot, in good faith, ask them to attempt it. Writing—yes, even nonfiction writing—is an art. And the more writing there is, the more artful it needs to be for anyone to read it.

But suppose you think your team is that good. They’re not, but whatever. There’s another reason not to do this.

Second, the practical reason: no one wants to maintain all that. Remember when I told y’all that all code, no matter how beautiful it is, requires maintenance? Remember how we concluded that therefore no feature—not even a finished feature—is free?

Guess what: that’s true for documentation, too. It gets out of date. It loses accuracy. The more of it there is, the more likely that is to happen, because no one seems to have the same enthusiasm for auditing reams of documentation that they had for writing it in the first place. And remember—they mostly weren’t even that jazzed to write it in the first place.

So the necessary fencepost maintenance on a whole big ranch of documentation is, frankly, never gonna happen.

Fine, Chelsea. So what’s the plan?

The plan is to:

- Identify when and why each piece of context might be important

- Document, briefly, at exactly the point where that piece of context will matter

- Ensure that, if this piece of context ever becomes outdated, it changes itself, draws immediate attention to itself, or disappears altogether.

The secret to making that work is to think beyond the README (or the Wiki, or the Confluence, or the readthedocs), and choose the appropriate documentation channel for each piece of context rather than using one tool for all of it.

A Survey of Documentation Tools

Let’s start with the obvious ones that get used for everything. We’ll discuss which situations suit them best. We’ll gradually progress toward the documentation tools that get less attention in discussions about documentation (and why I think they’re so great).

Code Comments

This is a weird one because the programming community says it hates comments. People love to tell junior programmers that “if your code needs comments, it’s not clear enough on its own.” So, to avoid admitting defeat, programmers will just leave illegible code without comments. The closest thing to a nuanced take on comments that I have heard is “use a comment to explain why, rather than what.”

As you know, I look to open source code more than I look to private code for real-life examples of collaborable practices, and the bottom line is that open source projects often have more comments than code. Take this plotting code from pandas, for example, in which the docstrings easily make up more than half of the file. Python docstrings are a special kind of comment designed to reveal what an API does. The interpreter stores them (that’s how `help()` finds them), but it never checks them against the code. They don’t auto-update. They function like comments.

Here’s another example. Check out the docstring-to-code ratio on this function from matplotlib. You’re looking at twenty lines here. Fourteen of them comment the other six lines of code.
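To make “they function like comments” concrete, here’s a small sketch with a made-up function, `to_kilometers`, whose docstring has drifted away from its code. Python keeps the docstring on the function (that’s where `inspect.getdoc()` reads it from), but nothing ever compares it with what the function actually does.

```python
import inspect


def to_kilometers(meters: float) -> float:
    """Convert meters to kilometers, rounded to one decimal place."""
    # The rounding was removed in a later change; the docstring was not updated.
    return meters / 1000


# The stale docstring is still served up as documentation...
print(inspect.getdoc(to_kilometers))  # Convert meters to kilometers, rounded to one decimal place.
# ...while the function no longer rounds anything.
print(to_kilometers(1234))  # 1.234
```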

My general rules on when to use code comments look like this:

- Learn about the language I’m writing and follow community conventions. In many language communities, it’s conventional practice to include strings that the compiler ignores, for various reasons. Examples: docstrings in Python or `// MARK:` in iOS development.

- If I am doing something that no variable name can make obvious (complicated algorithmic stuff is often like this), I provide comments as headers in the logic.

- If someone is likely to come along and see this line and think they should change it, and they shouldn’t, I’ll leave a comment explaining why to leave it like this. They’re chiefly for code that looks wrong but has to be the way it is for some reason.
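Here’s a small sketch of the last two rules, using a hypothetical `median` helper: header comments walk through the steps of the logic, and one comment guards a line that looks like it could be “simplified” but shouldn’t be.

```python
def median(values: list[float]) -> float:
    """Return the median of a non-empty list of numbers."""
    if not values:
        raise ValueError("median() needs at least one value")

    # Step 1: sort.
    # Don't "optimize" this into values.sort(): that sorts in place and
    # would silently reorder the caller's list.
    ordered = sorted(values)
    middle = len(ordered) // 2

    # Step 2: odd count, so the middle element is the median.
    if len(ordered) % 2 == 1:
        return ordered[middle]

    # Step 3: even count, so average the two middle elements.
    return (ordered[middle - 1] + ordered[middle]) / 2
```

Notice that none of these comments describe something likely to change: the steps of a median are stable, and the warning about in-place sorting stays true as long as that line does.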

The common thread among all these: they probably shouldn’t change. The conventions of a community don’t change fast. An algorithm, though it might be tweaked, probably won’t undergo a structural change sufficient to invalidate structural headers without those headers getting removed or changed. The explanations on code usually explain the code as written. Comments work well for these purposes because, though it’s easy to change a line of code and leave a now-outdated comment, these are not cases where that should be happening.

Where do I put comments about stuff that’s definitely (or even probably) going to change? We’ll get there.

External Documentation (READMEs, ReadTheDocs, Confluence, et cetera)

I’m lumping all these together because they tend to get used for the same thing: a catchall after comments. Sometimes I see a distinction made that the README is for programmers and the others are for clients, but in practice, few teams seem to follow that distinction.

Isn’t it funny that we’re discouraged from writing comments because they could lose accuracy as the code changes, but we view documentation in a README or doc website as universally good despite being even more separate from the code?

This type of documentation is, to me, the highest risk of getting out-of-date. That said, programmers do need information about how to set up and run a piece of software if there are ever to be more maintainers than the original author. Plus, for code to help people, clients need to know how to use it. Here’s what I think goes in external documentation:

- How to get the code up and running. Do we need to do any IDE setup? Enter any environment variables? How do I run the tests? I do not ever want to have to figure this stuff out on my own. I want a list of sequential directions, preferably with illustrations, because I want to get to the point where the code is running and I have my full suite of other tools (like tests, debuggers, and the like) available for figuring out what this code does. The standard of acceptability for me here is “another person can set themselves up to work on my code without my intervention.”

- What the software does and how to do it. This does not have to be 4,000 words. It can be “This app is for X. In order to get it to do X, go to this URL, click the `doThing` button, enter your email in this field and the `thingID` in this field, and then hit `submit`. The job will take about 5 minutes. Look for an email at the email address you entered on the form to know when it’s done.” Again, my standard of acceptability is “My intervention should be unnecessary for someone new to my project to use it.”

- A troubleshooting guide. If someone gets put on call for this software and gets woken up at 3 AM, what are the common problems, and how can they check for them? This section tends to start small and grow as use of the software reveals how it might break. This kind of section tends to be usable even when it’s long because the programmer who needs it can search for a verbatim exception name in the text and get ferried to exactly the relevant section.

That’s it. I don’t put other crap in the external documentation. If I can figure something out from the tests or by setting a breakpoint, calling the method, and following the execution path, I’m not repeating it in the external documentation.

Automated Tests

This is where I start to document stuff that might change, because if the code’s behavior changes, a test better fail. As a reader of documentation, I like tests the best, because I can use them as little laboratories to understand how the code works by changing values in them and seeing what happens. To me, a good test suite has test names that describe, in full, the behavior of the code in question. The trick to making tests an aid rather than a burden is to write them at the appropriate level of abstraction.

- I use feature tests to describe behavior and avoid regressions. These are at the very highest level. “When I visit this page and tap this button, I should see my personal widget list onscreen.”

- I use integration tests to, essentially, defensively check my connections with other systems before deploying. They’re useful to identify when a collaborator is down or its API has changed, but I don’t stuff other functionality testing in here.

- I use what I call multiclass tests (more on that here) to test systems of a few classes that should collaborate to accomplish a task. I prefer this to litanies of unit tests that, inevitably, add friction for beneficial refactors or API changes. When developers hesitate to make changes that would reduce maintenance load because they’d have to change the tests, that’s a telltale sign that the tests employ a lower level of abstraction than they should.

- I use unit tests when I need to document and validate complicated logic contained within a single class or function. I start with the edge cases and work up to the “happy path.” I don’t put unit tests for getters, setters, or aliases, usually.
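As an illustration of test names that describe behavior, and of working from edge cases up to the happy path, here’s a sketch of unit tests for a hypothetical `chunk` function. Neither the function nor the test names come from a real codebase.

```python
import unittest


def chunk(items: list, size: int) -> list[list]:
    """Split items into consecutive lists of at most `size` elements."""
    if size < 1:
        raise ValueError("size must be at least 1")
    return [items[i:i + size] for i in range(0, len(items), size)]


class ChunkBehavior(unittest.TestCase):
    # Edge cases first...
    def test_rejects_a_size_smaller_than_one(self):
        with self.assertRaises(ValueError):
            chunk([1, 2, 3], 0)

    def test_returns_no_chunks_for_an_empty_list(self):
        self.assertEqual(chunk([], 3), [])

    def test_returns_one_chunk_when_size_exceeds_length(self):
        self.assertEqual(chunk([1, 2], 5), [[1, 2]])

    def test_leaves_a_shorter_final_chunk_when_length_is_not_a_multiple(self):
        self.assertEqual(chunk([1, 2, 3, 4, 5], 2), [[1, 2], [3, 4], [5]])

    # ...then the happy path.
    def test_splits_evenly_when_length_is_a_multiple_of_size(self):
        self.assertEqual(chunk([1, 2, 3, 4], 2), [[1, 2], [3, 4]])
```

Run it with `python -m unittest`. Read top to bottom, the test names alone describe what `chunk` does, and each test is a little laboratory: change a value and see what breaks.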

Git Commit Messages

Programmers, in my opinion, criminally underuse git commit messages because they have cargo-culted wisdom about an 80-character message limit from a crappy IBM UI that went out of circulation in the eighties. I wish any part of that last sentence were an exaggeration. Nothing makes me more incandescent with rage than spelunking convoluted code whose commit message “bugfix” leaves me holding an empty bag.

Git commit messages are the perfect place to store contextual information about how and why a programmer made a change set that might need further changes in the future. Why:

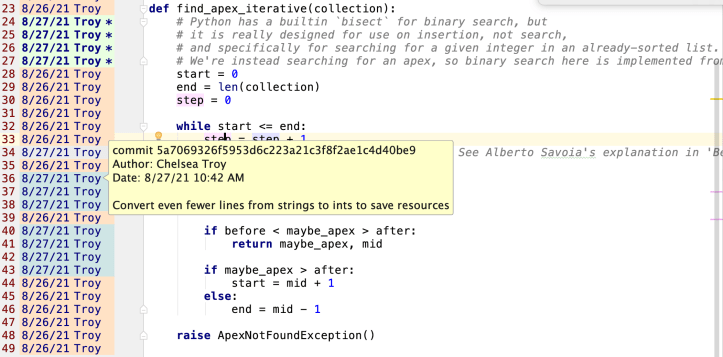

- A commit message is attached to the exact lines where the changes were made. Most modern IDEs have a view that allows a programmer to see the message associated with the last time any given line was changed.

- When a line changes, it gets included in a new change set with a new commit message. So, as the code changes, the message explaining why this line is the way it is also changes.

- Even if an individual line in a file changes and gets a new message, important, older changes that affect, say, the whole file will still be associated with other lines in that whole file. So a git annotation of a whole file will show relevant, timestamped information about how this file has changed over time.
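IDE annotation views are generally built on `git blame`. As a rough sketch of what such a view does, here’s a small parser for the output of `git blame --porcelain <file>` that pairs each line of a file with the summary (first line) of the commit that last touched it. The function names are made up for this example, and the parser only reads the fields it needs.

```python
import subprocess


def is_commit_header(line: str) -> bool:
    """Header lines start with a full commit hash (40 hex chars for SHA-1, 64 for SHA-256)."""
    first = line.split(" ", 1)[0]
    return len(first) in (40, 64) and all(c in "0123456789abcdef" for c in first)


def parse_blame_porcelain(porcelain: str) -> list[tuple[str, str]]:
    """Return (commit summary, line content) pairs in file order."""
    summaries: dict[str, str] = {}  # commit hash -> summary
    annotated: list[tuple[str, str]] = []
    current = ""
    for line in porcelain.split("\n"):
        if line.startswith("\t"):
            # A leading tab marks an actual line of the blamed file.
            annotated.append((summaries.get(current, ""), line[1:]))
        elif is_commit_header(line):
            current = line.split(" ", 1)[0]
        elif line.startswith("summary "):
            # --porcelain prints commit details only the first time a commit
            # appears, so cache the summary for later lines from that commit.
            summaries[current] = line[len("summary "):]
    return annotated


def blame_file(path: str) -> list[tuple[str, str]]:
    """Run git blame on a file inside a git repository and parse the result."""
    output = subprocess.run(
        ["git", "blame", "--porcelain", "--", path],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_blame_porcelain(output)
```

A commit message like “bugfix” makes this view nearly useless; a message that explains why turns every line into a pointer to its own history.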

Nota Bene: The above only works under two circumstances.

- Programmers make well-circumscribed change sets. That means that they associate each commit with a single change and avoid throwing in distracting, extraneous, unrelated changes. The change can be large (like a structural refactor), but everything in the change set should relate to that one thing, which the commit message then describes (and, if necessary, justifies). I talk more about commit hygiene here.

- Programmers include a review of the git history in their context gathering step when they’re approaching code they don’t know. In addition to experimenting with the tests, I pop open the annotation view and take a look at all the commit messages associated with the file or files I plan to change. If the commit history is used as described, I can get a good idea from this of what has changed over time and why.

Here’s an example of some code I wrote in the annotation view in PyCharm, with my cursor hovering over one of the commits so you can see the message:

Hot take: I prefer the use of commit messages to architectural decision records. I have never seen an actual directory of architectural decision records come to any use besides appeasing CTOs. Teams can mandate that junior programmers read the ADR directory, but it’ll usually overwhelm them without teaching them much (see above point about writing skill). Teams can mandate their senior programmers read the ADR directory, but empirically, what happens here is the seniors go “I won’t, but I’ll say I did until I get caught with my pants down.” It’s a burden on the writing end and on the reading end, and most of what’s in there won’t relate to any individual change a programmer needs to make.

Pull Request Descriptions

I love pull request descriptions for their ideal timing and placement to transfer context to another programmer in an asynchronous work setting. I want someone to provide a thoughtful review of my code. In order to do that, they need to be able to run, test, and even make experimental changes to my code. Therefore, I need to provide, in my PR description, the context they might need to get to the point where they could do those things. My standard of acceptability here is “My PR description should make it possible, if I got hit by a bus, for my reviewer to take over this change set on my behalf.”

I got very specific about that over here already, so I won’t rehash what I said. That piece’s follow-up post is somehow even saltier than this post, if you can believe it. Please enjoy.

Automated Documentation

When I’m writing an HTTP API that clients will hit, I like API documentation tools like Swagger or OpenAPI because they automatically give those clients a playground: the endpoints, plus example requests they might use. I wrote a case study on that for Linode right over here, if you’re interested. I love this kind of automated documentation because, when the implementation of my API changes, the auto-generated documentation changes, too. Swagger also provides opportunities to include additional notes or information for that one weird thing about any API that needs to be customized.
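To show why generated docs keep up with the code, here’s a deliberately tiny, standard-library-only sketch that derives an OpenAPI-style `paths` object from a handler’s signature and docstring. It is not how Swagger or any framework actually does it (real generators handle request bodies, responses, and much more); `list_widgets`, `ROUTES`, and `build_paths` are hypothetical, and every parameter is assumed to be a query parameter.

```python
import inspect
import json

# Map Python annotations to OpenAPI schema types (only the ones this toy needs).
SCHEMA_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def list_widgets(owner: str, limit: int = 20):
    """List the widgets belonging to one owner."""
    ...


# Hypothetical route table: (HTTP method, path) -> handler function.
ROUTES = {("get", "/widgets"): list_widgets}


def build_paths(routes: dict) -> dict:
    """Derive an OpenAPI-style `paths` object from handler signatures."""
    paths: dict = {}
    for (method, path), handler in routes.items():
        parameters = []
        for name, param in inspect.signature(handler).parameters.items():
            parameters.append({
                "name": name,
                "in": "query",
                # No default value means the client must send it.
                "required": param.default is inspect.Parameter.empty,
                "schema": {"type": SCHEMA_TYPES.get(param.annotation, "string")},
            })
        paths.setdefault(path, {})[method] = {
            "summary": inspect.getdoc(handler) or "",
            "parameters": parameters,
        }
    return paths


print(json.dumps(build_paths(ROUTES), indent=2))
```

Add a parameter to `list_widgets` and the next generated spec includes it; there’s no separate document to forget about.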

When I can’t use Swagger or OpenAPI, I’ll often make a Postman collection of sample requests for my API, with actual sample request bodies, that others can run. I’ll include a step in CI to run Postman tests against that collection to ensure that all my example requests do what I say they do. Then I’ll allow that Postman Collection to supplant external API documentation.
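Postman runs collection tests with its own tooling. To show the shape of the idea in plain Python instead, here’s a sketch where the documented example requests live in a list and a CI step replays them, failing if any response no longer matches what the docs promise. `EXAMPLES`, `WidgetHandler` (a stand-in for the real API), and the helper functions are all hypothetical.

```python
import json
import threading
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

# Documented example requests, each with the status the docs promise.
EXAMPLES = [
    {"method": "GET", "path": "/widgets", "expect_status": 200},
    {"method": "GET", "path": "/widgets/unknown", "expect_status": 404},
]


class WidgetHandler(BaseHTTPRequestHandler):
    """A tiny stand-in for the real API under test."""

    def do_GET(self):
        if self.path == "/widgets":
            body = json.dumps([{"id": 1, "name": "sprocket"}]).encode()
            self.send_response(200)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)
        else:
            self.send_error(404)

    def log_message(self, format, *args):
        pass  # keep CI logs quiet


def check_examples(base_url: str, examples: list[dict]) -> list[str]:
    """Replay each example and describe any whose status doesn't match the docs."""
    failures = []
    for example in examples:
        url = base_url + example["path"]
        request = urllib.request.Request(url, method=example["method"])
        try:
            with urllib.request.urlopen(request, timeout=5) as response:
                status = response.status
        except urllib.error.HTTPError as error:
            status = error.code  # 4xx/5xx responses arrive as exceptions
        if status != example["expect_status"]:
            failures.append(
                f'{example["method"]} {example["path"]}: '
                f'expected {example["expect_status"]}, got {status}'
            )
    return failures


def run_against_local_server(examples: list[dict] = EXAMPLES) -> list[str]:
    # Port 0 asks the OS for any free port.
    server = HTTPServer(("127.0.0.1", 0), WidgetHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        return check_examples(f"http://127.0.0.1:{server.server_address[1]}", examples)
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    problems = run_against_local_server()
    if problems:
        raise SystemExit("\n".join(problems))  # non-zero exit fails the CI step
```

The point of the design is that the examples are the documentation: if one of them stops being true, the build breaks instead of a reader getting misled.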

Of course, I’m not always writing an HTTP API where those kinds of tricks work. I’ve been impressed with Apollo Studio for their work in providing living documentation for GraphQL requests. But what about plain old libraries that a client might need to use, like pandas or matplotlib for Python, collections for Swift, or GreenDAO for Android—or even the API of a programming language itself?

Error Messages

Remember, the plan is to document, briefly, at exactly the point where a piece of context will matter. One such point is the point where a client is “using our thing wrong.” People will use your thing wrong in ways that you could never have predicted. That’s true. But I sometimes see that levied as an argument against putting effort into error messages, which I can’t abide.

If we were to take all the things that people do wrong with a tool and put them in order from most common to least common, the resulting graph would often look a lot like this:

Common Mistakes, Sorted by Number of Occurrences

If a team can isolate the ten most common misuses of their tool and put in time on thoughtful, descriptive error messages at the exact point where someone is making the mistake, that team can eliminate a solid chunk of the time that people spend cussing at the tool before they get it working.
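Finding those ten can start as plain counting. This sketch assumes you already collect the names of the errors people hit (from logs or support tickets, say); `top_misuses` and the error names below are hypothetical sample data, not real measurements.

```python
from collections import Counter


def top_misuses(error_names: list[str], n: int = 10) -> tuple[list[tuple[str, int]], float]:
    """Return the n most common errors and the share of all occurrences they cover."""
    if not error_names:
        return [], 0.0
    top = Counter(error_names).most_common(n)
    covered = sum(count for _, count in top)
    return top, covered / len(error_names)


# Made-up sample data standing in for a real error log.
error_log = ["missing_api_key"] * 6 + ["wrong_date_format"] * 3 + ["expired_token"]
top, share = top_misuses(error_log, n=2)
print(top)             # [('missing_api_key', 6), ('wrong_date_format', 3)]
print(f"{share:.0%}")  # 90%
```

If the top handful cover most of the log, those are the error messages worth rewriting first.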

Let’s look at an example: this error message from pandas about mutating copies of a dataframe:

```text
SettingWithCopyWarning: A value is trying to be set on a copy of a slice from a DataFrame. Try using .loc[row_indexer,col_indexer] = value instead
```

This message happens when you make a copy of a portion of a dataframe and then try to modify that copy—it’s warning you that the original dataframe will not change when you run this code. Unfortunately, the message shows up at the point of copy modification—not at the point of copying itself, which is where the code needs to change. It can be tough for programmers to find the change point because this error message does not say which line to replace with the .loc syntax. I have needed to explain this one to other data scientists a few times.

For those of you who are here because you searched for that error message specifically, find the line right above where you get this message. In it, you’re trying to change or reassign a variable—say it’s called portion_of_dataframe. Scroll up to where you assign that variable in the first place. You’re doing something like this:

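```python
portion_of_dataframe = all_of_dataframe[all_of_dataframe['some_column'] > some_threshold]
portion_of_dataframe['other_column'] = new_value  # the warning points here
```

Boolean filtering like this returns a copy whether you write it with plain brackets or with `.loc`, so the fix depends on what you meant. If you meant to change the original dataframe, make the change on the original in a single `.loc` call that selects the rows and the column together:

```python
all_of_dataframe.loc[all_of_dataframe['some_column'] > some_threshold, 'other_column'] = new_value
```

If you meant to work with a separate subset, say so explicitly with `.copy()`, and the warning goes away:

```python
portion_of_dataframe = all_of_dataframe[all_of_dataframe['some_column'] > some_threshold].copy()
```

What if that advice were in the pandas error message? The tool might even have the context to say exactly which variable is getting assigned to a copy. That would save developers time and effort.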
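One way a tool could surface the point of copying, not just the point of modification, is to remember where each copy was created and report that location when the copy gets changed. Here’s a minimal standard-library sketch of the idea using a hypothetical `Table` class and `CopyMutationWarning`; it is not how pandas implements its warning.

```python
import traceback
import warnings


class CopyMutationWarning(UserWarning):
    """Hypothetical warning for writes to a detached copy."""


class Table:
    def __init__(self, rows):
        self.rows = [dict(row) for row in rows]  # own copies of every row
        self.copied_at = None  # "file:line" where this copy was made, if it is one

    def where(self, predicate):
        """Return a new Table holding copies of the rows that match."""
        subset = Table(row for row in self.rows if predicate(row))
        # extract_stack(limit=2) returns [caller's frame, this frame];
        # the caller's line is where a fix would have to happen.
        caller = traceback.extract_stack(limit=2)[0]
        subset.copied_at = f"{caller.filename}:{caller.lineno}"
        return subset

    def set_all(self, key, value):
        if self.copied_at is not None:
            warnings.warn(
                "You're changing a copy, so the original Table won't change. "
                f"The copy was made at {self.copied_at}. If you meant to change "
                "the original, update it directly instead of through this copy.",
                CopyMutationWarning,
                stacklevel=2,  # point the warning at the line calling set_all
            )
        for row in self.rows:
            row[key] = value
```

The warning still fires where the copy is modified, but it also names the line where the copy was made, which is the line the programmer actually needs to change.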

Another data exploration library, Streamlit, includes detailed context for a similar error: the `CachedObjectMutationWarning`. Though it, like pandas, struggles to surface the error at the exact change point, it goes to greater lengths to explain where that change point might be. I explain the `CachedObjectMutationWarning` in this livestream (which I have here fast-forwarded for you to the exact relevant timestamp, if you’re interested):

The truth is, very few clients will do advance reading about issues like this before using a tool. But surfacing troubleshooting information at the exact point where the trouble must be shot can make a massive positive difference in developer experience.

OK, so what if none of the above strategies adequately covers the use case we need to document?

In those cases, I still fall back on a plain old, well-indexed directory of external documentation.

Sometimes, I just need to describe all of the available functions and all of the options those functions provide. This will definitely need maintenance, and it’s likely to get out of date. But I do my best to keep it as brief as possible, to index it well (providing meaningful titles and headers so folks can find what they need), and to keep regular maintenance on my to-do list.

And at least if I’ve employed the other documentation methods described above, I have a smaller corpus of external documentation to maintain than I would if I shoved every un-codified thought about my code base into it.

If you liked this piece, you might also like:

The technical debt series (come for the buzzwords, stay for the insights)

This piece on risk-oriented testing (also mentioned above)

This salty piece about pull request descriptions (which made the ‘best of’ list on Pocket in 2020!)
