In my daily work, I’m becoming quite familiar with the ins and outs of using System.Text.Json. For those unfamiliar with this library, it was released along with .NET Core 3.0 as an in-the-box JSON serialisation library.

At its release, System.Text.Json was pretty basic in its feature set, designed primarily for ASP.NET Core scenarios to handle input and output formatting to and from JSON. The library was designed to be performant and reduce allocations for common scenarios. Migrating to System.Text.Json helped ASP.NET Core continue to improve the performance of the framework.

Since that original release, the team continue to expand the functionality of System.Text.Json, supporting more complex user scenarios. In the next major release of the Elasticsearch .NET client, it’s my goal to switch entirely to System.Text.Json for serialisation.

Today, v7.x uses an internalised and modified variant of Utf8Json, a previous high-performance JSON library that sadly is no longer maintained. Utf8Json was initially chosen to optimise applications making a high number of calls to Elasticsearch, avoiding as much overhead as possible.

Moving to System.Text.Json in the next release has the advantage of continuing to get high-performance, low allocation (de)serialisation of our strongly-typed request and response objects. Since its relatively new, it leverages even more of the latest high-performance APIs inside .NET. In addition, it means we move to a Microsoft supported and well-maintained library, which is shipped “in the box” for most consumers who are using .NET Core and so doesn’t require additional dependencies.

That brings us to the topic of today’s post, where I will briefly explore a new performance-focused feature coming in the next release of System.Text.Json (included in .NET 6), source generators. I won’t spend time explaining the motivation for this feature here. Instead, I recommend you read Layomi’s blog post, “Try the new System.Text.Json source generator“, explaining it in detail. In short, the team have leveraged source generator capabilities in the C# 9 compiler to optimise away some of the runtime costs of (de)serialisation.

Source generators offer some extremely interesting technology as part of the Roslyn compiler, allowing libraries to perform compile-time code analysis and emit additional code into the compilation target. There are already some examples of where this can be used in the original blog post introducing the feature.

The System.Text.Json team have harnessed this new capability to reduce the runtime cost of (de)serialisation. One of the jobs of a JSON library is that it must map incoming JSON onto objects. During deserialisation, it must locate the correct properties to set values for. Some of this is achieved through reflection, a set of APIs which let us inspect and work with Type information.

Reflection is powerful, but it has a performance cost and can be relatively slow. The new feature in System.Text.Json 6.x allows developers to enable source generators that perform this work ahead of time during compilation. It’s really quite brilliant as this removes most of the runtime cost of serialising to and from strongly-typed objects.

This post will not be my usual deep dive style. Still, since I have experimented with the new feature I thought it would be helpful to share a real scenario for leveraging System.Text.Json source generators for performance gains.

The Scenario

One of the common scenarios consumers of the Elasticsearch client need to complete is indexing documents into Elasticsearch. The index API accepts a simple request that includes the JSON representing the data to be indexed. The IndexRequest type, therefore, includes a single Document property of a generic TDocument type.

Unlike many other request types defined in the library, when sending the request to the server, we don’t want to serialise the request type itself (IndexRequest), just the TDocument object. I won’t go into the existing code for this here as it’ll muddy the waters, and it’s not that relevant to the main point of this post. Instead, let me explain briefly how this is implemented in prototype form right now, which is not that dissimilar to the current code base anyway.

public interface IProxyRequest

{

void WriteJson(Utf8JsonWriter writer);

}

public class IndexRequest<TDocument> : IProxyRequest

{

public TDocument? Document { get; set; }

public void WriteJson(Utf8JsonWriter writer)

{

if (Document is null) return;

using var aps = new ArrayPoolStream();

JsonSerializer.Serialize(aps, Document);

writer.WriteRawValue(aps.GetBytes());

}

}

We have the IndexRequest type implement the IProxyRequest interface. This interface defines a single method that takes a Utf8JsonWriter. The Utf8Json writer is a low-level serialisation type in System.Text.Json for writing JSON tokens and values directly. The critical concept is that this method delegates the serialisation of a type, to the type itself, giving it complete control over what is actually serialised.

For now, this code uses System.Text.Json serialisation directly to serialise the Document property. Remember, this is the consumer provided type representing the data being indexed.

The final implementation will include passing in JsonSerializerOptions and the ITransportSerializer implementation registered in the client configuration. We need to do this because it allows consumers of the library to provide an implementation of ITransportSerializer. If provided, this implementation is used when serialising their own types, while the client types still use System.Text.Json. It’s vital as we don’t want to force consumers to make their types compatible with System.Text.Json to use the client. They could configure the client with a JSON.Net-based implementation if they prefer.

The above code serialises the document and, thanks to a new API added to the Utf8JsonWriter, can write the raw JSON to the writer using WriteRawValue.

The WriteJson method will be invoked from a custom JsonConverter, and all we have access to is the Utf8JsonWriter. I won’t show that converter here as it’s slightly off-topic. Ultimately, custom JsonConverters and JsonConverterFactory instances can be used to perform advanced customisation when (de)serialising types. In my example, if the type implements IProxyRequest a custom converter is used which calls into the WriteJson method.

This (finally) brings me to one example use case for source generator functionality from System.Text.Json. What if the consumer wants to boost performance by leveraging source generator serialisation contexts when their document is serialised?

In the prototype, I added an Action<Utf8JsonWriter, TDocument> property to the IndexRequest. A consumer can set this property and provide their own serialisation customisation for their document. The developer may write directly into the Utf8Json writer but also leverage the source generator feature if they prefer.

public class IndexRequest<TDocument> : IProxyRequest

{

public TDocument? Document { get; set; }

public Action<Utf8JsonWriter, TDocument>? WriteCustomJson { get; set; }

public void WriteJson(Utf8JsonWriter writer)

{

if (Document is null) return;

if (WriteCustomJson is not null)

{

WriteCustomJson(writer, Document);

return;

}

using var aps = new ArrayPoolStream();

JsonSerializer.Serialize(aps, Document);

writer.WriteRawValue(aps.GetBytes());

}

}

This would be an advanced use case and only neccesary for consumers with particularly high-performance requirements. When an Action is provided, the WriteJson method uses that to perform the serialisation.

To see this in action, imagine the consumer is indexing data about books. For testing, I used a simple POCO types to define the fields of data I want to index.

public class Book

{

public string Title { get; set; }

public string SubTitle { get; set; }

public DateTime PublishDate { get; set; }

public string ISBN { get; set; }

public string Description { get; set; }

public Category Category { get; set; }

public List<Author> Authors { get; set; }

public Publisher Publisher { get; set; }

}

public enum Category

{

ComputerScience

}

public class Author

{

public string? FirstName { get; set; }

public string? LastName { get; set; }

}

public class Publisher

{

public string Name { get; set; }

public string HeadOfficeCountry { get; set; }

}

While these would serialise just fine with no further work, let’s enable source generation. This creates metadata that can be used during serialisation instead of reflecting on the type at runtime. It’s as simple as adding this definition to the consuming code.

[JsonSourceGenerationOptions(PropertyNamingPolicy = JsonKnownNamingPolicy.CamelCase)]

[JsonSerializable(typeof(Book))]

internal partial class BookContext : JsonSerializerContext

{

}

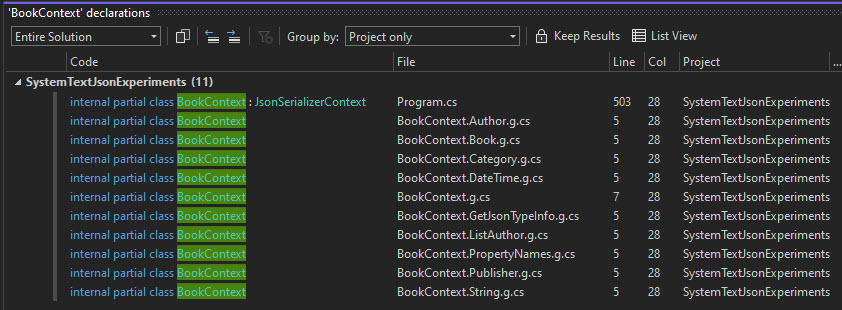

We must include a partial class deriving from JsonSerializerContext and add the JsonSerializable attribute to it which marks it for inclusion in source generation.

The source generator feature runs at compile time to complete the BookContext code. As shown above, we can even provide options controlling the serialisation of the type by adding the JsonSourceGenerationOptions attribute. The JsonSerializerContext contains logic that builds up JsonTypeInfo, shifting the reflection cost to compilation time. This results in several generated files being included in the compilation.

During indexing, the consumer code can then look something like this.

var request = new IndexRequest<Book>()

{

WriteCustomJson = (writer, document) =>

{

BookContext.Default.Book.Serialize!(writer, document);

writer.Flush();

},

Book = = new Book

{

Title = "This is a book",

SubTitle = "It's really good, buy it!",

PublishDate = new DateTime(2020, 01, 01),

Category = Category.ComputerScience,

Description = "This contains everything you ever want to know about everything!",

ISBN = "123456789",

Publisher = new Publisher

{

Name = "Cool Books Ltd",

HeadOfficeCountry = "United Kingdom"

},

Authors = new List<Author>

{

new Author{ FirstName = "Steve", LastName = "Gordon" },

new Author{ FirstName = "Michael", LastName = "Gordon" },

new Author{ FirstName = "Rhiannon", LastName = "Gordon" }

}

}

};

The important part is inside the WriteCustomJson action, defined here using lambda syntax. It uses the default instance of the source generated BookContext, serialising it directly into the Utf8Json writer.

It’s pretty straightforward to introduce this capability, but what benefit does it provide? To compare, I knocked up a quick benchmark that serialises 100 instances of the IndexRequest. This simulates part of the cost of sending 100 API calls to the index API of the server. The results for my test case were as follows.

| Method | Mean [us] | Ratio | Gen 0 | Allocated [B] |

|------------------------ |----------:|------:|--------:|--------------:|

| SerialiseRequest | 396.4 us | 1.00 | 27.3438 | 115,200 B |

| SerialiseUsingSourceGen | 132.3 us | 0.33 | 14.6484 | 61,600 B |

In my prototype, using the System.Text.Json source generator makes the runtime serialisation 3x faster and, in this case, allocates nearly half as much as the alternative case. Of course, the impact will depend on the complexity of the type being (de)serialised, but this is still an exciting experiment. It looks promising to provide a mechanism for consumers to optimise their code with source generators, especially for volume ingestion or retrieval scenarios.

I’ll be researching the benefit of using source generator functionality for the request and response types inside the client. I’m reasonably confident it will provide a good performance boost that we can leverage to make serialisation faster for our consumers. Since this is one of the core activities of a client such as ours, it could be a real benefit that consumers receive just by upgrading. Along with other optimisations, it should make the move to System.Text.Json as the default serialisation well worth the effort.

Have you enjoyed this post and found it useful? If so, please consider supporting me: