NGINX Reverse Proxy Metrics to Monitor

NGINX is one of the most popular web servers, especially on Linux. According to nginx.com, it powers more than 400 million websites. It is, however, probably even more commonly used as a reverse proxy. Since it acts as a go-between for your application and your users, it’s important to properly monitor NGINX metrics. NGINX is open source and available in the package repositories of most Linux distributions. When you’re trying to understand a performance degradation in your application, the issue may actually come from NGINX itself. In this post, we’ll discuss the most important NGINX metrics to monitor when it’s used as a reverse proxy.

Monitoring Can Be Overwhelming

A common approach to monitoring is to install a tool with some built-in dashboards and add even more customized information to it. However, this easily leads to having too much irrelevant information. Having two big screens with all metrics possible on them doesn’t give you a better overview. It gives you a headache. It’ll make spotting the cause of issues harder.

On the other hand, if you pick only the metrics that really tell you something important, you’ll benefit on many fronts. You’ll save time because making quick correlation between metrics A and B will be easier. You’ll save money because fewer metrics means less storage and network traffic needed. Plus, the easier it is for engineers to understand what they’re looking at, the quicker they’ll be able to find what caused the issue and, ultimately, fix it.

But Which Metrics Are “Important”?

It all depends on who you ask. Application developers, operations teams, and product owners or technical leads will each find different metrics useful.

Application Developers

First of all, yes, developers definitely should have access to NGINX metrics. A common misconception is that it’s only relevant for the ops team. So, what can be important for developers?

- $upstream_response_time: First, and probably the most important one, is the metric that tells how much time it took for the application server to respond to the request. Why is this important for the developers? Because the response time will be directly related to the quality of the code and libraries used. With easy access to this metric, developers can improve the performance of the application.

- $request_uri + $status: These two metrics can give developers early insights into any issues that may arise from wrong routing or authentication issues. Whenever, for example, some product might be missing on the website, these two metrics help to spot it (URL to the product + 404 code). Whenever there’s malfunctioning code, these two metrics help identify which request causes Internal Server Error (500).

- $request_uri + $upstream_cache_status: If NGINX is also used as a cache, then developers would benefit from having a look at these two metrics. They’ll tell if there’s a HIT or MISS on the cache for a particular URL. It’s important to make the most use of the cache. Improperly configured cache headers can lead to lots of MISS requests on the cache. When having access to these, developers can easily optimize the headers sent by their applications to increase the amount of cache HITs.
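As a rough illustration of how developers might use these variables, here’s a minimal Python sketch that summarizes access log lines. It assumes a custom, hypothetical log layout (`uri=$request_uri status=$status urt="$upstream_response_time" cache=$upstream_cache_status`), which is not an NGINX default, and the function names are made up for this example.

```python
import re
from collections import Counter, defaultdict

# Hypothetical log line layout (an assumption, not an NGINX default):
#   uri=$request_uri status=$status urt="$upstream_response_time" cache=$upstream_cache_status
LINE_RE = re.compile(
    r'uri=(?P<uri>\S+) status=(?P<status>\d{3}) '
    r'urt="(?P<urt>[^"]*)" cache=(?P<cache>\S+)'
)


def parse_upstream_time(raw):
    """Return the summed upstream time in seconds, or None if no upstream was used.

    NGINX logs '-' when no upstream was contacted and separates the times of
    several upstream attempts with commas or colons.
    """
    parts = [p.strip() for p in re.split(r'[,:]', raw)]
    values = [float(p) for p in parts if p and p != '-']
    return sum(values) if values else None


def summarize(lines):
    errors = Counter()            # (uri, status) -> count of 4xx/5xx responses
    cache = defaultdict(Counter)  # uri -> Counter of cache statuses (HIT, MISS, ...)
    slowest = {}                  # uri -> worst upstream time seen, in seconds
    for line in lines:
        m = LINE_RE.search(line)
        if not m:
            continue  # skip lines that don't match the assumed layout
        uri, status = m['uri'], int(m['status'])
        if status >= 400:
            errors[(uri, status)] += 1
        if m['cache'] != '-':
            cache[uri][m['cache']] += 1
        t = parse_upstream_time(m['urt'])
        if t is not None:
            slowest[uri] = max(t, slowest.get(uri, 0.0))
    return errors, cache, slowest
```

Calling `summarize()` on the lines of an access log returns three small tables: which URLs produce errors, how often each URL hits or misses the cache, and the slowest upstream response seen per URL.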

SRE/Operations Team

Ops teams will benefit most from a different set of metrics than developers. They need to focus more on NGINX itself. Therefore, NGINX performance- and security-related metrics that aren’t relevant for developers will be important here. Let’s discuss a few:

- $connections_active: First, this simple metric provides the total number of active connections. It’s crucial for performance estimation. Based on average and peak numbers of active connections, ops teams can estimate resources needed for NGINX. Also based on those metrics, they can spot sudden spikes in traffic and scale up NGINX instances accordingly.

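- $request_length: This provides the full request size in bytes, including the request line, headers, and body. It’s important for calculating inbound bandwidth and for proper network sizing.

- $request_time in correlation with $upstream_response_time: These two can quickly tell if degraded performance is caused by NGINX or the upstream application. $request_time covers the whole request, from the first bytes read from the client until the response has been sent, while $upstream_response_time covers only the time spent receiving the response from the upstream server. If there’s a big difference between the two, the time is being lost outside the application: NGINX may be struggling or misconfigured, or the client connection may simply be slow.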

- $upstream_connect_time: This tells how much time NGINX spent on establishing a connection with the upstream server. It indicates how stable the connection between NGINX and the proxied server is.
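The comparison between $request_time and $upstream_response_time can be sketched in a few lines of Python. This is only an illustration: the `classify` function and the 0.5-second threshold are assumptions for the example, and both values are expected in seconds, as NGINX logs them.

```python
def classify(request_time, upstream_time, threshold=0.5):
    """Roughly attribute a slow request, given both times in seconds.

    The threshold is an arbitrary example value; tune it to your own traffic.
    """
    overhead = request_time - upstream_time
    if overhead > threshold:
        # Time was spent outside the upstream: in NGINX itself or on a slow client.
        return "nginx-or-client"
    if upstream_time > threshold:
        # Most of the time was spent waiting for the application.
        return "upstream"
    return "ok"
```

For example, `classify(2.0, 0.1)` points away from the application, while `classify(2.0, 1.9)` points at the upstream.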

Tech Lead/Product Owner

Tech leads/product owners don’t need to know what the ops team needs to know. They need to focus on what’s important for the business, which heavily depends on the clients. Therefore, for product owners, metrics that tell them something about clients are much more valuable:

- $http_user_agent: This identifies a specific browser/device. It’s important to get an overview on which devices are used. If 90% of the traffic is coming from mobile devices, then as a product owner, you should focus on mobile features and making the website responsive. If there’s almost nobody using, for example, the Opera browser, you know that you can give lower priority to fixing Opera-related issues.

- $status: A quick look at the HTTP statuses metrics can give a tech lead a general overview on the health of the application. If there are as many 500 errors as 200s, then you know there’s something wrong.
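For a quick overview of these two metrics, something like the following Python sketch could be enough. It’s a deliberately crude heuristic: it treats any user agent containing “Mobi” as mobile, which is a simplifying assumption, and the function names are hypothetical.

```python
from collections import Counter


def device_share(user_agents):
    """Return the fraction of requests per device class (crude 'Mobi' heuristic)."""
    counts = Counter("mobile" if "Mobi" in ua else "other" for ua in user_agents)
    total = sum(counts.values())
    return {kind: n / total for kind, n in counts.items()} if total else {}


def status_classes(statuses):
    """Group HTTP status codes into classes such as '2xx' or '5xx'."""
    return Counter(f"{int(s) // 100}xx" for s in statuses)
```

Feeding in the $http_user_agent and $status values from the access log gives a product owner the mobile share and the proportion of 5xx responses at a glance.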

Different Needs for Different Environments

What about different environments? Should you just use the same metrics for all environments? Well, no, you shouldn’t. You can monitor more metrics on dev environments, and it’ll allow you to better tune the configuration and understand what’s relevant. If you think metrics X and Y are important, but you never look at them, then you’ll know you can skip them in a production environment. Some metrics can be skipped in the development environment too. If you, for example, don’t use the NGINX caching mechanism in development environments (which is a common practice), then you don’t need cache-related metrics.

How to Monitor NGINX on Linux

Now that you know what to monitor, let’s discuss how to monitor it.

nginx_status

The most basic solution. It’s provided by the stub_status module (ngx_http_stub_status_module), which most distribution packages include, so you usually don’t need to install anything extra. You only need to enable it in the configuration, commonly on a location such as /nginx_status. It provides a very simple page out of the box, which shows only very basic information about the number of connections and requests. Why are we mentioning it then? Because most of the tools for monitoring NGINX require the status page to be enabled, and sometimes it’s beneficial to be able to quickly curl your NGINX instance and get basic information.
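The status page is plain text, so it’s easy to turn into numbers. Here’s a minimal Python sketch that parses it; the sample text follows the layout shown in the NGINX stub_status documentation, while the function name and the returned dictionary keys are assumptions for this example.

```python
import re

# Sample output in the layout documented for the stub_status module.
SAMPLE = """Active connections: 291
server accepts handled requests
 16630948 16630948 31070465
Reading: 6 Writing: 179 Waiting: 106
"""


def parse_stub_status(text):
    """Parse the stub_status page into a dictionary of integers."""
    active = int(re.search(r"Active connections:\s+(\d+)", text).group(1))
    accepts, handled, requests = map(
        int, re.search(r"\n\s*(\d+)\s+(\d+)\s+(\d+)", text).groups()
    )
    reading, writing, waiting = map(
        int,
        re.search(
            r"Reading:\s*(\d+)\s+Writing:\s*(\d+)\s+Waiting:\s*(\d+)", text
        ).groups(),
    )
    return {
        "active": active,
        "accepts": accepts,
        "handled": handled,
        "requests": requests,
        "reading": reading,
        "writing": writing,
        "waiting": waiting,
        # Accepted but not handled connections were dropped,
        # for example because a resource limit was reached.
        "dropped": accepts - handled,
    }
```

In practice you would fetch the text from wherever you exposed the status page (for instance with `urllib.request.urlopen`) and pass it to `parse_stub_status()`.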

NGINX Prometheus Exporter

NGINX Prometheus Exporter is a much more advanced solution but also much more difficult to set up. It’s valuable when you’re already using Prometheus to monitor the rest of your infrastructure. It exposes NGINX-specific metrics in a format Prometheus can scrape.

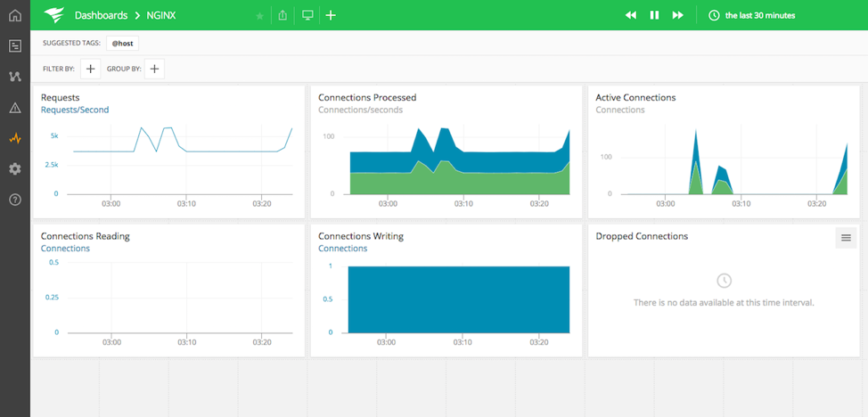

AppOptics

If you don’t feel like setting up the whole Prometheus stack yourself, and nginx_status is simply not enough for you, tools like SolarWinds® AppOptics™ can provide you with a ready-to-use solution. AppOptics isn’t limited to NGINX. It can monitor your whole infrastructure including Linux and Windows environments, but it’s optimized for seamless integration with NGINX, so you won’t need to spend hours to get started. A free trial is available if you’d rather try it than take my word for it.

OK, I Have All the Metrics I Need. Now What?

So, you picked appropriate metrics, and you’ve chosen a tool to monitor NGINX on your Linux servers. The next step is to actually understand your data. Picking only relevant metrics can help you focus, but it won’t automatically solve all your problems. You still need to correlate them yourself. For example, if you see a sudden spike in 500 HTTP errors, it doesn’t automatically mean there’s something wrong. Check your overall traffic. If there’s a spike in traffic, the absolute number of 500s will usually rise too, so look at the error rate rather than the raw count.
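To make the 500s example concrete, here’s a small Python sketch that compares error rates instead of raw error counts. The function names and the factor of 2 are assumptions chosen for illustration.

```python
def error_rate(total_requests, server_errors):
    """Share of requests that ended in a 5xx response."""
    return server_errors / total_requests if total_requests else 0.0


def is_anomalous(baseline, current, factor=2.0):
    """Compare two (total_requests, server_errors) windows.

    Returns True only when the current error rate exceeds the baseline
    rate by the given factor, regardless of how much traffic grew.
    """
    return error_rate(*current) > factor * error_rate(*baseline)
```

With a baseline of 10 errors in 1,000 requests, a window with 50 errors in 5,000 requests has the same 1% error rate and isn’t flagged, while 50 errors in 1,000 requests is.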

Correlating NGINX Metrics With Other Data

The metrics we described here can help you to get the most important information about your NGINX, but since NGINX in reverse proxy mode doesn’t do much on its own, you need to correlate metrics with the rest of the system to get the full overview. You should compare the NGINX metrics with application and infrastructure metrics. For example, if you see long response times from the upstream server, you should look at application-specific metrics to find out why upstream responds slowly. If you see some performance degradation, you should look at underlying infrastructure metrics.
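One simple way to check whether two series move together is a correlation coefficient. The Python sketch below computes Pearson’s correlation between, say, per-minute averages of $upstream_response_time and a hypothetical application metric such as database query time. Both the pairing and the function name are assumptions for illustration, and a high correlation is a hint about where to look, not proof of cause.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equally long numeric series."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two series of equal length with at least 2 points")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # a constant series carries no correlation information
    return cov / sqrt(var_x * var_y)
```

A value close to 1 suggests the upstream slowdown tracks the application metric; a value near 0 suggests looking elsewhere, for example at infrastructure metrics.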

Summary

Choosing only relevant metrics to look at helps you focus on what’s really important. If you suddenly see a spike on 20 different metrics, you won’t be able to quickly understand where the spike comes from. As a developer, you don’t want to be bothered with details and performance counters of NGINX itself. You want to be focused on the application-related metrics. Similarly, as a team lead, you’ll get more valuable insight from user-related information than from infrastructure details. Choosing metrics that help you understand better what’s happening ultimately helps you save time and money.

As for the monitoring solution itself, there aren’t many NGINX-specific tools, but many generic monitoring tools can also monitor NGINX. You should, however, consider one that can provide you with important information without bringing in too many irrelevant numbers and graphs. SolarWinds AppOptics is optimized for monitoring NGINX, the Linux or Windows operating systems, and the entire application stack at the same time. The more data you feed into it, the more correlations it will provide you with.

This post was written by Dawid Ziolkowski. Dawid has 10 years of experience, first as a network/system engineer, then in DevOps, and most recently as a cloud native engineer. He’s worked for an IT outsourcing company, a research institute, a telco, a hosting company, and a consultancy company, so he’s gathered a lot of knowledge from different perspectives. Nowadays he’s helping companies move to the cloud and/or redesign their infrastructure for a more cloud-native approach.