TimescaleDB 2.0 with Hasura

Our #GraphQLJanuary continues with blog posts, live streams, Discord Q&A, office hours, and more. For a schedule of upcoming events, join the community or register at https://hasura.io/graphql/graphql-january/.

In this post, Hasura engineer Toan shares his experience with using Hasura alongside TimescaleDB 2.0 and exploring what works...and what doesn’t. Learning from the experience of others exploring workarounds to achieve their goals is always instructive.

This post is part of a 3-part series exploring TimescaleDB with Hasura.

TimescaleDB is an open-source database designed to make SQL scalable for time-series data. The most valuable features of TimescaleDB is hypertable, a high-level table that provides automatic partitioning across time and space (partitioning key).

TimescaleDB 2.0 is a major version upgrade that has many improvements from version 1. It introduces new interesting features and capabilities, especially horizontal multi-node scaling that can solve the limitation of write performance.

Because it is a PostgreSQL extension, it mostly works well with Hasura. However, there are several limitations. This article will share some known issues and workarounds.

The example code is uploaded on Github.

Migrations and Breaking changes

From 1.x to 2.0

To upgrade new version, you can read the official guide here

The following table shows syntax comparison between TimescaleDB 1.7 and 2.0:

| 1.7 | 2.0 | Comment |

|---|---|---|

| add_compress_chunks_policy | add_compression_policy | Add compression policy |

| remove_compress_chunks_policy | remove_compression_policy | Remove compression policy |

| add_drop_chunks_policy | add_retention_policy | Add retention policy |

| remove_drop_chunks_policy | remove_retention_policy | Remove retention policy |

| CREATE VIEW ... WITH (timescaledb.continuous) | CREATE MATERIALIZED VIEW ... (timescaledb.continuous); SELECT add_continuous_aggregate_policy(...); | create materialized view (continuous aggregate) |

| DROP VIEW <view_name> CASCADE | DROP MATERIALIZED VIEW <view_name> | Drop materialized view (continuous aggregate) |

| SELECT * FROM timescaledb_information.hypertables WHERE table_name = '<table_name>' | SELECT * FROM hypertable_size('<table_name>') | Get hypertable size |

| ^ | SELECT hypertable_index_size('<table_name>') | Get hypertable index size |

| ^ | SELECT hypertable_detailed_size('<table_name>') | Get hypertable index detailed size |

| SELECT * FROM timescaledb_information.compressed_chunk_stats WHERE hypertable_name = '<table_name>' | SELECT * FROM hypertable_compression_stats('<table_name>') | Get compression stats |

Many of the functions and SQL syntaxes are renamed. timescaledb_information view structures are very different. You can't list all hypertable sizes with timescaledb_information.hypertables, timescaledb_information.compressed_hypertable_stats but SELECT each table with hypertable_size, hypertable_compression_stats functions.

In version 2.0, there are 2 new interesting features:

- Scheduled job. Now you can run cronjob in Postgres to do many things, such as automatically refreshing Materialized view.

- Multi-node. It helps us scaling read/write into multiple data nodes.

Because of the scheduled job. Continuous Aggregate Materialized View has a big refactor, as well as breaking changes, that we will explore more in the next section.

Materialized View (Continuous Aggregate)

Continuous Aggregate Materialized View is Materialized View with auto refresh and partitioning. From a migration point of view, high level SQL definition is translated to internal statements.

Input:

CREATE MATERIALIZED VIEW conditions_summary_minutely

WITH (timescaledb.continuous) AS

SELECT time_bucket(INTERVAL '1 minute', time) AS bucket,

AVG(temperature),

MAX(temperature),

MIN(temperature)

FROM conditions

GROUP BY bucket;

Output:

CREATE OR REPLACE VIEW "public"."conditions_summary_minutely" AS

SELECT

_materialized_hypertable_5.bucket,

_timescaledb_internal.finalize_agg(

'avg(double precision)' :: text,

NULL :: name,

NULL :: name,

'{{pg_catalog,float8}}' :: name [],

_materialized_hypertable_5.agg_2_2,

NULL :: double precision

) AS avg,

_timescaledb_internal.finalize_agg(

'max(double precision)' :: text,

NULL :: name,

NULL :: name,

'{{pg_catalog,float8}}' :: name [],

_materialized_hypertable_5.agg_3_3,

NULL :: double precision

) AS max,

_timescaledb_internal.finalize_agg(

'min(double precision)' :: text,

NULL :: name,

NULL :: name,

'{{pg_catalog,float8}}' :: name [],

_materialized_hypertable_5.agg_4_4,

NULL :: double precision

) AS min

FROM

_timescaledb_internal._materialized_hypertable_5

WHERE

(

_materialized_hypertable_5.bucket < COALESCE(

_timescaledb_internal.to_timestamp(_timescaledb_internal.cagg_watermark(5)),

'-infinity' :: timestamp with time zone

)

)

GROUP BY

_materialized_hypertable_5.bucket

UNION ALL

SELECT

time_bucket('00:01:00' :: interval, conditions."time") AS bucket,

avg(conditions.temperature) AS avg,

max(conditions.temperature) AS max,

min(conditions.temperature) AS min

FROM

conditions

WHERE

(

conditions."time" >= COALESCE(

_timescaledb_internal.to_timestamp(_timescaledb_internal.cagg_watermark(5)),

'-infinity' :: timestamp with time zone

)

)

GROUP BY

(

time_bucket('00:01:00' :: interval, conditions."time")

);

It's frustrating to reuse definitions in console if you lose the original script. Moreover, it doesn't support CREATE OR REPLACE to replace current definition, so you need to DROP and CREATE the view.From TimescaleDB 2.0, the new API and framework for continuous aggregates separates policy from the core functionality (#2118). The continuous view creation syntax is changed:

-- old syntax

CREATE VIEW conditions_summary_minutely

WITH (timescaledb.continuous,

timescaledb.refresh_lag = '1h',

timescaledb.refresh_interval = '1h')

AS

SELECT time_bucket(INTERVAL '1 minute', time) AS bucket,

AVG(temperature),

MAX(temperature),

MIN(temperature)

FROM conditions

GROUP BY bucket;

-- new syntax

CREATE MATERIALIZED VIEW conditions_summary_minutely

WITH (timescaledb.continuous) AS

SELECT time_bucket(INTERVAL '1 minute', time) AS bucket,

AVG(temperature),

MAX(temperature),

MIN(temperature)

FROM conditions

GROUP BY bucket;

-- the continuous aggregate policy is a separated job

SELECT add_continuous_aggregate_policy('conditions_summary_minutely',

start_offset => INTERVAL '2 h',

end_offset => INTERVAL '1 h',

schedule_interval => INTERVAL '1 h');

The new continuous view separates continuous aggregate policy into another function add_continuous_aggregate_policy instead of parameters in one CREATE VIEW statement. Moreover, it leads another issue:

CREATE MATERIALIZED VIEW ... WITH DATA cannot run inside a transaction block

FatalError: ... cannot run inside a transaction block

There are functions that require running in AUTOCOMMIT mode. Every statement is automatically committed once submitted. psql turns on autocommit by default. Ever notice that psql executes SQL statements one by one? AFAIK, this error is thrown in these operations:

- Admin commands: CREATE/DROP database, tablespace,...

- CREATE INDEX CONCURRENTLY

Now, this error appears on new Continuous materialized view too. It isn't a problem if you create views with psql. However, Hasura migration CLI becomes unusable, as it uses transaction to apply migration files.

It isn't impossible to run a migration with database driver, if it satisfies both conditions:

- Executes SQL statement without transaction.

- Only one statement per request.

Unfortunately it is hard to change GraphQL Engine source code, because it relates to core functionality of the engine. So, I came up with an idea. It is easier to customize the CLI with native Go's lib/pq option.

This is a hack, so it isn't official in the main upstream. You can download the customized CLI here, or try it in the single node example.

hasura migrate apply --disable-transaction --database-url "<url>"

There are also caveats:

- Migration files are applied right away one by one, not applied as bulk SQL in one transaction. Therefore the migration can't be canceled. For example, you apply 3 migrations

A, B, C. If there is any error in migrationC, AandBwere still applied. - There must be only 1 SQL statement in

up.sqlif you use a special statement such asCREATE MATERIALIZED VIEW.

GraphQL

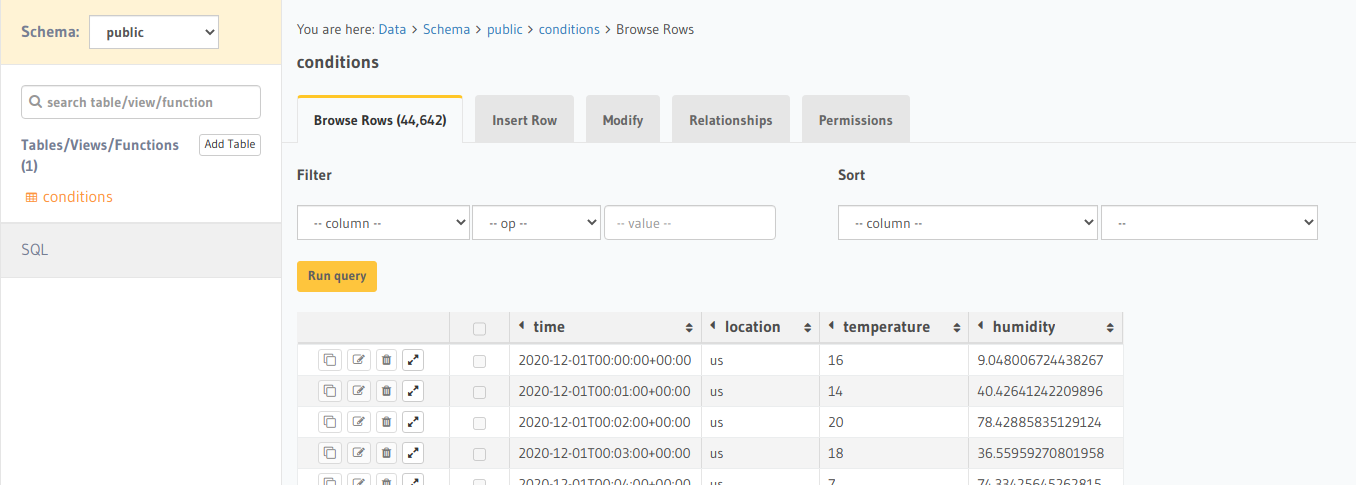

Because TimescaleDB is an extension of Postgres, it is compatible with GraphQL Engine. However, there are several limitations of TimescaleDB that affect Hasura:

- Hypertable doesn't require a Primary key. Therefore

<hypertable_name>_by_pkqueries, mutations and subscriptions are disabled. - GraphQL Engine doesn't support

UPSERTforhypertable. In fact, TimescaleDB supports UPSERT , but with infer indexes only. Meanwhile popular Postgres query builder engines, ORMs useON CONFLICT ON CONSTRAINT constraint_namestatement. - Foreign key can't be point into a hypertable (although that data model isn't very common). The common is for the hypertable to have a foreign key to point to a relational table (think an

object_idin the time-series is a Foreign Key to a metadata table about the object). - Although we can create a manual relationship between hypertables, the query performance should be considered.

(Thank Mike Freedman (@michaelfreedman) for the feedback)

Console and Hasura CLI

TimescaleDB SQL API isn't supported by the Hasura console. We have to use Raw SQL or create migration manually. In theory hypertable is the high level of the table, we can create it in console. However, hypertable uses timestamp or number column as partition key. It requires including that column as primary key. Therefore we have to ignore primary key or use composite keys, and in practice we choose ignoring it. The issue is, Hasura console forces Primary key on table creation #6235. Manual migration creation is unavoidable.

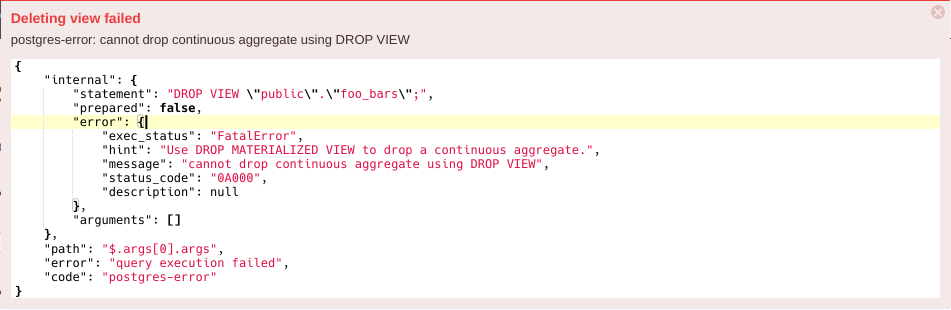

However, Continuous Aggregate View can't be deleted by console UI. Behind the scene it requests DROP VIEW SQL execution. The correct statement is DROP MATERIALIZED VIEW.

Due to the optional Primary key in hypertable, we can't view detail, update and delete rows in the data table.

Therefore, most TimescaleDB functions have to be run in raw SQL. The console doesn't have much help here.

Should I upgrade?

Yes, if you aren't afraid of migration breaking changes. The new Continuous Materialized View is also another concern.

TimescaleDB 2.0 is also worth to upgrade with Multi-node scaling solution. We will explore in the next part of series coming soon.