The code that accompanies this article can be found here.

In this article, we explore how to prepare a machine learning model for production and deploy it inside a simple web application. Deploying machine learning models is an art in itself. In fact, putting a machine learning model into production successfully goes beyond data science knowledge and draws on a lot of software development and DevOps skills. Why should you care about all this? Well, at the moment, one of the most valued roles in data science teams is the Machine Learning engineer. This role gathers the best of both worlds.

These engineers need to know not only how to apply different Machine Learning and Deep Learning models to the right problem, but also how to test them, verify them and finally deploy them. A person who is able to put machine learning models into production is a huge asset to any company. This is, in general, the main type of service that Rubik’s Code provides. To become a successful Machine Learning engineer, you need a variety of skills that are not focused only on the data. In fact, the amount of code written for the Machine Learning model itself is typically much smaller than the amount of code that supports testing and serving that model.


MLOps, an engineering discipline built around this need, is becoming a new trend. That is why in this article we focus on several techniques you need to know in order to build a machine learning application that users will enjoy. The complete solution provided in this tutorial is composed of two components: server-side and client-side. This is the basic web architecture, where the client-side interacts with the user of the application and sends the user’s input to the server-side. The server-side processes the data, or in this case runs the predictions, and returns the result to the client-side, which presents it to the user. In essence, in this article we build a small system that can be represented with the following image:


In order to cover all that we need to cover several topics:

1. Installations and Dataset

To successfully run the examples from this tutorial, Python 3.6 or higher needs to be installed. The easiest way to do that is to use the Anaconda distribution. It comes with the other libraries needed for this tutorial, like Pandas, NumPy, scikit-learn, etc. To install FastAPI and all its dependencies, use the following command:

    pip install "fastapi[all]"

This includes Uvicorn, an ASGI server that runs your code. If you are more comfortable with some other ASGI server like Hypercorn, that is also fine and you can use it for this tutorial.

For the web application, we use Angular. For that, you need to install Node.js and npm. The most common way to work with the Angular framework is through the Angular Command Line Interface, Angular CLI. One of the benefits of this tool is that, once we initialize our application with it, we can write TypeScript and it is automatically compiled to JavaScript. The CLI is installed through npm by running the command:

    npm install -g @angular/cli

When you want to create a new Angular application, you can do so with the command:

    ng new application_name

This command creates the folder structure used by our application. To run the application, move into the newly created root folder (cd application_name) and run:

    ng serve

If you followed the previous steps, you can open localhost:4200 in your browser and see the default Angular application.

The data that we use in this article comes from the PalmerPenguins dataset. This dataset was recently introduced as an alternative to the famous Iris dataset. It was created by Dr. Kristen Gorman and the Palmer Station, Antarctica LTER. You can obtain this dataset here, or via Kaggle. The dataset is essentially composed of two datasets, each containing data for 344 penguins. Just like in the Iris dataset, there are 3 different species, in this case penguins coming from 3 islands in the Palmer Archipelago. Also, these datasets contain culmen dimensions for each species. The culmen is the upper ridge of a bird’s bill. In the simplified penguin data, culmen length and depth are stored in the variables culmen_length_mm and culmen_depth_mm. Here is the dataset:

2. REST API Basics

Before we start with the implementation of the web application with Python and FastAPI, let’s first find out what a REST API is. I believe that you have seen this term once or twice in your life. The second part of the term, API, stands for application programming interface. Essentially, an API is the set of rules that programs use to communicate with each other. For example, in the server-client architecture, the server side of the application is programmed in a way that exposes methods that can be called by the client side of the application. This means that the client can call a method on the server from its own code and get a certain result from it. REST stands for “Representational State Transfer”. It is a set of rules that developers should follow when they build their APIs. It defines what the API should look like, so that APIs are standardized.

One of the rules states that data or resources can be retrieved by requesting a specific URL. For example, you can request ‘api.rubikscode.com/blogs’ and get the list of blogs back. Calling the URL ‘api.rubikscode.com/blogs’ is a request, and the list of blogs that you get back is a response. Every request is composed of 4 parts:

- The Endpoint (route) – This is the URL we mentioned previously. It is structured like this – “root-endpoint/?”. The root-endpoint is the starting point, and it can be followed by the path and query parameters. The path defines which specific resource is requested. For example, the root-endpoint of GitHub’s API is ‘api.github.com’, while the full endpoint for the list of my repositories on GitHub is https://api.github.com/users/nmzivkovic/repos.

- The Method – The method defines the type of request being sent. There are five commonly used methods:

  - GET – Used to get or read information.

  - POST – Used to create a new resource.

  - PUT and PATCH – Used to update resources.

  - DELETE – Deletes a resource.

- The Headers – Headers provide additional information to both the client and the server in the form of property-value pairs. A list of valid headers can be found in MDN’s HTTP Headers Reference.

- The Body – This section contains information that the client sends to the server. It is not used in GET requests.
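To make the four parts concrete, here is a minimal sketch that assembles (but does not send) a request with Python’s standard library. The URL reuses the ‘api.rubikscode.com/blogs’ example from above purely for illustration; the query parameter, the header values and the JSON body are hypothetical.

```python
import json
from urllib.parse import urlencode, urlsplit
from urllib.request import Request

# Endpoint: root-endpoint + path + query parameters (the query is hypothetical).
root_endpoint = "https://api.rubikscode.com"
path = "/blogs"
query = urlencode({"page": 1})
url = f"{root_endpoint}{path}?{query}"

# Body: data the client sends to the server, serialized as JSON (hypothetical fields).
body = json.dumps({"title": "Deploying ML models"}).encode("utf-8")

# Headers: property-value pairs describing the request.
headers = {"Content-Type": "application/json", "Accept": "application/json"}

# Method: POST asks the server to create a new resource.
request = Request(url, data=body, headers=headers, method="POST")

parts = urlsplit(request.full_url)
print(request.get_method())                # the method
print(parts.path, parts.query)             # path and query of the endpoint
print(request.get_header("Content-type"))  # one of the headers
print(request.data)                        # the body
```

Nothing is sent over the network here; the object only describes what a client would transmit.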

What we want to create in this article is a web server that serves a model for penguin species predictions. We want to build an API through which the client-side of the application can get predictions from the model. That is done using the Python framework FastAPI.

2. FastAPI Basics

In this article, we use FastAPI to build the REST API. Why do we use this library? There are several reasons. FastAPI is generally reported to be faster than other major Python frameworks like Flask and Django. Also, this framework supports asynchronous code out of the box using Python’s async/await keywords, which can improve throughput further for I/O-bound work. Probably the most distinct feature that makes FastAPI one of the best API libraries out there is its built-in interactive documentation. We explore this feature in more detail a little bit later. Finally, applications built using FastAPI are very easy to test and deploy. Because of all of this, FastAPI has become a popular choice for building web APIs with Python.
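The benefit of async/await is easiest to see with I/O-bound work. The sketch below uses only Python’s asyncio, not FastAPI itself, and the handler name and delays are made up: three simulated requests that each wait on I/O overlap instead of running one after another.

```python
import asyncio

events = []

async def handle_request(name: str, delay: float) -> str:
    """Hypothetical handler that waits on I/O (simulated with asyncio.sleep)."""
    events.append(f"start {name}")
    await asyncio.sleep(delay)  # control returns to the event loop while waiting
    events.append(f"end {name}")
    return f"{name} done"

async def main() -> list:
    # gather() runs the three coroutines concurrently on one event loop.
    return await asyncio.gather(
        handle_request("a", 0.03),
        handle_request("b", 0.02),
        handle_request("c", 0.01),
    )

results = asyncio.run(main())
print(results)
print(events)
```

All three handlers log their start before any of them finishes, which is the overlap that lets a single worker serve other requests while one is waiting.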

2.1 First FastAPI Application

Ok, let’s build our first FastAPI application. The cool thing is that a simple HelloWorld example with FastAPI can be created with 5 lines of code. I am not joking. Are you ready? Here is what you need to do:

- Create a new folder and name it ‘my_first_fastapi’ (or whatever you feel like it :))

- Create a new Python script called main.py (or whatever you feel like it :))

- Add the following code to the main.py:

    from fastapi import FastAPI

    app = FastAPI()

    @app.get("/")
    async def root():
        return {"message": "Hello World"}

- Go to the terminal and move into the my_first_fastapi folder

- Run this command:

    uvicorn main:app --reload

    INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
    INFO: Started reloader process [28720]
    INFO: Started server process [28722]
    INFO: Waiting for application startup.
    INFO: Application startup complete.

Awesome, now you have a web server running at http://127.0.0.1:8000. If you go to this address in your browser you will see something like this:

Notice the @app.get("route") decorator in the code above? This decorator determines the type of request that can be issued on a particular endpoint. For example, if we put @app.get("/users") above a function, we define a REST API endpoint “/users” on which we can issue a GET HTTP request.

2.2 FastAPI Documentation

One of the coolest things about FastAPI is built-in interactive documentation. It is presented with Swagger UI. If we use the example that we have just built and go to the http://127.0.0.1:8000/docs we will see the documentation page for the API:

You can click on any of the endpoints to explore it further. Probably the biggest benefit of this documentation is that you can perform live in-browser tests by clicking on the Try it out button.

I know, right?!🤯

Ok, back to our application and let’s see how we can utilize FastAPI for Machine Learning Deployment.

3. Client-side & User Interface

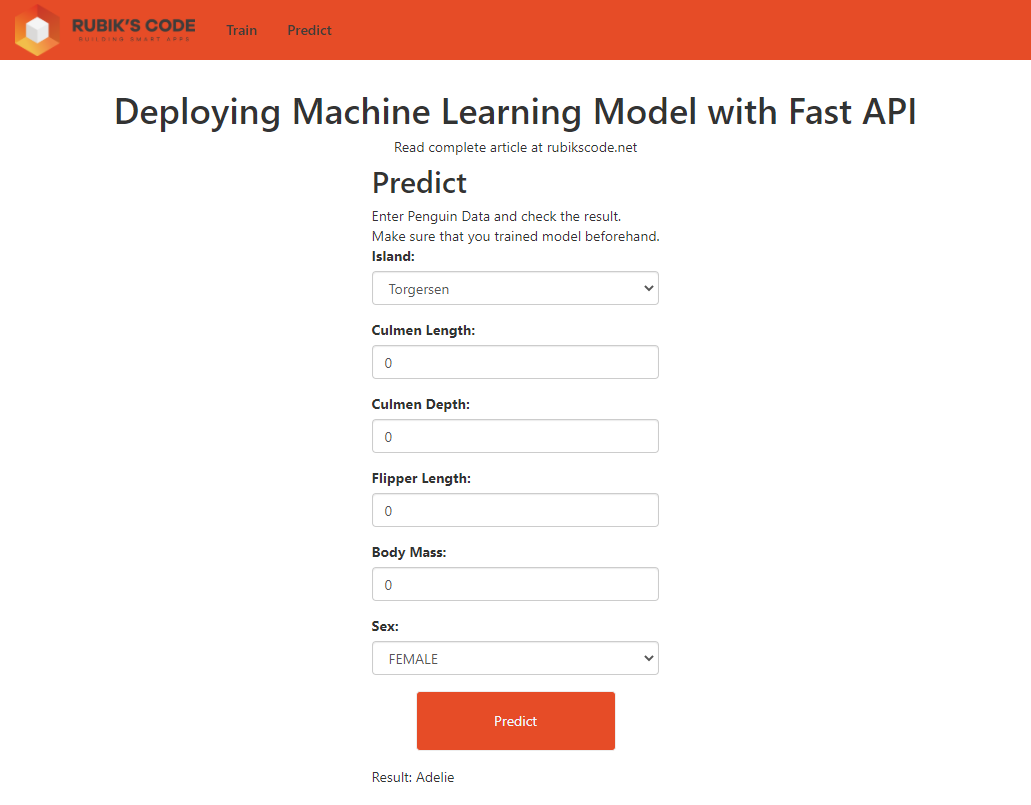

Ok, in order for the user to communicate with our model, we need some sort of user interface. There are many frameworks and technologies out there that you can use for that. Here you can see how we have done a similar thing with Flask and Python. In this tutorial, we use Angular to build a web application that users can interact with. Here is what it looks like:

We will not go into the details of the implementation too much here, since the focus is on FastAPI and the Machine Learning model. In essence, there are two items in the toolbar of the application, Train and Predict, which lead to two web pages with the same names. The whole thing is implemented within two components: the train component and the predict component. There is one service too, fastapi.service. This service contains the calls to the REST API that our server-side implements.

In the train tab, you are able to pick the model that you want to train, pick the data that you want to experiment with (provided it is formatted like the PalmerPenguins dataset), and define the size of the test dataset. Once you press the train button, an HTTP request with this information is sent to the server-side, which is expected to train the chosen machine learning model.

In the predict tab, the user is able to make predictions with the machine learning model that was trained via the previous tab. Here the user can enter the parameters that describe a penguin for which they want a prediction. Once the Predict button is pressed, this information is sent to the server, which uses the machine learning model to make a prediction and sends it back to the user.

To run this application (we assume that you have cloned the GitHub repo), open a terminal, move into train_solution\client and run these commands:

    npm install
    ng serve

Once this is done, the web app will be available at localhost:4200. Ok, let’s see what the server-side implementation looks like.

3. Solution with Model Training

The server solution is composed of several components that can be found in files in the train_solution\server folder. The overall architecture looks something like this:

Let’s explore each component.

3.1 Data Contracts

The train_parameters.py file contains the model that is passed from the train tab of the web application. Essentially, this file contains a data contract that is used when an HTTP request from the client comes to our server.

    from pydantic import BaseModel

    class TrainParameters(BaseModel):
        model: str
        path: str
        testsize: float

Note that we use Pydantic, a data validation and settings management library based on type annotations. This library enforces type hints at runtime and provides user-friendly errors when data is invalid. We use the same library in penguin_sample.py, in which we define the data contract that is used when the HTTP request is sent from the predict page of the web application:

    from pydantic import BaseModel

    class PenguinSample(BaseModel):
        island: str
        culmenLength: float
        culmenDepth: float
        flipperLength: float
        bodyMass: float
        sex: str
        species: str

        def __getitem__(self, item):
            return getattr(self, item)

3.2 Data Loader

This class is used to load and prepare the data. Here is what it looks like:

    import pandas as pd
    import numpy as np
    from sklearn.preprocessing import StandardScaler
    from sklearn.model_selection import train_test_split

    from penguin_sample import PenguinSample

    class DataLoader():
        def __init__(self, test_size = 0.2, scale = True):
            self.test_size = test_size
            self.scale = scale
            self.scaler = StandardScaler()
            self._island_map = {}
            self._sex_map = {}

        def load_preprocess(self, path):
            data = pd.read_csv(path)
            data = self._feature_engineering_pipeline(data)

            # Keep the numeric measurements plus the encoded island_cat and sex_cat columns.
            X = data.drop(['species', "island", "sex"], axis=1)
            if(self.scale):
                # Fit the scaler here so that prepare_sample can reuse the same statistics.
                X = self.scaler.fit_transform(X)

            y = data['species']
            spicies = {'Adelie': 0, 'Chinstrap': 1, 'Gentoo': 2}
            y = [spicies[item] for item in y]
            y = np.array(y)

            X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=self.test_size,
                                                                random_state=33)
            return X_train, X_test, y_train, y_test

        def prepare_sample(self, raw_sample: PenguinSample):
            island = self._island_map[raw_sample.island]
            sex = self._sex_map[raw_sample.sex]
            sample = [raw_sample.culmenLength, raw_sample.culmenDepth, raw_sample.flipperLength,
                      raw_sample.bodyMass, island, sex]

            # One row with six features, in the same column order as the training data.
            sample = np.array(sample, dtype=float).reshape(1, -1)
            if(self.scale):
                # Reuse the statistics fitted on the training data; refitting on a
                # single sample would throw them away.
                sample = self.scaler.transform(sample)
            return sample

        def _feature_engineering_pipeline(self, data):
            # Fill missing numeric measurements with the column mean.
            for column in ['culmen_length_mm', 'culmen_depth_mm', 'flipper_length_mm', 'body_mass_g']:
                data[column] = data[column].fillna(data[column].mean())

            # Encode the categorical columns as integer codes.
            data["species"] = data["species"].astype('category')
            data["island"] = data["island"].astype('category')
            data["sex"] = data["sex"].astype('category')
            data["island_cat"] = data["island"].cat.codes
            data["sex_cat"] = data["sex"].cat.codes

            # Remember the category-to-code mappings for encoding new samples.
            self._island_map = dict(zip(data['island'], data['island'].cat.codes))
            self._sex_map = dict(zip(data['sex'], data['sex'].cat.codes))
            return data

As parameters, the constructor receives the test dataset size and a flag that indicates whether the data should be scaled or not. There are two public methods and one private method in this class:

- _feature_engineering_pipeline – This method does some light feature engineering on the existing set. Namely, missing data is filled in and categorical data is encoded. If you want to learn more about feature engineering, check out this blog post.

- load_preprocess – This method does the heavy lifting: it loads the data from the defined path, scales it if requested, and splits it into training and test datasets.

- prepare_sample – When a new sample comes into our system, we need to process it in the same way we processed the training data. That is why we use the prepare_sample method before we make predictions.
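Why prepare_sample has to reuse what was learned during training can be shown with a small plain-Python sketch. It mimics standardization with the standard library only (it is not scikit-learn’s implementation), and the numbers are made up: the mean and standard deviation come from the training column, and the same values are then applied to a new sample.

```python
from statistics import mean, pstdev

# Made-up training values for one feature, e.g. body mass in grams.
train_body_mass = [3000.0, 4000.0, 5000.0]

# "Fit": learn the statistics from the training data only.
mu = mean(train_body_mass)
sigma = pstdev(train_body_mass)  # population standard deviation

def transform(value: float) -> float:
    """Scale a value with the statistics learned from the training data."""
    return (value - mu) / sigma

# A new sample is scaled relative to the training distribution.
print(transform(4000.0))      # 0.0, exactly the training mean
print(transform(5000.0) > 0)  # True, heavier than the average training penguin
```

Refitting the statistics on a single incoming sample would instead give a standard deviation of zero, so the sample would carry no information relative to the training data.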

3.3 Model Trainer

This component is in charge of training the model. Through the constructor, it receives information about the algorithm that the user picked and it handles it from there on. Here is what it looks like:

    from data_loader import DataLoader
    from sklearn.svm import SVC
    from sklearn.linear_model import LogisticRegression
    from sklearn.tree import DecisionTreeClassifier
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score

    from penguin_sample import PenguinSample

    class ModelTrainer():
        def __init__(self, algoritm, test_size=0.2):
            # test_size is forwarded to the DataLoader so the value chosen in the UI is used.
            if(algoritm == 'svm'):
                self.data_loader = DataLoader(test_size=test_size)
                self.model = SVC(kernel="rbf", gamma=0.1, C=500, verbose=True)

            if(algoritm == 'logistic regression'):
                self.data_loader = DataLoader(test_size=test_size)
                self.model = LogisticRegression(C=1e20, verbose=True)

            # Tree-based models are trained on unscaled data.
            if(algoritm == 'decision tree'):
                self.data_loader = DataLoader(test_size=test_size, scale = False)
                self.model = DecisionTreeClassifier(max_depth=5)

            if(algoritm == 'random forest'):
                self.data_loader = DataLoader(test_size=test_size, scale = False)
                self.model = RandomForestClassifier(n_estimators=11, max_leaf_nodes=16, n_jobs=-1,
                                                    verbose=True)

        def train(self, path):
            X_train, X_test, y_train, y_test = self.data_loader.load_preprocess(path)
            self.model.fit(X_train, y_train)

            # Evaluate on the held-out test split.
            predictions = self.model.predict(X_test)
            return accuracy_score(y_test, predictions)

        def predict(self, data: PenguinSample):
            prepared_sample = self.data_loader.prepare_sample(data)
            return self.model.predict(prepared_sample)

In the constructor, the correct model is instantiated. As you can see, the classes from scikit-learn are used. Apart from that, an instance of DataLoader is created. In the train method, the data is retrieved and the model is trained. Once that is done, the accuracy score is calculated and returned to the caller. The predict method, on the other hand, receives a new sample and adapts it to the model using the data loader instance. Then the model’s predict method is called and the result is returned to the caller.
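One possible variation, sketched below under the assumption that scikit-learn is installed, replaces the chain of if statements with a lookup table and fails loudly on an unknown algorithm name. The MODEL_REGISTRY name and the build_model helper are hypothetical and not part of the accompanying code; the hyperparameters are copied from the class above.

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Hypothetical registry: algorithm name -> (model factory, whether to scale the data).
MODEL_REGISTRY = {
    "svm": (lambda: SVC(kernel="rbf", gamma=0.1, C=500), True),
    "logistic regression": (lambda: LogisticRegression(C=1e20), True),
    "decision tree": (lambda: DecisionTreeClassifier(max_depth=5), False),
    "random forest": (
        lambda: RandomForestClassifier(n_estimators=11, max_leaf_nodes=16, n_jobs=-1),
        False,
    ),
}

def build_model(algorithm: str):
    """Return (model, scale) for a known algorithm name, or raise ValueError."""
    try:
        factory, scale = MODEL_REGISTRY[algorithm.lower()]
    except KeyError:
        raise ValueError(f"Unknown algorithm: {algorithm!r}") from None
    return factory(), scale

model, scale = build_model("Decision Tree")
print(type(model).__name__, scale)
```

With a table like this, adding a new algorithm is a one-line change, and a misspelled name produces an error instead of an object without a model.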

3.4 REST API Module

The most important file is the main.py file. This file puts all other pieces together and builds the REST API with FastAPI. Here is what that looks like:

    from fastapi import FastAPI
    from fastapi.middleware.cors import CORSMiddleware

    from model_trainer import ModelTrainer
    from train_parameters import TrainParameters
    from penguin_sample import PenguinSample

    origins = [
        "http://localhost:8000",
        "http://localhost:4200"
    ]

    app = FastAPI()
    app.add_middleware(
        CORSMiddleware,
        allow_origins=origins,
        allow_credentials=True,
        allow_methods=["*"],
        allow_headers=["*"],
    )

    app.model = ModelTrainer('svm')

    @app.post("/train")
    async def train(params: TrainParameters):
        print("Model Training Started")
        app.model = ModelTrainer(params.model.lower(), params.testsize)
        accuracy = app.model.train(params.path)
        return accuracy

    @app.post("/predict")
    async def predict(data:PenguinSample):
        print("Predicting")
        spicies_map = {0: 'Adelie', 1: 'Chinstrap', 2: 'Gentoo'}
        species = app.model.predict(data)
        return spicies_map[species[0]]

First, we import all the necessary libraries. Since our server runs at http://localhost:8000 and the client at http://localhost:4200, we need to handle CORS policies. That is why we import CORSMiddleware. This class is utilized during app initialization:

    app = FastAPI()
    app.add_middleware(
        CORSMiddleware,
        allow_origins=origins,
        allow_credentials=True,
        allow_methods=["*"],
        allow_headers=["*"],
    )

    app.model = ModelTrainer('svm')

Note that we added an instance of ModelTrainer there. This instance is just a placeholder and it is replaced later on. There are two endpoints, “/train” and “/predict”, both accepting POST HTTP requests, and two functions that handle these requests. The train function creates a new instance of ModelTrainer based on the parameters received from the client. Then it runs the training of the model and returns the accuracy of the model:

    @app.post("/train")
    async def train(params: TrainParameters):
        print("Model Training Started")
        app.model = ModelTrainer(params.model.lower(), params.testsize)
        accuracy = app.model.train(params.path)
        return accuracy

The predict function receives data from the predict tab of the web application. It calls the predict method of the ModelTrainer and returns the predicted value:

    @app.post("/predict")
    async def predict(data:PenguinSample):
        print("Predicting")
        spicies_map = {0: 'Adelie', 1: 'Chinstrap', 2: 'Gentoo'}
        species = app.model.predict(data)
        return spicies_map[species[0]]

3.5 Testing the server-side

In order to run this solution, open a terminal, and go to the train_solution\server folder. Run this command:

    uvicorn main:app --reload

    INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
    INFO: Started reloader process [28720]
    INFO: Started server process [28722]
    INFO: Waiting for application startup.
    INFO: Application startup complete.

Once the app is running, go to localhost:8000/docs in the browser. You should see something like this:

First, let’s test the train endpoint. Expand it and click on the Try it out button:

As the request body, pass this JSON object:

    {
      "model": "svm",
      "path": "./data/penguins_size.csv",
      "testsize": 0.2
    }

Then click on the Execute button:

Here is what we get as a response:

In a similar way, we can test the predict endpoint. Try it out!
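The same endpoints can also be called from code instead of the Swagger UI. The sketch below only builds the POST request for “/predict” with the standard library; the field names follow the PenguinSample contract, while the measurement values and the empty species placeholder are made-up example data. Sending it (the commented-out lines) assumes the server from this section is running on localhost:8000 and a model has been trained.

```python
import json
from urllib.request import Request, urlopen

def build_predict_request(sample: dict, base_url: str = "http://localhost:8000") -> Request:
    """Build a JSON POST request for the /predict endpoint."""
    return Request(
        f"{base_url}/predict",
        data=json.dumps(sample).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

# Made-up example values; the keys mirror the PenguinSample fields.
sample = {
    "island": "Biscoe",
    "culmenLength": 45.0,
    "culmenDepth": 15.0,
    "flipperLength": 210.0,
    "bodyMass": 5000.0,
    "sex": "FEMALE",
    "species": "",
}

request = build_predict_request(sample)
print(request.get_method(), request.full_url)

# Uncomment once the server is running and a model has been trained:
# with urlopen(request) as response:
#     print(json.loads(response.read()))
```

This is essentially what the Angular service does on the client side: serialize the form values to JSON and POST them to the server.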

3.6 Running it all together

To run the server side you need to go to the train_solution\server folder and use the command:

    uvicorn main:app --reload

    INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
    INFO: Started reloader process [28720]
    INFO: Started server process [28722]
    INFO: Waiting for application startup.
    INFO: Application startup complete.

In another terminal you need to go to the train_solution\client folder and run:

    npm install
    ng serve

    √ Browser application bundle generation complete.

    Initial Chunk Files   | Names         |      Size
    vendor.js             | vendor        |   3.02 MB
    polyfills.js          | polyfills     | 481.27 kB
    styles.css, styles.js | styles        | 340.83 kB
    main.js               | main          |  93.72 kB
    runtime.js            | runtime       |   6.15 kB

                          | Initial Total |   3.92 MB

    Build at: 2020-11-22T10:33:00.423Z - Hash: bf345d81dfd56983facb - Time: 10867ms

    ** Angular Live Development Server is listening on localhost:4200, open your browser on http://localhost:4200/ **

    √ Compiled successfully.

Once you have done so, you can go to localhost:4200 and test the application:

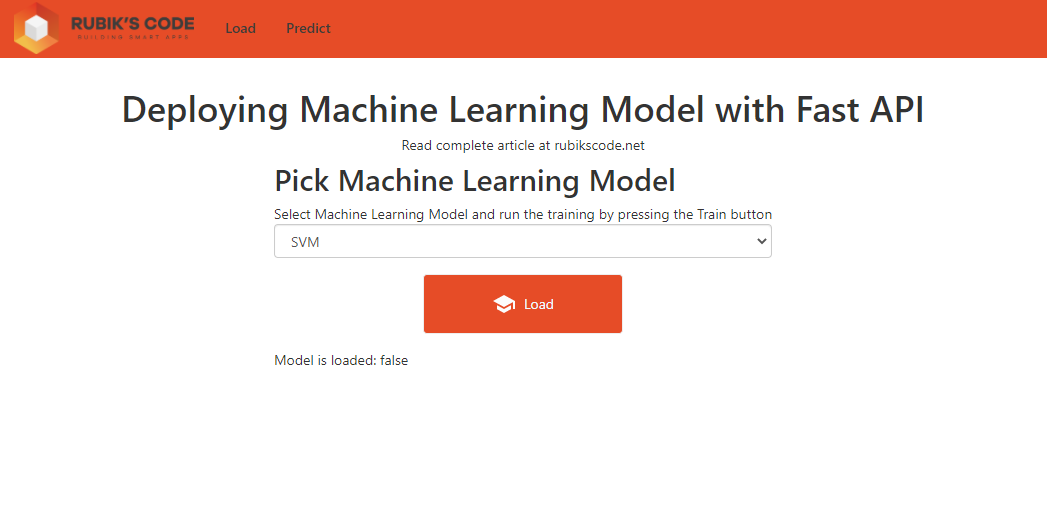

4. Solution with Model Loading

Now, the previous solution is really pretty. I really like that you can pick different models and play around with the parameters. However, even though it is neat, it is not a real-world example and it is more of a vanity project. In real-world solutions, one component is in charge of gathering the data, another is in charge of processing that data, and a third is in charge of periodically training the models and storing them in some location. These stored models are then used by the REST API. All of this is too much for this simple tutorial, but I still wanted to show something that is closer to real-world problems and solutions. So the architecture of the server-side is changed into this:

These changes affect the UI too. The Train tab became the Load tab and it is a little bit simpler. There is no test size definition and no data path definition. However, the user is still able to pick the model they want to use. The solution is located in the load_solution/client path and it looks something like this:

The server side is located in the load_solution/server folder and it has changed a bit more. Let’s go through each of the components.

4.1 Train and Save Models

You may have already noticed a new folder ‘models‘ in the solution folder. In this folder you can find the following files:

These are already trained models. In fact, there is a script, train_models_script.py, that you can run if you want to retrain these models. It reuses bits and pieces from the previous implementation, with one major difference: models are not kept in memory but are stored on the hard disk. Here is the script:

    import numpy as np
    import pandas as pd
    from sklearn.preprocessing import StandardScaler
    from sklearn.model_selection import train_test_split
    from sklearn.svm import SVC
    from sklearn.linear_model import LogisticRegression
    from sklearn.tree import DecisionTreeClassifier
    from sklearn.ensemble import RandomForestClassifier
    from joblib import dump

    def load_data(scale = True):
        data = pd.read_csv('./data/penguins_size.csv')

        # Fill missing numeric measurements with the column mean.
        for column in ['culmen_length_mm', 'culmen_depth_mm', 'flipper_length_mm', 'body_mass_g']:
            data[column] = data[column].fillna(data[column].mean())

        # Encode the categorical columns as integer codes.
        data["species"] = data["species"].astype('category')
        data["island"] = data["island"].astype('category')
        data["sex"] = data["sex"].astype('category')
        data["island_cat"] = data["island"].cat.codes
        data["sex_cat"] = data["sex"].cat.codes

        X = data.drop(['species', "island", "sex"], axis=1)
        if(scale):
            # Note: this fitted scaler is local to the function and is not saved with the model.
            scaler = StandardScaler()
            X = scaler.fit_transform(X)

        y = data['species']
        spicies = {'Adelie': 0, 'Chinstrap': 1, 'Gentoo': 2}
        y = [spicies[item] for item in y]
        y = np.array(y)

        X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=33)
        return X_train, X_test, y_train, y_test

    # Train SVM
    X_train, X_test, y_train, y_test = load_data(scale=True)
    model = SVC(kernel="rbf", gamma=0.1, C=500, verbose=True)
    model.fit(X_train, y_train)
    dump(model, './models/svm.joblib')

    # Train Decision Tree
    X_train, X_test, y_train, y_test = load_data(scale=False)
    model = DecisionTreeClassifier(max_depth=5)
    model.fit(X_train, y_train)
    dump(model, './models/decision_tree.joblib')

    # Train Random Forest
    X_train, X_test, y_train, y_test = load_data(scale=False)
    model = RandomForestClassifier(n_estimators=11, max_leaf_nodes=16, n_jobs=-1, verbose=True)
    model.fit(X_train, y_train)
    dump(model, './models/random_forest.joblib')

    # Train Logistic Regression
    X_train, X_test, y_train, y_test = load_data(scale=True)
    model = LogisticRegression(C=1e20, verbose=True)
    model.fit(X_train, y_train)
    dump(model, './models/logistic_regression.joblib')

The load_data function loads the data and does all the necessary preprocessing, just as DataLoader did in the previous solution. Then we train the models and save them to files using joblib’s dump function. You can run this script like this:

    python train_models_script.py

4.2 Model Loader

This component looks similar to the ModelTrainer from the previous implementation but it is simpler. Here is what it looks like:

    from sklearn.svm import SVC
    from sklearn.linear_model import LogisticRegression
    from sklearn.tree import DecisionTreeClassifier
    from sklearn.ensemble import RandomForestClassifier
    from sklearn.metrics import accuracy_score
    from penguin_sample import PenguinSample
    from sklearn.preprocessing import StandardScaler
    from joblib import load
    import numpy as np

    class ModelLoader():
        def __init__(self, algoritm):
            self.scaledData = True
            # Category codes as assigned during training.
            self._island_map = {'Torgersen': 2, 'Biscoe': 0, 'Dream': 1}
            self._sex_map = {'MALE': 2, 'FEMALE': 1}

            # Each algorithm name loads the file saved for it by train_models_script.py.
            if(algoritm == 'svm'):
                self.model = load('./models/svm.joblib')

            if(algoritm == 'logistic regression'):
                self.model = load('./models/logistic_regression.joblib')

            if(algoritm == 'decision tree'):
                self.scaledData = False
                self.model = load('./models/decision_tree.joblib')

            if(algoritm == 'random forest'):
                self.scaledData = False
                self.model = load('./models/random_forest.joblib')

            self.scaler = StandardScaler()

        def prepare_sample(self, raw_sample: PenguinSample):
            island = self._island_map[raw_sample.island]
            sex = self._sex_map[raw_sample.sex]
            sample = [raw_sample.culmenLength, raw_sample.culmenDepth, raw_sample.flipperLength,
                      raw_sample.bodyMass, island, sex]
            sample = np.array([np.asarray(sample)]).reshape(-1, 1)

            if(self.scaledData):
                # Caveat: this scaler is fitted on the single incoming sample, not on the
                # training data, and its output is not used. Applying the training-time
                # scaling would require saving the fitted scaler and loading it here.
                self.scaler.fit_transform(sample)

            return sample.reshape(1, -1)

        def predict(self, data: PenguinSample):
            prepared_sample = self.prepare_sample(data)
            return self.model.predict(prepared_sample)

Again, based on the parameters that we receive from the client-side, we load the correct model using joblib’s load function. The two methods are there to perform predictions. The prepare_sample method prepares a new sample for model processing, while the predict method runs the sample through the model and retrieves predictions.
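Because the training script saves only the models, the StandardScaler fitted during training is not available when a model is loaded. One common way to keep the two together, shown here as a sketch rather than as the approach used in the accompanying code, is to wrap the scaler and the model in a scikit-learn Pipeline and persist the pipeline with joblib. The synthetic data and the temporary file path are placeholders.

```python
import os
import tempfile

import numpy as np
from joblib import dump, load
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic stand-in for the penguin features: two well-separated classes.
rng = np.random.default_rng(33)
X = np.vstack([rng.normal(0.0, 1.0, (20, 4)), rng.normal(10.0, 1.0, (20, 4))])
y = np.array([0] * 20 + [1] * 20)

# The pipeline fits the scaler and the classifier together.
pipeline = make_pipeline(StandardScaler(), SVC(kernel="rbf", gamma=0.1, C=500))
pipeline.fit(X, y)

# Persist and reload the whole pipeline, scaler statistics included.
path = os.path.join(tempfile.mkdtemp(), "svm_pipeline.joblib")
dump(pipeline, path)
restored = load(path)

# Raw, unscaled samples go in; the stored scaler is applied before the model.
new_samples = np.array([[0.0, 0.0, 0.0, 0.0], [10.0, 10.0, 10.0, 10.0]])
print(restored.predict(new_samples))
```

With this arrangement the loader no longer needs its own scaler, since the reloaded pipeline applies the training-time scaling on every predict call.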

4.3 REST API Module

This module is almost the same as in the previous implementation:

    from fastapi import FastAPI
    from fastapi.middleware.cors import CORSMiddleware

    from model_loader import ModelLoader
    from train_parameters import TrainParameters
    from penguin_sample import PenguinSample

    origins = [
        "http://localhost:8000",
        "http://127.0.0.1:8000/predict",
        "http://127.0.0.1:8000/load",
        "http://localhost:4200"
    ]

    app = FastAPI()
    app.add_middleware(
        CORSMiddleware,
        allow_origins=origins,
        allow_credentials=True,
        allow_methods=["*"],
        allow_headers=["*"],
    )

    app.model = ModelLoader('svm')

    @app.post("/train")
    async def train(params: TrainParameters):
        print("Model Loading Started")
        app.model = ModelLoader(params.model.lower())
        return True

    @app.post("/predict")
    async def predict(data:PenguinSample):
        print("Predicting")
        spicies_map = {0: 'Adelie', 1: 'Chinstrap', 2: 'Gentoo'}
        species = app.model.predict(data)
        return spicies_map[species[0]]

The major difference is that the ModelLoader class is utilized and no training of the model is done.

4.4 Running it all together

To run the server side you need to go to the load_solution\server folder and use the command:

    uvicorn main:app --reload

    INFO: Uvicorn running on http://127.0.0.1:8000 (Press CTRL+C to quit)
    INFO: Started reloader process [28720]
    INFO: Started server process [28722]
    INFO: Waiting for application startup.
    INFO: Application startup complete.

In another terminal you need to go to the load_solution\client folder and run:

    npm install
    ng serve

    √ Browser application bundle generation complete.

    Initial Chunk Files   | Names         |      Size
    vendor.js             | vendor        |   3.02 MB
    polyfills.js          | polyfills     | 481.27 kB
    styles.css, styles.js | styles        | 340.83 kB
    main.js               | main          |  93.72 kB
    runtime.js            | runtime       |   6.15 kB

                          | Initial Total |   3.92 MB

    Build at: 2020-11-22T10:33:00.423Z - Hash: bf345d81dfd56983facb - Time: 10867ms

    ** Angular Live Development Server is listening on localhost:4200, open your browser on http://localhost:4200/ **

    √ Compiled successfully.

Once you have done so, you can go to localhost:4200 and test the application:

Conclusion

In this article, we saw how to deploy machine learning models with FastAPI and a JavaScript framework (in this particular case Angular). We saw what a REST API is and how to build one with FastAPI. Finally, we used this API from a user interface and completed the whole solution. If you want to take this one step further, you might want to put this into Docker containers and use Kubernetes.

Thank you for reading!

Nikola M. Zivkovic

CAIO at Rubik's Code

Nikola M. Zivkovic is CAIO at Rubik’s Code and the author of the book “Deep Learning for Programmers“. He loves knowledge sharing, and he is an experienced speaker. You can find him speaking at meetups, conferences and as a guest lecturer at the University of Novi Sad.

Rubik’s Code is a boutique data science and software service company with more than 10 years of experience in Machine Learning, Artificial Intelligence & Software development. Check out the services we provide.
