Memory capacity growth: a major contributor to the success of computers

The growth in memory capacity is the unsung hero of the computer revolution. Intel’s multi-decade annual billion dollar marketing spend has ensured that cpu clock frequency dominates our attention (a lot of people don’t know that memory is available at different frequencies, and this can have a larger impact on performance than cpu frequency).

In many ways memory capacity is more important than clock frequency: a program won’t run unless enough memory is available but people can wait for a slow cpu.

The growth in memory capacity of customer computers changed the structure of the software business.

When memory capacity was limited by a 16-bit address space (i.e., 64k), commercially saleable applications could be created by one or two very capable developers working flat out for a year. There was no point hiring a large team, because the resulting application would be too large to run on a typical customer computer. Very large applications were written, but these were bespoke systems consisting of many small programs that ran one after the other.

Once the memory capacity of a typical customer computer started to regularly increase it became practical, and eventually necessary, to create and sell applications offering ever more functionality. A successful application written by one developer became rarer and rarer.

Microsoft Windows is the poster child application that grew in complexity as computer memory capacity grew. Microsoft’s MS-DOS had lots of potential competitors because it was small (it was created in an era when 64k was a lot of memory). In the 1990s the increasing memory capacity enabled Microsoft to create a moat around their products, by offering an increasingly wide variety of functionality that required a large team of developers to build and then support.

GCC’s rise to dominance was possible for the same reason as Microsoft Windows. In the late 1980s gcc was just another one-man compiler project; others could not make significant contributions because the resulting compiler would not run on a typical developer computer. Once memory capacity took off, it was possible for gcc to grow from the contributions of many, something that other one-man compilers could not do (without hiring lots of developers).

How fast did the memory capacity of computers owned by potential customers grow?

One source of information is the adverts in Byte (the magazine); lots of pdfs are available, and perhaps one day a student with some time will extract the information.

Wikipedia has plenty of articles detailing cpu performance, e.g., Macintosh models by cpu type (a comparison of Macintosh models does include memory capacity). The impact of Intel’s marketing dollars on the perception of computer systems is a PhD thesis waiting to be written.

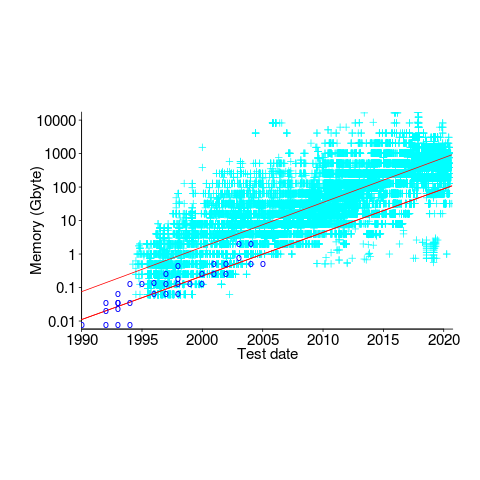

The SPEC benchmarks have been around since 1988, recording system memory capacity since 1994, and SPEC make their detailed data public 🙂 Hardware vendors are more likely to submit SPEC results for their high-end systems, than their run-of-the-mill systems. However, if we are looking at rate of growth, rather than absolute memory capacity, the results may be representative of typical customer systems.

The plot below shows memory capacity against date of reported benchmarking (which I assume is close to the date a system first became available). The lines are fitted using quantile regression, with 95% of systems being above the lower line (i.e., these systems all have more memory than those below this line), and 50% are above the upper line (code+data):

The fitted models show the memory capacity doubling every 845 or 825 days. The blue circles are memory that comes installed with various Macintosh systems, at time of launch (memory doubling time is 730 days).
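Doubling times in days are easier to compare when converted to a yearly growth factor. The following is a minimal sketch of that conversion, not the analysis behind the plot; the function name and the 365.25-day year are assumptions made for illustration.

```python
DAYS_PER_YEAR = 365.25  # assumption: average length of a calendar year


def annual_growth_factor(doubling_days: float) -> float:
    """Factor by which capacity grows in one year, given a doubling time in days."""
    return 2 ** (DAYS_PER_YEAR / doubling_days)


# Doubling times quoted in the text: two SPEC fits and the Macintosh systems.
for days in (845, 825, 730):
    factor = annual_growth_factor(days)
    print(f"doubling every {days} days = {(factor - 1) * 100:.0f}% growth per year")
```

A doubling time of 730 days is roughly a doubling every two years, i.e., a yearly growth factor close to the square root of two.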

How did applications’ minimum required memory grow over time? I have patchy data for a smattering of products, extracted from Wikipedia. Some vendors probably required customers to have a fairly beefy machine, while others went for a wider customer base. Data on the memory requirements of the various versions of products launched in the 1990s is very hard to find. Pointers very welcome.

Isn’t this just Moore’s Law? (I always thought Moore’s Law was boring, since CPU speeds did the thing I describe below, and was thus largely irrelevant to the amount of computation available to us unwashed masses running on our own desktops.)

People always complain about code bloat and creeping feature-itis, but other than Photoshop, most programs on PCs always ran reasonably comfortably in the memory available at the time. SSDs have made life less painful, of course, but Photoshop is still seriously gross. Even Windows NT was completely fine from the day it was released. (It was the only “real” OS in the PC/Mac world until Apple got off their duffs and ripped off a Unix from somewhere ten years later.)

Having programmed (on and off) since 1970, my view of CPU speed is that it was roughly stable from 1970 (e.g. PDP-6 (about .3 MIPS)) to 1990 (various 32-bit single chip devices with no or minimal on-chip cache* (about 1.0 MIPS)), CPU speed went completely insane from 1990 to 2005, and then hasn’t changed much since**. Which is a pretty wild roller-coaster ride.

*: The 486 had on-chip cache and was announced before 1990, but wasn’t available generally until well after 1990.

**: Herb Sutter’s 2005 paper “The Free Lunch Is Over” was flipping brilliant, and is looking more and more so every year. Unfortunately.

@David J. Littleboy

Moore’s law related to Intel processors, and over most of the period plotted Intel was ahead of everybody else, i.e., improvement in memory device fabrication lagged.

Product developers did target particular computer configurations so things would run at about the same speed.

My point is that faster processors quickly become uninteresting to most customers, unless there is more memory within which software with more functionality can run.

Memory capacity is a killer. People can wait for a program to finish (it will be a lot faster than doing things manually). If things won’t fit in memory the program does not run.

Until single user machine came along, computers had to be shared. The more powerful the computer, the more users sharing it. As you say, performance appeared to stay the same.

Yes, Intel and the motherboard vendors got their acts synchronised in the 1990s, and visually stunning performance improvements just kept coming.

Well, my argument on processor performance is that Moore’s Law covers a period over which processor performance did very different things: 1.0 to 3.0 MHz from 1970 to 1990 was a major snore, 3.0 MHz to 3.0 GHz from 1990 to 2005 was great fun. 3.0 GHz to 4.0 GHz from 2005 to 2020 is another major snore. It’s been FIFTEEN years since the first quad-core i7.

Moore’s Law was _1965_ (revised down to doubling every two years in 1975), and has pretty much held. But its effect on raw MIPS was pretty small. Especially in the latter half of the time period in your graph. Really: ’75-’90 maybe a factor of two, ’90-’05 THREE orders of magnitude, 2005 to 2020 maybe a factor of two.

Arguing is, of course, fun. But your graph doesn’t show the discontinuity in processor performance at 2005, and rather shows memory capacity following Moore’s Law quite nicely.

(Truth in advertising: I haven’t followed memory as closely as processors. I remember buying a 4MB memory card for my first full-frame DSLR that cost US$500. More recently, I noticed that SSD prices had stopped falling.)

But “If things won’t fit in memory the program does not run.” Well, we’ve always tried to do more than is reasonable, and people have always worked with data sets larger than main memory (I worked at a database company for a year. (in BCLP)).

“Until single user machine came along, computers had to be shared. The more powerful the computer, the more users sharing it. As you say, performance appeared to stay the same.”

Well, no. I hit college fall of 1972, and soon got a programming job with a group using a KA-10 that was soon upgraded to a KL-10 (’74 or so). At least in the Comp. Sci. and AI world, nothing faster than a KL-10 appeared until the 486*. The Vaxen were cheaper than KL-10s, but not as fast (and Comp. Sci. departments put more users on them; being a Comp. Sci. grad student 1982-1984 wasn’t fun. Really. It wasn’t. I.Was.There.). The Computer Science world didn’t see increasing performance _per processor_ until the 486. Really we didn’t.

*: OK, maybe the Sun and other workstations in the late 80s. But they weren’t any faster than a KL-10. Supercomputers, of course, were getting faster during this whole period, but no one (in Comp Sci/AI) gets to play with them. (How do you tell a mini-computer from a maxi-computer: walk up to the front panel and turn it off. If they arrest you, it wasn’t a mini-computer.)

@David J. Littleboy

Sun’s SPARC was clocking at 30 MHz in 1990, as was Intel’s 486.

Fabrication technology for memory has lagged that for processors, so the ‘kink’ in performance will arrive later.

If you want cpu performance data check out Evidence-based software engineering (pointers to interesting data welcome).

The first major computer I used was a KL10. DEC had lots of great software and it’s difficult to separate out the performance of the software from the performance of the hardware. The software from other vendors never seemed to be as good, although the hardware may have been faster.

@Derek Jones

Thanks for the correction, I was quite careless there.

My argument depends on talking about MIPS, not cycles. In 1989/1990 it took several cycles to execute one instruction, and the top end chips were (as you correctly point out) running 16 to 50 MHz producing 4 to 18 MIPS (e.g. 68040), as opposed to the 2 or so MIPS of a 1974 KL10. Fifteen years saw a factor of 20 or so. (And the KL10 was a single-user computer if you were willing to stay up late.)

https://en.wikipedia.org/wiki/Instructions_per_second

Fast forward to 2006, and you get more than 10 instructions per clock cycle: 49,161 MIPS at 2.66 GHz. OK, that’s 17, not 15 years, but it’s a full three orders of magnitude. (The Ryzen number at the end of that page looks flaky. But with the fastest i9 processors, we’re seeing some improvement lately.)

(But, grumble. If you were actually using a computer in, say, March 1989, it wasn’t 30 MHz; if you were lucky, it was a 16 MHz 386.)

Whatever. On memory, DRAM is now under $5.00 a gigabyte, and has been under $10.00 for almost a decade, so it’s not getting much cheaper. Is $5.00 a gigabyte expensive? DRAM was above $10,000.00 a gigabyte until 1996 or so, so that’s like 3 orders of magnitude in 15 years, lagging (but matching) processor performance improvement by 6 or 7 years and then stuck for the last almost 10.
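As a rough check on the arithmetic above, a fall of three orders of magnitude in 15 years can be restated as a price halving time. This is only a sketch: the function name is made up for illustration, and the prices are the round numbers from the comment (taking the $10,000 figure to be per gigabyte), not measured data.

```python
import math


def halving_time_years(start_price: float, end_price: float, years: float) -> float:
    """Years needed for the price to halve, assuming a steady exponential decline."""
    halvings = math.log2(start_price / end_price)
    return years / halvings


# Round figures from the comment: about $10,000 down to about $10 per gigabyte in 15 years.
years_per_halving = halving_time_years(10_000, 10, 15)
print(f"price halves about every {years_per_halving:.1f} years")
```

Under these assumptions the price halves roughly every year and a half, somewhat faster than the memory capacity doubling times fitted in the post.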

My intuition is that 64 GB is a lot of memory, and that’s under US$500. Maybe my intuition here is as off as my numeric ranting is careless.

(When I started college, a megabyte of memory was a large refrigerator sized cabinet that cost a million dollars and was called a “moby”.)

I took a break from writing this for dinner and had a thought that might be useful for your argument on memory versus processors: Look at the _number of transistors you get per dollar_ in memory vs. in processors over time. That _might_ allow you to quantify the claim that memory fabrication technology lags processor fab. tech.

I’ve downloaded your book (thank you!), but probably won’t have anything useful to say. My Comp. Sci. background is ancient history and was all R&D sorts of things, so I don’t have any experience with large projects…

@David J. Littleboy

You’re right that MIPS is the important number. Technically many instructions could be retired per clock cycle, at least on some processors, but it all depended on having work for all the functional units to do at the same time and no jump instructions flushing the (often really long) pipeline.

The cost of memory has an impact on how often people upgrade. They will buy that first computer because it saves them so much money. Memory capacity makes the initial sale, memory cost drives the replacement sales.

Re: the book. I think you will have lots of useful stuff to say. Software engineering data is not easy to find, and I have plenty of data from the 60s, 70s, and 80s.