Data preparation may be one of the most difficult steps in any machine learning project.

The reason is that each dataset is different and highly specific to the project. Nevertheless, there are enough commonalities across predictive modeling projects that we can define a loose sequence of steps and subtasks that you are likely to perform.

This process provides a context in which we can consider the data preparation required for the project, informed both by the definition of the project performed before data preparation and the evaluation of machine learning algorithms performed after.

In this tutorial, you will discover how to consider data preparation as a step in a broader predictive modeling machine learning project.

After completing this tutorial, you will know:

- Each predictive modeling project with machine learning is different, but there are common steps performed on each project.

- Data preparation involves best exposing the unknown underlying structure of the problem to learning algorithms.

- The steps before and after data preparation in a project can inform what data preparation methods to apply, or at least explore.

Let’s get started.

Tutorial Overview

This tutorial is divided into three parts; they are:

- Applied Machine Learning Process

- What Is Data Preparation

- How to Choose Data Preparation Techniques

Applied Machine Learning Process

Each machine learning project is different because the specific data at the core of the project is different.

You may be the first person (ever!) to work on the specific predictive modeling problem. That does not mean that others have not worked on similar prediction tasks or perhaps even the same high-level task, but you are the first to use the specific data that you have collected (unless you are using a standard dataset for practice).

… the right features can only be defined in the context of both the model and the data; since data and models are so diverse, it’s difficult to generalize the practice of feature engineering across projects.

— Page vii, Feature Engineering for Machine Learning, 2018.

This makes each machine learning project unique. No one can tell you what the best results are or might be, or what algorithms to use to achieve them. You must establish a baseline in performance as a point of reference to compare all of your models and you must discover what algorithm works best for your specific dataset.

You are not alone. The vast literature on applied machine learning can inform both the techniques you use to robustly evaluate your models and the algorithms you choose to evaluate.

Even though your project is unique, the steps on the path to a good or even the best result are generally the same from project to project. This is sometimes referred to as the “applied machine learning process”, “data science process”, or the older name “knowledge discovery in databases” (KDD).

The process of applied machine learning consists of a sequence of steps. The steps are the same, but the names of the steps and tasks performed may differ from description to description.

Further, the steps are written sequentially, but we will jump back and forth between the steps for any given project.

I like to define the process using four high-level steps:

- Step 1: Define Problem.

- Step 2: Prepare Data.

- Step 3: Evaluate Models.

- Step 4: Finalize Model.

Let’s take a closer look at each of these steps.

Step 1: Define Problem

This step is concerned with learning enough about the project to select the framing or framings of the prediction task. For example, is it classification or regression, or some other higher-order problem type?

It involves collecting the data that is believed to be useful in making a prediction and clearly defining the form that the prediction will take. It may also involve talking to project stakeholders and other people with deep expertise in the domain.

This step also involves taking a close look at the data, as well as perhaps exploring the data using summary statistics and data visualization.

Step 2: Prepare Data

This step is concerned with transforming the raw data that was collected into a form that can be used in modeling.

Data pre-processing techniques generally refer to the addition, deletion, or transformation of training set data.

— Page 27, Applied Predictive Modeling, 2013.

We will take a closer look at this step in the next section.

Step 3: Evaluate Models

This step is concerned with evaluating machine learning models on your dataset.

It requires that you design a robust test harness used to evaluate your models so that the results you get can be trusted and used to select among the models that you have evaluated.

This involves tasks such as selecting a performance metric for evaluating the skill of a model, establishing a baseline or floor in performance against which all model evaluations can be compared, and choosing a resampling technique for splitting the data into training and test sets to simulate how the final model will be used.

For quick and dirty estimates of model performance, or for a very large dataset, a single train-test split of the data may be performed. It is more common to use k-fold cross-validation as the data resampling technique, often with repeats of the process to improve the robustness of the result.
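As a minimal sketch of such a test harness, the example below compares a naive baseline to a single model using repeated stratified k-fold cross-validation. It assumes the scikit-learn library; the synthetic dataset, the accuracy metric, and the logistic regression model are illustrative choices only, not recommendations for your project.

```python
from numpy import mean
from sklearn.datasets import make_classification
from sklearn.dummy import DummyClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score

# synthetic binary classification data (a stand-in for your own dataset)
X, y = make_classification(n_samples=500, n_features=10, n_informative=5, random_state=1)

# resampling technique: 10-fold cross-validation, repeated 3 times
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=3, random_state=1)

def evaluate(model):
    # performance metric: mean classification accuracy across all folds and repeats
    scores = cross_val_score(model, X, y, scoring="accuracy", cv=cv)
    return mean(scores)

# baseline: always predict the most frequent class
baseline_score = evaluate(DummyClassifier(strategy="most_frequent"))
# candidate model evaluated on the same harness
model_score = evaluate(LogisticRegression(max_iter=1000))

print("baseline: %.3f, logistic regression: %.3f" % (baseline_score, model_score))
```

A model is only considered skillful if it scores better than the baseline on the same harness.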

This step also involves tasks for getting the most out of well-performing models such as hyperparameter tuning and ensembles of models.
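For example, hyperparameter tuning can be sketched as a grid search over a small set of candidate values. The k-nearest neighbors model, the grid of values, and the synthetic dataset below are assumptions chosen only for illustration, using scikit-learn.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import GridSearchCV, StratifiedKFold
from sklearn.neighbors import KNeighborsClassifier

# synthetic data (a stand-in for your own dataset)
X, y = make_classification(n_samples=300, n_features=10, n_informative=5, random_state=1)

# hypothetical grid of candidate values for a single hyperparameter
grid = {"n_neighbors": [1, 3, 5, 7, 9, 11]}

# evaluate every candidate with the same cross-validation procedure
cv = StratifiedKFold(n_splits=5, shuffle=True, random_state=1)
search = GridSearchCV(KNeighborsClassifier(), grid, scoring="accuracy", cv=cv)
search.fit(X, y)

print(search.best_params_, round(search.best_score_, 3))
```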

Step 4: Finalize Model

This step is concerned with selecting and using a final model.

Once a suite of models has been evaluated, you must choose a model that represents the “solution” to the project. This is called model selection and may involve further evaluation of candidate models on a hold-out validation dataset, or selection via other project-specific criteria such as model complexity.

It may also involve summarizing the performance of the model in a standard way for project stakeholders, which is an important step.

Finally, there will likely be tasks related to the productization of the model, such as integrating it into a software project or production system and designing a monitoring and maintenance schedule for the model.

Now that we are familiar with the process of applied machine learning and where data preparation fits into that process, let’s take a closer look at the types of tasks that may be performed.

What Is Data Preparation

On a predictive modeling project, such as classification or regression, raw data typically cannot be used directly.

This is because of reasons such as:

- Most machine learning algorithms require input data to be numeric.

- Some machine learning algorithms impose requirements on the data.

- Statistical noise and errors in the data may need to be corrected.

- Complex nonlinear relationships may be teased out of the data.

As such, the raw data must be pre-processed prior to being used to fit and evaluate a machine learning model. This step in a predictive modeling project is referred to as “data preparation”, although it goes by many other names, such as “data wrangling”, “data cleaning”, “data pre-processing”, and “feature engineering”. Some of these names may better fit as sub-tasks for the broader data preparation process.

We can define data preparation as the transformation of raw data into a form that is more suitable for modeling.

Data wrangling, which is also commonly referred to as data munging, transformation, manipulation, janitor work, etc., can be a painstakingly laborious process.

— Page v, Data Wrangling with R, 2016.

This is highly specific to your data, to the goals of your project, and to the algorithms that will be used to model your data. We will talk more about these relationships in the next section.

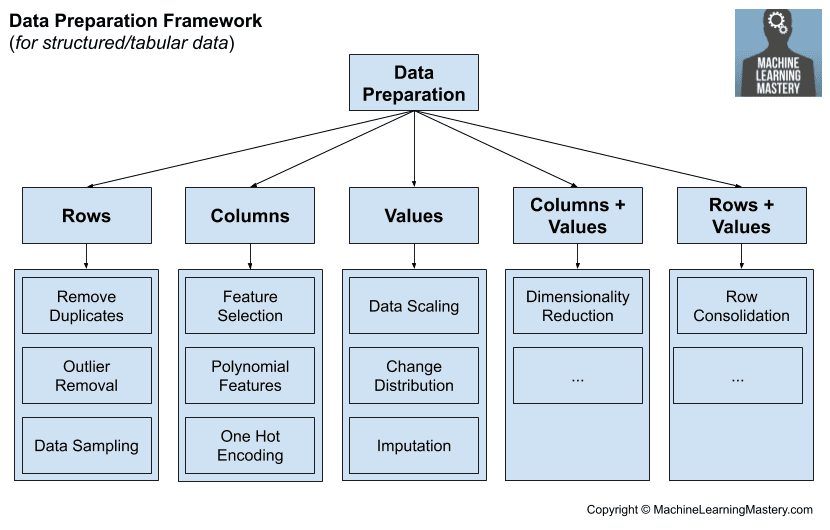

Nevertheless, there are common or standard tasks that you may use or explore during the data preparation step in a machine learning project.

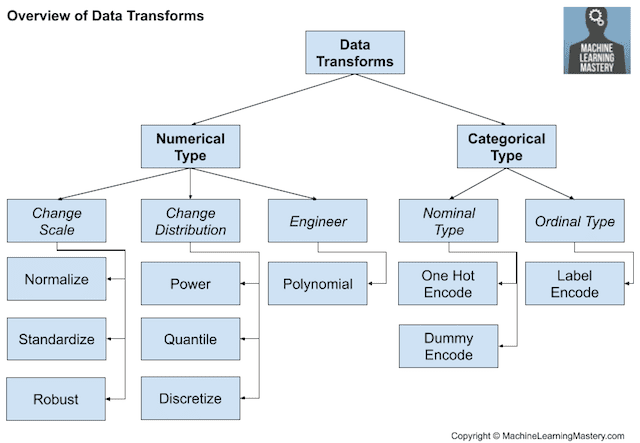

These tasks include:

- Data Cleaning: Identifying and correcting mistakes or errors in the data.

- Feature Selection: Identifying those input variables that are most relevant to the task.

- Data Transforms: Changing the scale or distribution of variables.

- Feature Engineering: Deriving new variables from available data.

- Dimensionality Reduction: Creating compact projections of the data.

Each of these tasks is a whole field of study with specialized algorithms.
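To make these tasks concrete, here is one rough sketch of how they might be chained together with a scikit-learn Pipeline. The specific method chosen for each task (mean imputation, ANOVA F-test selection, standardization, polynomial features, and PCA), the settings, and the synthetic data are all assumptions for illustration; a real project would need to explore which of them, if any, are useful.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.decomposition import PCA
from sklearn.feature_selection import SelectKBest, f_classif
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import PolynomialFeatures, StandardScaler

# synthetic data with a few missing values injected into the first column
X, y = make_classification(n_samples=200, n_features=8, n_informative=4, random_state=1)
X[::25, 0] = np.nan

prep = Pipeline([
    # data cleaning: fill missing values with the column mean
    ("clean", SimpleImputer(strategy="mean")),
    # feature selection: keep the 5 inputs most related to the target
    ("select", SelectKBest(score_func=f_classif, k=5)),
    # data transform: rescale to zero mean and unit variance
    ("scale", StandardScaler()),
    # feature engineering: derive squared and interaction terms
    ("engineer", PolynomialFeatures(degree=2, include_bias=False)),
    # dimensionality reduction: project onto 3 components
    ("reduce", PCA(n_components=3)),
])

X_prepared = prep.fit_transform(X, y)
print(X_prepared.shape)
```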

Data preparation is not performed blindly.

In some cases, variables must be encoded or transformed before we can apply a machine learning algorithm, such as converting strings to numbers. In other cases, it is less clear: for example, scaling a variable may or may not be useful to a given algorithm.
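As a small illustration of converting strings to numbers, the sketch below encodes a made-up categorical variable in two common ways using scikit-learn. The color values are hypothetical, and which encoding is appropriate (if either) depends on the variable and the algorithm.

```python
from numpy import asarray
from sklearn.preprocessing import OneHotEncoder, OrdinalEncoder

# hypothetical categorical variable with string values (one column, four rows)
colors = asarray([["red"], ["green"], ["blue"], ["green"]])

# ordinal encoding: each category becomes an integer (categories are sorted alphabetically)
ordinal = OrdinalEncoder().fit_transform(colors)

# one hot encoding: each category becomes its own binary column
onehot = OneHotEncoder().fit_transform(colors).toarray()

print(ordinal.ravel())
print(onehot)
```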

The broader philosophy of data preparation is to discover how to best expose the underlying structure of the problem to the learning algorithms. This is the guiding light.

We don’t know the underlying structure of the problem; if we did, we wouldn’t need a learning algorithm to discover it and learn how to make skillful predictions. Therefore, exposing the unknown underlying structure of the problem is a process of discovery, along with discovering the well- or best-performing learning algorithms for the project.

However, we often do not know the best re-representation of the predictors to improve model performance. Instead, the re-working of predictors is more of an art, requiring the right tools and experience to find better predictor representations. Moreover, we may need to search many alternative predictor representations to improve model performance.

— Page xii, Feature Engineering and Selection, 2019.

It can be more complicated than it appears at first glance. For example, different input variables may require different data preparation methods. Further, different variables or subsets of input variables may require different sequences of data preparation methods.
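One way to apply different sequences of methods to different subsets of variables is sketched below, assuming pandas and the scikit-learn ColumnTransformer class. The column names, values, and chosen methods are hypothetical and only show the mechanics.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# hypothetical dataset with two numeric columns and one categorical column
data = pd.DataFrame({
    "age": [25.0, 32.0, np.nan, 51.0],
    "income": [40000.0, 52000.0, 61000.0, 75000.0],
    "city": ["paris", "lima", "paris", "oslo"],
})

# numeric inputs: impute missing values, then standardize
numeric_steps = Pipeline([
    ("impute", SimpleImputer(strategy="median")),
    ("scale", StandardScaler()),
])

# each subset of columns gets its own sequence of methods
prep = ColumnTransformer([
    ("num", numeric_steps, ["age", "income"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"]),
])

prepared = prep.fit_transform(data)
print(prepared.shape)
```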

It can feel overwhelming, given the large number of methods, each of which may have its own configuration and requirements. Nevertheless, the machine learning process steps before and after data preparation can help to inform what techniques to consider.

How to Choose Data Preparation Techniques

How do we know what data preparation techniques to use on our data?

As with many questions of statistics, the answer to “which feature engineering methods are the best?” is that it depends. Specifically, it depends on the model being used and the true relationship with the outcome.

— Page 28, Applied Predictive Modeling, 2013.

On the surface, this is a challenging question, but if we look at the data preparation step in the context of the whole project, it becomes more straightforward. The steps in a predictive modeling project before and after the data preparation step inform the data preparation that may be required.

The step before data preparation involves defining the problem.

Defining the problem may involve many sub-tasks, such as:

- Gather data from the problem domain.

- Discuss the project with subject matter experts.

- Select those variables to be used as inputs and outputs for a predictive model.

- Review the data that has been collected.

- Summarize the collected data using statistical methods.

- Visualize the collected data using plots and charts.

Information known about the data can be used in selecting and configuring data preparation methods.

For example, plots of the data may help identify whether a variable has outlier values. This can help in data cleaning operations. It may also provide insight into the probability distribution that underlies the data. This may help in determining whether data transforms that change a variable’s probability distribution would be appropriate.

Statistical methods, such as descriptive statistics, can be used to determine whether scaling operations might be required. Statistical hypothesis tests can be used to determine whether a variable matches a given probability distribution.
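The checks described above might look something like the following sketch, assuming NumPy and SciPy. The two variables are randomly generated stand-ins, and the interquartile range rule and the Shapiro-Wilk test are just one possible choice of outlier rule and normality test.

```python
import numpy as np
from scipy.stats import shapiro

rng = np.random.default_rng(1)
# hypothetical variable: roughly Gaussian values plus one obvious outlier
values = np.append(rng.normal(loc=50.0, scale=5.0, size=200), 120.0)
# hypothetical variable with a skewed (exponential) distribution
skewed = rng.exponential(scale=2.0, size=200)

# descriptive statistics: very different ranges hint that scaling may be worth exploring
for name, var in [("values", values), ("skewed", skewed)]:
    print("%s: mean=%.2f std=%.2f min=%.2f max=%.2f"
          % (name, var.mean(), var.std(), var.min(), var.max()))

# interquartile range rule for flagging candidate outliers
q1, q3 = np.percentile(values, [25, 75])
iqr = q3 - q1
lower, upper = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = values[(values < lower) | (values > upper)]
print("candidate outliers:", outliers)

# Shapiro-Wilk test: a small p-value suggests the variable is not Gaussian
stat, p_value = shapiro(skewed)
print("shapiro p-value: %.5f" % p_value)
```

Flagged values are only candidates; whether they are errors to remove or valid extreme observations is a judgment that depends on the domain.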

Pairwise plots and statistics can be used to determine whether variables are related, and if so, how much, providing insight into whether one or more variables are redundant or irrelevant to the target variable.
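A pairwise statistic such as Pearson's correlation coefficient can be computed with NumPy, as in the sketch below. The variables are contrived so that one input is nearly a copy of another and a third is unrelated to the target; the names and coefficients are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 500
x1 = rng.normal(size=n)
x2 = 2.0 * x1 + rng.normal(scale=0.1, size=n)      # nearly a copy of x1 (redundant)
x3 = rng.normal(size=n)                            # unrelated to the other variables
target = 3.0 * x1 + rng.normal(scale=0.5, size=n)  # depends only on x1

# Pearson correlation between every pair of columns
data = np.column_stack([x1, x2, x3, target])
corr = np.corrcoef(data, rowvar=False)

print(np.round(corr, 2))
```

A strong correlation between x1 and x2 suggests one of them may be redundant, while a near-zero correlation between x3 and the target suggests x3 may be irrelevant, at least in terms of linear relationships.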

As such, there may be a lot of interplay between the definition of the problem and the preparation of the data.

There may also be interplay between the data preparation step and the evaluation of models.

Model evaluation may involve sub-tasks such as:

- Select a performance metric for evaluating model predictive skill.

- Select a model evaluation procedure.

- Select algorithms to evaluate.

- Tune algorithm hyperparameters.

- Combine predictive models into ensembles.

Information known about the choice of algorithms and the discovery of well-performing algorithms can also inform the selection and configuration of data preparation methods.

For example, the choice of algorithms may impose requirements and expectations on the type and form of input variables in the data. This might require variables to have a specific probability distribution, the removal of correlated input variables, and/or the removal of variables that are not strongly related to the target variable.

The choice of performance metric may also require careful preparation of the target variable in order to meet the expectations, such as scoring regression models based on prediction error using a specific unit of measure, requiring the inversion of any scaling transforms applied to that variable for modeling.
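As a sketch of the last point, the scikit-learn TransformedTargetRegressor class scales the target variable before fitting and inverts the scaling when making predictions, so that error is reported in the original unit of measure. The synthetic data, the rescaling of the target to look like a large-valued quantity, and the linear model are assumptions for illustration.

```python
from sklearn.compose import TransformedTargetRegressor
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression
from sklearn.metrics import mean_absolute_error
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# synthetic regression data; the target is rescaled to mimic a large-valued unit
X, y = make_regression(n_samples=200, n_features=5, noise=10.0, random_state=1)
y = y * 1000.0 + 50000.0
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=1)

# the target is standardized for fitting, and predictions are inverted automatically
model = TransformedTargetRegressor(regressor=LinearRegression(), transformer=StandardScaler())
model.fit(X_train, y_train)
yhat = model.predict(X_test)

# the error is in the original units of the target, not in standardized units
mae = mean_absolute_error(y_test, yhat)
print("MAE: %.1f" % mae)
```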

These examples, and more, highlight that although data preparation is an important step in a predictive modeling project, it does not stand alone. Instead, it is strongly influenced by the tasks performed both before and after data preparation. This highlights the highly iterative nature of any predictive modeling project.

Further Reading

This section provides more resources on the topic if you are looking to go deeper.

Books

- Feature Engineering and Selection: A Practical Approach for Predictive Models, 2019.

- Feature Engineering for Machine Learning, 2018.

- Applied Predictive Modeling, 2013.

- Data Mining: Practical Machine Learning Tools and Techniques, 4th edition, 2016.

Summary

In this tutorial, you discovered how to consider data preparation as a step in a broader predictive modeling machine learning project.

Specifically, you learned:

- Each predictive modeling project with machine learning is different, but there are common steps performed on each project.

- Data preparation involves best exposing the unknown underlying structure of the problem to learning algorithms.

- The steps before and after data preparation in a project can inform what data preparation methods to apply, or at least explore.

Do you have any questions?

Ask your questions in the comments below and I will do my best to answer.

Excellent article and very informative for any ML practitioner. Thanks for the article.

But the second bullet point in the summary, which also appears in the main article, I understand in a different way. Is my understanding different from what you are saying? I'm a bit confused!

What you said:

“Data preparation involves best exposing the unknown underlying structure of the problem to learning algorithms.”

How I understand:

“Data preparation involves best exposing the unknown underlying structure of the data to learning algorithms.”

Thanks.

Agreed, but what’s the difference as you see it? E.g., the data embodies the problem. The problem subsumes the data; there could be domain knowledge there too, I guess.

Sir, I bought all of your books except the Weka one, and have read each book at least 10 times.

I am eagerly waiting for your next book. Please tell me what the topic is and when it will be available.

You can verify my email …

Your books are my life

Thanks!

Next book is written on the topic of data prep and will come out soon (I hope).

Thanks a lot. Very much appreciated.

You’re welcome.

Hello Jason,

It is a pleasure to read your blogs on each topic of data science and machine learning, explained in such a detailed and clear way. Thanks, and keep up the good work.

But here is a small confusion for me, please help.

You listed the main steps in a predictive modelling project as:

Step 1: Define Problem.

Step 2: Prepare Data.

Step 3: Evaluate Models.

Step 4: Finalize Model.

But any modelling process involves an important “learning (training)” step, also called the fit method, where the model learns its parameters from the prepared data.

Where does that step fall in the above process? I feel there must be a step between 2 and 3, called the train/learn/fit step. How can we evaluate a model before training/learning/fitting, given that your steps jump to the “evaluation” step right after the “data preparation” step?

I have not noticed this training step mentioned as a sub-process in either step 2 or step 3.

Please let me hear some comments on this.

Thanks and regards

Thanks!

Yes, it falls in step 3, when we evaluate models. We fit models on train data (learn) and make predictions on test data, then calculate a score to see how well it learned.

How can I buy the books through net banking?

You can purchase my ebooks using a PayPal account or using your Credit Card:

https://machinelearningmastery.com/faq/single-faq/why-doesnt-my-payment-work

Hello Jason,

Thank you for this amazing blog. Could you please provide the citation details which I can use for my thesis report?

Thank you in advance.

You’re welcome.

This will help you cite:

https://machinelearningmastery.com/faq/single-faq/how-do-i-reference-or-cite-a-book-or-blog-post