In my free How to Think Like the Engine class, I explain that SQL Server builds execution plans based on statistics. The contents of your tables inform the decisions it makes about which indexes to use, whether to do seeks or scans, how many CPU cores to allocate, how much memory to grant, and much more.

When the statistics on an object change, SQL Server says to itself, “Hey, the next time a query comes in that references this object, I’d better build a new execution plan because my old one might not be a good fit for the new data distribution.”

That’s usually a good thing: you want accurate plans.

However, you’re also putting yourself at risk.

Whenever you update statistics on a table or index, you’re also telling SQL Server that all execution plans that touch that table should get a brand new plan based on whatever parameters happen to come in next. As I talk about in my Fundamentals of Parameter Sniffing class, that means the more you update statistics, the more chances you’re taking: you’re purposely freeing parts of the plan cache, often large parts, and taking a gamble on the very next parameters that come in.

Updated statistics can build better query plans.

In a perfect world, you would only update statistics in cases where query plans would benefit from fresh statistics.

In a perfect world, you would only update statistics in cases where query plans would benefit from fresh statistics.

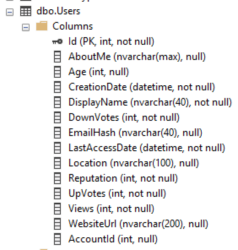

To understand what I mean by that, let’s look at the Users table in the Stack Overflow database, and think about how often the contents of each column would change in a way that would affect our query plans.

The classic scenario where frequent stats updates are vital is a date column that focuses on current activity. In a data warehouse, that means loading new sales for yesterday. In the Stack Overflow column, the equivalent is the LastAccessDate column: users are logging in all day long.

Say we’ve got an index on LastAccessDate, and a stored procedure that queries people by ranges of that date:

|

1 2 3 4 5 6 7 8 9 10 11 |

CREATE INDEX LastAccessDate ON dbo.Users(LastAccessDate); GO CREATE OR ALTER PROC dbo.usp_SearchUsersByDate @LastAccessDateStart DATETIME, @LastAccessDateEnd DATETIME AS SELECT * FROM dbo.Users WHERE LastAccessDate >= @LastAccessDateStart AND LastAccessDate <= @LastAccessDateEnd ORDER BY DisplayName; GO |

When that query runs, SQL Server has to make a decision about whether to use that index, and how much memory to grant for that sort on DisplayName. If 1% of our users log in today during the day, after we’ve updated statistics overnight, and we try to run that search query:

|

1 2 3 4 |

/* Say 1% of our users logged in today */ UPDATE dbo.Users SET LastAccessDate = GETDATE() WHERE Id % 100 = 1; GO EXEC usp_SearchUsersByDate '2020-05-26', '2020-05-27' |

Then we might not accurately estimate the number of rows that’ll come out, since the data was updated after the stats update. This under-estimation might mean sorts end up spilling to disk, or that we choose an index seek where a table scan would have been more appropriate:

In the above plan, SQL Server inefficiently chose an index seek + key lookup and ended up reading more pages than there are in the table. In this scenario, you want frequent statistics updates – and heck, on a very active web site, you might not even be able to update stats fast enough to get plans that accurately reflect the data that will be in the table over the course of the next few hours. This is why folks end up resorting to query hints, forced plans, and building out fake statistics for future data. (I’ve never done that last one, but I find it mesmerizing.)

Frequent stats updates backfire on other columns, though.

If I write a query that says, “Show me all of the users whose Location = San Diego, CA,” that data distribution just doesn’t change that often. Sure, as we add progressively more and more users to the table, we stand a slight chance that the estimate will be off by a few or a few hundred rows – but it’s not a large distribution change for any one value over the course of several weeks. In a mature database with years of history, we could leave the same statistics in place on Location for months at a time without worrying.

The data distribution inside the table doesn’t change that much that quickly.

But holy cow, the data distribution per query changes constantly.

Say I’ve got an index on Location, and a stored procedure that looks like this:

|

1 2 3 4 5 6 7 8 9 10 |

DropIndexes; GO CREATE INDEX Location ON dbo.Users(Location); GO CREATE OR ALTER PROC dbo.usp_SearchUsers @Location NVARCHAR(100) AS SELECT TOP 1000 * FROM dbo.Users WHERE Location = @Location ORDER BY Reputation DESC; GO |

After a statistics update, the very next Location I call it with will determine everyone’s performance for the day:

- If a large location like India goes in first, everybody gets a table scan that goes parallel and gets a huge memory grant

- If a small location like San Diego goes in first, everybody gets an index seek + key lookup that goes single threaded and gets a tiny memory grant

So the worst case scenario for parameter sniffing emergencies would be to rebuild the statistics on this table every day. Every morning, you’d wake up and stumble into work with a 50% chance of table scans, and a 50% chance of TempDB spills. Every morning would be a different emergency – even though the data distribution isn’t changing at all.

Plan cache geeks can spot it by looking at the plan_generation_num column in sys.dm_exec_query_stats. Each time a plan is recompiled due to things like stats changes, the plan_generation_num will increment. Higher numbers indicate more frequent compilations – but at the same time, plan_generation_num = 1 doesn’t mean you don’t have a parameter sniffing problem, either. (I dig into that in Mastering Parameter Sniffing.)

That’s why I update stats weekly until I have a reason to do it more frequently.

I run into parameter sniffing issues much more often than I run into the ascending-date-range problem. I’d rather default to safe weekly stats updates, which leads to more plan cache stability and easier weekday mornings.

Then, when I find specific stats that are wildly out of date within a day or two, I … no, I still don’t update stats daily. I just try to tweak the indexes and queries first to make them easier for SQL Server to build fast, efficient plans.

Because when you resort to daily stats updates to fix queries like this:

|

1 2 3 4 5 6 7 8 9 |

CREATE OR ALTER PROC dbo.usp_SearchUsersByDate @LastAccessDateStart DATETIME, @LastAccessDateEnd DATETIME AS SELECT * FROM dbo.Users WHERE LastAccessDate >= @LastAccessDateStart AND LastAccessDate <= @LastAccessDateEnd ORDER BY DisplayName; GO |

Then you’ve probably forgotten something important: this query is vulnerable to parameter sniffing too!

Then you’ve probably forgotten something important: this query is vulnerable to parameter sniffing too!

After your daily stats job, if someone happens to call it for a year-long (or one-hour) date range, then you’re right back in hot water with a query plan that won’t work well for everyone.

And that’s why I teach a 3-day long Mastering Parameter Sniffing class. When I started writing it, I wasn’t sure I could fill 3 days, but the more I prepped, and the more I read of our past parameter sniffing posts, the more I realized 3 days might not even be long enough!

18 Comments. Leave new

I’m sure with a where like “WHERE @Location = @Location” parameter sniffing will not be your biggest issue. 😉

But that aside, wouldn’t adding an “optimize for unknown” be better in such a case? It will pretty much prevent the problem of who is first to execute. You will get a more general plan, but that may not be bad at all.

One problem I have noticed with leaving a long time between updating statistics is that at a certain point (depends on the number of changes to the table) SQL Server starts to extrapolate from the current statistics to determine the estimates and that can also give quite funky results.

Hahaha, good catch on the typo. Sure, as long as the data is evenly distributed, optimize for unknown is fine. In real life, that isn’t usually the case for me though – especially when you start dealing with date range searches.

OK, you made your point. I need to attend the Parameter Sniffing Class, but sometimes /always I dream of a less complex day at work. Why can’t I just start the Query Store and pin the exec plan and save my day?

Anders – I know, right? That’s also something we cover inside the class, why pinning plans with Query Store doesn’t work well for most parameter sniffing problems.

Hey Brent,

Erik said in his YouTube-Video “How to use Ola Hallengrens Maintenance scripts” that he would update stats daily and in your YouTube-Video “Watch Brent Write T SQL Part 1: Adding a Stats Check to sp_BlitzFirst” you said you are doing it weekly…I totally get the advantages and disadvantages that come with one or the other. But now I am confused with what I should do and why your opinions differ that much.

My situation is that I am responsible you quite a bunch of DBs where all are developed by other companies…we are using the application softwares that comes with it. Knowing all of these round about 700 DBs and their data structure is quite impossible. Also doing individual testing for if I am making changes to the statistics maintenance…no chance.

So any advice on a in your opinion “well-rounded” statistics maintenance will help me out here 🙂

Thanks in advance. You guys are awesome. Learned so much from you over the years and really appreciate it 🙂

Dennis – sure, for customized personal advice on your own personal situation, you can click Consulting at the top of the screen and schedule an engagement with me. Obviously, developing a custom strategy for someone with 700 DBs is kinda beyond what I can do in a blog post comment. Fair enough?

True true. I will talk to my team leader and see what we can do. I guess Corona will get in our way because of investments at the moment, but we’ll see 🙂

I updated to the newest version of sp_Blitz this week so I am researching this potential problem for the first time. I am seeing a near constant flow of statistic updates on my server which I imagine stems from leaving on Auto-Update Statistics like Erik suggested at https://www.brentozar.com/archive/2016/03/leave-auto-update-statistics/.

To generalize that leaves us with two pieces of advice: “updating statistics more than once a week can cause problems” and “updating statistics is usually painless so let the SQL Server do it whenever it wants”. Aren’t those in conflict? Would you still recommend leaving on Auto-Update for most users? If so, how do you figure out if you aren’t in the “most users” group? Does regularly seeing the Statistics Updated Recently flag in sp_BlitzFirst mean we are in the group that should turn off Auto-Update?

I totally understand if this is too complex of a topic to answer in the comments. However if that is the case, I would selfishly suggest that it might be a good idea for a future blog post.

Hi! Yeah, unfortunately that’s way far beyond what I can address in the comments. It’s exactly the kind of thing I do in my consulting, though. Gimme a holler and we can set something up. Thanks!

That’s fair. I wasn’t expecting anything specific to just my use case, but right now sp_Blitz will warn you if you turn off Auto-Update Statistics and sp_BlitzFirst will warn you if statistics are updated frequently.

The documentation for the Auto-Update Stats Disabled warning (https://www.brentozar.com/blitz/auto-update-stats-disabled/) is strongly in favor of frequent updates to statistics except in “rare edge cases”. Meanwhile this blog post, which serves as the documentation for the Statistics Updated Recently warning, seemingly flips that advice around and says that updating stats more than once a week is the edge case that you will avoid “until I have a reason to do it more frequently.”.

I’ll admit my comment was primarily motivated by the selfish desire to get an answer for myself, but the reason it was asked publicly is because these two pieces of advice appear to conflict and the general community could probably benefit from further discussion on how these two posts relate to each other.

Hi Brent!

Parameter sniffing is not resolved in SQL Server 2022:

CREATE INDEX [IX_Location] ON [dbo].[Users]([Location])

GO

Create Or ALTER PROCEDURE [dbo].[usp_UsersByReputation]

@Reputation int

AS

SELECT TOP 10000 *

FROM dbo.Users

WHERE Reputation=@Reputation

ORDER BY DisplayName

GO

Exec [dbo].[USP_SearchUsersByLocation] N’San Diego, CA’

Exec [dbo].[USP_SearchUsersByLocation] N’Paris, France’

Exec [dbo].[USP_SearchUsersByLocation] N’Bangalore, Karnataka, India’

Exec [dbo].[USP_SearchUsersByLocation] N’India’

Who told you it was?

I say.

No, I mean who told you it was fixed?

Create Or ALTER Procedure [dbo].[USP_SearchUsersByLocation]

(@Location Nvarchar(100))

As

Select * From dbo.Users Where Location = @Location

Order By DisplayName

We have a client that we recently discovered updates statistics daily twice a day. I’ll be curious to see their reasoning behind it when meeting with them again.

Before I start, I’ve learned an insane amount on your site, I’m just giving a point of view.

I think the title of this article is doing your readers a dis-service, I’m sure 100’s of DBA’s are changing the cadence of their maintenance jobs as I type, and I feel like this is half the story on Stats updates.

Accurate stats have a huge impact on reads, writes and cpu. Where the impact is really felt is databases with OLTP workloads. I have run tests where the row read reduction between the default sample size and full scans were staggering. Different scenario to updating them less frequently but an eye opener none the less.

For me I think of it more like updating Statistics doesn’t cause parameter sniffing, having to get a new execution plan and poorly designed DML/DDL does. To potentially sacrifice performance for everyone over poorly written sp’s doesn’t seem like the answer to me, and people really respect your opinion, including me, so I thought I’d write this and see if you replied.

By the way, your SQL ConstantCare looks amazing value for money!

Thanks, glad you like my work!

I think it sounds like you’re saying, “People should fix their code.” I hear ya – I’d sure love that too! But…