The tremendous success of science and technology in recent centuries, both in explaining the world around us and in improving the human condition, helped create the impression that we are on the brink of understanding the Universe. The world is complex, but we seem to have been able to reduce its complexity down to a relatively small number of fundamental laws. These laws are formulated in the language of mathematics, and the idea is that, even if we can’t solve all the equations describing complex systems, at least we can approximate the solutions, usually with the help of computers. These successes led to a feeling bordering on euphoria at the power of our reasoning. Eugene Wigner summed up this feeling in his famous essay, The Unreasonable Effectiveness of Mathematics in the Natural Sciences.

Granted, there are still a few missing pieces, like the unification of gravity with the Standard Model, and the roughly 95% of the mass-energy of the Universe that is unaccounted for, but we’re working on it… So there’s nothing to worry about, right?

Actually, if you think about it, the idea that the Universe can be reduced to a few basic principles is pretty preposterous. If this turned out to be the case, I would be the first to believe that we live in a simulation. It would mean that this enormous Universe, with all the galaxies, stars, and planets, was designed with one purpose in mind: so that a bunch of sentient monkeys on the third planet from a godforsaken star in a godforsaken galaxy would be able to understand it. So that they would be able to build, in their puny brains (maybe extended with some silicon chips and fiber optics), a perfect model of it.

How do we understand things? By building models in our (possibly computer-enhanced) minds. Obviously, this only makes sense if the model is smaller than the thing it describes, which is only possible if reality is compressible. Now compare the size and the complexity of the Universe with the size and the complexity of our collective brains. Even with lossy compression, the discrepancy is staggering. But, you might say, we don’t need to model the totality of the Universe, just the small part around us. This is where compositionality becomes paramount. We assume that the world can be decomposed, and that the relevant part of it can be modeled, to a good approximation, independently of the rest.
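The link between modeling and compressibility can be made concrete with an ordinary compressor. This is a minimal sketch, assuming Python’s standard `zlib` module as a stand-in for “building a model”: data with regular structure shrinks dramatically, while pseudo-random data of the same length does not shrink at all. The sample size, the repeated phrase, and the seed are arbitrary choices for illustration.

```python
import random
import zlib

N = 100_000  # number of bytes in each sample (arbitrary)

# A highly structured "world": a short pattern repeated over and over.
structured = (b"sun, planet, moon; " * (N // 19 + 1))[:N]

# An unstructured "world": pseudo-random bytes from a fixed seed (reproducible).
rng = random.Random(42)
unstructured = bytes(rng.getrandbits(8) for _ in range(N))

def ratio(data: bytes) -> float:
    """Compressed size divided by original size (smaller means more compressible)."""
    return len(zlib.compress(data, 9)) / len(data)

structured_ratio = ratio(structured)
unstructured_ratio = ratio(unstructured)
print(f"structured:   {structured_ratio:.4f}")
print(f"unstructured: {unstructured_ratio:.4f}")
```

Only the first case admits a “model” much smaller than the thing itself; for the second, the best description is essentially the data.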

Reductionism, which has been fueling science and technology, was made possible by the decompositionality of the world around us. And by “around us” I mean not only physical vicinity in space and time, but also proximity of scale. Consider that there are 35 orders of magnitude between us and the Planck length (which is where our most precious model of spacetime breaks down). It’s perfectly possible that the “sphere of decompositionality” we live in is but a thin membrane, more of an anomaly than the rule. The question is, why do we live in this sphere? Because that’s where life is! Call it the anthropic, or biotic, principle.
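As a quick check of the “35 orders of magnitude”, here is the arithmetic, assuming a human scale of about one meter and the standard value of the Planck length, roughly 1.616 × 10⁻³⁵ m:

```python
import math

PLANCK_LENGTH_M = 1.616e-35  # Planck length in meters (approximate)
HUMAN_SCALE_M = 1.0          # assumed characteristic size of a human, in meters

# Number of powers of ten separating the two length scales.
orders = math.log10(HUMAN_SCALE_M / PLANCK_LENGTH_M)
print(f"{orders:.1f} orders of magnitude")
```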

The first rule of life is that there is a distinction between the living thing and the environment. That’s the primal decomposition.

It’s no wonder that one of the first “inventions” of life was the cell membrane. It decomposed space into the inside and the outside. But even more importantly, every living thing contains a model of its environment. Higher animals have brains to reason about the environment (where’s the food? where’s the predator?). But even a lowly virus encodes, in its DNA or RNA, the tricks it uses to break into a cell. Show me your RNA, and I’ll tell you how you spread. I’d argue that the definition of life is the ability to model the environment. And what makes the modeling possible is that the environment is decomposable and compressible.

We don’t think much of the possibility of life on the surface of a proton, mostly because we think that the proton is too small. But a proton is closer to our scale than it is to the Planck scale. A better argument is that the environment at the proton scale is not easily decomposable. A quarkling would not be able to produce a model of its world that would let it compete with other quarklings and start evolution. A quarkling wouldn’t even be able to separate itself from its surroundings.
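The claim that a proton is closer to our scale than to the Planck scale is easy to verify on a logarithmic scale. A sketch, assuming a proton radius of about 0.84 × 10⁻¹⁵ m, a Planck length of about 1.616 × 10⁻³⁵ m, and a one-meter human scale:

```python
import math

PLANCK_LENGTH_M = 1.616e-35  # Planck length in meters (approximate)
PROTON_RADIUS_M = 0.84e-15   # proton charge radius in meters (approximate)
HUMAN_SCALE_M = 1.0          # assumed human scale in meters

# Orders of magnitude from the proton up to us, and down to the Planck length.
to_us = math.log10(HUMAN_SCALE_M / PROTON_RADIUS_M)
to_planck = math.log10(PROTON_RADIUS_M / PLANCK_LENGTH_M)
print(f"proton -> human:  {to_us:.1f} orders")
print(f"proton -> Planck: {to_planck:.1f} orders")
```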

Once you accept the possibility that the Universe might not be decomposable, the next question is, why does it appear to be so overwhelmingly decomposable? Why do we believe so strongly that the models and theories that we construct in our brains reflect reality? In fact, for the longest time people would study the structure of the Universe using pure reason rather than experiment (some still do). Ancient Greek philosophers were masters of such introspection. This makes perfect sense if you consider that our brains reflect millions of years of evolution. Euclid didn’t have to build a Large Hadron Collider to study geometry. It was obvious to him that two parallel lines never intersect (it took us two thousand years to start questioning this assertion–still using pure reason).

You cannot talk about decomposition without mentioning atoms. The Ancient Greeks came up with this idea by pure reasoning: if you keep cutting stuff, eventually you’ll get something that cannot be cut any more, the “uncuttable” or, in Greek, ἄτομον [atomon]. Of course, nowadays we know how to cut not only atoms but also protons and neutrons. You might say that we’ve been pretty successful in our decomposition program. But these successes came at the cost of constantly redefining the very concept of decomposition.

Intuitively, we have no problem imagining the Solar System as composed of the Sun and the planets. So when we figured out that atoms were not elementary, our first impulse was to see them as little planetary systems. That didn’t quite work, and we now know that, in order to describe the composition of the atom, we need quantum mechanics. Things get even stranger when decomposing protons into quarks. You can split an atom into free electrons and a nucleus, but you can’t split a proton into individual quarks. Quarks are permanently confined; they behave like nearly free particles only when probed at high energies.

Also, the masses of the three valence quarks add up to only about one percent of the mass of the proton. So where does the rest of the mass come from? From the energy of the virtual gluons and quark/antiquark pairs. So are those also constituents of the proton? Well, sort of. This decomposition thing gets really tricky once you get into quantum field theory.
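The one-percent figure is simple arithmetic. The sketch below assumes approximate Particle Data Group values for the up and down quark masses (these “current” masses depend on the renormalization scheme, so treat them as rough) and a proton mass of about 938.3 MeV/c²:

```python
UP_QUARK_MEV = 2.16      # approximate up-quark mass, MeV/c^2
DOWN_QUARK_MEV = 4.70    # approximate down-quark mass, MeV/c^2
PROTON_MEV = 938.27      # proton mass, MeV/c^2

# A proton's valence content is up + up + down.
valence_sum = 2 * UP_QUARK_MEV + DOWN_QUARK_MEV
fraction = valence_sum / PROTON_MEV
print(f"valence quarks: {valence_sum:.2f} MeV ({fraction:.1%} of the proton mass)")
```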

Human babies don’t need to experiment with falling into a precipice in order to learn to avoid visual cliffs. We are born with some knowledge of spatial geometry, gravity, and the (painful) properties of solid objects. We also learn to break things apart very early in life. So decomposition by breaking apart is very intuitive, and the idea of a particle, the ultimate result of breaking apart, makes intuitive sense. There is another decomposition strategy: breaking things into waves. Again, it was the Ancient Greeks who got there first: Pythagoras studied music by decomposing it into harmonics, and Aristotle suggested that sound propagates through the movement of air. Eventually we uncovered wave phenomena in light, and then in the rest of the electromagnetic spectrum. But our intuitions about particles and waves are very different. In essence, particles are supposed to be localized and waves are distributed. The two decomposition strategies seem to be incompatible.
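Decomposition into waves has a precise modern form: Fourier analysis. As a minimal sketch (a plain discrete Fourier transform in Python, chosen here for illustration), a “sound” built from a fundamental and its third harmonic can be taken apart again into exactly those two components:

```python
import cmath
import math

N = 64  # number of samples over one period of the fundamental

# A "sound": fundamental with amplitude 1.0 plus third harmonic with amplitude 0.5.
signal = [math.sin(2 * math.pi * n / N) + 0.5 * math.sin(2 * math.pi * 3 * n / N)
          for n in range(N)]

def dft(x):
    """Naive O(N^2) discrete Fourier transform."""
    size = len(x)
    return [sum(x[n] * cmath.exp(-2j * math.pi * k * n / size) for n in range(size))
            for k in range(size)]

spectrum = dft(signal)
# For a real sinusoid at bin k (0 < k < N/2), its amplitude is 2|X[k]|/N.
amplitudes = [2 * abs(c) / N for c in spectrum[: N // 2]]
for k, a in enumerate(amplitudes):
    if a > 1e-9:
        print(f"harmonic {k}: amplitude {a:.3f}")
```

Each harmonic is spread over the whole signal, which is exactly the sense in which a wave decomposition is non-local.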

Enter quantum mechanics, which tells us that every elementary chunk of matter is both a wave and a particle. Even more shockingly, the distinction depends on the observer. When you don’t look at it, the electron behaves like a wave; the moment you glance at it, it becomes a particle. There is something deeply unsatisfying about this description and, if it weren’t for its amazing agreement with experiment, it would be considered absurd.

Let’s summarize what we’ve discussed so far. We assume that there is some reality (otherwise, there’s nothing to talk about), which can be, at least partially, approximated by decomposable models. We don’t want to identify reality with models, and we have no reason to assume that reality itself is decomposable. In our everyday experience, the models we work with fit reality almost perfectly. Outside everyday experience, especially at short distances, high energies, high velocities, and strong gravitational fields, our naive models break down. A physicist’s dream is to create the ultimate model that would explain everything. But any model is, by definition, decomposable. We don’t have a language to talk about non-decomposable things other than describing what they aren’t.

Let’s discuss a phenomenon that is borderline non-decomposable: two entangled particles. We have a quantum model that describes a single particle. A two-particle system should be some kind of composition of two single-particle systems. Things may be complicated when particles are close together, because of possible interaction between them, but if they move in opposite directions for long enough, the interaction should become negligible. This is what happens in classical mechanics, and also with isolated wave packets. When one experimenter measures the state of one of the particles, this should have no impact on the measurement done by another far-away scientist on the second particle. And yet it does! There is a correlation that Einstein called “the spooky action at a distance.” This is not a paradox, and it doesn’t contradict special relativity (you can’t pass information from one experimenter to the other). But if you try to stuff it into either particle or wave model, you can only explain it by assuming some kind of instant exchange of data between the two particles. That makes no sense!
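A standard way to quantify this is Bell’s theorem in its CHSH form. The sketch below is the textbook comparison, not a model from this essay: it assumes the singlet-state correlation E(a, b) = −cos(a − b) predicted by quantum mechanics, and a hypothetical local model in which each pair shares a hidden angle λ and each detector outputs ±1 using only its own setting and λ. Every local model obeys |S| ≤ 2; the code checks one such model, it does not prove the bound.

```python
import math

# Detector settings (angles in radians) that maximize the quantum violation.
A, A_PRIME = 0.0, math.pi / 2
B, B_PRIME = math.pi / 4, 3 * math.pi / 4

def chsh(correlation):
    """CHSH combination S = E(a,b) - E(a,b') + E(a',b) + E(a',b')."""
    return (correlation(A, B) - correlation(A, B_PRIME)
            + correlation(A_PRIME, B) + correlation(A_PRIME, B_PRIME))

def quantum_correlation(a, b):
    """Spin-singlet prediction of quantum mechanics."""
    return -math.cos(a - b)

def local_correlation(a, b, samples=36_000):
    """Hypothetical local hidden-variable model: each pair shares an angle lam;
    each outcome depends only on the local setting and lam."""
    total = 0
    for k in range(samples):
        lam = 2 * math.pi * (k + 0.5) / samples        # evenly spaced hidden angles
        alice = 1 if math.cos(a - lam) >= 0 else -1    # local rule at detector A
        bob = -1 if math.cos(b - lam) >= 0 else 1      # anti-correlated local rule at B
        total += alice * bob
    return total / samples

s_quantum = chsh(quantum_correlation)
s_local = chsh(local_correlation)
print(f"|S| quantum: {abs(s_quantum):.4f}  (2*sqrt(2) = {2 * math.sqrt(2):.4f})")
print(f"|S| local:   {abs(s_local):.4f}  (Bell bound = 2)")
```

The local model reproduces perfect anticorrelation at equal settings, yet it cannot reach the quantum value; that gap is what resists being split into two independent single-particle models.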

We have an almost perfect model of quantum mechanical systems using wave functions until we introduce the observer. The observer is the Godzilla-like mythical beast that behaves according to classical physics. It performs experiments that result in the collapse of the wave function. The world undergoes an instantaneous transition: wave before, particle after. Of course an instantaneous change violates the principles of special relativity. To restore it, physicists came up with quantum field theory, in which the observers are essentially relegated to infinity (which, for all intents and purposes, starts a few centimeters away from the point of the violent collision in a collider). In any case, quantum theory is incomplete because it requires an external classical observer.

The idea that measurements may interfere with the system being measured makes perfect sense. In the macro world, when we shine light on something, we don’t expect to disturb it much; but we understand that the micro world is much more delicate. What’s happening in quantum mechanics is more fundamental, though. The experiment forces us to switch models. We have one perfectly decomposable model in terms of the Schrödinger equation. It lets us understand the propagation of the wave function from one point to another, from one moment to another. We stick to this model as long as possible, but a time comes when it no longer fits reality. We are forced to switch to a different, also decomposable, particle model. Reality doesn’t suddenly collapse. It’s our model that collapses, because we insist (we have no choice!) on decomposability. But if nature is not decomposable, one model cannot possibly fit all of it.

What happens when we switch from one model to another? We have to initialize the new model with data extracted from the old model. But these models are incompatible. Something has to give. In quantum mechanics, we lose determinism. The transition doesn’t tell us how exactly to initialize the new model, it only gives us probabilities.
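In standard quantum mechanics, the rule that supplies those probabilities is the Born rule: the probability of each outcome is the squared magnitude of its amplitude. A minimal sketch, with a hypothetical three-outcome state and a hypothetical helper `switch_to_particle_model` (a name invented here for illustration), showing that the wave model hands the particle model a probability distribution rather than a definite initial state:

```python
import random

def born_probabilities(amplitudes):
    """Born rule: probability of outcome i is |c_i|^2 / sum_j |c_j|^2."""
    weights = [abs(c) ** 2 for c in amplitudes]
    norm = sum(weights)
    return [w / norm for w in weights]

def switch_to_particle_model(amplitudes, positions, rng):
    """Hypothetical illustration: initialize the particle model with a definite
    position, sampled according to the Born probabilities of the wave model."""
    probs = born_probabilities(amplitudes)
    return rng.choices(positions, weights=probs, k=1)[0]

# Hypothetical wave function over three detector positions.
state = [1 + 0j, 1j, 0.5 + 0.5j]
positions = ["left", "middle", "right"]

probs = born_probabilities(state)
print([round(p, 3) for p in probs])

rng = random.Random(0)  # fixed seed so runs are reproducible
counts = {p: 0 for p in positions}
for _ in range(10_000):
    counts[switch_to_particle_model(state, positions, rng)] += 1
print(counts)
```

Running the switch many times recovers the distribution, but no single run is determined by the wave model.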

Notice that this approach doesn’t rely on the idea of a classical observer. What’s important is that somebody or something is trying to fit a decomposable model to reality, usually locally, although the case of entangled particles requires the reconciliation of two separate local models.

Model switching and model reconciliation also show up in the interpretation of the twin paradox in special relativity. In this case we have three models: the twin on Earth, the twin on the way to Proxima Centauri, and the twin on the way back. They start by reconciling their models–synchronizing the clocks. When the astronaut twin returns from the trip, they reconcile their models again. The interesting thing happens at Proxima Centauri, where the second twin turns around. We can actually describe the switch between the two models, one for the trip to, and another for the trip back, using more advanced general relativity, which can deal with accelerating frames. General relativity allows us to keep switching between local models, or inertial frames, in a continuous way. One could speculate that similar continuous switching between wave and particle models is what happens in quantum field theory.
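To put numbers on the three models, here is the special-relativistic arithmetic, assuming a hypothetical cruising speed of 0.8c, an instantaneous turnaround, and a distance to Proxima Centauri of about 4.24 light-years:

```python
import math

DISTANCE_LY = 4.24   # Earth to Proxima Centauri, light-years (approximate)
SPEED_C = 0.8        # hypothetical cruising speed as a fraction of c

gamma = 1 / math.sqrt(1 - SPEED_C ** 2)   # Lorentz factor for each inertial leg
earth_years = 2 * DISTANCE_LY / SPEED_C   # round-trip duration in the Earth frame
traveler_years = earth_years / gamma      # proper time summed over both legs

print(f"Earth twin ages:     {earth_years:.2f} years")
print(f"Traveling twin ages: {traveler_years:.2f} years")
```

Each leg on its own is symmetric; the mismatch appears only when the outbound and inbound models are stitched together at the turnaround and then reconciled with the Earth model.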

In math, the closest match to this kind of model switching is in the definition of topological manifolds and fiber bundles. A manifold is covered with maps, or charts: local models of the manifold in terms of simple n-dimensional spaces. Transitions between charts are well defined, but there is no guarantee that a single global chart covers the whole manifold. To my knowledge, there is no theory in which such transitions would be probabilistic.
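The circle is the simplest manifold that needs more than one chart. A sketch, using the standard stereographic projections from the north and south poles: each chart misses exactly one point, and on the overlap the transition map is the deterministic function y = 1/x.

```python
import math

def chart_north(theta):
    """Stereographic projection of (cos t, sin t) from the north pole (0, 1).
    Undefined at the north pole itself."""
    x, y = math.cos(theta), math.sin(theta)
    return x / (1 - y)

def chart_south(theta):
    """Stereographic projection from the south pole (0, -1).
    Undefined at the south pole itself."""
    x, y = math.cos(theta), math.sin(theta)
    return x / (1 + y)

def transition_north_to_south(u):
    """Transition map on the overlap (both poles excluded, so u != 0)."""
    return 1 / u

# Check that the transition map reconciles the two local models on the overlap.
for theta in [0.3, 1.0, 2.5, 4.0, 5.5]:
    u = chart_north(theta)
    v = chart_south(theta)
    assert math.isclose(transition_north_to_south(u), v)
    print(f"theta={theta}: north chart {u:.4f}, south chart {v:.4f}")
```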

Seen from a distance, physics looks like a very patchy system, full of holes. Conventional wisdom has it that we should be able to eventually fill the holes and connect the patches. This optimism has its roots in the astounding series of successes in the first half of the twentieth century. Unfortunately, since then we have entered an era of stagnation, despite a record number of people and resources dedicated to basic research. It’s possible that this is a temporary setback, but there is a definite possibility that we have simply reached the limits of decomposability. There is still a lot to explore within the decomposability sphere, and the amount of complexity that can be built on top of it is boundless. But there may be some areas that will forever be out of bounds to our reason.

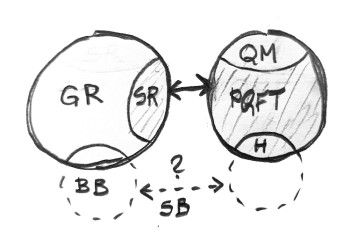

Fig 1. Current decomposability sphere.

- GR: General Relativity (gravity)

- SR: Special Relativity

- PQFT: Perturbative Quantum Field Theory (compatible with SR)

- QM: Quantum Mechanics (non-relativistic)

- BB: Big Bang

- H: Higgs Field

- SB: Symmetry Breaking (inflation)

May 22, 2020 at 4:37 pm

Interesting. I rather like it. I’m generally skeptical of theories that pick on the supposed limitations of the human mind (I suspect our level of sapience is kind of like Turing-power, interchangeable in principle with other forms of sapience), but ultimately the limitation you’re really looking at is limitation of composability, so, that particular skepticism seems not to apply.

Re the longevity of QM, another possibility I’ve considered is that QM limits and shapes what questions we ask, in a way that diverts us away from asking the right questions to falsify QM. This is, of course, not incompatible with what you suggest on limits of composability.

May 22, 2020 at 11:11 pm

I particularly liked “We have an almost perfect model of quantum mechanical systems using wave functions until we introduce the observer.” Some people, backed by experience, think there is no observer at all, as weird as that looks (my sentence is also ill-formulated in terms of “some” and “think”). I am always astonished at how much physics meets and agrees with “philosophy” (there should be a better term; I’m thinking of Alan Watts) when going deep into theories. In the words of Christopher Knight: “solitude bestows an increase in something valuable … my perception. But … when I applied my increased perception to myself, I lost my identity. There was no audience, no one to perform for … To put it romantically, I was completely free.” It made me think of that.

May 23, 2020 at 10:36 am

For an imho exceptionally clear-thinking account of interpretations of QM, I recommend JS Bell’s essay “Six possible worlds of quantum mechanics”; it’s in his anthology “Speakable and unspeakable in quantum mechanics”, which I find available on the Internet Archive. (Bell doesn’t frame the peculiarity of QM in terms of whether there’s an observer, but in terms of the relationship between continuous and discrete.)

May 28, 2020 at 7:57 pm

The premise that composability is only useful within a bounded locality is definitely true, particularly in the world of physics. (We are not bounded in pure mathematics in the same way.) I personally think that the universe we live in is not completely decomposable simply because it is simultaneously infinitesimally small and infinitely large. Discrete reasoning does not handle infinities well.

May 28, 2020 at 8:21 pm

Infinity is a mathematical concept. You’re trying to apply it to the universe. Do you see the problem?

May 28, 2020 at 8:25 pm

What if the universe really is infinite, though? That would mean that we cannot break it down atomically at some point. For practical purposes, this doesn’t matter too much, but it does affect our conceptual understanding of the universe.

June 13, 2020 at 5:14 pm

Are you supposing that non-decomposable systems transcend mathematical description? If so, why?

My reason for asking is that Philip Goff, a consciousness researcher, makes a similar argument about sensory qualities. He says that Galileo, in his 1623 treatise The Assayer, cleaved sensory qualities out of scientific discourse by declaring non-mathematical properties off-limits to natural philosophy.

Goff supports his claim, in part, by conflating quantitative language with all of mathematics (he explicitly describes mathematics as “purely quantitative”). Before reading your article, my reaction was to suppose that Goff’s argument fails because mathematics does, in fact, deal with non-numerical structures quite well. For example, Category Theory and modern Algebra are especially focused on the non-numerical.

My view was that mathematics is a very precise language and that sensory qualities are as susceptible to mathematical description as anything. However, your article seems to raise another possibility. Perhaps sensory qualities are non-decomposable and hence not susceptible to mathematical description.

This runs counter to my every instinct. My entire reason for investing myself in Category Theory has been on informed faith that it would help render so-called “qualitative” properties mathematical. Yet I respect you enough as a mathematician to be very unsettled by your argument.

I’d like to hear more about why you think non-decomposability is so anti-mathematical that it should be out of reach for reason. Shouldn’t it always be possible to speak of things mathematically? Even if sometimes we need to resort to probability to capture the inaccessible bits? If not, why not?

June 13, 2020 at 6:12 pm

“Shouldn’t it always be possible to speak of things mathematically?” is like asking “Shouldn’t it always be possible to speak with words?” Of course, that’s what speaking is.

June 13, 2020 at 7:04 pm

That’s what I thought! But then you said, “But there may be some areas that will forever be out of bounds to our reason.” Which sounds a lot like

‘There are some areas we have no mathematical vocabulary for.’ Which is to say, ‘There are some things we have no words for, so we must pass over them in silence.’ I wanted to hear more about what caused you to say such a thing, and why non-decomposability would cause a thing to “forever be out of bounds to our reason.”

June 14, 2020 at 8:29 am

What I’m saying is that there is absolutely no reason to believe that the universe can be modeled using decomposable schemes of mathematics. It seems that you take it for granted. But try to find arguments to convince me. I don’t think there are any.

June 14, 2020 at 12:50 pm

I’m sorry if there’s been a misunderstanding. I’m not trying to disagree with you. I’m trying to ask a clarifying question. I am asking if you are identifying “decomposable schemes of mathematics” with all of mathematics. If so, why do you think all mathematical schemes are decomposable? If not, why do you think non-decomposable structures are “out of bounds to our reason” (despite being within the bounds of mathematics)?

I’m just trying to clarify how you got from non-decomposable to beyond reason.

For instance, if physics is truly, deeply, non-local then it would be exceedingly hard to gather the data needed to complete any model beyond a certain point, because relevant bits would be far apart and hard to find.

But that wouldn’t be beyond reason. That wouldn’t be beyond mathematics. That would only be beyond current technology. But it certainly wouldn’t be beyond our ability to express mathematically.

Clearly you mean something other than “beyond technology.” I’m just trying to understand what it is. I’m trying to understand if you think there are some things in the universe that are truly beyond expression, or if you think something else—and if so what that might be.

I’m sorry this got drawn out. I’m not trying to heckle you. I’m trying to understand your intent so that I can cite your idea without misrepresenting you.

June 14, 2020 at 2:39 pm

I wasn’t sure which part of my reasoning didn’t convince you. For me it’s obvious that mathematics is the science of decomposition. Just look at any math book or paper. It’s composed of theorems and proofs. A theorem is composed of assumptions and the thesis. The proof goes step by step. A follows from B, B follows from C, C follows from the axioms that we assumed, and so on. Reason and understanding are by definition decompositional. Anything else is faith.

June 14, 2020 at 3:49 pm

I think I see now. So you weren’t talking about non-factorizability, non-locality, or any other kind of holistic description of phenomena (this is what I thought you meant by “non-decomposable”). Nor were you talking about systems whose complexity exceeds human cognitive bandwidth (such as almost anything we study nowadays). For you, decomposability means step-by-step reasoning (which, from your comment, is synonymous with mathematical description)?

If I understand you correctly, you believe that there are some aspects of the natural world that cannot be described through step-by-step reasoning. Is that correct? They are immune to mathematical description because they are non-decomposable?

Moreover, evidence for these non-decomposable phenomena appears as gaps in our models, such as the gap between particle-like and wave-like descriptions of subatomic physics. You believe that some of these gaps may be irreconcilable? Am I understanding you correctly?

If I’m understanding you correctly, then I have one final question. How can we know which scientific gaps are temporary and which come from non-decomposability? More precisely, conceding that we can’t know for sure, how can we make an educated guess?

PS. Thanks for humoring me. Thanks for your time.

June 14, 2020 at 4:59 pm

Obviously I don’t have a crystal ball, so my guess is as good as yours. I’m only exposing hidden assumptions that a lot of people are making about the relationship between science and nature. Being a physicist I know first-hand how huge the gaps in our knowledge are–something that is not widely advertised. One of the people who are quite vocal about it is Sabine Hossenfelder, whose blog (and a book) you might find interesting http://backreaction.blogspot.com/. It’s possible that nature is fully decomposable, but that would be an amazing discovery that would require further explanation (are we living in a simulation?!).

June 15, 2020 at 6:35 am

Ok, fair enough. You made a blog post and I’m trying to pin down answers like it was a published book!

I agree with you that nature is likely infinitely complex. My personal view is that nature is likely infinitely decomposable: no gap is forever irreconcilable, but every new insight reveals new gaps. It seemed like you were suggesting that some gaps are irreconcilable. I still haven’t figured out if that’s what you’re saying, and I see that I won’t get an answer.

Yes I’ve read Hossenfelder’s book. She’s like a twenty-first-century Karl Popper.

Take care

August 15, 2020 at 4:52 am

For anyone who hasn’t seen it, Bartosz has a great talk about the limits of composition https://vimeo.com/242784236

August 16, 2020 at 9:15 am

The video puts a lot of emphasis on how supposedly-limited the human brain is. Modern culture has been deeply infected by a sort of… I really want to call it “computer-worship”; an over-emphasis on what formal systems, technological artifacts, can do, to the exclusion of what human minds are actually good at. Most talk about AIs “understanding” things is based on an incautious supposition that AIs understand anything at all. I suspect AIs, in the current sense, represent perfect absence of understanding, as piling up lots of details isn’t the point of the exercise. Thinking in terms of formal systems is addictive; one gets to disregarding anything inconsistent with that way of thinking, and then, looking at what’s left, concludes that everything is consistent with it. We don’t really have a proper handle on what else to do, having only started seriously exploring formalism around two and a half centuries ago (Hamilton’s discovery of quaternions is a key event in that, but I’ve recently been set on to Gauss as a possible originator of the trend). Two and a half centuries is scarcely a blink of an eye, in terms of progress toward understanding. As best I can tell, we advanced from orality to literacy about two and a half millennia ago; from verbality to orality about fifty thousand years ago; and may have become sapient about three million years ago. It strikes me as getting ahead of ourselves to suppose we’ve yet come far enough to be qualified to assess the limits, or lack of limits, of our ability to understand.

Part of the problem here seems to me to be that scientists often fail to think of their theories as theories, instead treating them as things we “know” (physicists seem especially guilty of this). There’s a worrisome lack of scientific humility in that. The assumption that formalism is all there is to understanding also seems lacking in humility, and the supposition that we’re yet qualified to assess the limits of our understanding may be of the same ilk.

Btw, a couple of stray thoughts. (1) I don’t think I agree that Occam’s Razor is necessarily about how we think. Set aside the difference between “entities should not be multiplied unnecessarily” versus “among competing hypotheses, the one with the fewest assumptions should be selected”. If we have a choice between two theories and one of them is simpler, why shouldn’t we use the one that’s simpler; but, set that aside too. Each assumption in a hypothesis is an opportunity to introduce problems into the hypothesis; therefore, the more assumptions a hypothesis has, the more opportunities for problems. Therefore a hypothesis is likely to have fewer problems if it’s got fewer assumptions. That’s not about the people wielding the hypotheses, and it’s actually not about “nature”, the subject of the hypotheses, either; it’s about the hypotheses themselves. (2) Statements like “a monad is a monoid in the category of endofunctors” put me in mind of E.T. Bell’s attribution to Lagrange of the view “that a mathematician has not thoroughly understood his own work till he has made it so clear that he can go out and explain it to the first man he meets on the street.”

I also note, how decomposable something is depends on what structure one applies to it. Different decompositions of the same thing aren’t necessarily arranged in a linear order; indeed, I’ve suggested that a progression of increasingly complicated theories (such as our theories of basic physics) may be a sign that we’ve got a wrong assumption somewhere that is causing the problem. One can even imagine jumping to a meta-level and developing a theory of different ways of decomposing things.

August 21, 2021 at 2:49 pm

“To my knowledge, there is no theory in which such transitions would be probabilistic.” You might be interested in statistical manifolds, where you work with families of probability distributions within an affine framework.