Today I spoke at the (Virtual) Cloud Native Rejekts conference on The Need for A Cloud Native Tunnel. In this post I'll introduce the talk and the slides, and share some links to help you get started with exploring inlets.

In my talk I cover how the network has always been the problem, from when I was a teenager at school contending with HTTP proxies, to the present day, where medical companies struggle to connect to radiography machinery at remote hospital sites to obtain patient data.

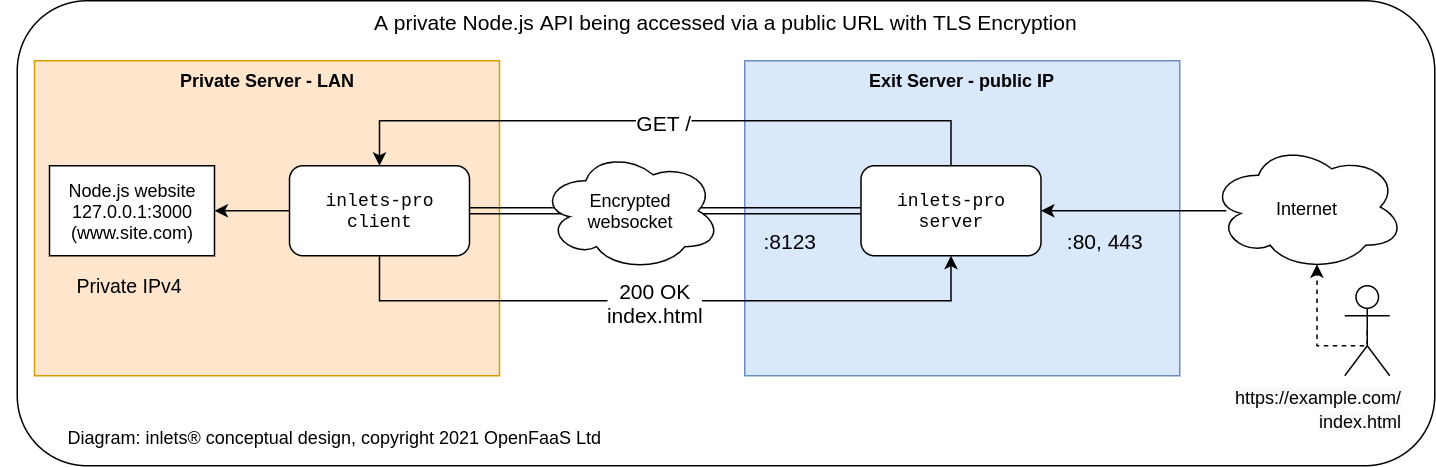

One of the main challenges teams face is connecting a service on a private network to a public one, along with other variations of this scenario. The talk compares the options for bridging networks, and other tunnel solutions, with inlets, and explains why customers and the community are now choosing a Cloud Native tunnel.

The slides

You can view the abstract here.

There were some tweets during the talk, and if you follow me on Twitter @alexellisuk you can get the recording as soon as it's ready.

For the source code of the demo with the Pimoroni Unicorn LED board, see: unicorn_server.py

You'll have a chance to trigger this LED panel from @pimoroni live during my talk on @inletsdev at @rejektsio today - see my pinned tweet. pic.twitter.com/Zgo4L7uAuc

— Alex Ellis (@alexellisuk) April 1, 2020

Great session @alexellisuk @rejektsio #VirtualRejekts pic.twitter.com/spzPI2bTMY

— Iván Camargo (@ivanrcamargo) April 1, 2020

The video is now live as part of the live-stream:

View now: Cloud Native Rejekts recording (my talk starts at 9:32:26)

inlets tooling

Let's cover the two versions of inlets which are available, how they work together and what automation exists to make things easier for you.

There are two proxies:

- inlets OSS - HTTP / L7 proxy suitable for webpages, APIs and websockets, you manage your own link encryption

- inlets PRO - TCP / L4 proxy that can proxy any TCP traffic such as SSH, MongoDB, Redis, TLS, IngressControllers for LetsEncrypt and so forth. Inlets PRO also adds automatic link encryption to any traffic.
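
To illustrate the L7 versus L4 distinction: an HTTP (L7) proxy parses each request, so it can make decisions such as choosing an upstream per hostname, while a TCP (L4) proxy only copies bytes and never looks inside them, which is why it can carry SSH, Redis or already-encrypted TLS. The sketch below shows Host-header routing in Python. It is not inlets code; the function names `host_of` and `pick_upstream` and the routing table are hypothetical.

```python
from __future__ import annotations


def host_of(raw_request: bytes) -> str | None:
    """Return the lower-cased Host header (minus any :port) from a raw HTTP/1.1 request head."""
    head, _, _ = raw_request.partition(b"\r\n\r\n")
    for line in head.decode("latin-1").split("\r\n")[1:]:  # skip the request line
        name, sep, value = line.partition(":")
        if sep and name.strip().lower() == "host":
            value = value.strip().lower()
            host, sep, port = value.rpartition(":")
            # Strip only a trailing numeric port, so "[::1]" style IPv6 literals stay intact.
            return host if sep and port.isdigit() else value
    return None


def pick_upstream(raw_request: bytes, routes: dict[str, str], default: str | None = None) -> str | None:
    """Hypothetical L7 routing: choose an upstream URL based on the request's Host header."""
    host = host_of(raw_request)
    return routes.get(host, default) if host else default


if __name__ == "__main__":
    routes = {"api.example.com": "http://127.0.0.1:3000"}
    print(pick_upstream(b"GET / HTTP/1.1\r\nHost: api.example.com\r\n\r\n", routes))
```

An L4 proxy cannot do this without terminating TLS itself, which is one reason the PRO version leaves routing decisions to whatever runs behind it, such as an IngressController.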

Each tunnel has two parts:

- The server - known as the exit server or exit node. It faces a public network and exposes two ports: a control port for the client end, e.g. 8080 or 8123, and a data port for your customers, e.g. 80/443

- The client - which acts like a router or gateway to send incoming requests to some upstream server or to localhost on a given port

You can automate the creation and running of the client and server parts with:

- inletsctl - a simple CLI to provision cloud hosts, a bit like what you'd expect from Terraform, which also starts the server on the new host

- inlets-operator - a Kubernetes Operator with its own Tunnel CRD that observes LoadBalancer services, creates a VM or remote host, and then deploys a client Pod for the uplink.

You can also run the client and server binaries yourself.

An example of what you can do

One of the leading tutorials shows how to get an IP address for your IngressController in Kubernetes with inlets PRO. This means you can then get a free TLS certificate from LetsEncrypt and expose all your APIs via Ingress definitions. It works great with Minikube, k3s, KinD and even a Raspberry Pi, if that's your thing.

When you add that all together, you can do things like deploy a Docker registry in around 5 minutes with authentication and link-level encryption.

Links and getting started

To download any of the tools we've talked about here, visit the documentation site at: https://docs.inlets.dev/

Join the community through OpenFaaS Slack and the #inlets channel

Buy your own inlets SWAG and support the project with the OpenFaaS Ltd Store

Feel free to contact me about how we can help your team gain confidence in adopting Kubernetes and migrating to the cloud: alex@openfaas.com