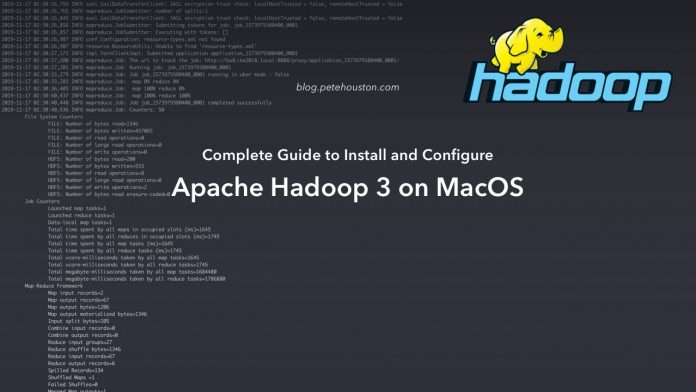

This is a complete guide to installing and configuring Apache Hadoop 3 on macOS. I will show you the simplest way to do it so you can get it running on your Mac.

Why another Hadoop installation tutorial?

I used to work with Hadoop 2.6 and below on Linux, where it is very easy to set up. However, I now need to set it up on my Mac for development work, and I need the latest version, Apache Hadoop 3.2.1. So I tried to install it the same way as on Linux, BUT it didn’t work!

Google for help? The thing is, at the time of this writing, I can’t find a single article about setting up Hadoop 3+ on a Mac. All I can find are articles for previous Hadoop versions. The steps are supposed to be the same, you might ask? Yes, indeed, but a version update always brings a few details that older guides miss.

Anyway, if you have any problem setting up Apache Hadoop 3+ on your Mac, this article might help you get past it.

Pre-requisites

This guide uses Hadoop 3.2.1, the latest version at the time of writing and the one Homebrew fetches by default.

So here are the things you need to have:

- Java 8

- Homebrew (the deps magician of the Mac)
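Before installing, it can help to confirm that both prerequisites are present. The sketch below is one way to do that. `java_major` is a hypothetical helper (not part of Java or Homebrew) that reduces a version string such as 1.8.0_241 to its major number, and the check assumes `java -version` prints the version inside double quotes.

```shell
# Hypothetical helper: reduce a Java version string to its major number.
# Legacy strings such as 1.8.0_241 map to 8; newer ones such as 11.0.2 map to 11.
java_major() {
  ver="$1"
  case "$ver" in
    1.*) ver="${ver#1.}" ;;        # drop the legacy "1." prefix
  esac
  printf '%s\n' "${ver%%[._-]*}"   # keep everything before the first separator
}

# Report what is installed; neither check changes anything on the machine.
if command -v java >/dev/null 2>&1; then
  raw="$(java -version 2>&1 | awk -F'"' '/version/ { print $2; exit }')"
  echo "Java major version: $(java_major "$raw")"
else
  echo "java not found on PATH"
fi

if command -v brew >/dev/null 2>&1; then
  echo "Homebrew found"
else
  echo "brew not found on PATH"
fi
```

If the reported major version is not 8, this guide's steps may still work, but that is outside what was tested here.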

Install and Configure Apache Hadoop 3.2

Step 1: Update brew and install latest Hadoop

$ brew install hadoop

The command fetches the latest Hadoop version and installs it on your Mac.

By default, the Hadoop installation directory is /usr/local/Cellar/hadoop/3.2.1.

By the time you read this article, the version may have changed, so the location will be the matching version directory, /usr/local/Cellar/hadoop/X.Y.Z.
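Since the version directory changes over time, one option is to ask Homebrew for the prefix instead of typing it by hand. This is a minimal sketch: `hadoop_conf_dir` and `HADOOP_PREFIX` are hypothetical names introduced here, and it assumes the configuration lives under libexec/etc/hadoop as in the paths used in this article.

```shell
# Hypothetical helper: build the Hadoop configuration directory from an
# installation prefix, e.g. /usr/local/Cellar/hadoop/X.Y.Z.
hadoop_conf_dir() {
  printf '%s/libexec/etc/hadoop\n' "${1%/}"   # tolerate a trailing slash
}

# Ask Homebrew for the prefix when it is available; otherwise fall back
# to the path used in this article.
if command -v brew >/dev/null 2>&1; then
  HADOOP_PREFIX="$(brew --prefix hadoop)"
else
  HADOOP_PREFIX="/usr/local/Cellar/hadoop/3.2.1"
fi

echo "Config directory: $(hadoop_conf_dir "$HADOOP_PREFIX")"
```

`brew --prefix hadoop` usually prints a stable path that links to the current version directory, so the result should keep working after an upgrade.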

Step 2: Update the HADOOP_OPTS environment variable

Open the file /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/hadoop-env.sh and add this line at the bottom:

export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Djava.security.krb5.realm= -Djava.security.krb5.kdc="

Or, if the file already has a line with export HADOOP_OPTS, you can edit that line instead.
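If you prefer to script this step, the line can be appended without risking a duplicate on a second run. The following is only a sketch; `append_once` is a hypothetical helper, not something Hadoop provides.

```shell
# Hypothetical helper: append a line ($2) to a file ($1) only if that exact
# line is not already present, so running it twice does not duplicate it.
append_once() {
  grep -qxF -- "$2" "$1" 2>/dev/null || printf '%s\n' "$2" >> "$1"
}
```

It could be called as `append_once /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/hadoop-env.sh 'export HADOOP_OPTS="-Djava.net.preferIPv4Stack=true -Djava.security.krb5.realm= -Djava.security.krb5.kdc="'`. Note that it only matches the exact same line, so an existing export HADOOP_OPTS line with different options would still need to be edited by hand.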

Step 3: Update core-site.xml

Open the file /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/core-site.xml and update it as below.

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/Cellar/hadoop/hdfs/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

</configuration>

Some notes:

- hadoop.tmp.dir: this property sets where Hadoop keeps its local temporary data, such as the storage for the datanode, namenode and HDFS. You can put it in any directory you want, but make sure to give it appropriate permissions.

- fs.defaultFS: this is the new name for the deprecated key fs.default.name.
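To cover the permission note for hadoop.tmp.dir, the directory can be created and checked up front. This is a rough sketch; `ensure_writable_dir` is a hypothetical helper introduced for illustration.

```shell
# Hypothetical helper: create a directory (and its parents) and confirm
# the current user can write to it. Returns non-zero if either step fails.
ensure_writable_dir() (
  target="$1"
  mkdir -p "$target" || exit 1
  [ -w "$target" ]
)
```

For the value used above, that would be `ensure_writable_dir /usr/local/Cellar/hadoop/hdfs/tmp`. A non-zero result suggests the directory needs different ownership or permissions before the namenode is formatted.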

Step 4: Update hdfs-site.xml

Open the file /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/hdfs-site.xml and add the configuration below.

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

Why is the value 1? Well, for development purposes, pseudo-distributed (single-node cluster) mode is enough. It runs just one datanode, so the replication factor can only be 1.
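After editing the XML files, a quick read-back can catch a typo in a property name. The sketch below uses a hypothetical helper, `conf_value`, that pulls a value out of a file with awk. It is not a real XML parser and assumes each <name> appears before its <value>, as in the snippets above.

```shell
# Hypothetical helper: print the <value> that follows <name>KEY</name>
# in a Hadoop XML config file. $1 = file, $2 = property name.
conf_value() {
  awk -v key="$2" '
    /<name>/  { n = $0; sub(/.*<name>/, "", n); sub(/<\/name>.*/, "", n) }
    /<value>/ {
      if (n == key) {
        v = $0; sub(/.*<value>/, "", v); sub(/<\/value>.*/, "", v)
        print v; exit
      }
    }
  ' "$1"
}
```

For example, `conf_value /usr/local/Cellar/hadoop/3.2.1/libexec/etc/hadoop/hdfs-site.xml dfs.replication` should print the value set above. Once Hadoop is on your PATH, `hdfs getconf -confKey dfs.replication` asks Hadoop itself for the effective value, which is the more reliable check.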

Step 5: Format and test filesystem

We need to initialize the distributed filesystem before use, so we format it first.

$ hdfs namenode -format

Let’s try some commands to test it. But before that, we should start the DFS daemons, which include the DataNode, NameNode and SecondaryNameNode, by issuing the following commands:

$ cd /usr/local/Cellar/hadoop/3.2.1/sbin

$ ./start-dfs.sh

If there is no error message in the output, we can continue with the commands below.

$ hdfs dfs -ls /

$ hdfs dfs -mkdir /input

$ touch data.txt

$ echo "Hello Hadoop" >> data.txt

$ hdfs dfs -put data.txt /input

$ hdfs dfs -cat /input/data.txt

If the last command prints the content we wrote, which is Hello Hadoop, then it works.
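If the commands above fail, a useful first check is whether all three daemons are actually running. The JDK's `jps` tool lists running Java processes by class name; the sketch below filters that list. `missing_daemons` is a hypothetical helper, and it assumes the usual two-column `jps` output of process id and name.

```shell
# Hypothetical helper: read `jps` output on stdin and print each daemon
# name given as an argument that does not appear in it.
missing_daemons() {
  listing="$(cat)"
  for name in "$@"; do
    printf '%s\n' "$listing" |
      awk -v want="$name" '$2 == want { found = 1 } END { exit !found }' ||
      printf '%s\n' "$name"
  done
}
```

Running `jps | missing_daemons NameNode DataNode SecondaryNameNode` prints nothing when everything is up, and otherwise names the daemon to look for in the Hadoop logs. The same helper could be pointed at ResourceManager and NodeManager once YARN is started.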

Step 6: Executing sample MapReduce job in a JAR package

Now we need to verify that a MapReduce job can run. This can be done by following the instructions in my hadoop-wordcount GitHub repository.
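As a quick alternative to the repository, and not a replacement for its instructions, Hadoop distributions ship an examples JAR that includes a wordcount job. The sketch below assumes the Homebrew layout used in this article, with the JAR under libexec/share/hadoop/mapreduce; `examples_jar` and `run_wordcount` are hypothetical helper names.

```shell
# Hypothetical helper: path of the bundled examples JAR for a given install
# prefix ($1) and Hadoop version ($2). The layout is an assumption based on
# the Homebrew install described in this article.
examples_jar() {
  printf '%s/libexec/share/hadoop/mapreduce/hadoop-mapreduce-examples-%s.jar\n' "${1%/}" "$2"
}

# Run the bundled wordcount example: $1 = HDFS input dir, $2 = HDFS output dir.
# The output directory must not exist yet, or the job will refuse to start.
run_wordcount() {
  hadoop jar "$(examples_jar /usr/local/Cellar/hadoop/3.2.1 3.2.1)" wordcount "$1" "$2"
}
```

With the data.txt file from step 5 in place, `run_wordcount /input /output` followed by `hdfs dfs -cat /output/part-r-00000` should list each word with its count.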

Step 7: Configure commands for shell

Instead of changing directory into /usr/local/Cellar/hadoop/3.2.1/sbin to execute commands, we can add it to the global PATH, so the commands can be called from anywhere in the terminal or shell.

Append the following line to your shell configuration file, such as /etc/profile, ~/.bashrc, ~/.bash_profile, ~/.profile or ~/.zshrc, depending on your environment.

I prefer to use zsh, so I put it into ~/.zshrc on my Mac.

export PATH=$PATH:/usr/local/Cellar/hadoop/3.2.1/sbin

Step 8: Configure complete Hadoop start command

By default, Hadoop uses the local framework, which is meant for debugging and development. If you want to use the YARN framework on your local machine, you need to start YARN as well, which is done by:

$ /usr/local/Cellar/hadoop/3.2.1/sbin/start-yarn.sh

If you’ve done step 7, then just type start-yarn.sh.

So, your Hadoop environment might need two commands to start, and you can combine them into one command with an alias.

alias hadoop-start="/usr/local/Cellar/hadoop/3.2.1/sbin/start-dfs.sh;/usr/local/Cellar/hadoop/3.2.1/sbin/start-yarn.sh"

alias hadoop-stop="/usr/local/Cellar/hadoop/3.2.1/sbin/stop-dfs.sh;/usr/local/Cellar/hadoop/3.2.1/sbin/stop-yarn.sh"

There you go, Hadoop is ready to work on your Mac.
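The PATH entry and the aliases above repeat the versioned path several times, so they all need editing after an upgrade. One possible variation keeps the path in a single variable and uses shell functions instead. This is only a sketch: `HADOOP_SBIN`, `path_append`, `hadoop_start` and `hadoop_stop` are hypothetical names, and unlike the aliases, `hadoop_start` only starts YARN if the HDFS script succeeds.

```shell
# Directory holding the start/stop scripts; override HADOOP_SBIN if your
# version or prefix differs from the one used in this article.
HADOOP_SBIN="${HADOOP_SBIN:-/usr/local/Cellar/hadoop/3.2.1/sbin}"

# Hypothetical helper: append a directory to PATH unless it is already there,
# so sourcing the shell configuration twice does not duplicate the entry.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;
    *) PATH="$PATH:$1" ;;
  esac
}

# Start HDFS first, then YARN; stop them in the reverse order.
hadoop_start() { "$HADOOP_SBIN/start-dfs.sh" && "$HADOOP_SBIN/start-yarn.sh"; }
hadoop_stop()  { "$HADOOP_SBIN/stop-yarn.sh"; "$HADOOP_SBIN/stop-dfs.sh"; }

path_append "$HADOOP_SBIN"
export PATH
```

Placed in ~/.zshrc or a similar file, this makes `hadoop_start` and `hadoop_stop` available in every new terminal.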

In conclusion

Setting up Apache Hadoop 3+ isn’t that complicated. Follow the steps above and you will have it working in no time.

However, if you run into any problem, take a look at this Apache Hadoop troubleshooting guide. It might help you fix it.