Originally published on Medium.

Last year, I taught a Data Analytics bootcamp at UC Berkeley Extension. In 6 months, students who'd never programmed before learned Excel, Python, pandas, JavaScript, D3, basic machine learning, and more.

What would you guess was the toughest thing for most students to learn? Machine learning? JavaScript and D3?

Deploying a Flask app to Heroku.

I empathize. When I first learned to program, and got through the pain of setting up my environment, it was easy to iterate. I used a REPL to experiment:

>>> name = "World"

>>> print(f'Hello, {name}')

Hello, World

or wrote a script that I could quickly run and test on my local machine:

$ python hello.py

Hello, World

Now I'm asked to deploy an app to a service like Heroku. Suddenly, I need to learn:

- git

- The Heroku CLI

- The Procfile

- and Gunicorn

What's more, each deploy can take minutes, so each mistake takes longer to troubleshoot and fix.

I dealt with the pain of deploying apps before Heroku, so I appreciate its simplicity. But my students lacked that context, so Heroku seemed needlessly complex.

Airflow was my Heroku

At Pipedream, we use Airflow to run scheduled jobs.

When I first used Airflow, I needed to run a simple Python script on a schedule. I wrote that script in 15 minutes. Then I set up Airflow. This involved:

- Reading the README for the Helm chart (we use Kubernetes)

- Realizing I failed to include some specific config, trying again

- Realizing there was a typo in the documented config, trying again

- Setting up some Kubernetes secrets

- Troubleshooting a PersistentVolumeClaim

- Setting some env vars and airflow.cfg config

- And lots of deep dives into the Airflow docs and Stack Overflow

I felt a lot like my students did with Heroku. I just wanted it to work, but had to learn a handful of new concepts and tools all at once.

Now, I love Airflow. I appreciate its dependency management, backfill, automatic retries, and all the things that make it a great job scheduler. But I didn't need any of that for my original use case. I just wanted to run a cron job.

cron has limitations. It doesn't have built-in error handling or retry, and has to be run on a machine that someone has to maintain. Modern job schedulers improve on it substantially, but lose its simplicity.
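To make the retry gap concrete, here's a minimal sketch of the kind of wrapper you end up writing yourself when a script runs under plain cron. The name `run_with_retries` and its parameters are my own, invented for illustration; they aren't part of cron, Airflow, or any library.

```python
import time


def run_with_retries(job, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call job() until it succeeds or max_attempts is reached.

    Waits base_delay * 2**(attempt - 1) seconds between attempts
    (simple exponential backoff) and re-raises the last exception
    if every attempt fails.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return job()
        except Exception as exc:
            if attempt == max_attempts:
                # Out of attempts: let the failure surface to the caller.
                raise
            delay = base_delay * 2 ** (attempt - 1)
            print(f"Attempt {attempt} failed ({exc}); retrying in {delay}s")
            sleep(delay)
```

A script like hello.py would wrap its main function in `run_with_retries(...)`, and cron would still only know how to start it on a schedule. Alerting on the final failure and keeping the machine alive remain your problem.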

Keep it simple

As a teacher and developer, I care very deeply about improving developer tools. I joined Pipedream for this reason.

When we built the Cron Scheduler, we tried to marry the simplicity of cron with a powerful programming environment. I believe it's the easiest way to run a job on a schedule. There's no infra or cloud resources to manage, and it's free.

When I schedule a job on Pipedream, I:

- Create a workflow

- Set the schedule

- Write the code

I created this one-minute video to show you how this works:

Every workflow on Pipedream begins with a trigger: HTTP requests, emails, or a cron schedule.

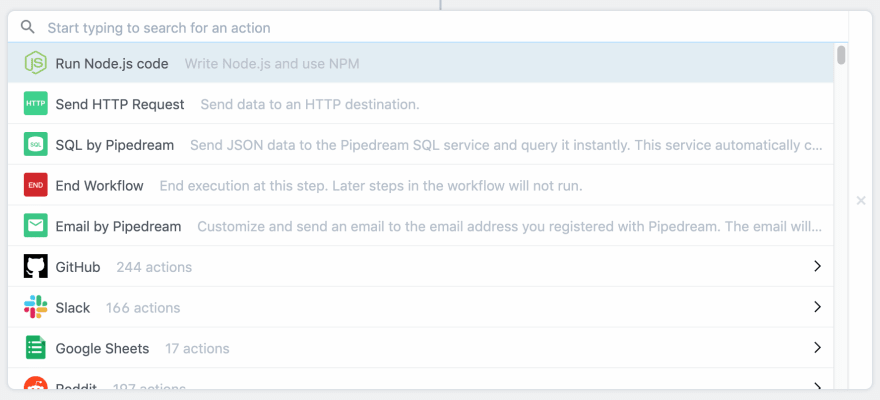

After I select my trigger, I add steps: run any Node.js code (Python coming soon), send an HTTP request or an email, or interact with the APIs of built-in apps like Slack, GitHub, Google, Reddit, Shopify, AWS, and more.

Standard output and error logs appear directly below the step that produced them. If a job fails, I'm notified via email, and I can replay that job in one click.

Workflow templates are also public. I can share them with anyone, and they can fork, modify, and run them in their own accounts.

Take a look at these examples and try running one on your own:

- Send popular /r/doggos posts to a Slack channel

- Query a PostgreSQL DB on a schedule, send results to Slack, email, or SMS

- Run Node.js code on each new item from an RSS feed

- Google Alerts for Hacker News

- Rebuild your GitHub Pages site nightly

We ❤️ feedback

Our beta release is our first effort at making job scheduling and workflow management easy, but we want you to use the product and give us honest feedback about how it can improve.

We'd love it if you joined our Slack community and added new feature requests to our backlog. And you can reach out to our team anytime. We'd love to hear from you.
