I seem to have a habit of writing about super powerful machines whose many cores are laid low by misuse of locks. So. Yeah. It’s that again.

But this one seems particularly impressive. I mean, how often do you have one thread spinning for several seconds in a seven-instruction loop while holding a lock that stops sixty-three other processors from running. That’s just awesome, in a horrible sort of way.

Update, November 2020: Microsoft has formally acknowledged the bug and said that it is fixed. They said that “root cause is that the search for free regions of the volsnap CoW bitmap were incorrectly unbounded” and I have inferred that this is most likely to happen on new drives where the free regions are uninterrupted by allocated regions that would themselves stop the search.

Contrary to popular belief I don’t actually have a machine with 64 logical processors, and I’ve never seen this particular problem. But a friend hit this problem, nerd-sniped me asked for help, and I decided it was interesting enough to look at. They sent me an ETW trace that contained enough information for me to craft a tweet-for-help which resolved the issue swiftly.

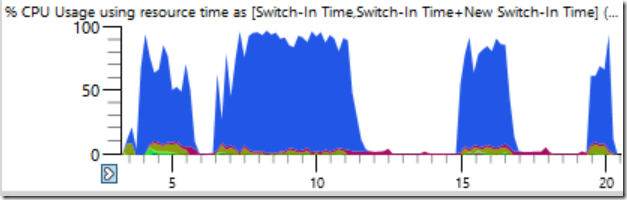

The complaint was simple enough: the friend was using ninja to do a build. Normally ninja does an excellent job of saturating the system, keeping n+2 processes running at all times so that there is little idle time. Instead, the CPU usage for the first seventeen seconds of the build looked like this:

It’s subtle (no, it’s not) but if you look really closely you can see where the total CPU usage drops from 100% to close to 0%, for seconds at a time. CPU usage goes from 64-threads busy one moment to two or three busy just half a second later. Here is a zoomed-in view of one of the drops – numbers on the bottom of the timeline are seconds:

My first thought was that ninja was failing to create processes quickly enough. I’ve seen that many times before, usually due to anti-virus software interfering. However when I looked at the processes graph, sorting by end time, I found that no processes were ending during the CPU usage downslope, so there wasn’t anything for ninja to do.

When figuring out why a system is idle the CPU Usage (Precise) table is ideal. It contains a record of every context switch on the computer so it is a precise record of every time that a thread starts running, including data about where it was waiting and for how long.

The tricky thing is that there is nothing wrong with a thread going idle. The problem occurs when we really wish a thread was doing work and instead it is idle, so we need to focus on the right idle moments.

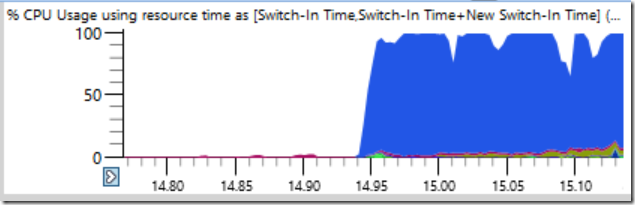

When doing wait analysis it is important to understand that the context-switch of interest happens when the thread resumes running. If we focus our ETW analysis on when CPU usage started dropping then we will find nothing. Instead we need to focus on when the system started working again. That phase of the trace was even more dramatic. Whereas it took half a second for CPU usage to ramp down, the reverse process from one thread in use to fully loaded took just twelve milliseconds! The graph below is zoomed in quite far, and yet the transition from idle to busy is almost a vertical line:

I zoomed in on the twelve milliseconds of truth and found that there were 500 context switches so I knew I’d need to be use the data wisely to find the culprit.

The context switch table has a lot of columns, which I have documented here. When a single process hangs then I group by New Process, New Thread, New Thread Stack and so on in order to find why the thread is stalled (discussed here), but that doesn’t work as well when a hundred processes are stalled. If I investigated the wrong process then it would say it was readied by the previous process which was readied by the previous process and I’d be scanning a long chain to find the prime mover who (presumably) held an important lock for a long time.

So, I tried a different column arrangement:

- Switch-In Time (when the context switch happened)

- Readying Process (who released the lock being waited on)

- New Process (who started running)

- Time Since Last (how long the new process was waiting)

That gives me a list of context switches, ordered by time, showing who readied whom and how long the readied processes had been waiting.

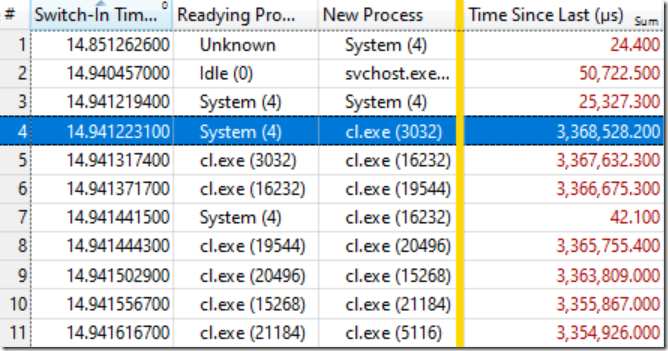

It turns out that’s all that I needed. The table below is a thing of beauty once you know how to read it. The first few context switches aren’t of interest because Time Since Last (how long the thread was idle) is quite small, but on the highlighted line (#4) things start moving:

That line says that System (4) readied cl.exe (3032) which had been waiting for 3.368 seconds. The next line says that less than 0.1 ms later cl.exe (3032) readied cl.exe (16232) which had been waiting for 3.367 seconds. And so on.

A few context switches such as the one on line #7 are not part of the wait chain and just represent the machine doing other work simultaneously, but the wait chain continued on for many dozens of links.

What this means is that all of these processes were waiting on the same lock. Once the System (4) process released the lock (after holding it for at least 3.368 seconds!) the waiting processes in turn grabbed the lock, did the little bit of work they needed to proceed, and then passed the lock down the chain. This chain of waiters continued for about a hundred processes, showing the extent of the backlog caused by this one lock.

A bit of exploration of the Ready Thread Stacks showed that most of the waits were going through KernelBase.dllWriteFile. I asked WPA to display the callers of that function, which also groups them. I could then see that during that 12 ms of catharsis there were 174 threads that were released from waits on WriteFile and they had waited an average of 1.184 seconds:

That is an amazing backlog, and it is actually understates the problem because many threads were waiting on the same lock from other functions such as KernelBase.dll!GetQueuedCompletionStatus.

What is System (4) doing

At this point I knew that build progress stopped because all of the compiler processes and others were waiting on WriteFile because System (4) was holding a lock. Adding the Ready Thread Id column showed that thread 3276 in the system process was the one that released the lock.

Thread 3276 was 100% busy during all of the build-system slowdowns so it is clear that it was doing some CPU work while holding a lock. Figuring out where CPU time is spent is the job of the CPU Usage (Sampled) graph so I pulled that up and drilled down into the sampled stacks for thread 3276. The CPU usage data was remarkably clear, showing essentially all of the time being spent in just one function, ntoskrnl.exe!RtlFindNextForwardRunClear (the numeric column is the count of samples):

Looking at the readying thread stack confirms that NtfsCheckpointVolume released the lock after 3.368 s:

At this point I felt it was time to pull in the vast and generous knowledge of my twitter followers so I posted this question. My follow-up question showed the full call stack. Tweets for help can give remarkable results when you supply enough information.

In this case the correct answer appeared very quickly, courtesy of Katelyn Gadd, along with lots of other great suggestions. The original reporter disabled system restore and suddenly their builds started running 2x to 3x as fast!

But wait, it gets better

Blocking forward progress on the entire system for 3+ seconds is pretty impressive, but it gets even better when you add the address column to the CPU Usage (Sampled) table and sort by it. This shows exactly where in RtlFindNextForwardRunClear the samples hit – 99% of them are on one instruction!

I grabbed ntoskrnl.exe and ntkrnlmp.pdb from my machine (they were the same version as on the affected machine) and used dumpbin /disasm to get the assembly language for the function of interest. The first digits of the addresses are different because the code is relocated on load, but the correspondence of the last four hex-digits (preserved over ASLR) is clear:

RtlFindNextForwardRunClear:

…

14006464F: 4C 3B C3 cmp r8,rbx

140064652: 73 0F jae 0000000140064663

140064654: 41 39 28 cmp dword ptr [r8],ebp

140064657: 75 0A jne 0000000140064663

140064659: 49 83 C0 04 add r8,4

14006465D: 41 83 C1 20 add r9d,20h

140064661: EB EC jmp 000000014006464F

…

We can see that the instruction at …4657 is part of a seven instruction loop that accumulates a few other samples. Adding the sample counts we get this:

RtlFindNextForwardRunClear:

…

14006464F: 4C 3B C3 cmp r8,rbx 4

140064652: 73 0F jae 0000000140064663 41

140064654: 41 39 28 cmp dword ptr [r8],ebp

140064657: 75 0A jne 0000000140064663 7498

140064659: 49 83 C0 04 add r8,4 2

14006465D: 41 83 C1 20 add r9d,20h 1

140064661: EB EC jmp 000000014006464F 1

…

Interpreting the sample counts on an out-of-order super-scalar processor is left as an exercise for the reader, with this article providing some good thoughts. In this case the processor was an AMD Ryzen Threadripper 2990WX 32-Core Processor. Apparently with micro-up fusion and its ability to retire five instructions per cycle it is actually possible that the entire loop completes every cycle with the jne, as the instruction after the most expensive instruction, being hit by most of the sampling interrupts.

And there we have it – a 64-logical-processor machine laid low by a seven-instruction loop running in the system process while holding a vital NTFS lock, fixed by disabling System Restore.

Coda

It is unclear why this code misbehaved so badly on this particular machine. I assume that it is something to do with the layout of the almost-empty 2 TB disk. When the original reporter re-enabled system restore the problem came back but with reduced ferocity. Maybe there is a pathological case for a disk with huge swathes of empty space? Maybe the memory being scanned was accidentally allocated as uncached? I don’t know.

Another twitter follower mentioned a Volume Shadow Copy bug from Windows 7 that could have O(n^2) performance. That bug was supposedly fixed in Windows 8, but maybe remains in a different form. The stack traces I have clearly show VspUpperFindNextForwardRunClearLimited (find a used bit in this 16MB region) calling VspUpperFindNextForwardRunClear (find the next used bit anywhere, but don’t return it if it’s past 16MB) which sure sounds like history repeating itself. As I said earlier this year, O(n^2) is the sweet spot of badly scaling algorithms: fast enough to make it into production, but slow enough to make things fall down once it gets there.

There have been some reports of this type of problem when doing many large deletes, but this trace showed few files being deleted so that appears to be yet another variant.

I’ll close with the system-wide CPU usage graph that I opened the post with, with the CPU usage of the problematic system process thread shown by itself in green in the bottom graph. The problem is deliciously obvious once you look at it this way. The system process is technically visible in the top graph, but when scaled to 1.5625% of the graph height it’s significance is easy to overlook.

While the picture above shows the problem well, it doesn’t actually prove anything. Correlation is not causation, as they say. Only by using the context switch events to show that the busy thread was also the one holding the critical lock could I be sure that I’d found the actual cause rather than an innocent bystander.

Requests

I’m going to close this investigation the way I usually do, with a plea for thread names. The system process has dozens of threads, many of which are special purpose, and none of which are named. The busiest system thread in this trace was the MiZeroPageThread and I had to repeatedly drill down into its stack in order to remind myself that this thread was not of interest. The VC++ compiler also doesn’t name its threads. Thread names are cheap and awesome. Use them. It’s easy. Chromium even includes a tool to list thread names in a process.

If anybody from the NTFS team at Microsoft wants to follow-up then contact me and I can connect you with the original reporter and the ETW trace.

References

- Original twitter thread

- Blog post announced on twitter

- Hacker news discussion is here

- Reddit discussion is here

- Possibly related Windows/NTFS rant

You do not need thread names once you have stack tags. When you add

https://github.com/Alois-xx/etwcontroller/blob/master/ETWController/ETW/default.stacktags

and then group Context CPU Usage Precise with

Process, New Thread (Stack Tag), New Thread Stack (Frame Tags), Readying Process, Readying Process Id then in the yellow area Count, Waits (Max), Last Switch-Out Time, Switch-In Time, Waits (Sum), CPU Usage (in View) (Sum) and graph % CPU Usage.

That is usually all I need.

The underestimated power of stacktags is that other have analyzed the same issue already. Whenever I find an issue I create a stacktag for it. Over the years I have had plenty of issues to deal with which also contains a stacktag for CheckPoint Volume.

I bundle my stacktag file with a bunch of perf tools together with an installer and do a weekly talk about the new slow things. That gained quite some traction internally where I work and we have now a growing ETW fanbase where others can also find the same issues much faster than before.

The stacktag file also contains specifically some Wait stacktags which are useful if you are after long waits. Ideally the other stacks should have zero waits so you can always focus on the right things. If something new pops up in unexpected places it is easy to spot.

Do you have any pointers to good tutorials for effective use of stack tags?

It sounds like crowd-sourcing of stack tag files is pretty powerful, but I’m not sure how that works in practice. Is there a github repo with stack tag file(s) that Windows developers can contribute to?

A good intro is on my old Blog at: http://geekswithblogs.net/akraus1/archive/2015/09/26/167117.aspx. I could make several stacktag files and supply a merged default.stacktags file as part of my ETWController project which you can include in your default wpa profile.

I have created a tool which always opens ETL files with my overview profile and the corresponding stacktag files which are referenced from my custom Profile.

In general I have two profiles

– Overview

– Diff

The second diff profile is used when I need to compare a good and bad run of automated ETW enabled regression tests. This allows me to drill deep from a simple perf number chart when something strange did happen and zoom into the region where the measurement was actually taken. Once you have this you cannot live without it.

Although it might look like ETWController is dead it is heavily used and has enabled me and others to solve many impossible issues.

For stacktag structure I would propose

– Windows.stacktags

– VirusScanners.stacktags

– .NET.stacktags

That should cover already quite a lot. I have stacktags also for Crowdstrike which is also increasingly becoming popular. I love stacktags for these ones which always fly under the radar and no one seems to capture them in the act of doing bad things to performance. With stacktags it becomes obvious and you can talk to your management about the performance impact of this or that virus scanner or a bad pattern update.

The other thing I love stack tags for is filtering out ETW overhead. Turning on a user mode provider with a ton of events or collecting lots of really deep stack traces can really bias sampling, but stack tags can trivially separate those samples out (and even one of the few things the default stacktags file does fairly well)

I have added various stacktag files at

https://github.com/Alois-xx/etwcontroller/tree/master/ETWController/ETW

and I have supplied an OverView WPA Profile which should show the power of stacktags quite nicely along with the latest capabilities of WPA whihc finally can show local time in its columns.

Thread Naming tips:

1. Hidden away in the view editor, there are the columns “Thread Start Module” and “Thread Start Function”. They’re no substitute for decent thread names, but can be about as good in some cases (particularly with System, where the Start Function identifies threads with functions like “MiZeroPageThread” and “VspWorkerThread”. Would be a lot more convenient if the ‘Thread Name’ column could just pull in the start module!function instead of needing three column to accomplish it.

2. You can use regions from Region of Interest files to apply thread names (The default AppAnalysis.Regions.xml contains a few examples). This option is not particularly great, because you need events that not only work well with regions (e.g. WPA will takes ages to load unless you can define unique start/stop pairs for an event that isn’t too numerous) but also are common & distinct enough to reliably identify threads correctly.

Thanks for the pointers. I should explore regions of interest files more. It would be nice, as you say, if the Thread Name columns wouldn’t default to completely blank.

I’m just surprised more people aren’t finding thread names incredibly helpful. When debugging Chrome’s many processes I use them to easily distinguish between process types – the main threads have different names for all of the major process types. They are a valuable type of metadata that can be used by profilers, debuggers, and random tools like Process Explorer.

how to collect Instruction retired and execution time

are collected just for the running thread that did the context

switch

I’m not sure what you are asking. The amount of time that a thread is running and idle is available in the CPU Usage (Precise) table which I have documented here:

If you are interested in instruction retired counts then you should read this article:

You seem to be asking about instruction execution times and I’m not even sure what you mean by that.

I have an AMD 3950X (16-core, Zen 2, 7nm CPU). Disabling system restore sped up my system (Windows 10.0.18363) in no uncertain terms. It’s now instantaneous.

THANK YOU!!!

Thanks for reporting this issue last year. I finally saw an internal reproduction of the issue and was able to fix the problem. The fix will be in preview next spring.

That’s great to hear, and thanks for the update.