Postgres comes with physical and logical replication features. Read on to learn more about various aspects of physical replication.

Physical Replication

Physical replication methods are used to maintain a full copy of the entire data of a single cluster (in Postgres, a cluster is a set of databases managed by a single main Postgres server process called a postmaster), typically on another machine. The source machine is called the primary in Postgres jargon, and the destination is called the standby.

Hot, Warm and “Cold” Standbys

A standby server that is kept as up-to-date as possible with the primary in real-time and allows clients to execute read-only transactions is called a hot standby, or more popularly a read replica. Hot standbys were added to Postgres in version 9, before which there were only warm standbys. A warm standby is similar to a hot standby, except that it does not let clients connect to it.

(Aside: Hot standbys cannot execute queries that create temporary tables. This is a Postgres limitation.)

A “cold” standby (not an official term) is usually a standby server that does not start until a failover. Since the cold standby is not up and running, it is possible that on startup it may first have to apply pending changes before it can start accepting client connections.

WAL Files

In the normal course of operations, a PostgreSQL server generates an ordered series of WAL (write ahead log) records. These are basically a log of changes, similar to Redis’ AOF or MySQL’s binlog. At its core, physical replication is the transport of these records onto another machine, and getting the other postmaster which is running there to accept and apply these records into its local database.

WAL records are chunked into equally-sized (usually 16MB) files called

WAL segments or just WAL files. These files are created in a directory

called pg_wal under the cluster data directory (pg_wal was called pg_xlog

in Postgres versions prior to 10). Old WAL files are discarded when no longer

needed (and also based on a couple of configuration parameters).

Recovery Mode

The postmaster can be started up in a mode called recovery mode, by placing a valid configuration file called recovery.conf in the cluster data directory. In recovery mode, Postgres will only import and apply WAL files generated by a primary server, and by itself will not generate any WAL files. Warm and hot standby servers run in recovery mode.

When started up in the recovery mode, Postgres will first try to import all WAL

files available in an archive (more of this below). When the archive does not

have any more WAL files to offer, it tries to import any files lying around in

it’s pg_wal directory. When those too are done, if a primary connection is

configured and

standby_mode

set to on in recovery.conf, Postgres will connect to the primary and pull and

apply new WAL records as they get created at the primary.

Log Shipping

Imagine having a trigger that will be invoked at the primary server whenever a

new WAL file is created. This trigger can then copy the new WAL file to another

machine using say rsync, and place it in the pg_wal directory of a postmaster

running in recovery mode. Can you make a standby like this?

The answer is yes, and indeed this was the standard practice before streaming replication was added in Postgres v9. This practice is called log shipping.

The trigger is a shell script, that can be configured using archive_command. The name and path of the WAL file can be passed to the script.

WAL Archiving

Instead of rsync-ing over the WAL file, let’s say we copy it to an S3 bucket or an NFS-mounted directory which is accessible from the standby machine also. This shared location will now contain all the WAL files generated by the primary. This now becomes an archive, and the process of storing WAL files into the archive is called continuous archiving or simply WAL archiving.

The inverse of this operation – fetching WAL files from the archive into a

recovery-mode Postgres – can be configured using

restore_command.

Similar to archive_command, this too is the path to a shell script.

The postmaster running in recovery mode, knows which WAL file it wants. The name

of the file can be passed to the script.

As an example, here are the archive and restore commands for storing and fetching WAL files to and from an S3 bucket:

archive_command = 's3cmd put %p s3://BUCKET/path/%f' # in postgresql.conf

restore_command = 's3cmd get s3://BUCKET/path/%f %p' # in recovery.confWhen starting up in recovery mode, if restore_command is configured, Postgres

will first try to fetch WAL files from the archive.

pg_standby

In recovery mode, Postgres does not, and cannot, know in advance how many WAL files have been generated so far. If restore_command is configured, Postgres will repeatedly invoke it with progressive WAL file names (the names are in a predictable sequence) until the command returns an error.

For example, the restore command was able to satisfy the requests for WAL files

000000010000000000000001 through 00000001000000000000001A but fails for

00000001000000000000001B since it was not found in the archive location.

In absence of WAL files from other sources, Postgres will assume that the WAL

file 00000001000000000000001B is yet to be generated by the primary, and will

finish recovery after applying 00000001000000000000001A.

Consider what happens if the restore command were to wait for the file

00000001000000000000001B to be available, rather than exit with error since

it was not found. Postgres will continue waiting on restore command, and will

therefore continue to be in recovery mode.

This is a valid configuration, and a valid way to setup a warm standby.

Postgres ships with a command called

pg_standby, which can

be used to setup a warm standby this way, as long as the archive is a directory.

pg_standby will wait for a file to become available, if it cannot be found.

Archive and restore commands using pg_standby will look like this:

archive_command = 'cp %p /some/path/%f' # in postgresql.conf

restore_command = 'pg_standby /some/path %f %p' # in recovery.confStreaming Replication

After processing archived WAL files as well as files in the pg_wal directory,

Postgres can connect to a primary server over the network and repeatedly fetch

and apply new WAL files as they are created. This feature, added in Postgres 9,

is called streaming replication.

The primary server to connect to can be specified in the recovery.conf file:

# recovery.conf

standby_mode = on

primary_conninfo = 'host=10.0.1.10 user=repl password=p@ssw0rd'Hot Standby

By default, when in recovery mode, Postgres will not accept client connections,

rejecting them with “database system is in recovery mode” error messages. By

adding the line hot_standby = on in recovery.conf, you can make Postgres

accept client connections and allow them to execute read-only transactions:

# recovery.conf

hot_standby = onThere is usually no reason to turn hot_standby off.

The PostgreSQL docs have more info about setting up and running a standby in the “hot standby” mode.

Replication Slots

Replication slots were introduced in Postgres 9.4. They are a mechanism to accurately and durably keep track of how far a standby is lagging behind the primary. This allows the primary to ensure that WAL files still needed for the standby to catch up are not deleted.

Before replication slots, it was not possible for the primary to determine this, and you’d end up in situations where a standby was left stranded because a WAL file it needed had been deleted by the primary. Of course, WAL archives can fix this issue. Without a WAL archive, however, the only option was to rebuild the standby from a fresh backup.

You can read more about replication slots here.

Steps to Setup A Hot Standby

Let’s have a look at the steps needed to setup a hot standby for an existing primary.

1. Create Replication User

First, we need a user for the standby to connect as:

$ psql -p 6000

psql (11.2 (Debian 11.2-1.pgdg90+1))

Type "help" for help.

postgres=# CREATE USER repluser REPLICATION PASSWORD 'p@ssw0rd';

CREATE USERAnd the corresponding changes in pg_hba.conf:

# TYPE DATABASE USER ADDRESS METHOD

host replication repluser standby-ip/32 md5

# (replace standby-ip)You can of course, use any standard authentication mechanism of PostgreSQL. The user needs to have replication and login privileges and does not require access to any specific database.

Be sure to reload the primary server for the changes to pg_hba.conf to take effect.

2. Take a Backup

The standby needs to start from a backup of the primary. You can, and should,

do this using pg_basebackup with a new replication slot:

pg_basebackup -h primary-ip -p 6000 -U repluser -C -S slot_standby1 -R -D standbyThis connects to the primary at primary-ip:6000 with the user we just created

and takes a backup of it into the directory standby. A new replication slot

slot_standby1 is created.

3. Add recovery.conf In Standby

We’ll use this slot as our standby replication slot, so that there is continuity from the backup.

We’d asked pg_basebackup to create a recovery.conf for us above (“-R”

option). Let’s have a look at that:

$ cat standby/recovery.conf

standby_mode = 'on'

primary_conninfo = 'user=repluser password=''p@ssw0rd'' host=primary-ip port=6000 sslmode=prefer sslcompression=0 krbsrvname=postgres target_session_attrs=any'

primary_slot_name = 'slot_standby1'That’s actually pretty good, and we don’t need to modify it further. Let’s simply bring up the standby now:

o$ pg_ctl -D standby -l log_standby -o --port=6001 start

waiting for server to start.... done

server started

postgres@stg1:/tmp/demo$ cat log_standby

2019-06-19 09:17:50.032 UTC [21733] LOG: listening on IPv4 address "127.0.0.1", port 6001

2019-06-19 09:17:50.034 UTC [21733] LOG: listening on Unix socket "/var/run/postgresql/.s.PGSQL.6001"

2019-06-19 09:17:50.067 UTC [21734] LOG: database system was interrupted; last known up at 2019-06-19 09:12:05 UTC

2019-06-19 09:17:50.111 UTC [21734] LOG: entering standby mode

2019-06-19 09:17:50.119 UTC [21734] LOG: redo starts at 0/2000028

2019-06-19 09:17:50.120 UTC [21734] LOG: consistent recovery state reached at 0/20000F8

2019-06-19 09:17:50.120 UTC [21733] LOG: database system is ready to accept read only connections

2019-06-19 09:17:50.138 UTC [21739] LOG: started streaming WAL from primary at 0/3000000 on timeline 1And that’s it! The log file indicates that streaming replication is up and running. You should now be able to connect to the standby at port 6001, run read-only queries and see changes get replicated from the primary more or less in real-time.

Next Steps

The PostgreSQL docs are a great place to start digging further into all replication-related features of Postgres. You’ll want to look into topics like delayed replication, cascading replication, synchronous standbys and more.

Although Postgres comes with an impressive set of features, there are still use-cases that are not supported. This Postgres wiki page has a list of third-party tools that provide additional replication-related functionality.

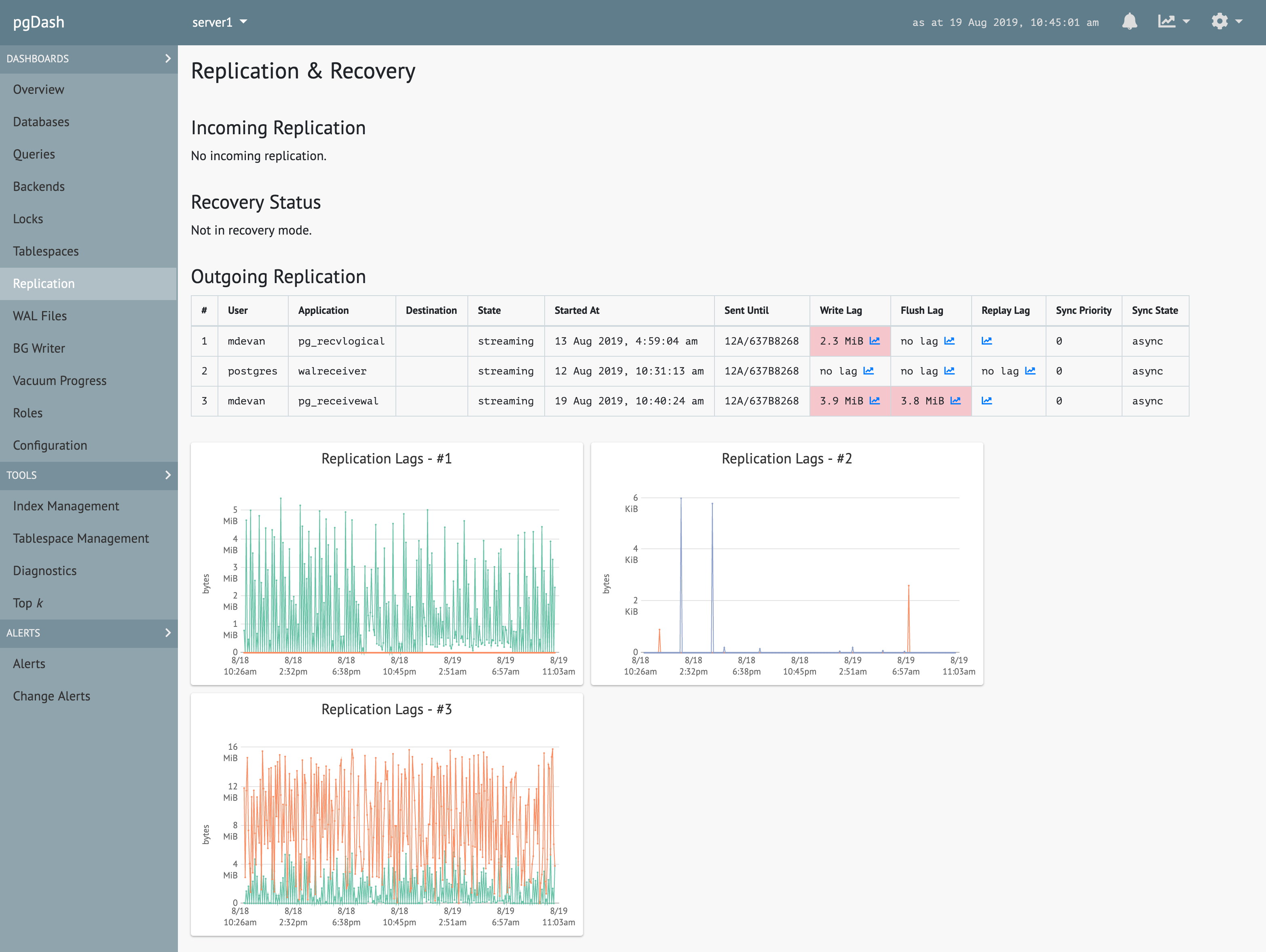

About pgDash

pgDash is a modern, in-depth monitoring solution designed specifically for PostgreSQL deployments. pgDash shows you information and metrics about every aspect of your PostgreSQL database server, collected using the open-source tool pgmetrics. pgDash provides core reporting and visualization functionality, including collecting and displaying PostgreSQL information and providing time-series graphs, detailed reports, alerting, teams and more.

pgDash can monitor replication, locks, queries and more. It can also provide quick diagnostics and extensive alerts. Learn more here or signup today for a free trial.