Understanding memory leaks in Node.js, part 2

Intro

This article is the second part of the series "Understanding memory leaks in Node.js". If you have not read the first part, you can find it here.

In this second part, we will focus on event emitters and cached objects. We will also use the Node Clinic tool and Chrome DevTools to identify a nasty memory leak.

Event emitter

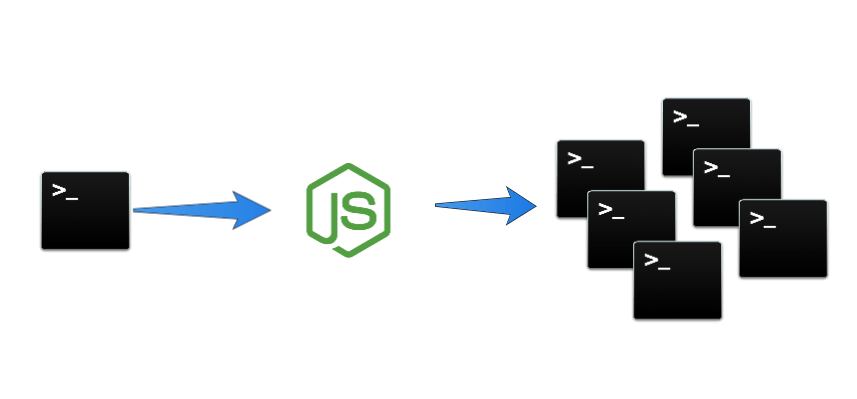

The following example shows an easy way to introduce a memory leak into your code. Even for trained eyes, the problem is hard to spot:

    const connector = require('./lib/connector');

    function handleServer(req, res) {
      connector.on('connected', async (db) => {
        res.end(await db.get());
      });
    }

You can pause here and try to identify the leak.

The leak is `connector.on`: every time a new request comes to the server, we attach a new handler for the `connected` event. If you run this code, you will eventually receive a message like:

    (node:10031) MaxListenersExceededWarning: Possible EventEmitter memory leak detected. 11 connected listeners added. Use emitter.setMaxListeners() to increase limit

I've cheated even more and changed the code for the connector so that it does not print this warning:

    const { EventEmitter } = require('events');

    const connector = new EventEmitter();
    connector.setMaxListeners(0); // 0 removes the listener limit, so the warning is never printed

    // Emit a fake "connected" event with a stub db object every second
    setInterval(() => {
      connector.emit('connected', {
        async get() {
          return 'One';
        }
      });
    }, 1000);

    module.exports = connector;

The fun part is that after some stress testing with 10000 concurrent connections for 60 seconds, the event loop shows delays of more than 10 seconds that keep growing, memory tops out at 600 MB, and the server starts to respond with timeouts.

As you can see, some memory leaks drastically affect performance as well, not only memory. The numbers above come from the following run:

    clinic doctor --autocannon [-c 10000 -d 60 http://localhost:3030] -- node event-emitter-leak
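A quick way to confirm this kind of leak without a profiler is to count the handlers registered on the emitter with `emitter.listenerCount()`. The sketch below is only an illustration: the connector is a local stand-in for `./lib/connector` without the timer (so the script exits on its own), and the request and response objects are fakes.

```javascript
const { EventEmitter } = require('events');

// Stand-in for ./lib/connector; no setInterval here, so the script terminates.
const connector = new EventEmitter();
connector.setMaxListeners(0); // silence the warning, as in the article

// Same shape as the leaky handleServer, called with a fake response object.
function handleServer(req, res) {
  connector.on('connected', async (db) => {
    res.end(await db.get());
  });
}

// Simulate 1000 incoming requests.
for (let i = 0; i < 1000; i++) {
  handleServer({}, { end() {} });
}

// Every request left one handler (and the `res` it captured) behind.
const leakedListeners = connector.listenerCount('connected');
console.log(leakedListeners); // 1000
```

Each handler keeps its `res` object reachable through the closure, which is why memory grows with the number of requests and why every `emit` gets slower.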

The solution is to use `connector.once`, which removes the handler after it has fired for the first time.

    function handleServer(req, res) {
      connector.once('connected', async (db) => {
        res.end(await db.get());
      });
    }

Cached objects

Even though the previous leaks are pretty nasty, they are not that common. The most common source of memory leaks is cached objects. Here is a very simple example that uses memoization to cache the result of a function call.

    function compute(term) {
      return compute[term] || (compute[term] = doCompute(term));

      function doCompute(term) {
        // do some calculations with the term
        return Buffer.alloc(1e3); // 1kb
      }
    }

As you can see in this code, we leverage the fact that a function in JavaScript is an object, so we can store cached values on it.

The problem with the above code is that we never free the allocated memory, so the result for every new term stays cached forever.
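To make the growth visible, here is a small sketch that calls the memoized function with many distinct terms and then counts the cached entries. The term names and the number of calls are made up for illustration.

```javascript
// Memoized function from above: results are stored as properties on the function itself.
function compute(term) {
  return compute[term] || (compute[term] = doCompute(term));

  function doCompute(term) {
    return Buffer.alloc(1e3); // 1 KB per distinct term
  }
}

// Call it with 10000 distinct terms, as varied user input would.
for (let i = 0; i < 10000; i++) {
  compute(`term-${i}`);
}

// The function's own enumerable properties are exactly the cached entries.
const cachedEntries = Object.keys(compute).length;
const retainedBytes = cachedEntries * 1e3;
console.log(cachedEntries, retainedBytes); // 10000 10000000
```

Nothing ever removes these properties, so roughly 10 MB of buffers stays reachable here. As a side note, storing results on the function also means that terms such as `name` or `length` collide with built-in function properties, which is one more reason to prefer a dedicated cache object.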

The solution can be to free the memory after a timeout, or to use a more complex caching mechanism such as an LRU (Least Recently Used) cache.

    function compute(term) {
      // Drop the cached entry 30 seconds after the call
      setTimeout(() => {
        delete compute[term];
      }, 30 * 1e3); // 30 seconds
      // ...
    }

And with an LRU cache:

    const LRU = require('lru-cache');
    // Store at most 50 items; the least recently used one is removed first.
    // An options object works across lru-cache versions; recent major versions
    // export { LRUCache } instead of a default export.
    const computeCache = new LRU({ max: 50 });

    function compute(term) {
      if (!computeCache.has(term)) {
        computeCache.set(term, doCompute(term));
      }
      return computeCache.get(term);
      // ...
    }

It is up to you when to use one approach over the other. A timeout cache fits when you have a heavy computation that needs the cached data only for a short period, usually the duration of the algorithm.

The other approach fits when you know that some items will be accessed more often than others, for example products in a shop that are on discount.

`lru-cache` also supports a max-age approach (the `maxAge` option, named `ttl` in newer releases), so you can specify a time to live for your cached data. For that reason, I strongly suggest using the latter method over the first.
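To illustrate how the two ideas combine, here is a hand-rolled sketch of an LRU cache with a time to live, built on a plain `Map`. It is a teaching example only and not how `lru-cache` is implemented; the class name `TinyLRU`, its options, and the injectable clock are all assumptions made for this example.

```javascript
// Minimal LRU cache with a time to live. A Map iterates in insertion order,
// so the first key is always the least recently used one.
class TinyLRU {
  constructor({ max, ttl, now = Date.now }) {
    this.max = max; // maximum number of entries
    this.ttl = ttl; // lifetime of an entry in milliseconds
    this.now = now; // injectable clock, handy for deterministic tests
    this.map = new Map();
  }

  get(key) {
    const entry = this.map.get(key);
    if (entry === undefined) return undefined;
    if (this.now() - entry.storedAt > this.ttl) {
      this.map.delete(key); // expired: drop it lazily on access
      return undefined;
    }
    // Re-insert to mark the key as most recently used.
    this.map.delete(key);
    this.map.set(key, entry);
    return entry.value;
  }

  set(key, value) {
    this.map.delete(key);
    this.map.set(key, { value, storedAt: this.now() });
    if (this.map.size > this.max) {
      // Evict the least recently used entry (the oldest key in the Map).
      this.map.delete(this.map.keys().next().value);
    }
  }

  get size() {
    return this.map.size;
  }
}

let clock = 0;
const cache = new TinyLRU({ max: 2, ttl: 1000, now: () => clock });
cache.set('a', 1);
cache.set('b', 2);
cache.get('a');    // touch 'a', so 'b' becomes the least recently used
cache.set('c', 3); // over capacity: 'b' is evicted
const afterEviction = [cache.get('a'), cache.get('b'), cache.get('c')];
clock = 1500;      // advance the fake clock past the ttl
const afterExpiry = cache.get('a');
console.log(afterEviction, afterExpiry, cache.size); // [ 1, undefined, 3 ] undefined 1
```

The size bound caps memory no matter how many distinct keys arrive, while the time to live keeps stale entries from being served. Note that this sketch only removes expired entries when they are read; a production library handles more cases.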

Debugging tools

When dealing with memory leaks, developers should arm themselves with useful tools and strategies. In this article we will focus on the following list:

- clinic - node profiling tool, created and maintained by @NearForm

- chrome dev tools (memory)

- heap snapshot

- heap-profile - experimental, async low overhead

- heapdump - makes a synchronous dump of the V8 heap
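Besides the tools listed above, Node.js itself can write a heap snapshot through the built-in `v8.writeHeapSnapshot()` function (assuming Node.js 11.13 or newer). The sketch below wraps it in a helper; the name `dumpHeap`, the label, and the choice of the temp directory are assumptions for illustration.

```javascript
const v8 = require('v8');
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: writes a snapshot file that Chrome DevTools can load
// from the Memory tab.
function dumpHeap(label) {
  const file = path.join(
    os.tmpdir(),
    `${label}-${process.pid}-${Date.now()}.heapsnapshot`
  );
  // Synchronous: the event loop is blocked while the snapshot is generated.
  return v8.writeHeapSnapshot(file);
}

const snapshotFile = dumpHeap('baseline');
const snapshotSize = fs.statSync(snapshotFile).size;
console.log(snapshotFile, snapshotSize);
```

Because the call blocks the process and the file can be large, it is better suited to on-demand debugging than to being called on every request.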

Next, let's focus on identifying, debugging, and fixing a memory leak, using the code from the cached objects chapter.

Memory leak profiling algorithm

- profile your Node app using `clinic doctor`

- stress test the code using `node --inspect` and Chrome DevTools heap snapshots

- identify and fix the leak using the above tools

- continue with another part of the application

Here is the source code for our application, which contains the memory leak:

    const http = require('http');

    const server = http.createServer(handleServer);
    server.listen(3030);

    function handleServer(req, res) {
      // A random term on every request means a new cache entry on every request
      res.end(computeTerm(Math.random()));
    }

    function computeTerm(term) {
      return computeTerm[term] || (computeTerm[term] = compute());

      function compute() {
        return Buffer.alloc(1e3);
      }
    }

We start by profiling our app using Node Clinic and autocannon.

    clinic doctor --autocannon [-c 1000 -d 30 http://localhost:3030] -- node cached-object-leak

We can see that the RSS (Resident Set Size), which is the total amount of RAM used by the process, has grown to ~700 MB.

In the top left corner, we have a notification from Clinic: "Detected a potential Garbage Collection issue". Behind the scenes, Clinic uses TensorFlow to predict issues. Pretty cool, huh?
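The RSS figure can also be read from inside the process with `process.memoryUsage()`, which is useful as a rough first signal before reaching for a profiler. The following is a minimal sketch; the helper names `toMB` and `memoryReport` are made up for this example.

```javascript
// Convert bytes to megabytes with one decimal, for readable output.
const toMB = (bytes) => Math.round((bytes / 1024 / 1024) * 10) / 10;

// Hypothetical helper: a point-in-time report of the process memory counters.
function memoryReport() {
  const { rss, heapTotal, heapUsed, external } = process.memoryUsage();
  return {
    rssMB: toMB(rss),             // total RAM held by the process
    heapTotalMB: toMB(heapTotal), // memory reserved for the V8 heap
    heapUsedMB: toMB(heapUsed),   // part of the V8 heap actually in use
    externalMB: toMB(external),   // memory of C++ objects bound to JS objects, e.g. Buffers
  };
}

const report = memoryReport();
console.log(report);
```

In a server, one could log `memoryReport()` from a `setInterval(...)` timer marked with `.unref()` so it does not keep the process alive. Numbers that keep climbing under steady load are a hint to take heap snapshots.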

The next step is to identify precisely which code produces the memory leak. This is done using the Node.js inspector and Chrome DevTools, as follows.

Start your application with the `--inspect` flag:

    node --inspect cached-object-leak.js

Open the Chrome browser and navigate to chrome://inspect. You will see your current session; click on inspect.

Then you should find the Memory tab of your application and click on Take snapshot.

As we can see, our freshly started application takes only ~4.4 MB.

Let's stress it a little bit using autocannon.

    autocannon -c 1000 -d 20 http://localhost:3030

After that, we can take the second heap snapshot. The critical step here is to use the "Comparison" filter, as marked in red in the following image.

The Comparison filter displays the delta between two snapshots.

We can observe that between the two snapshots we have +97 365 objects added and zero deleted. This is a sign that the garbage collector cannot release the memory.

Let's open the system / JSArrayBufferData and investigate the contents.

That's it! We found our leaky function: `computeTerm()`.

From the previous chapter, we know how to fix it and why it leaks memory.

Conclusions

Memory leaks have a great impact on performance and resource consumption. Things can get costly if they are ignored.

To mitigate memory leaks you need to:

- know your tools

- know language capabilities and drawbacks

- make a memory dump at a consistent interval using `heap-profile` or any other tool

- make sure you constantly update your dependencies and check best practices